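Hello! I'm Kato, and I look after the web side of things. I also work on analyzing and maintaining marketing-related data.

Informatica provides a powerful means of transferring and transforming data. With the CData JDBC Driver for SparkSQL, you get a driver built on industry-proven standards that integrates seamlessly with Informatica's data transfer and manipulation features. This tutorial shows how to transfer and browse Spark data in Informatica PowerCenter.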

![Connecting to Spark data from Informatica PowerCenter.]()

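Deploy the Driver

To deploy the driver to the Informatica PowerCenter server, copy the CData JAR and .lic file from the lib subfolder of the driver's installation directory to the Informatica-installation-directory\services\shared\jars\thirdparty folder.

To work with Spark data in the Developer tool, you also need to copy the CData JAR and .lic file from the lib subfolder of the driver's installation directory to the following folders: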

- Informatica-installation-directory\client\externaljdbcjars

- Informatica-installation-directory\externaljdbcjars
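The copy steps above can be scripted. The following is a minimal sketch, not an official installer: the `deploy_driver` helper is hypothetical, the license file name `cdata.jdbc.sparksql.lic` is an assumption (the article only says "the .lic file"), and both directory arguments must be replaced with the paths on your machine.

```python
import shutil
from pathlib import Path

# The JAR name matches the one used later in this article; the .lic file name
# is an assumption, so check the lib subfolder of your driver installation.
DRIVER_FILES = ["cdata.jdbc.sparksql.jar", "cdata.jdbc.sparksql.lic"]

# Folders named in this article, relative to the Informatica installation directory.
TARGET_SUBDIRS = [
    Path("services/shared/jars/thirdparty"),  # PowerCenter server
    Path("client/externaljdbcjars"),          # Developer tool
    Path("externaljdbcjars"),                 # Developer tool
]


def deploy_driver(driver_lib_dir, informatica_home):
    """Copy the driver JAR and license file into each Informatica folder.

    Returns the list of files that were written.
    """
    copied = []
    for subdir in TARGET_SUBDIRS:
        target = Path(informatica_home) / subdir
        # Fail early instead of creating folders: a missing folder usually
        # means the installation path is wrong.
        if not target.is_dir():
            raise FileNotFoundError(f"Target folder not found: {target}")
        for name in DRIVER_FILES:
            source = Path(driver_lib_dir) / name
            shutil.copy2(source, target / name)
            copied.append(target / name)
    return copied
```

You would call it as `deploy_driver(<driver lib folder>, <Informatica installation directory>)`. The script deliberately refuses to create missing target folders so that a mistyped installation path is reported rather than silently populated.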

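Create the JDBC Connection

Follow the steps below to connect from Informatica Developer:

- In the [Connection Explorer] pane, right-click your domain and click [Create a Connection].

- In the [New Database Connection] wizard that appears, enter a name and Id for the connection, then select [JDBC] in the [Type] menu.

- In the [JDBC Driver Class Name] property, enter the following: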

cdata.jdbc.sparksql.SparkSQLDriver

- In the [Connection String] property, enter the JDBC URL, using the connection properties for Spark.

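Connecting to SparkSQL

Set the following properties to establish a connection to SparkSQL:

- Server: Set this to the host name or IP address of the server hosting SparkSQL.

- Port: Set this to the port used to connect to the SparkSQL instance.

- TransportMode: The transport mode used to communicate with the SparkSQL server. Accepted values are BINARY and HTTP. BINARY is selected by default.

- AuthScheme: The authentication scheme to use. Accepted values are PLAIN, LDAP, NOSASL, and KERBEROS. PLAIN is selected by default.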
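As an illustration of how these four properties combine into a JDBC URL, here is a minimal sketch. It assumes the `Key=Value;` layout of the sample connection string shown in this article; the `build_sparksql_url` helper and the port value in the usage note are hypothetical, and the connection string designer described in this article remains the reference for the exact syntax.

```python
VALID_TRANSPORT_MODES = {"BINARY", "HTTP"}
VALID_AUTH_SCHEMES = {"PLAIN", "LDAP", "NOSASL", "KERBEROS"}


def build_sparksql_url(server, port, transport_mode="BINARY", auth_scheme="PLAIN"):
    """Assemble a jdbc:sparksql: URL from the properties listed above.

    The defaults mirror the defaults stated in the article.
    """
    if transport_mode not in VALID_TRANSPORT_MODES:
        raise ValueError(f"Unsupported TransportMode: {transport_mode}")
    if auth_scheme not in VALID_AUTH_SCHEMES:
        raise ValueError(f"Unsupported AuthScheme: {auth_scheme}")

    properties = {
        "Server": server,
        "Port": port,
        "TransportMode": transport_mode,
        "AuthScheme": auth_scheme,
    }
    # Each property is written as Key=Value followed by a semicolon.
    return "jdbc:sparksql:" + "".join(f"{k}={v};" for k, v in properties.items())
```

For example, `build_sparksql_url("127.0.0.1", 10000)` returns `jdbc:sparksql:Server=127.0.0.1;Port=10000;TransportMode=BINARY;AuthScheme=PLAIN;`. The port 10000 is only a placeholder; use the port of your own SparkSQL instance.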

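Connecting to Databricks

To connect to a Databricks cluster, set the properties as described below. Note: You can find the required values in your Databricks instance by navigating to "Clusters", selecting the desired cluster, and selecting the "JDBC/ODBC" tab under "Advanced Options".

- Server: Set this to the server host name of your Databricks cluster.

- Port: 443

- TransportMode: HTTP

- HTTPPath: Set this to the HTTP path of your Databricks cluster.

- UseSSL: True

- AuthScheme: PLAIN

- User: Set this to 'token'.

- Password: Set this to your personal access token (you can obtain this value by navigating to the "User Settings" page of your Databricks instance and selecting the "Access Tokens" tab).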
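The Databricks settings above can be collected into a single JDBC URL. The sketch below is an assumption-laden illustration: the `build_databricks_url` helper is hypothetical, the `Key=Value;` layout is inferred from the sample connection string in this article, and it assumes none of the values contain a semicolon.

```python
# Fixed values taken from the property list above.
DATABRICKS_FIXED = {
    "Port": "443",
    "TransportMode": "HTTP",
    "UseSSL": "True",
    "AuthScheme": "PLAIN",
    "User": "token",
}


def build_databricks_url(server, http_path, access_token):
    """Assemble a jdbc:sparksql: URL for a Databricks cluster.

    server, http_path: values from the cluster's JDBC/ODBC tab.
    access_token: a personal access token, used as the password.
    """
    for label, value in (("Server", server), ("HTTPPath", http_path),
                         ("Password", access_token)):
        if not value:
            raise ValueError(f"{label} must not be empty")

    properties = {"Server": server, "HTTPPath": http_path}
    properties.update(DATABRICKS_FIXED)
    properties["Password"] = access_token
    return "jdbc:sparksql:" + "".join(f"{k}={v};" for k, v in properties.items())
```

Because the resulting URL contains the access token in plain text, it is worth passing the token in from an environment variable or a secret store rather than hard-coding it in a script.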

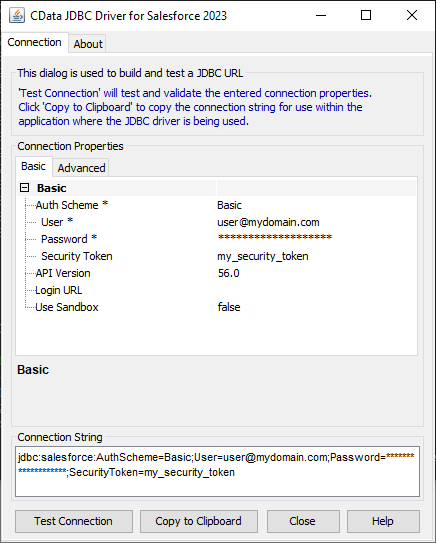

Built-in Connection String Designer

For help constructing the JDBC URL, use the connection string designer built into the Spark JDBC Driver. Either double-click the JAR file or run it from the command line.

java -jar cdata.jdbc.sparksql.jar

Fill in the connection properties and copy the connection string to the clipboard.

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()

The following is a typical connection string:

jdbc:sparksql:Server=127.0.0.1;
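Before pasting a connection string into the [Connection String] property, it can help to check which properties it actually sets. The following is a rough sketch with a hypothetical `parse_sparksql_url` helper; it assumes the simple `Key=Value;` layout shown above and does not handle values that contain semicolons or any escaping rules the driver may define.

```python
URL_PREFIX = "jdbc:sparksql:"


def parse_sparksql_url(url):
    """Split a jdbc:sparksql: URL into a dict of connection properties."""
    if not url.startswith(URL_PREFIX):
        raise ValueError(f"Expected a URL starting with {URL_PREFIX!r}")

    properties = {}
    for part in url[len(URL_PREFIX):].split(";"):
        part = part.strip()
        if not part:
            continue  # skip the empty piece after a trailing semicolon
        key, separator, value = part.partition("=")
        if not separator:
            raise ValueError(f"Property without '=': {part!r}")
        properties[key.strip()] = value.strip()
    return properties


def missing_properties(url, required=("Server",)):
    """Return the required property names that the URL does not set."""
    found = parse_sparksql_url(url)
    return [name for name in required if name not in found]
```

For the sample string above, `parse_sparksql_url("jdbc:sparksql:Server=127.0.0.1;")` returns `{"Server": "127.0.0.1"}`, and `missing_properties` with `required=("Server", "Port")` reports `["Port"]`.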

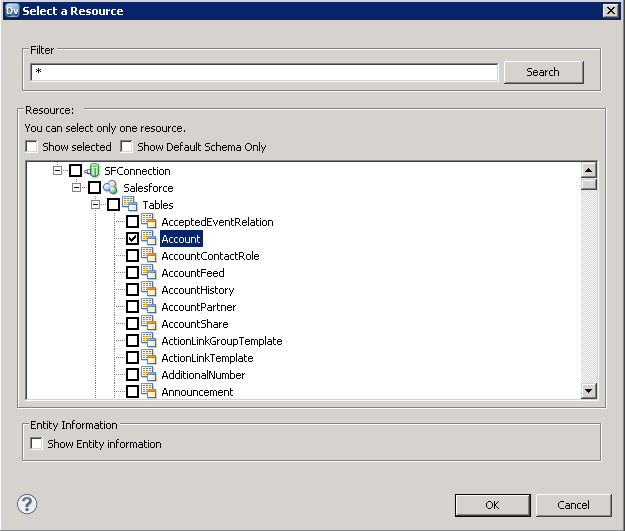

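Browse Spark Tables

After you have added the driver JAR to the classpath and created a JDBC connection, you can access Spark entities in Informatica. Follow the steps below to connect to Spark and browse Spark tables:

- Connect to your repository.

- In the [Connection Explorer], right-click the connection and click [Connect].

- Clear the [Show Default Schema Only] option.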

![The driver models Spark entities as relational tables. (Salesforce is shown.)]()

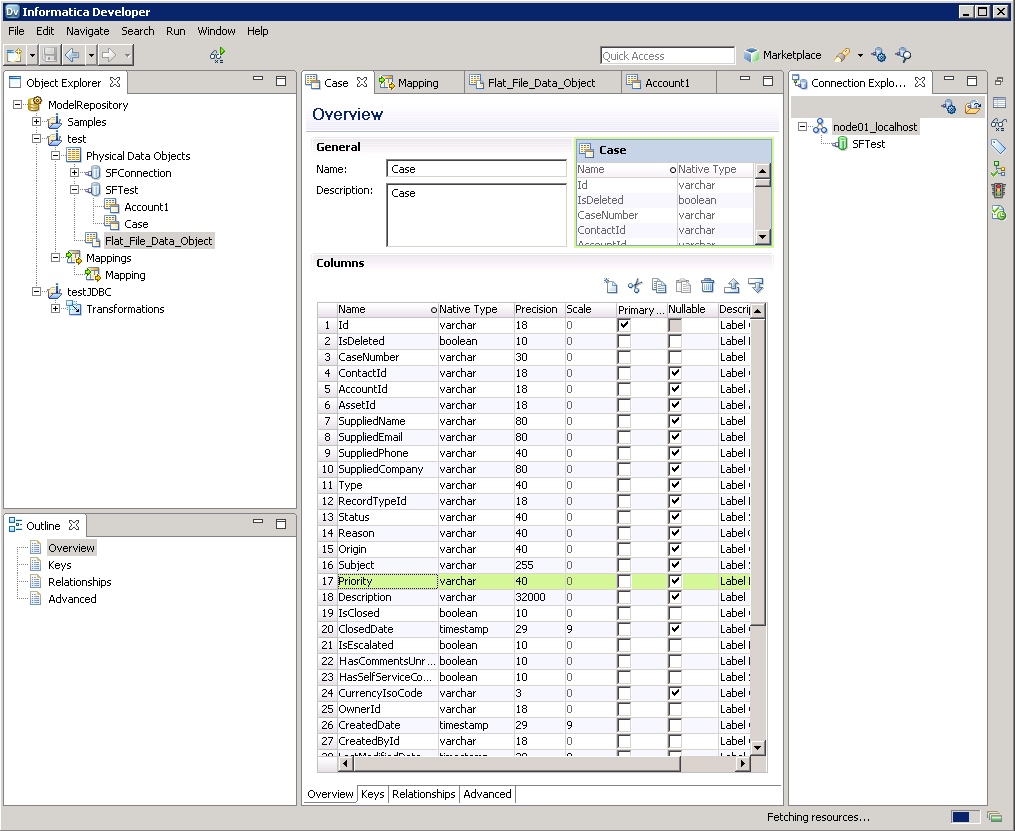

You can now browse Spark tables in the [Data Viewer]: right-click the node for the table and click [Open], then click [Run] in the [Data Viewer].

![Table data and metadata in the Data Viewer. (Salesforce is shown.)]()

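Create Spark Data Objects

Follow the steps below to add Spark tables to your project:

- Select the tables in Spark, then right-click and click [Add to Project].

- In the dialog that appears, select the option to create a data object for each resource.

- In the [Select Location] dialog, select your project.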

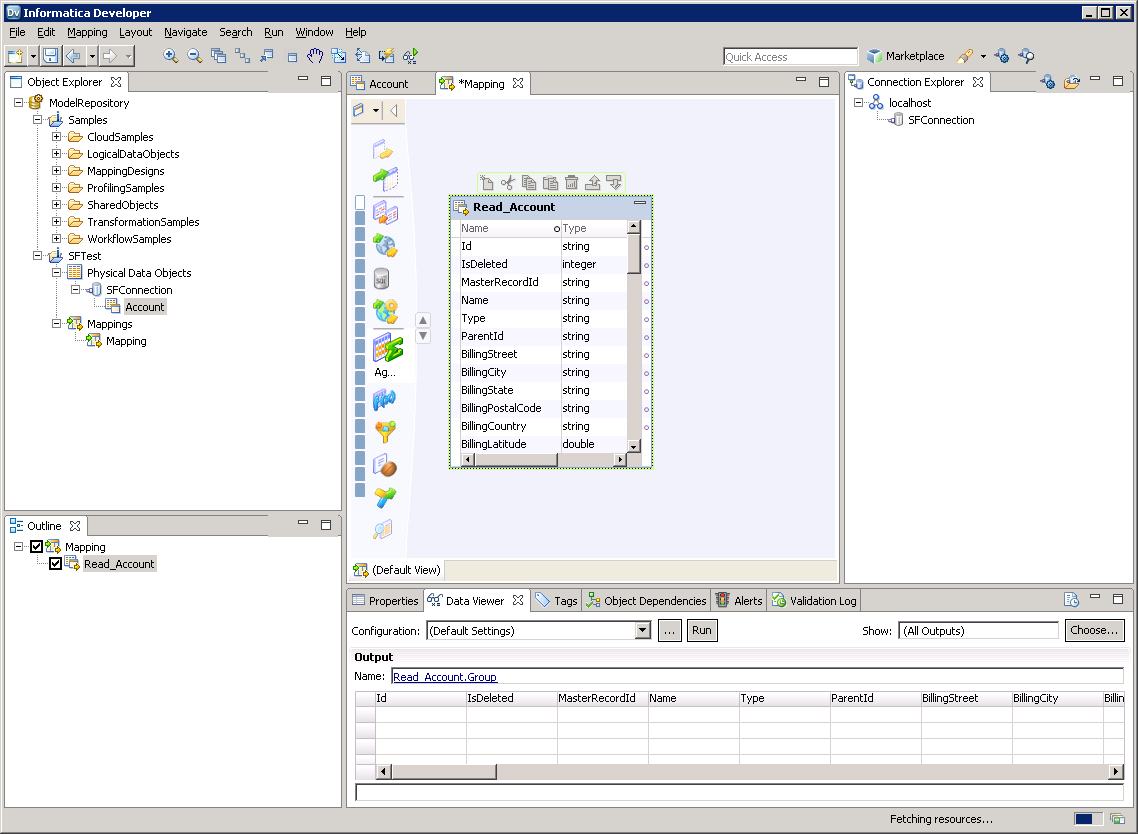

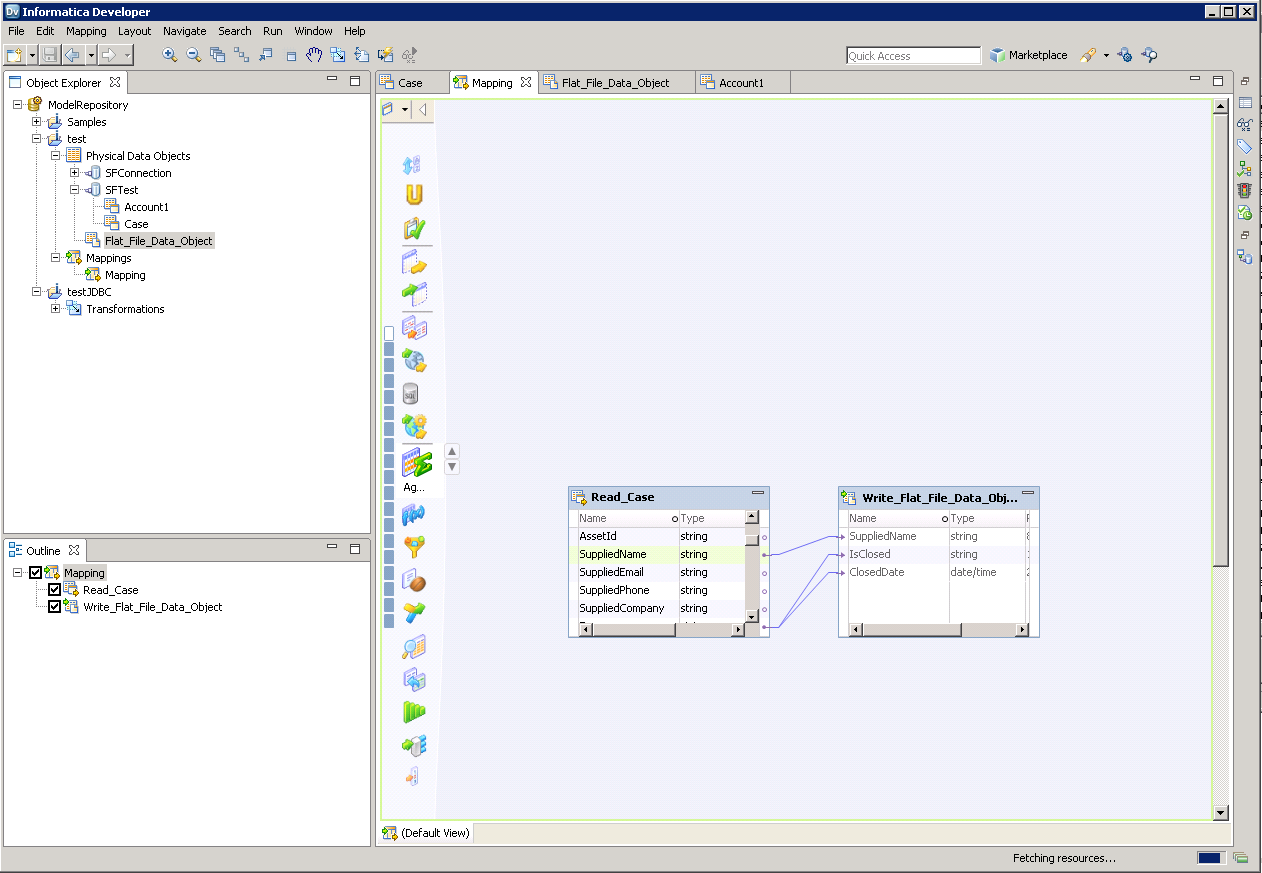

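Create a Mapping

Follow the steps below to add the Spark source to a mapping:

- In the [Object Explorer], right-click your project and click [New] -> [Mapping].

- Expand the node for the Spark connection and drag the data object for the table onto the editor.

- In the dialog that appears, select the [Read] option.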

![The source Spark table in the mapping. (Salesforce is shown.)]()

Follow the steps below to map Spark columns to a flat file:

- In the [Object Explorer], right-click your project and click [New] -> [Data Object].

- Select [Flat File Data Object] -> [Create as Empty] -> [Fixed Width].

- In the properties for the Spark object, select the rows you want, right-click, and click [copy]. Paste the rows into the flat file properties.

- Drag the flat file data object onto the mapping.

- In the dialog that appears, select the [Write] option.

- Click and drag to connect the columns.

To transfer Spark data, right-click in the workspace and click [Run Mapping].

![The completed mapping. (Salesforce is shown.)]()