How to import Amazon S3 Data into Apache Solr

Use the CData JDBC Driver for Amazon S3 in the Data Import Handler to create an automated import of Amazon S3 data into the Apache Solr enterprise search platform.

The Apache Solr platform is a popular, blazing-fast, open source enterprise search solution built on Apache Lucene.

Apache Solr is equipped with the Data Import Handler (DIH), which can import data from databases and from XML, CSV, and JSON files. Paired with the CData JDBC Driver for Amazon S3, the DIH can import Amazon S3 data into Apache Solr. In this article, we show step by step how to use the CData JDBC Driver in the Apache Solr Data Import Handler to import Amazon S3 data for use in enterprise search.

Create an Apache Solr Core and a Schema for Importing Amazon S3 Data

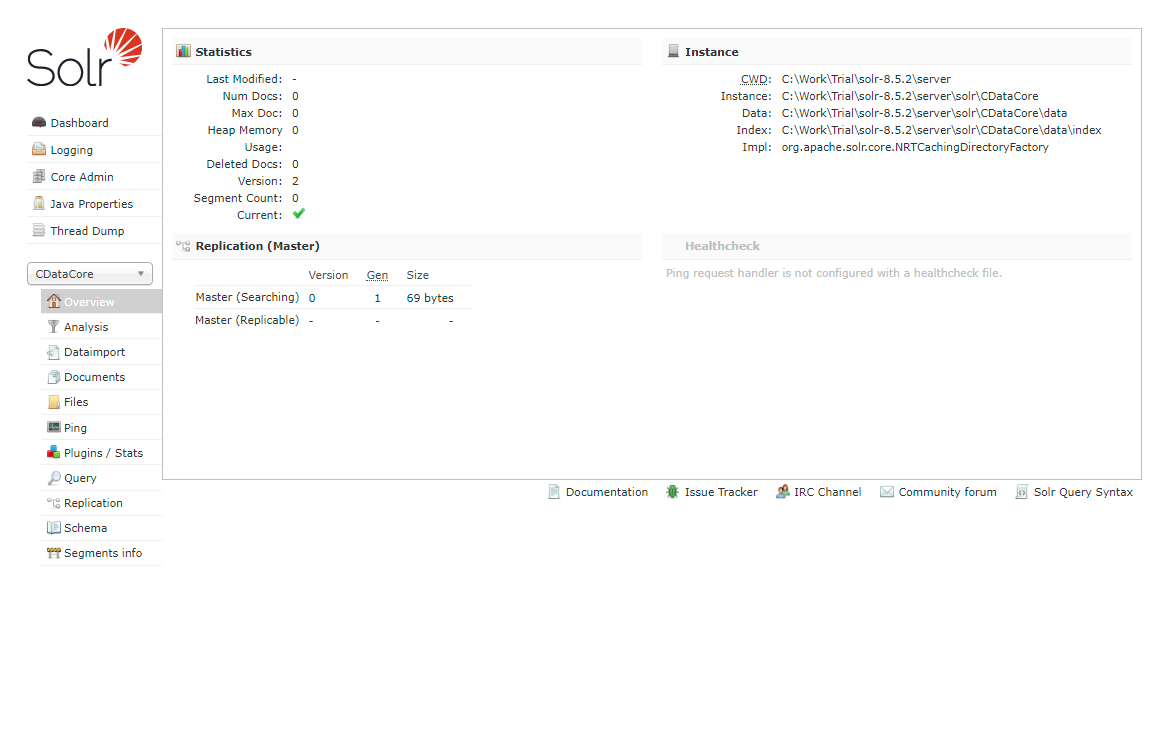

- Run Apache Solr and create a core.

```
> solr create -c CDataCore
```

For this article, Solr runs as a standalone instance in the local environment, and the core is available at http://localhost:8983/solr/#/CDataCore/core-overview.

- Create a schema consisting of "field" objects that represent the columns of the Amazon S3 data to be imported, plus a unique key for the entity. If the Amazon S3 data has a LastModifiedDate column, it is used for incremental updates; if it does not, the deltaQuery described in the next section cannot be used. Save the schema in the managed-schema file created by Apache Solr.

- Install the CData JDBC Driver for Amazon S3, then copy the JAR and license files (cdata.jdbc.amazons3.jar and cdata.jdbc.amazons3.lic) from the driver's lib directory to Solr's lib directory:

  - From (CData JDBC driver): C:\Program Files\CData\CData JDBC Driver for Amazon S3 ####\lib

  - To (Apache Solr): solr-8.5.2\server\lib
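As a rough sketch of the schema step, the Python snippet below prints `<field>` entries and a `<uniqueKey>` entry in managed-schema syntax. It assumes the placeholder column names used in the solr-data-config.xml sample later in this article (Id, AmazonS3Column1 to AmazonS3Column7, LastModifiedDate), the `string` and `pdate` field types from Solr 8's default configset, and Id as the unique key; swap in the columns your Amazon S3 table actually exposes. Because a schema declares only one `<uniqueKey>`, the generated entry would replace the existing one rather than sit beside it.

```python
import xml.etree.ElementTree as ET

# Hypothetical column list mirroring the placeholder names in the sample
# solr-data-config.xml; replace it with your table's real columns.
COLUMNS = [f"AmazonS3Column{i}" for i in range(1, 8)]
UNIQUE_KEY = "Id"                 # assumed unique key column
TIMESTAMP = "LastModifiedDate"    # column the deltaQuery filters on


def field(name, field_type, **extra):
    """Build one managed-schema <field> element."""
    attrs = {"name": name, "type": field_type, "indexed": "true", "stored": "true"}
    attrs.update(extra)
    return ET.Element("field", attrs)


def schema_fragment(columns, unique_key, timestamp):
    """Return <field> lines plus a <uniqueKey> line for managed-schema."""
    # The unique key must be present on every document and single-valued.
    elements = [field(unique_key, "string", required="true", multiValued="false")]
    elements += [field(col, "string") for col in columns]
    # pdate is the point-based date type in Solr 8's default configset.
    elements.append(field(timestamp, "pdate"))
    key = ET.Element("uniqueKey")
    key.text = unique_key
    elements.append(key)
    return "\n".join(ET.tostring(e, encoding="unicode") for e in elements)


if __name__ == "__main__":
    print(schema_fragment(COLUMNS, UNIQUE_KEY, TIMESTAMP))
```

Typing LastModifiedDate as a date rather than a string is what makes date range queries on it possible later.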


Now we are ready to use Amazon S3 data in Solr.

Define an Import of Amazon S3 Data into Apache Solr

In this section, we walk through configuring the Data Import Handler.

- Modify the solrconfig.xml file of the new core: add a reference to the DIH JAR file and the DIH request handler definition.

```xml
<lib dir="${solr.install.dir:../../../..}/dist/" regex="solr-dataimporthandler-.*\.jar" />

<requestHandler name="/dataimport" class="org.apache.solr.handler.dataimport.DataImportHandler">
  <lst name="defaults">
    <str name="config">solr-data-config.xml</str>
  </lst>
</requestHandler>
```

- Next, create solr-data-config.xml in the same directory as solrconfig.xml. In this article, we retrieve a table from Amazon S3, but you can also use a custom SQL query to request data. The driver class and a sample JDBC connection string appear in the sample below.

```xml
<dataConfig>
  <dataSource driver="cdata.jdbc.amazons3.AmazonS3Driver" url="jdbc:amazons3:AccessKey=a123;SecretKey=s123;" />
  <document>
    <entity name="ObjectsACL"
            query="SELECT Id,AmazonS3Column1,AmazonS3Column2,AmazonS3Column3,AmazonS3Column4,AmazonS3Column5,AmazonS3Column6,AmazonS3Column7,LastModifiedDate FROM ObjectsACL"
            deltaQuery="SELECT Id FROM ObjectsACL where LastModifiedDate >= '${dataimporter.last_index_time}'"
            deltaImportQuery="SELECT Id,AmazonS3Column1,AmazonS3Column2,AmazonS3Column3,AmazonS3Column4,AmazonS3Column5,AmazonS3Column6,AmazonS3Column7,LastModifiedDate FROM ObjectsACL where Id=${dataimporter.delta.Id}">
      <field column="Id" name="Id" />
      <field column="AmazonS3Column1" name="AmazonS3Column1" />
      <field column="AmazonS3Column2" name="AmazonS3Column2" />
      <field column="AmazonS3Column3" name="AmazonS3Column3" />
      <field column="AmazonS3Column4" name="AmazonS3Column4" />
      <field column="AmazonS3Column5" name="AmazonS3Column5" />
      <field column="AmazonS3Column6" name="AmazonS3Column6" />
      <field column="AmazonS3Column7" name="AmazonS3Column7" />
      <field column="LastModifiedDate" name="LastModifiedDate" />
    </entity>
  </document>
</dataConfig>
```

- In the query attribute, set the SQL query that selects the data from Amazon S3. deltaQuery and deltaImportQuery drive incremental updates from the second import of the same entity onward: deltaQuery returns the Id of each row whose LastModifiedDate is at or after the previous import time (${dataimporter.last_index_time}), and deltaImportQuery then fetches each of those rows by Id (${dataimporter.delta.Id}).

- After completing these settings, restart Solr.

```
> solr stop -all
> solr start
```
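To make the two-phase incremental import concrete, the sketch below mimics what DIH does on a delta-import: it runs the deltaQuery with the previous import time to collect changed Id values, then runs the deltaImportQuery once per Id to fetch the full rows. An in-memory SQLite table stands in for the CData driver purely for illustration, the queries are trimmed to two data columns, and DIH's `${...}` text substitution is replaced by bound parameters.

```python
import sqlite3

# deltaQuery and deltaImportQuery from solr-data-config.xml (trimmed to fewer
# columns), with DIH's ${dataimporter.last_index_time} and
# ${dataimporter.delta.Id} placeholders turned into bound "?" parameters.
DELTA_QUERY = "SELECT Id FROM ObjectsACL WHERE LastModifiedDate >= ?"
DELTA_IMPORT_QUERY = (
    "SELECT Id, AmazonS3Column1, LastModifiedDate FROM ObjectsACL WHERE Id = ?"
)


def delta_import(conn, last_index_time):
    """Return the rows a delta-import would re-index since last_index_time."""
    # Phase 1 (deltaQuery): collect keys of rows changed since the last import.
    changed_ids = [row[0] for row in conn.execute(DELTA_QUERY, (last_index_time,))]
    # Phase 2 (deltaImportQuery): fetch each changed row in full, one query per key.
    rows = []
    for doc_id in changed_ids:
        rows.extend(conn.execute(DELTA_IMPORT_QUERY, (doc_id,)).fetchall())
    return rows


if __name__ == "__main__":
    # Hypothetical in-memory table standing in for the Amazon S3 data.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE ObjectsACL (Id TEXT PRIMARY KEY, AmazonS3Column1 TEXT, LastModifiedDate TEXT)"
    )
    conn.executemany(
        "INSERT INTO ObjectsACL VALUES (?, ?, ?)",
        [("a", "unchanged", "2020-06-01 09:00:00"), ("b", "edited", "2020-06-02 12:30:00")],
    )
    # DIH records last_index_time as "yyyy-MM-dd HH:mm:ss"; only row "b" is newer.
    print(delta_import(conn, "2020-06-02 00:00:00"))
```

Unlike this sketch, DIH substitutes `${dataimporter.delta.Id}` as plain text, so if Id is a string column the deltaImportQuery may need the value quoted, as the deltaQuery already does for the timestamp.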

Run a Data Import of Amazon S3 Data

- Execute DataImport from the URL below:

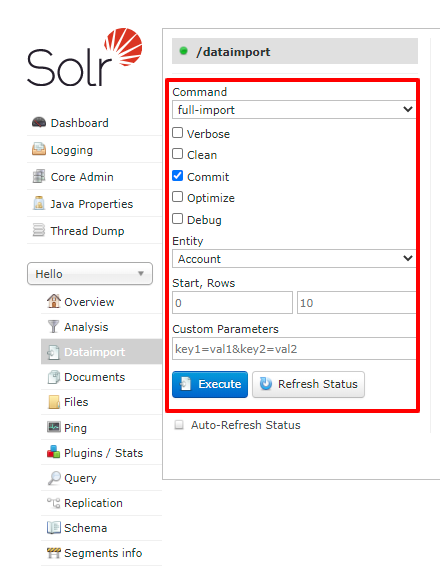

http://localhost:8983/solr/#/CDataCore/dataimport//dataimport![Load Amazon S3 data to Solr using Data Import.]()

- Select the "full-import" command, choose the table in Entity, and click "Execute."


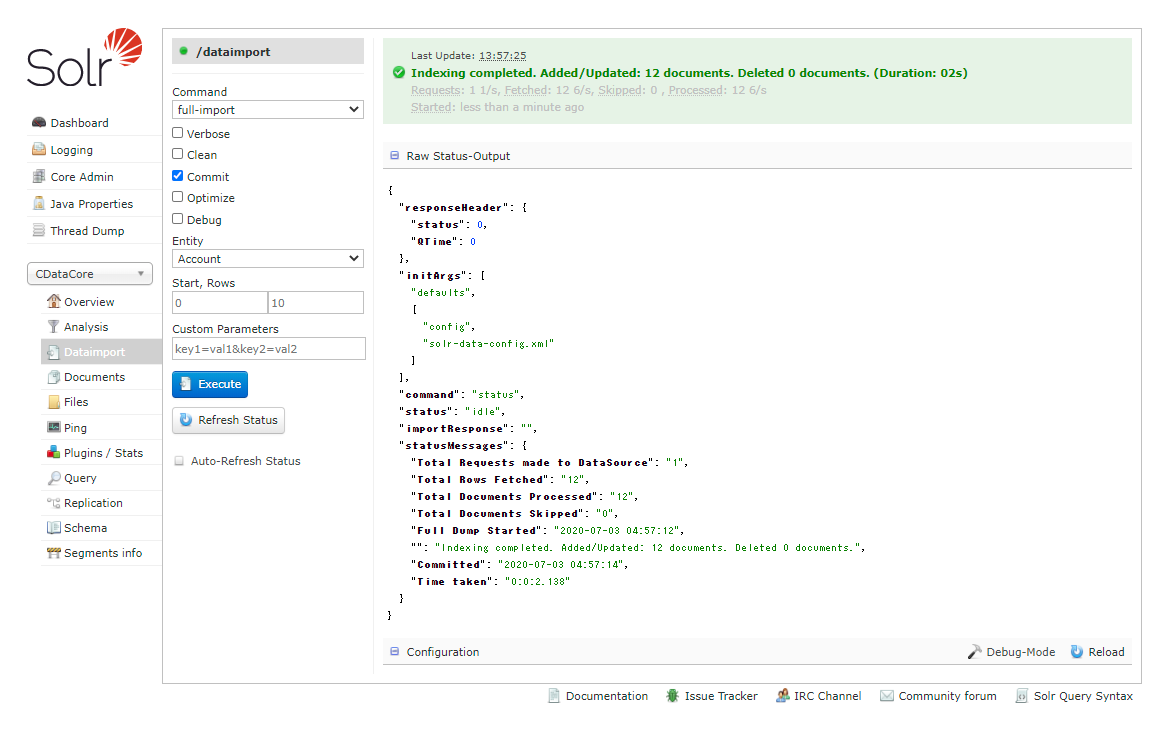

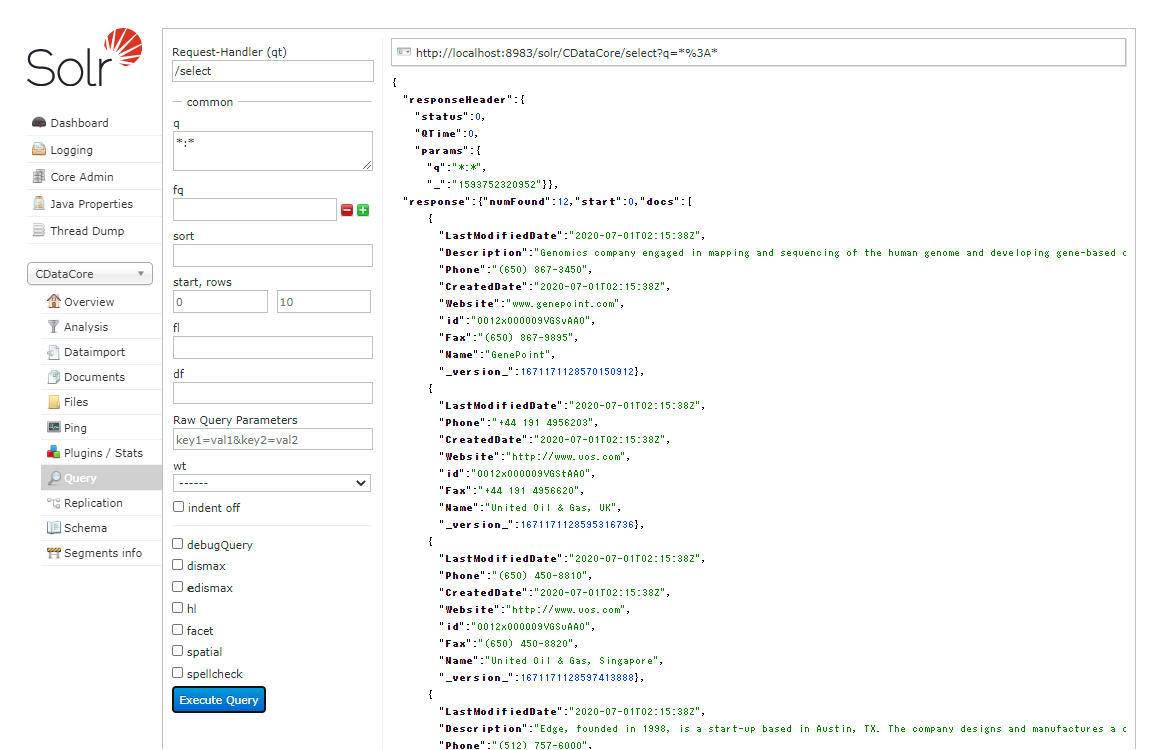
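The Execute button sends an HTTP request to the core's /dataimport handler, so the same import can also be scripted, for example from a scheduler. The minimal sketch below assumes the core name CDataCore, the entity ObjectsACL, and Solr on localhost:8983 as in this article; command, entity, commit, and wt are standard DIH and Solr request parameters.

```python
import json
import urllib.parse
import urllib.request

# Assumed values matching this article's local setup.
SOLR_BASE = "http://localhost:8983/solr"
CORE = "CDataCore"
ENTITY = "ObjectsACL"


def dataimport_url(command, entity=ENTITY, base=SOLR_BASE, core=CORE):
    """Build the /dataimport request URL for a DIH command such as full-import."""
    params = {"command": command, "entity": entity, "commit": "true", "wt": "json"}
    return f"{base}/{core}/dataimport?{urllib.parse.urlencode(params)}"


def run_dataimport(command):
    """Send the command to Solr and return the handler's JSON response."""
    with urllib.request.urlopen(dataimport_url(command)) as resp:
        return json.load(resp)


# Usage (requires a running Solr instance):
#   run_dataimport("full-import")
# DIH starts the import in the background, so the call returns before
# indexing has finished.
```

Passing "delta-import" instead of "full-import" scripts the incremental update in the same way.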

- Check the result of the import on the Query screen.


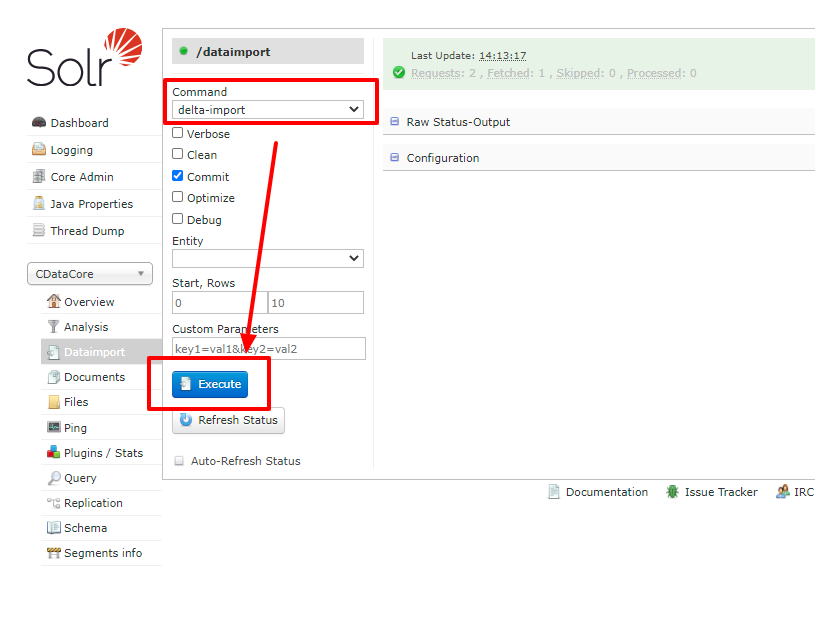
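Outside the Admin UI, the same check can be run against the standard /select handler. The sketch below, assuming the same local core URL, asks for zero rows and reads response.numFound from the JSON response; that count can then be compared with the number of rows in the source table. The fetch parameter exists only so the function can be exercised without a running Solr.

```python
import json
import urllib.parse
import urllib.request

SELECT_URL = "http://localhost:8983/solr/CDataCore/select"  # assumed core URL


def fetch_json(url):
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def count_documents(query="*:*", fetch=fetch_json):
    """Return how many indexed documents match query (rows=0 skips the docs)."""
    params = urllib.parse.urlencode({"q": query, "rows": 0, "wt": "json"})
    body = fetch(f"{SELECT_URL}?{params}")
    return body["response"]["numFound"]


# Usage (requires a running Solr instance):
#   print(count_documents())
```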

- Next, try an incremental update using deltaQuery: modify some records in the original Amazon S3 data set, then select the "delta-import" command in the Data Import screen and click "Execute."


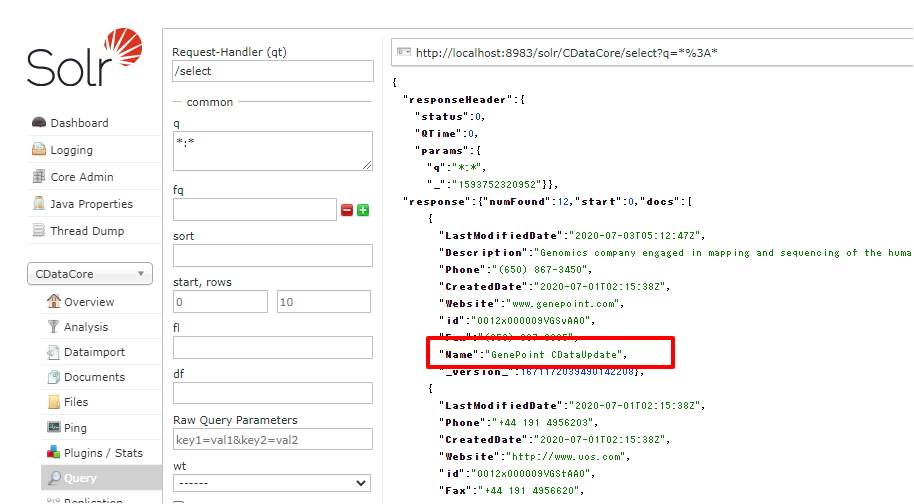
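Because DIH runs imports asynchronously, a script that triggers a delta-import usually has to wait for it to finish before checking the results. A minimal polling sketch, assuming the same local core URL: command=status is the DIH status command, and the status value in its JSON response stays "busy" while an import is running. The fetch and sleep parameters, along with the polling interval and limit, are illustrative choices that keep the loop testable without a running Solr.

```python
import json
import time
import urllib.request

# Assumed DIH status URL for this article's local core.
STATUS_URL = "http://localhost:8983/solr/CDataCore/dataimport?command=status&wt=json"


def fetch_json(url):
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def wait_until_idle(fetch=fetch_json, sleep=time.sleep, interval=2.0, max_polls=150):
    """Poll the DIH status command until it is no longer busy; return the last response."""
    for _ in range(max_polls):
        body = fetch(STATUS_URL)
        if body.get("status") != "busy":
            return body
        sleep(interval)
    raise TimeoutError("data import still busy after the polling limit")


# Usage (requires a running Solr instance):
#   final = wait_until_idle()
#   print(final.get("statusMessages"))
```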

- Check the result of the incremental update.

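One way to confirm that the edited records were refreshed is a range query on the LastModifiedDate field. The sketch below assumes that field is indexed with a date type such as pdate and builds Solr's range syntax from a Python datetime; Solr expects UTC timestamps in YYYY-MM-DDThh:mm:ssZ form. The fl field list and core URL are assumptions matching this article's setup.

```python
import urllib.parse
from datetime import datetime, timezone


def modified_since_query(since):
    """Build a Solr range query matching documents modified at or after since."""
    # Solr date fields use ISO-8601 in UTC with a trailing Z.
    stamp = since.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return f"LastModifiedDate:[{stamp} TO *]"


def select_url(query, core_url="http://localhost:8983/solr/CDataCore"):
    """Return a /select URL that lists Id and LastModifiedDate for matches."""
    params = {"q": query, "fl": "Id,LastModifiedDate", "wt": "json"}
    return f"{core_url}/select?{urllib.parse.urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical cut-off: the time just before the source records were edited.
    since = datetime(2020, 6, 2, tzinfo=timezone.utc)
    print(select_url(modified_since_query(since)))
```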

With the CData JDBC Driver for Amazon S3, you can create an automated import of Amazon S3 data into Apache Solr. Download a free, 30-day trial of any of the 200+ CData JDBC Drivers and get started today.