Integrate Redshift with External Services using SnapLogic

Use CData JDBC drivers in SnapLogic to integrate Redshift with External Services.

SnapLogic is an integration platform-as-a-service (iPaaS) that allows users to create data integration flows with no code. When paired with the CData JDBC Drivers, users get access to live data from more than 250 SaaS, Big Data, and NoSQL sources, including Redshift, in their SnapLogic workflows.

With built-in optimized data processing, the CData JDBC Driver offers unmatched performance for interacting with live Redshift data. When platforms issue complex SQL queries to Redshift, the driver pushes supported SQL operations, like filters and aggregations, directly to Redshift and uses the embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. Its built-in dynamic metadata querying lets you work with Redshift data using native data types.

Connect to Redshift in SnapLogic

To connect to Redshift data in SnapLogic, download and install the CData Redshift JDBC Driver. Follow the installation dialog. When the installation is complete, the JAR file can be found in the installation directory (C:/Program Files/CData/CData JDBC Driver for Amazon Redshift/lib by default).

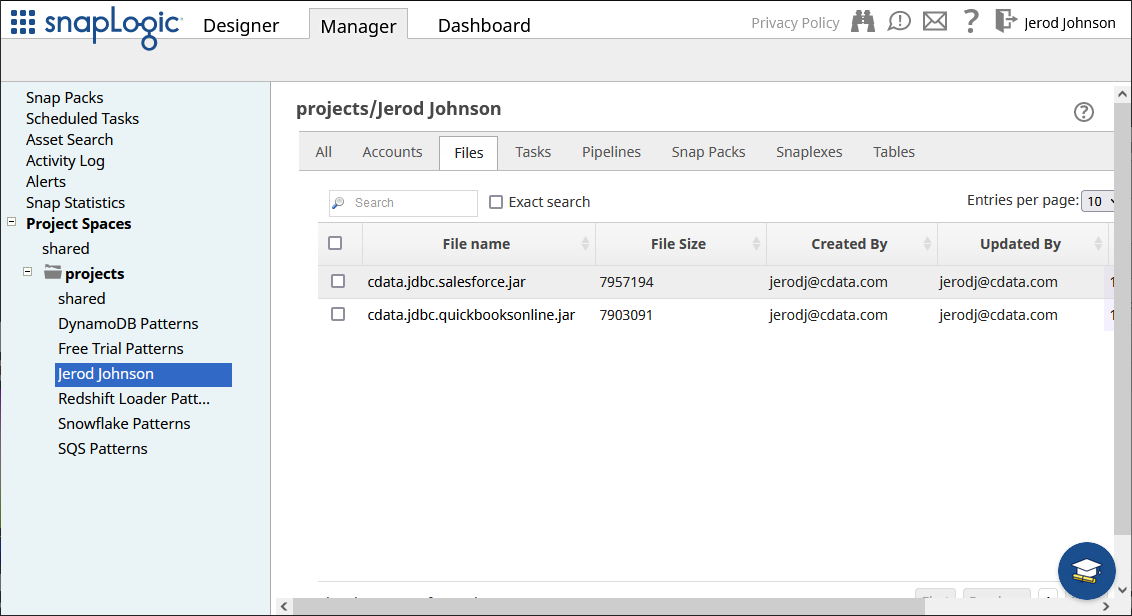

Upload the Redshift JDBC Driver

After installation, upload the JDBC JAR file to a location in SnapLogic (for example, projects/Jerod Johnson) from the Manager tab.

Configure the Connection

Once the JDBC Driver is uploaded, we can create the connection to Redshift.

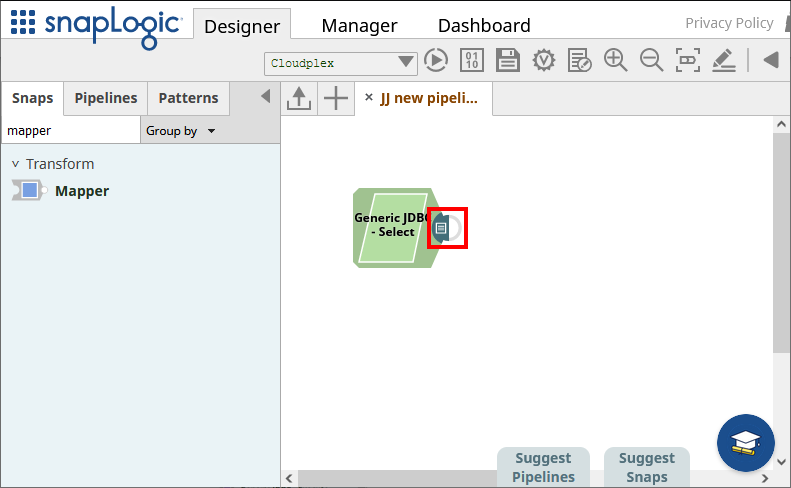

- Navigate to the Designer tab

- Expand "JDBC" from Snaps and drag a "Generic JDBC - Select" snap onto the designer

![Adding a Generic JDBC snap onto the designer]()

- Click Add Account (or select an existing one) and click "Continue"

- In the next form, configure the JDBC connection properties:

- Under JDBC JARs, add the JAR file we previously uploaded

- Set JDBC Driver Class to cdata.jdbc.redshift.RedshiftDriver

- Set JDBC URL to a JDBC connection string for the Redshift JDBC Driver, for example:

jdbc:redshift:User=admin;Password=admin;Database=dev;Server=examplecluster.my.us-west-2.redshift.amazonaws.com;Port=5439;RTK=XXXXXX;

NOTE: RTK is a trial or full key. Contact our Support team for more information.
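Before entering the URL in SnapLogic, it can help to confirm that it works from plain JDBC. The following is a minimal sketch of such a check, assuming the CData JAR is on the classpath and that the driver registers itself with `DriverManager` automatically, as JDBC 4 drivers normally do. The class name `RedshiftUrlCheck` and the method `canConnect` are illustrative names, not part of the driver, and the URL values are placeholders taken from the example above.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

final class RedshiftUrlCheck {

    /**
     * Tries to open and close a connection with the given JDBC URL.
     * Returns false (and prints the reason) if no registered driver
     * accepts the URL or the connection attempt fails.
     */
    static boolean canConnect(String jdbcUrl) {
        try (Connection conn = DriverManager.getConnection(jdbcUrl)) {
            // isValid performs a lightweight check; 5 is the timeout in seconds.
            return conn.isValid(5);
        } catch (SQLException e) {
            System.err.println("Connection failed: " + e.getMessage());
            return false;
        }
    }

    public static void main(String[] args) {
        // Placeholder values; replace them with your own cluster details.
        String url = "jdbc:redshift:User=admin;Password=admin;Database=dev;"
                + "Server=examplecluster.my.us-west-2.redshift.amazonaws.com;Port=5439;";
        System.out.println(canConnect(url) ? "Connected" : "Not connected");
    }
}
```

If the check fails with a "No suitable driver" message, the JAR is most likely missing from the classpath rather than the URL being wrong.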

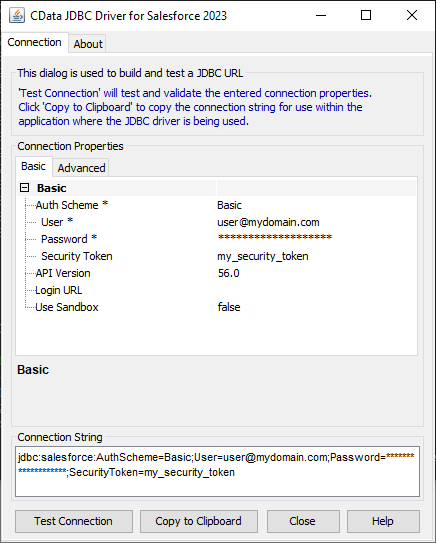

![Configuring a connection (Salesforce is shown)]()

Built-In Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Redshift JDBC Driver. Either double-click the JAR file or run the JAR file from the command line.

java -jar cdata.jdbc.redshift.jar

Fill in the connection properties and copy the connection string to the clipboard.

To connect to Redshift, set the following:

- Server: Set this to the host name or IP address of the cluster hosting the Database you want to connect to.

- Port: Set this to the port of the cluster.

- Database: Set this to the name of the database, or leave it blank to use the default database of the authenticated user.

- User: Set this to the username you want to use to authenticate to the Server.

- Password: Set this to the password you want to use to authenticate to the Server.
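If you prefer to assemble the connection string in code rather than in the designer, the properties above can be joined into the `jdbc:redshift:Name=value;` form shown earlier. This is a rough sketch under that assumption; `RedshiftUrlBuilder` and `buildUrl` are hypothetical names, and the sketch does not escape values that contain a semicolon, so check the driver documentation if your password does.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class RedshiftUrlBuilder {

    /** Joins name=value pairs into "jdbc:redshift:Name=value;...;" form. */
    static String buildUrl(Map<String, String> props) {
        StringBuilder sb = new StringBuilder("jdbc:redshift:");
        for (Map.Entry<String, String> e : props.entrySet()) {
            String value = e.getValue();
            // Skip properties left blank, such as an unset Database.
            if (value == null || value.isEmpty()) {
                continue;
            }
            sb.append(e.getKey()).append('=').append(value).append(';');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the properties in insertion order.
        Map<String, String> props = new LinkedHashMap<>();
        props.put("User", "admin");
        props.put("Password", "admin");
        props.put("Database", "dev");
        props.put("Server", "examplecluster.my.us-west-2.redshift.amazonaws.com");
        props.put("Port", "5439");
        System.out.println(buildUrl(props));
    }
}
```

Additional properties, such as the RTK key, can be added as further map entries in the same way.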

You can obtain the Server and Port values in the AWS Management Console:

- Open the Amazon Redshift console (http://console.aws.amazon.com/redshift).

- On the Clusters page, click the name of the cluster.

- On the Configuration tab for the cluster, copy the cluster URL from the connection strings displayed.
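The value copied from the console typically combines the host, port, and database in one string. The sketch below splits such a string into the Server, Port, and Database properties, assuming it has the form `host:port/database`, optionally preceded by a `jdbc:redshift://` style prefix. The class `RedshiftEndpoint` is a hypothetical helper, and the sample endpoint is a made-up placeholder.

```java
final class RedshiftEndpoint {
    final String server;
    final int port;
    final String database; // empty when the endpoint names no database

    private RedshiftEndpoint(String server, int port, String database) {
        this.server = server;
        this.port = port;
        this.database = database;
    }

    /** Splits "host:port/database" into its three parts. */
    static RedshiftEndpoint parse(String endpoint) {
        String rest = endpoint.trim();

        // Drop a scheme prefix such as "jdbc:redshift://" if a full URL was copied.
        int scheme = rest.indexOf("://");
        if (scheme >= 0) {
            rest = rest.substring(scheme + 3);
        }

        // Everything after the first slash is treated as the database name.
        String database = "";
        int slash = rest.indexOf('/');
        if (slash >= 0) {
            database = rest.substring(slash + 1);
            rest = rest.substring(0, slash);
        }

        // Fall back to 5439, the default Redshift port, when none is given.
        int port = 5439;
        int colon = rest.lastIndexOf(':');
        if (colon >= 0) {
            port = Integer.parseInt(rest.substring(colon + 1));
            rest = rest.substring(0, colon);
        }
        return new RedshiftEndpoint(rest, port, database);
    }

    public static void main(String[] args) {
        RedshiftEndpoint e = parse(
                "examplecluster.abc123xyz789.us-west-2.redshift.amazonaws.com:5439/dev");
        System.out.println("Server=" + e.server + ";Port=" + e.port
                + ";Database=" + e.database + ";");
    }
}
```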

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()

- After entering the connection properties, click "Validate" and "Apply"

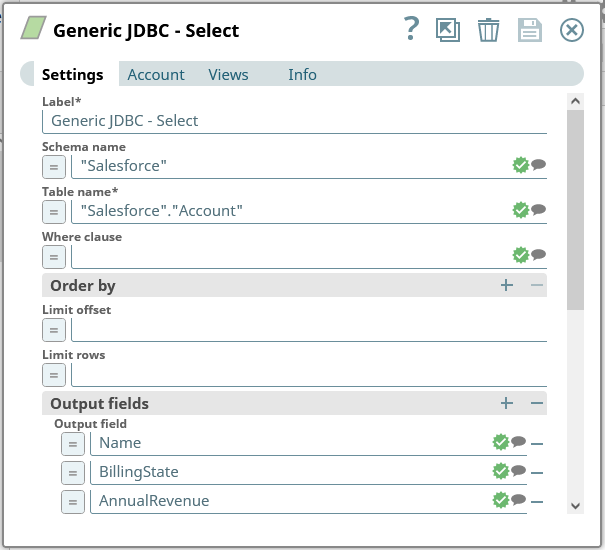

Read Redshift Data

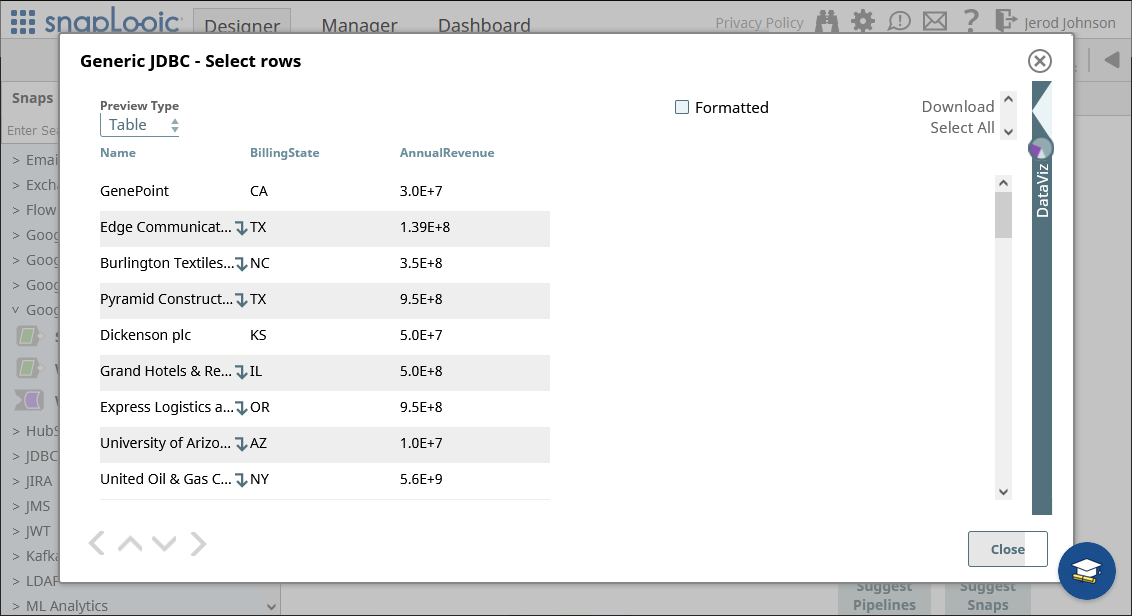

In the form that opens after validating and applying the connection, configure your query.

- Set Schema name to "Redshift"

- Set Table name to a Redshift table, qualified with the schema name, for example: "Redshift"."Orders" (use the drop-down to see the full list of available tables)

- Add Output fields for each item you wish to work with from the table

Save the Generic JDBC - Select snap.

With connection and query configured, click the end of the snap to preview the data (highlighted below).

Once you confirm the results are what you expect, you can add additional snaps to funnel your Redshift data to another endpoint.
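For reference, the Select snap settings correspond roughly to an ordinary SQL SELECT over the chosen table and output fields. The sketch below builds such a statement so you can see how the schema name, table name, and output fields fit together. It is not necessarily the exact SQL that SnapLogic generates, `SelectSketch` is a hypothetical helper, and the column names `Id` and `ShipCity` are invented for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

final class SelectSketch {

    /** Wraps an identifier in double quotes, doubling any embedded quote. */
    static String quote(String identifier) {
        return "\"" + identifier.replace("\"", "\"\"") + "\"";
    }

    /** Builds a SELECT over schema.table; an empty field list selects all columns. */
    static String buildSelect(String schema, String table, List<String> fields) {
        String columns = fields.isEmpty()
                ? "*"
                : fields.stream().map(SelectSketch::quote).collect(Collectors.joining(", "));
        return "SELECT " + columns + " FROM " + quote(schema) + "." + quote(table);
    }

    public static void main(String[] args) {
        // "Id" and "ShipCity" are made-up column names.
        System.out.println(buildSelect("Redshift", "Orders", Arrays.asList("Id", "ShipCity")));
    }
}
```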

Piping Redshift Data to External Services

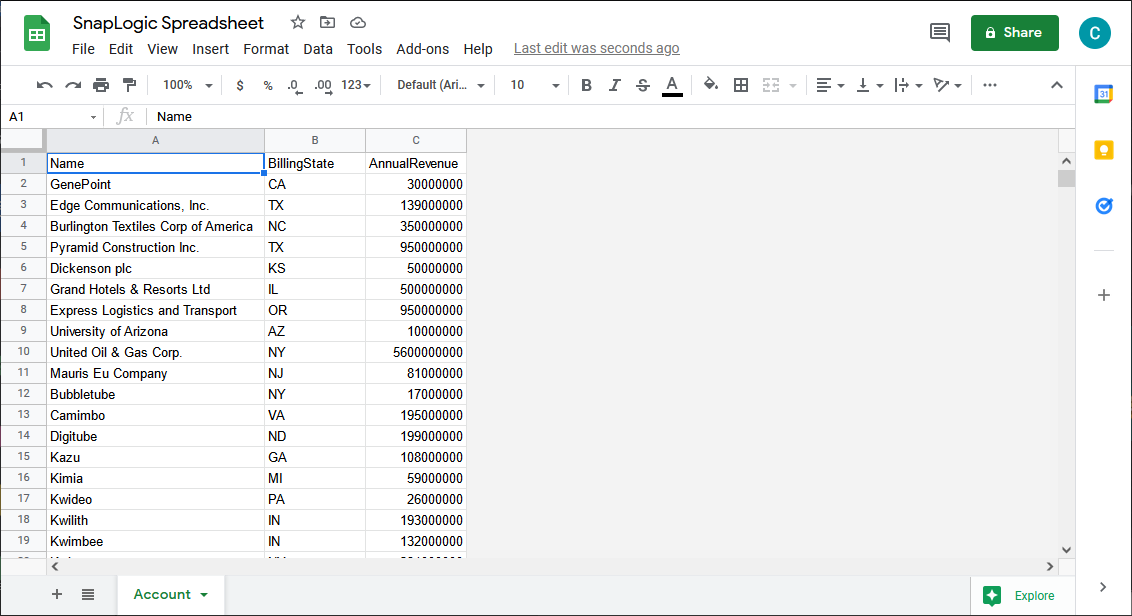

For this article, we will load data into a Google Spreadsheet. You can use any of the supported snaps, or even use a Generic JDBC snap with another CData JDBC Driver, to move data into an external service.

- Start by dropping a "Worksheet Writer" snap onto the end of the "Generic JDBC - Select" snap.

- Add an account to connect to Google Sheets

![Connecting to Google]()

- Configure the Worksheet Writer snap to write your Redshift data to a Google Spreadsheet

![Writing to a Google Spreadsheet]()

You can now execute the fully configured pipeline to extract data from Redshift and push it into a Google Spreadsheet.

Piping External Data to Redshift

You can also use the JDBC Driver for Redshift in SnapLogic to write data to Redshift. Start by adding a Generic JDBC - Insert or Generic JDBC - Update snap to the designer.

- Select the existing "Account" (connection) or create a new one

- Configure the query:

- Set Schema name to "Redshift"

- Set Table name to a Redshift table, qualified with the schema name, for example: "Redshift"."Orders" (use the drop-down to see the full list of available tables)

![Configuring an INSERT snap (Salesforce is shown)]()

- Save the Generic JDBC - Insert/Update snap

At this point, you have configured a snap to write data to Redshift, inserting new records or updating existing ones.
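Conceptually, an insert against the configured table resembles a parameterized INSERT statement with one placeholder per incoming field. The sketch below builds such a statement as an illustration. It is an assumption about the general shape of the SQL, not a description of what the snap sends, `InsertSketch` is a hypothetical helper, and the column names are invented.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.stream.Collectors;

final class InsertSketch {

    /** Builds "INSERT INTO schema.table (cols) VALUES (?, ...)" with one ? per column. */
    static String buildInsert(String schema, String table, List<String> columns) {
        if (columns.isEmpty()) {
            throw new IllegalArgumentException("at least one column is required");
        }
        // Quote each column name so mixed-case names are preserved.
        String cols = columns.stream()
                .map(c -> "\"" + c + "\"")
                .collect(Collectors.joining(", "));
        // One "?" placeholder per column, to be bound by a PreparedStatement.
        String marks = String.join(", ", Collections.nCopies(columns.size(), "?"));
        return "INSERT INTO \"" + schema + "\".\"" + table + "\" ("
                + cols + ") VALUES (" + marks + ")";
    }

    public static void main(String[] args) {
        // "Id" and "ShipCity" are made-up column names.
        System.out.println(buildInsert("Redshift", "Orders", Arrays.asList("Id", "ShipCity")));
    }
}
```

Outside SnapLogic, a statement of this shape would be passed to `Connection.prepareStatement` and its placeholders bound with the `setString`, `setInt`, and related methods before execution.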

More Information & Free Trial

Using the CData JDBC Driver for Amazon Redshift, you can create a pipeline in SnapLogic for integrating Redshift data with external services. For more information about connecting to Redshift, see our CData JDBC Driver for Amazon Redshift page. Download a free, 30-day trial of the CData JDBC Driver for Amazon Redshift and get started today.