Connect to Spark Data as an External Source in Dremio

Use the CData JDBC Driver to connect to Spark as an External Source in Dremio.

The CData JDBC Driver for Apache Spark implements the JDBC standard and allows applications, including Dremio, to work with live Spark data. Dremio is a data lakehouse platform designed to enable self-service, interactive analytics on the data lake. With the CData JDBC Driver, you can include live Spark data as part of your enterprise data lake. This article describes how to connect to Spark data from Dremio as an External Source.

The CData JDBC Driver enables high-speed access to live Spark data in Dremio. Once you install the driver and authenticate with Spark, you have immediate access to Spark data within your data lake. The driver surfaces Spark data using native data types and handles complex filters, aggregations, and other operations automatically, giving you seamless access to Spark data.

Build the ARP Connector

To use the CData JDBC Driver in Dremio, you need to build an Advanced Relational Pushdown (ARP) Connector. You can view the source code for the connector on GitHub or download the ZIP file directly. Once you clone or extract the files, run the following command from the root directory of the connector (the directory containing the pom.xml file) to build the connector.

mvn clean install

Once the JAR file for the connector is built (in the target directory), you are ready to copy the ARP connector and JDBC Driver to your Dremio instance.
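If you script the remaining steps, it helps to capture the path of the built JAR. The shell function below is a minimal sketch and is not part of the connector project: find_connector_jar is a hypothetical name, and it assumes the JAR follows the dremio-sparksql-plugin-*.jar naming used in the docker cp command below.

```shell
# Hypothetical helper: print the path of the connector JAR built by
# `mvn clean install`. Assumes the JAR is named dremio-sparksql-plugin-*.jar
# and skips any -sources, -javadoc, or -tests JARs next to it.
find_connector_jar() {
  local target_dir="${1:-target}"
  local jar
  for jar in "$target_dir"/dremio-sparksql-plugin-*.jar; do
    # With no match, the glob stays literal and the file does not exist.
    [ -e "$jar" ] || continue
    case "$jar" in
      *-sources.jar|*-javadoc.jar|*-tests.jar) continue ;;
    esac
    printf '%s\n' "$jar"
    return 0
  done
  echo "no connector JAR found in $target_dir" >&2
  return 1
}
```

For example, `CONNECTOR_JAR=$(find_connector_jar)` run from the connector root stores the path for the copy step; if several matching JARs exist, the function returns the first one in glob order.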

Installing the Connector and JDBC Driver

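Copy the ARP Connector JAR to %DREMIO_HOME%/jars/ and the JDBC Driver for Spark to %DREMIO_HOME%/jars/3rdparty/, then restart Dremio so it loads the new JARs. If Dremio runs in a Docker container (where the Dremio home directory is /opt/dremio), you can use commands similar to the following: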

ARP Connector

docker cp PATH\TO\dremio-sparksql-plugin-20.0.0.jar dremio_container_name:/opt/dremio/jars/

JDBC Driver for Spark

docker cp PATH\TO\cdata.jdbc.sparksql.jar dremio_container_name:/opt/dremio/jars/3rdparty/
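For a Docker deployment, the two copy commands can be wrapped in one function together with a container restart, since Dremio loads plugin JARs at startup. This is a minimal sketch: install_sparksql_jars is a hypothetical name, the container name and JAR paths are placeholders you supply, and it assumes the container uses the /opt/dremio layout shown above.

```shell
# Hypothetical helper: copy the ARP connector and the CData JDBC driver into
# a running Dremio container, then restart it so the new JARs are loaded.
install_sparksql_jars() {
  local container="${1:-}" connector_jar="${2:-}" driver_jar="${3:-}"
  if [ -z "$container" ] || [ -z "$connector_jar" ] || [ -z "$driver_jar" ]; then
    echo "usage: install_sparksql_jars CONTAINER CONNECTOR_JAR DRIVER_JAR" >&2
    return 2
  fi
  # ARP connector -> Dremio jars directory
  docker cp "$connector_jar" "$container:/opt/dremio/jars/" || return 1
  # CData JDBC driver -> third-party jars directory
  docker cp "$driver_jar" "$container:/opt/dremio/jars/3rdparty/" || return 1
  # Restart the container so Dremio picks up the plugin.
  docker restart "$container"
}
```

For example: `install_sparksql_jars dremio_container_name target/dremio-sparksql-plugin-20.0.0.jar PATH/TO/cdata.jdbc.sparksql.jar`. The function stops at the first failed copy, so a bad path does not trigger a needless restart.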

Connecting to Spark

Spark will now appear as an External Source option in Dremio. The ARP Connector you built uses a JDBC URL to connect to Spark data. The JDBC Driver has a built-in connection string designer that you can use (see below).

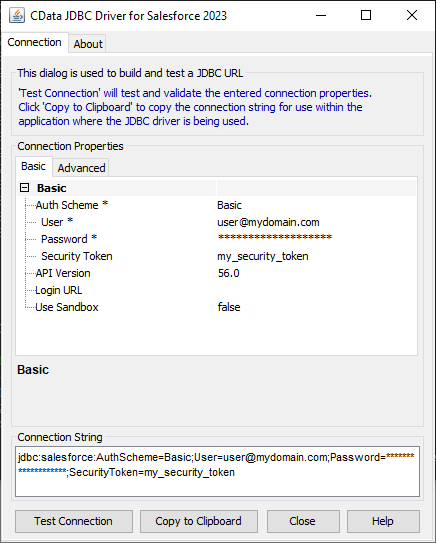

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Spark JDBC Driver. Double-click the JAR file or run it from the command line:

java -jar cdata.jdbc.sparksql.jar

Set the Server, Database, User, and Password connection properties to connect to SparkSQL, then copy the connection string to the clipboard.

NOTE: To use the JDBC Driver in Dremio, you will need a license (full or trial) and a Runtime Key (RTK). For more information on obtaining this license (or a trial), contact our sales team.

Add the Runtime Key (RTK) to the JDBC URL. You will end up with a JDBC URL similar to the following:

jdbc:sparksql:RTK=5246...;Server=127.0.0.1;
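If you generate the URL in a script rather than in the designer, you can assemble it from property/value pairs. The sketch below assumes only the jdbc:sparksql:Property=Value; shape of the example URL; build_sparksql_url is a hypothetical helper, and it does not quote or escape values that contain semicolons.

```shell
# Hypothetical helper: build a jdbc:sparksql: URL from KEY=VALUE arguments,
# appending each pair followed by ';' in the order given.
# Values containing ';' are not quoted or escaped by this sketch.
build_sparksql_url() {
  local url="jdbc:sparksql:" pair
  for pair in "$@"; do
    case "$pair" in
      *=*) url="${url}${pair};" ;;
      *) echo "not a KEY=VALUE pair: $pair" >&2; return 1 ;;
    esac
  done
  printf '%s\n' "$url"
}
```

For example, `build_sparksql_url "RTK=$CDATA_RTK" "Server=127.0.0.1"`, where CDATA_RTK is a hypothetical environment variable holding your Runtime Key, produces a URL of the same form as the example above while keeping the key out of the script itself.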

Access Spark as an External Source

To add Spark as an External Source, add a new source in Dremio and select SparkSQL. Copy the JDBC URL and paste it into the New SparkSQL Source wizard.

Save the connection and you are ready to query live Spark data in Dremio, easily incorporating Spark data into your data lake.

More Information & Free Trial

Using the CData JDBC Driver for Apache Spark in Dremio, you can incorporate live Spark data into your data lake. Check out our CData JDBC Driver for Apache Spark page for more information about connecting to Spark. Download a free, 30-day trial of the CData JDBC Driver for Apache Spark and get started today.