Connect to cloud data with no code.

Learn more → CData Software Japan - Knowledge Base

Latest Articles

- How to publish MySQL data as a REST API with no code: CData API Server

- Launching the CData Sync AMI on Amazon Web Services (AWS)

- Connect Cloud Guide: Derived Views, Saved Queries, and Custom Reports

- Connect Cloud Guide: SSO (Single Sign-On) and User-Defined Credentials

- Connect Cloud Quick Start

- How our products are handling the Shopify API version upgrade

Latest KB Entries

- DBAmp: Serial Number Expiration Date Shows 1999 or Expired

- About CData Drivers licensing

- Spring4Shell Overview

- Update Required: HubSpot Connectivity

- Configuring incremental updates in CData Sync

- Apache Log4j2 Overview

ODBC Drivers

- [ article ] Using the data integration tool Qlik Replicate with DB2 data and MySQL ...

- [ article ] Automatically insert OData into Excel spreadsheets using the CDATAQUERY function

- [ article ] Connect to Act CRM as an external data source with PolyBase

- [ article ] Use the ActiveCampaign ODBC Driver in MicroStrategy Web

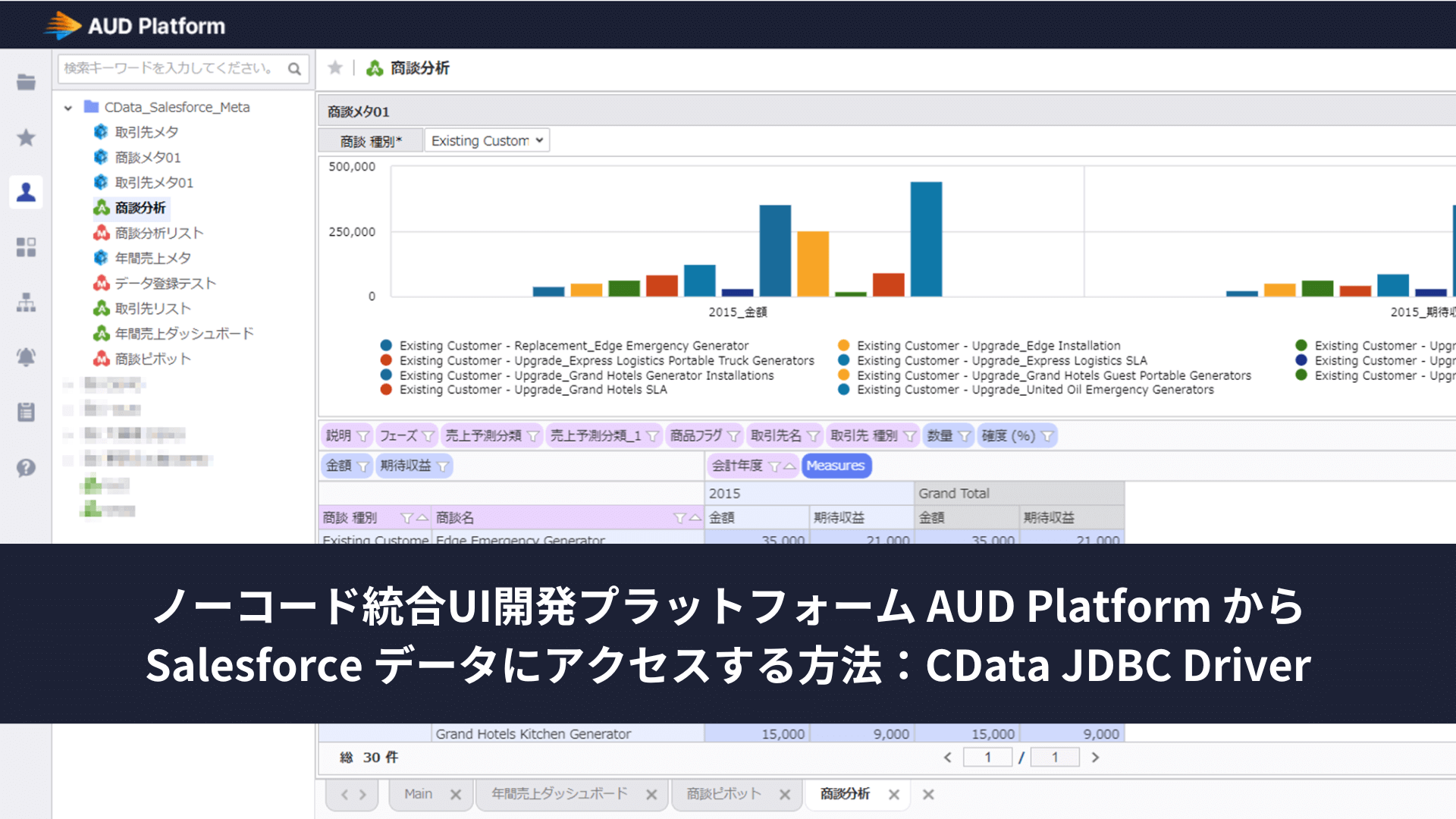

JDBC Drivers

- [ article ] Connect to QuickBase data and build queries in DbVisualizer

- [ article ] Import real-time Spark data into ColdFusion and build applications

- [ article ] Using the Elasticsearch JDBC Driver in OBIEE: Elasticsearch ...

- [ article ] Using the ETL/ELT tool Embulk with SAP Business One data and DB ...

SSIS Components

- [ article ] Back up Zoho Books to SQL Server via SSIS

- [ article ] Back up Zendesk to SQL Server via SSIS

- [ article ] From TigerGraph data, SQL Server ...

- [ article ] From Google Campaign Manager data, SQL Server ...

ADO.NET Providers

- [ article ] Trying out LINQ to Authorize.Net data

- [ article ] Import Adobe Commerce data into SQL Server using SSIS

- [ article ] Publish Crystal Reports using HCL Domino data

- [ article ] Connect to Kafka data in TIBCO Spotfire via ADO.NET and visualize it

Excel Add-Ins

- [ article ] Using the CData Software ODBC Driver with Asprova and Excel ...

- [ article ] In Microsoft Power BI Designer, CData Software ODBC ...

- [ article ] Use Excel to add data to Google Ad Manager, or Google Ad ...

- [ article ] How to use Excel to add data to XML and edit XML data

API Server

- [ article ] Connect to OData from Tableau with the JDBC Driver and visualize it

- [ article ] Work with OData data in the database solution SkyLink

- [ article ] Using Power Apps dataflows with OData data and Microsoft Dataverse ...

- [ article ] How to connect to OData data in ColdFusion

Data Sync

- [ article ] ETL/ELT of Dynamics 365 data to SQLite ...

- [ article ] Comparing four ways to connect SAS Data Sets data to SQL Server

- [ article ] ETL/ELT of Tally data to a local CSV file ...

- [ article ] ETL/ELT of Monday.com data to MongoDB ...

Windows PowerShell

- [ article ] Using a PowerShell script with Microsoft Dataverse data and SQL ...

- [ article ] How to replicate OneNote data to MySQL with PowerShell

- [ article ] Connect to OData data from PowerShell to retrieve, update, insert, and delete data, CSV ...

- [ article ] How to connect to Cosmos DB data from PowerShell and update, insert, and delete data

FireDAC Components

- [ article ] Data-bound controls for PostgreSQL data in Delphi

- [ article ] Data-bound controls for Zoho Projects data in Delphi

- [ article ] Data-bound controls for ActiveCampaign data in Delphi

- [ article ] Data-bound controls for Yahoo! Shopping data in Delphi