Discover how a bimodal integration strategy can address the major data management challenges facing your organization today.

Get the Report →Importing Google BigQuery Data into H2O

CData JDBC Drivers provide self-service integration with machine learning and AI platforms such as H2O. The CData JDBC Driver for Google BigQuery allows you to import BigQuery tables to H2OFrames in memory. This article details how to use the JDBC driver in R or Python to import BigQuery data into H2O and create a Generalized Linear Model (GLM) based on the data.

The CData JDBC Drivers offer unmatched performance for interacting with live BigQuery data in H2O due to optimized data processing built into the driver. With embedded dynamic metadata querying, you can visualize and analyze BigQuery data using native H2O data types.

Start H2O with the JDBC Driver

Install the CData JDBC Driver for BigQuery and copy the JAR file (cdata.jdbc.bigquery.jar) and accompanying LIC file (cdata.jdbc.bigquery.lic) to the folder containing the H2O JAR file (h2o.jar). Once copied, you will need to start H2O with the JDBC Driver:

$ java -cp "cdata.jdbc.bigquery.jar:h2o.jar" water.H2OApp

Import BigQuery Data into H20

With H2O running, we can connect to the instance and use the import_sql_table function to import Google BigQuery data into the H2O instance.

Connecting to a Dataset

You can connect to a specific project and dataset by providing authentication to Google and then setting the Project and Dataset properties.

If you want to view a list of information about the available datasets and projects for your Google Account, execute a query to the Datasets or Projects view after you authenticate.

Authenticating to Google

You can authenticate with a Google account, a Google Apps account, or a service account. A service account is required to delegate domain-wide access. The authentication process follows the OAuth 2.0 authentication standard. See the Getting Started section of the help documentation for an authentication how-to.

Import Data from a Table

In the code samples below (R and Python), we use the import_sql_table function to import a Google BigQuery table into an H2O cloud. For this article, we will import a table representing DVD rental payments (download the CSV).

- connection_url - The JDBC URL to connect to Google BigQuery using the CData JDBC Driver. For example, jdbc:bigquery:DataSetId=MyDataSetId;ProjectId=MyProjectId;InitiateOAuth=GETANDREFRESH;

- table - The name of the BigQuery table to import.

- columns - (Optional) The list of column names to import from the BigQuery table. All columns are imported by default.

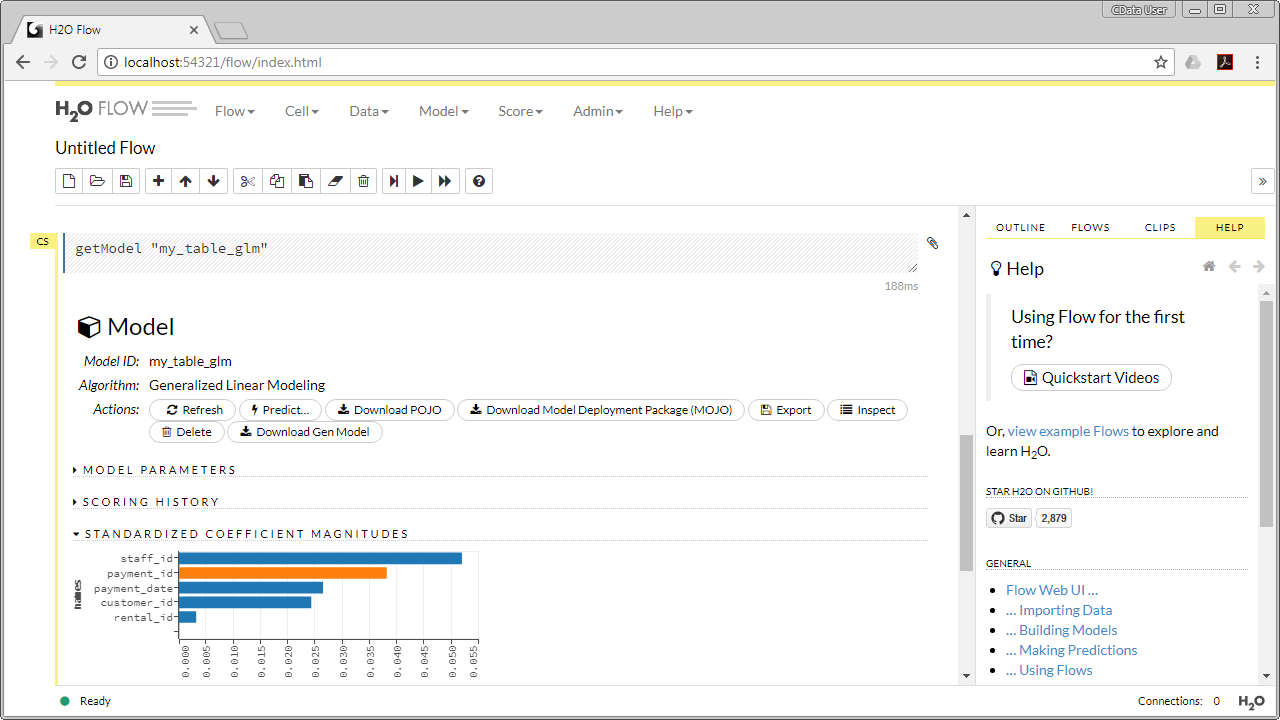

Create a Model

Once the data is imported, we can create a GLM, using the existing data as the training set. In R, this simply means calling the glm function, passing the predictor names, response variable and training frame. In Python, we import the H20GeneralizedLinearEstimator class and train the model based on the same parameters. With the model created and trained, you are ready to validate and create predictions based on new sets.

Code Samples

R Code

In R, we connect to the H2O instance (or create a new instance), set the variables, and import the table. Once the table is imported, we fit a GLM to the table using the glm function, passing the following parameters:

- x - The vector containing the predictor variables to use in building the model.

- y - The name of the response variable in the data.

- training_frame - The Id of the training data frame.

With the GLM fit, we simply display the model.

library(h2o) h2o.init() h2o.init(strict_version_check = FALSE) connection_url <- "jdbc:bigquery:DataSetId=MyDataSetId;ProjectId=MyProjectId;InitiateOAuth=GETANDREFRESH;" table <- "payment" my_table <- h2o.import_sql_table(connection_url, table, username = "", password = "") # X is the index of the response variable pred_names <- names(my_table)[-X] my_table_glm <- h2o.glm(x = pred_names, y = "amount", training_frame = my_table)

Python Code

In Python, we connect to the H2O instance (or create a new instance), import the H2OGeneralizedLinearEstimator class, set the variables, and import the table. Once the table is imported, we create a GLM and then train the model, passing the following parameters (by default the train method uses all columns in the training frame except the response variable as predictor variables):

- y - The name of the response variable in the data.

- training_frame - The Id of the training data frame.

import h2o h2o.init() h2o.init(strict_version_check = False) from h2o.estimators.glm import H2OGeneralizedLinearEstimator connection_url = "jdbc:googlebigquery:DataSetId=MyDataSetId;ProjectId=MyProjectId;InitiateOAuth=GETANDREFRESH;" table = "payment" my_table = h2o.import_sql_table(connection_url, table, username = "", password = "") my_glm = H2OGeneralizedLinearEstimator(model_id='my_table_glm') my_glm.train(y = 'amount', training_frame = my_table)

With the code run, you now have a new model in H2O based on the "payment" table using the "amount" column as the response variable.

With the new GLM, you are ready to have H2O validate and make predictions on new data based on the "payment" table, allowing you to use machine learning and AI algorithms to drive analytics and create actionable insights to drive business.