Discover how a bimodal integration strategy can address the major data management challenges facing your organization today.

Get the Report →Amazon Redshift: Million Row Challenge

Use the CData JDBC Driver to upload one million rows into Amazon Redshift in less than two minutes.

In this article, we take on the challenge of loading one million rows of data into Amazon Redshift. To meet the challenge, we use the our JDBC Driver for Redshift in a simple Java application paired with a CSV file.

200x Faster than the Native Drivers

As outlined in several other articles, our drivers are industry leaders when it comes to performance. Our developers have spent countless hours optimizing the performance in processing data, for reads and writes, ensuring that our drivers are not the bottleneck that limits your ability to succeed.

In the context of Amazon Redshift, we reduce the time to insert one million rows of data from six or more hours (using the native drivers) to less than two minutes (approximately 200 times faster than the native drivers).

The Test

To reproduce our results, you need only download a trial of the CData Software JDBC Driver for Amazon Redshift and configure a new table on a Redshift cluster. From there, you are prepared to experience the CData difference by loading data faster than with any other driver. For this article, we used the JDBC driver in a simple, custom Java application, but thanks to the breadth of our offerings, you can see the same performance in many other tools and development platforms, including most popular BI, reporting, and ETL tools, custom applications in Java, .NET, and other languages, PowerShell, Microsoft SSIS, and many more.

- Configure an Amazon Redshift cluster. Once the cluster is configured, create the DDL for the target table.

DROP TABLE customer_load_challenge; CREATE TABLE customer_load_challenge ( cust_id INTEGER NOT NULL, name VARCHAR(25) NOT NULL, address VARCHAR(25) NOT NULL, city VARCHAR(10) NOT NULL, nation VARCHAR(15) NOT NULL, region VARCHAR(12) NOT NULL, phone VARCHAR(15) NOT NULL );

- Download a free trial of the CData Software JDBC Driver for Amazon Redshift.

- Download the CSV file with sample data.

- Create a simple application to batch the data from the CSV file and load each batch into Amazon Redshift. Our application made use of the addBatch() method for a PreparedStatement to maximize the size of each batch (and therefore minimize the number of calls made to Amazon Redshift).

Sample Code

//one batch Connection connection = DriverManager.getConnection("jdbc:redshift:server=" + server + ";port=" + port + ";user=" + user + ";password=" + password + ";database=dev;AllowPreparedStatement=true;"); String cmd = "INSERT INTO customer_load_challenge (cust_id, name, address, city, nation, region, phone ) VALUES (?,?,?,?,?,?,?)"; PreparedStatement pstmt = connection.prepareStatement(cmd); for (int row = 0; row < rows.length; row++){ String[] line = rows[row].split(","); for (int i = 0 ; i < line.length; i++) { pstmt.setString(i + 1, line[i]); } pstmt.addBatch(); } int[] affected = pstmt.executeBatch(); - Execute the application.

The Results

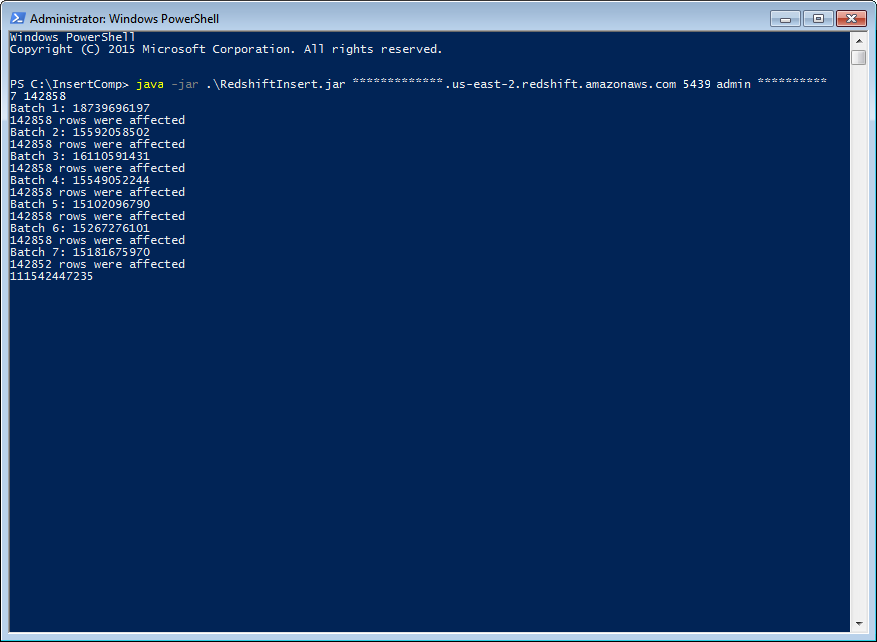

Below, you can see the results of loading one million rows in 7 batches (the batch size was based on Redshift limitations), printing the number of nanoseconds for each batch, along with the total number of nanoseconds required to insert the entire set (approximately 1 minute, 51 seconds):

More Information & Free Trials

Reducing the time to load one million rows from around six hours to under two minutes is just one of the benefits of using the drivers by CData Software. With our richly featured, high-performance drivers, you get a single, uniform experience with all of your data, no matter where it is (cloud-based or on-premises, SaaS or application-based, NoSQL or RDBMS), backed by a world-class Support Team. Download a free, 30 day trial of any of our Amazon Redshift drivers and experience the CData difference today.