Integrate Kafka Data in Your Informatica Cloud Instance

Use the CData JDBC Driver for Apache Kafka with the Informatica Cloud Secure Agent to access live Kafka data from Informatica Cloud.

Informatica Cloud allows you to perform extract, transform, and load (ETL) tasks in the cloud. With the Cloud Secure Agent and the CData JDBC Driver for Apache Kafka, you get live access to Kafka data directly within Informatica Cloud. In this article, we will walk through downloading and registering the Cloud Secure Agent, connecting to Kafka through the JDBC Driver, and generating a mapping that can be used in any Informatica Cloud process.

Informatica Cloud Secure Agent

To work with Kafka data through the JDBC Driver, first install the Cloud Secure Agent.

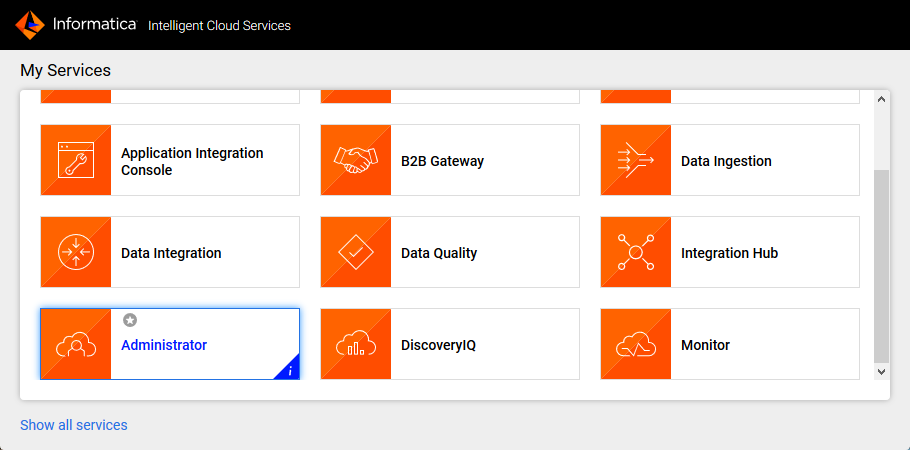

- Navigate to the Administrator page in Informatica Cloud

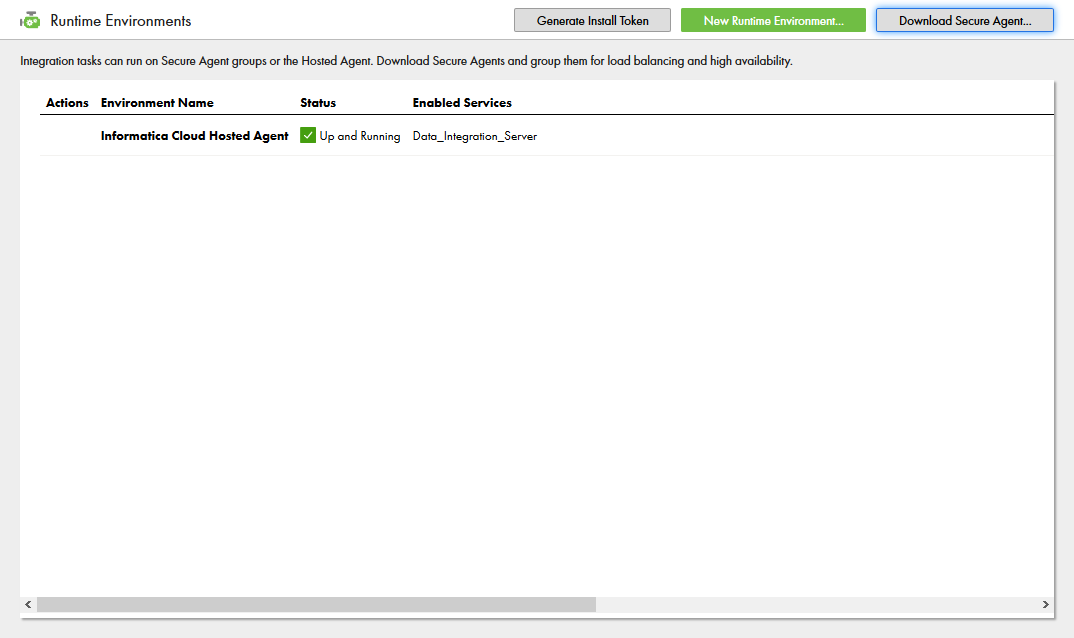

- Select the Runtime Environments tab

- Click "Download Secure Agent"

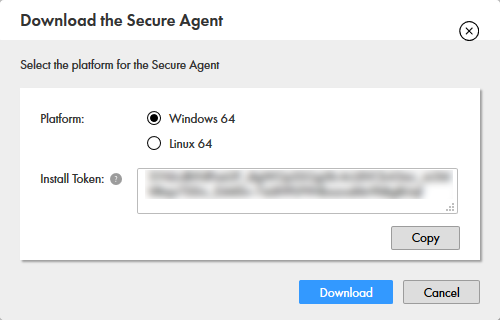

- Make note of the Install Token

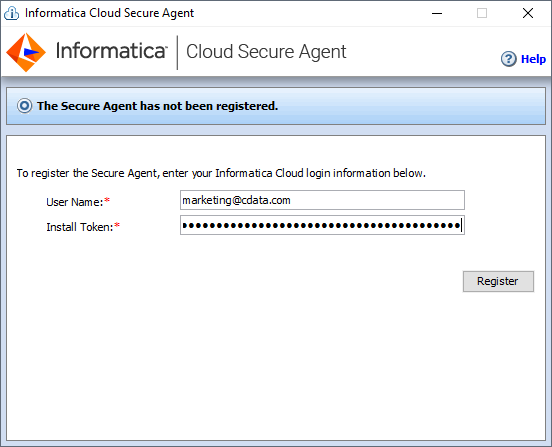

- Run the installer on the client machine and register the Cloud Secure Agent with your username and install token

NOTE: It may take some time for all of the Cloud Secure Agent services to get up and running.

Connecting to the Kafka JDBC Driver

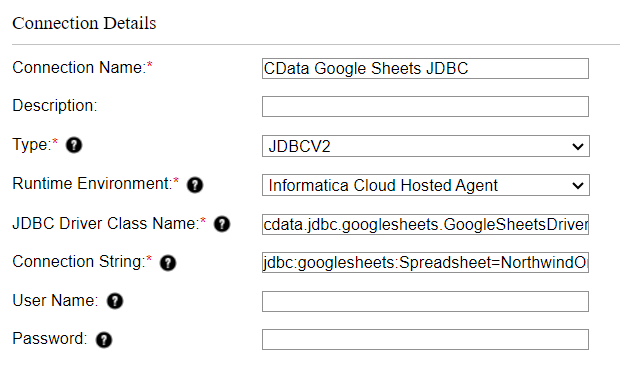

With the Cloud Secure Agent installed and running, you are ready to connect to Kafka through the JDBC Driver. Start by opening the Connections tab and clicking New Connection. Fill in the following properties for the connection:

- Connection Name: Name your connection (e.g., CData Kafka Connection)

- Type: Select "JDBC_IC (Informatica Cloud)"

- Runtime Environment: Select the runtime environment where you installed the Cloud Secure Agent

- JDBC Connection URL: Set this to the JDBC URL for Kafka. Your URL will look similar to the following:

jdbc:apachekafka:User=admin;Password=pass;BootStrapServers=https://localhost:9091;Topic=MyTopic;

Set the BootstrapServers and Topic properties to specify the address of your Apache Kafka server and the topic you would like to interact with.
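
The URL is the jdbc:apachekafka: prefix followed by semicolon-terminated Property=Value pairs. If you generate URLs from scripts or configuration files, a small helper keeps that layout consistent. The following is a minimal sketch, not part of the CData driver: the class and method names are hypothetical, and it assumes values never contain ; or = (the driver's quoting rules for such values are not covered here, so the helper rejects them).

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper (not part of the CData driver): builds a
// jdbc:apachekafka: URL from Property=Value pairs, preserving insertion order.
class KafkaJdbcUrl {
    static final String PREFIX = "jdbc:apachekafka:";

    static String build(Map<String, String> props) {
        StringBuilder sb = new StringBuilder(PREFIX);
        for (Map.Entry<String, String> e : props.entrySet()) {
            String value = e.getValue();
            // Assumption: this sketch does not implement the driver's quoting rules,
            // so it rejects values that would break the Name=Value; layout.
            if (value.contains(";") || value.contains("=")) {
                throw new IllegalArgumentException("Unsupported character in value of " + e.getKey());
            }
            sb.append(e.getKey()).append('=').append(value).append(';');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the properties in the order they are added.
        Map<String, String> props = new LinkedHashMap<>();
        props.put("User", "admin");
        props.put("Password", "pass");
        props.put("BootStrapServers", "https://localhost:9091");
        props.put("Topic", "MyTopic");
        System.out.println(build(props));
    }
}
```

Running main prints the same URL as the sample above, because the map preserves the order in which the properties were added.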

Authorization Mechanisms

- SASL Plain: The User and Password properties should be specified. AuthScheme should be set to 'Plain'.

- SASL SSL: The User and Password properties should be specified. AuthScheme should be set to 'Scram'. UseSSL should be set to true.

- SSL: The SSLCert and SSLCertPassword properties should be specified. UseSSL should be set to true.

- Kerberos: The User and Password properties should be specified. AuthScheme should be set to 'Kerberos'.

If you need to trust the server certificate, specify the TrustStorePath property and, if required, the TrustStorePassword property.
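
The authorization rules above amount to a lookup from mechanism to required properties. As a sketch, they could be encoded as follows; the enum, the helper names, and the trust store path and password are hypothetical, while the property names and values (User, Password, AuthScheme, UseSSL, SSLCert, SSLCertPassword, TrustStorePath, TrustStorePassword) come from the list above. The resulting fragment would be appended to a URL that also sets BootstrapServers and Topic.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch: encodes the authorization rules listed in the article as connection
// properties. Names of the enum and helpers are hypothetical.
class KafkaAuthProps {
    enum Mechanism { SASL_PLAIN, SASL_SSL, SSL, KERBEROS }

    /**
     * Returns the properties a mechanism needs. For SSL the two arguments are
     * SSLCert and SSLCertPassword; for the other mechanisms, User and Password.
     */
    static Map<String, String> forMechanism(Mechanism m, String first, String second) {
        Map<String, String> p = new LinkedHashMap<>();
        switch (m) {
            case SASL_PLAIN:
                p.put("User", first);
                p.put("Password", second);
                p.put("AuthScheme", "Plain");
                break;
            case SASL_SSL:
                p.put("User", first);
                p.put("Password", second);
                p.put("AuthScheme", "Scram");
                p.put("UseSSL", "true");
                break;
            case SSL:
                p.put("SSLCert", first);
                p.put("SSLCertPassword", second);
                p.put("UseSSL", "true");
                break;
            case KERBEROS:
                p.put("User", first);
                p.put("Password", second);
                p.put("AuthScheme", "Kerberos");
                break;
        }
        return p;
    }

    /** Adds trust store settings; the password is only set when one is given. */
    static void addTrustStore(Map<String, String> p, String path, String password) {
        p.put("TrustStorePath", path);
        if (password != null && !password.isEmpty()) {
            p.put("TrustStorePassword", password);
        }
    }

    public static void main(String[] args) {
        Map<String, String> p = forMechanism(Mechanism.SASL_SSL, "admin", "pass");
        // Hypothetical trust store location and password.
        addTrustStore(p, "/path/to/truststore.jks", "changeit");
        StringBuilder fragment = new StringBuilder();
        p.forEach((k, v) -> fragment.append(k).append('=').append(v).append(';'));
        System.out.println(fragment);
    }
}
```

Keeping the rules in one method makes it harder to forget a companion setting, such as UseSSL=true for SASL SSL.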

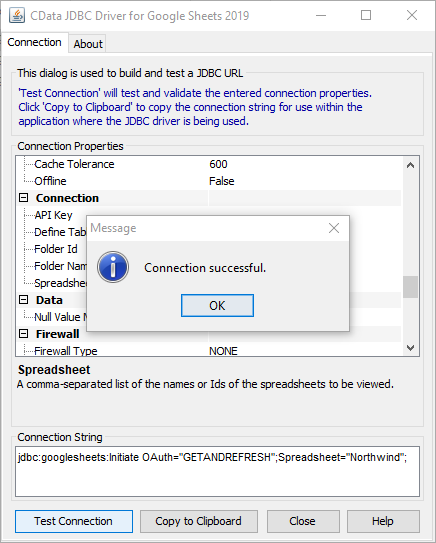

Built-In Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Kafka JDBC Driver. Either double-click the .jar file or execute the .jar file from the command-line.

java -jar cdata.jdbc.apachekafka.jarFill in the connection properties and copy the connection string to the clipboard.

![Using the built-in connection string designer to generate a JDBC URL (Google Sheets is shown.)]()

- JDBC Jar Directory: Set this to the lib folder in the installation location for the JDBC Driver (on Windows, typically C:\Program Files\CData[product_name]\)

- Driver Class: Set this to cdata.jdbc.apachekafka.ApacheKafkaDriver

- Username: Set this to the username for Kafka

- Password: Set this to the password for Kafka
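
Before saving the connection, it can help to confirm the same settings work from plain Java. The sketch below is a hypothetical pre-flight check, not an Informatica or CData tool: it assumes the driver jar in the JDBC Jar Directory is named cdata.jdbc.apachekafka.jar (the file used with java -jar above), loads the class given in Driver Class, connects with a JDBC URL that already carries User and Password, and prints the table names the driver reports through standard JDBC metadata.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;

// Hypothetical pre-flight check for the JDBC_IC settings, run outside Informatica.
class KafkaConnectionCheck {
    // Value of the Driver Class setting.
    static final String DRIVER_CLASS = "cdata.jdbc.apachekafka.ApacheKafkaDriver";
    // Assumption: the jar in the JDBC Jar Directory carries this name.
    static final String JAR_NAME = "cdata.jdbc.apachekafka.jar";

    /** Returns true if the JDBC Jar Directory contains the driver jar. */
    static boolean jarPresent(Path jarDir) {
        return Files.isRegularFile(jarDir.resolve(JAR_NAME));
    }

    /** Loads the driver, connects with a URL that includes User and Password, and prints table names. */
    static void listTables(String url) throws ClassNotFoundException, SQLException {
        Class.forName(DRIVER_CLASS);
        try (Connection conn = DriverManager.getConnection(url);
             ResultSet rs = conn.getMetaData().getTables(null, null, "%", null)) {
            while (rs.next()) {
                System.out.println(rs.getString("TABLE_NAME"));
            }
        }
    }

    public static void main(String[] args) throws Exception {
        if (args.length < 2) {
            System.out.println("usage: KafkaConnectionCheck <jarDir> <jdbcUrl>");
            return;
        }
        Path jarDir = Paths.get(args[0]);
        if (!jarPresent(jarDir)) {
            System.out.println(JAR_NAME + " not found in " + jarDir);
            return;
        }
        // The driver jar must also be on this JVM's classpath for Class.forName to succeed.
        listTables(args[1]);
    }
}
```

For example, after compiling with javac, run java -cp "<jar directory>/cdata.jdbc.apachekafka.jar:." KafkaConnectionCheck "<jar directory>" "<your JDBC URL>" (on Windows, use ; instead of : as the classpath separator).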

Create a Mapping for Kafka Data

With the connection to Kafka configured, we can now access Kafka data in any Informatica process. The steps below walk through creating a mapping from Kafka to another data target.

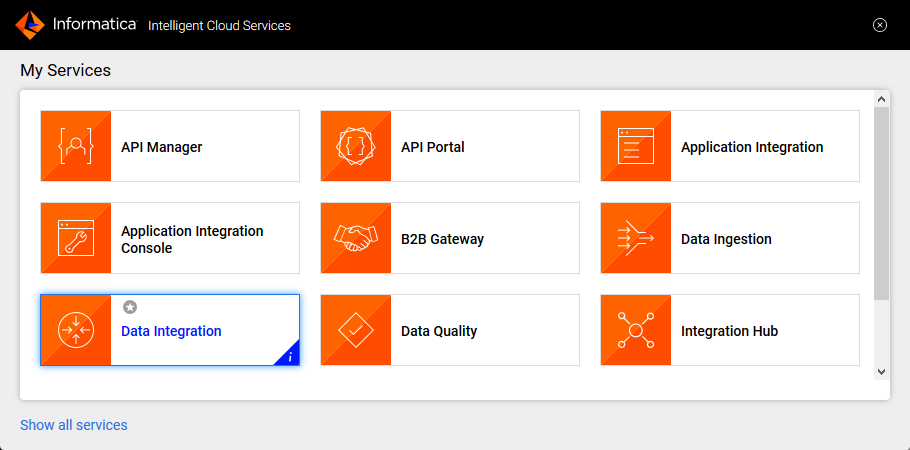

- Navigate to the Data Integration page

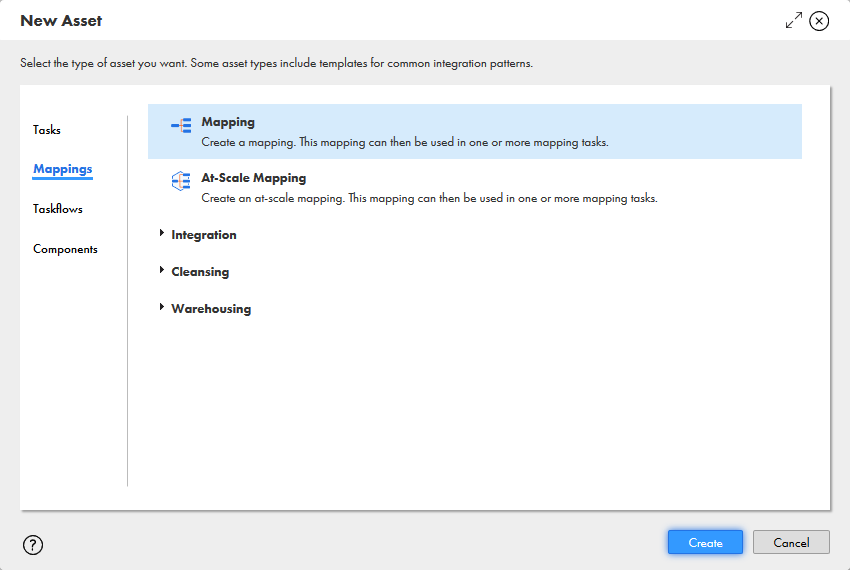

- Click New... and select Mapping from the Mappings tab

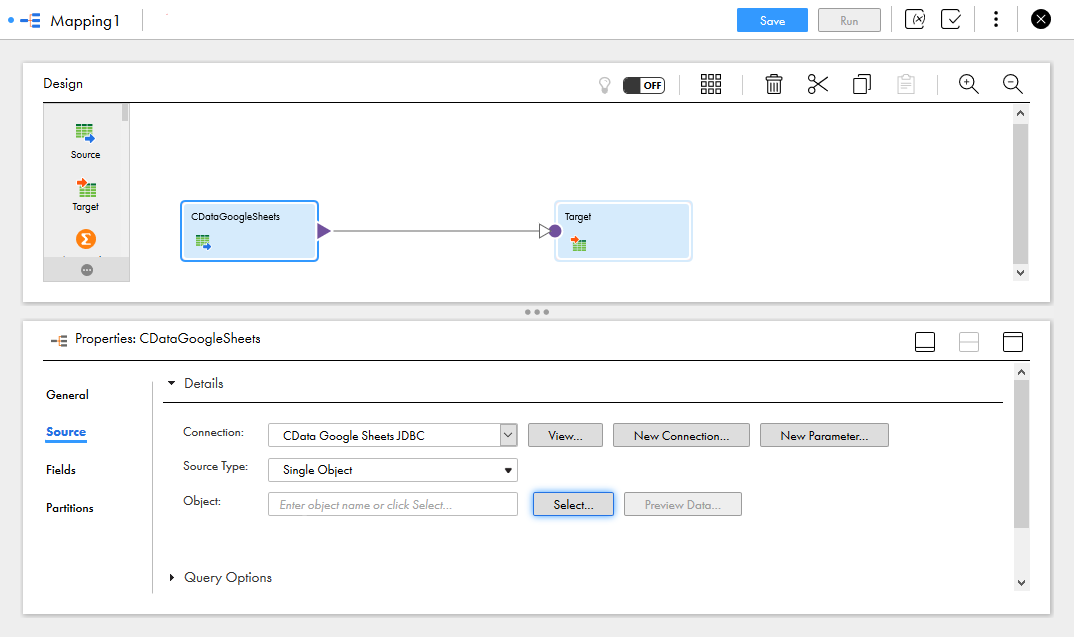

- Click the Source Object and in the Source tab, select the Connection and set the Source Type

![Selecting the Source Connection and Source Type]()

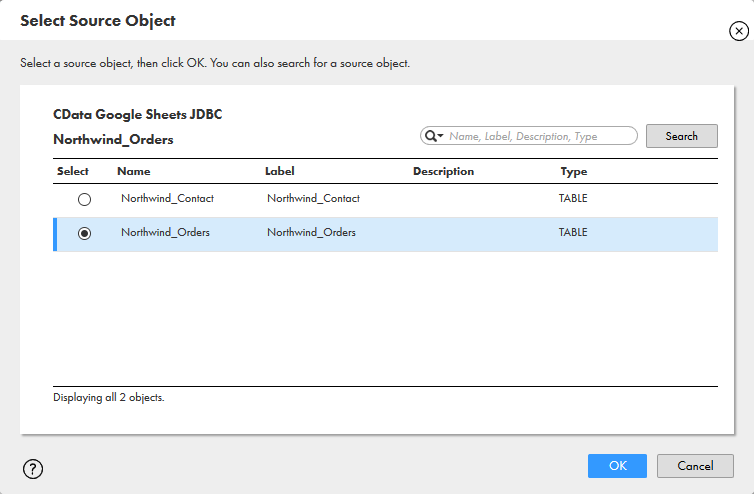

- Click "Select" to choose the table to map

![Selecting the Source Object]()

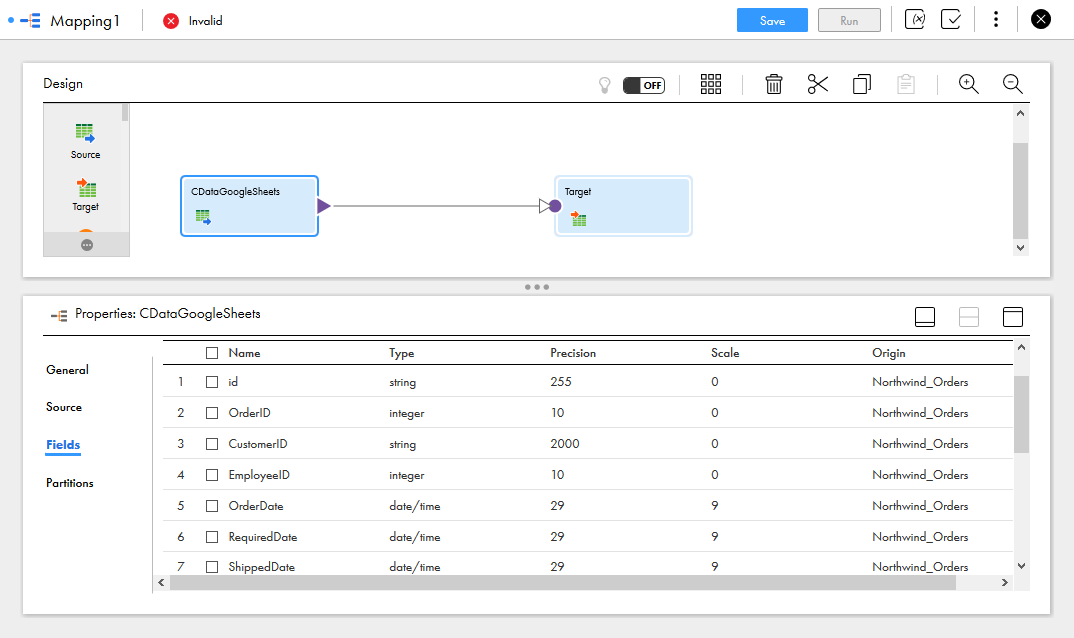

- In the Fields tab, select the fields from the Kafka table to map

![Selecting Source Fields to map]()

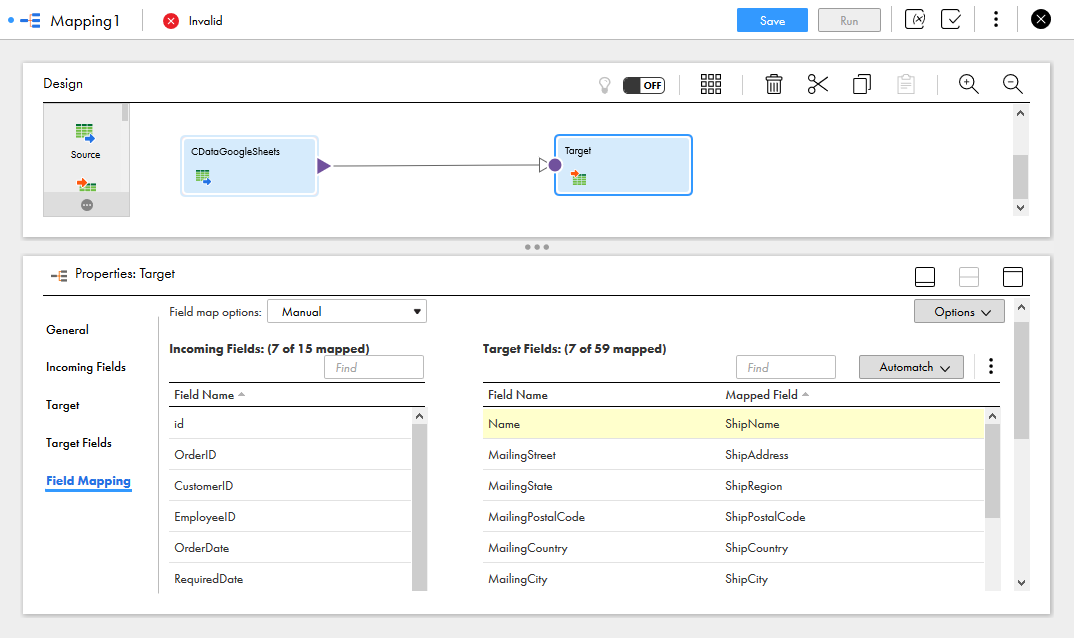

- Click the Target object and configure the Target source, table and fields. In the Field Mapping tab, map the source fields to the target fields.

![Selecting the Target Field Mappings]()
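
Informatica Cloud builds and runs the mapping for you, so no code is needed. Purely to illustrate what the Fields and Field Mapping tabs express, the following hypothetical sketch (not how Informatica executes mappings) treats a mapping as an ordered source-to-target field map and derives a SELECT on the Kafka table and a parameterized INSERT for a JDBC target. It assumes the topic is exposed as a table named MyTopic (the topic from the sample URL); the column names and the orders_copy target table are made up for the example.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration of a field mapping (source field -> target field).
// Not how Informatica executes a mapping; it only shows what the mapping expresses.
class FieldMapping {
    /** SELECT that reads only the mapped source fields, in mapping order. */
    static String selectSql(String sourceTable, Map<String, String> mapping) {
        return "SELECT " + String.join(", ", mapping.keySet()) + " FROM " + sourceTable;
    }

    /** Parameterized INSERT that writes the values to the mapped target fields. */
    static String insertSql(String targetTable, Map<String, String> mapping) {
        String cols = String.join(", ", mapping.values());
        String params = String.join(", ", Collections.nCopies(mapping.size(), "?"));
        return "INSERT INTO " + targetTable + " (" + cols + ") VALUES (" + params + ")";
    }

    public static void main(String[] args) {
        Map<String, String> mapping = new LinkedHashMap<>();
        mapping.put("Id", "id");                      // hypothetical Kafka field -> target column
        mapping.put("CustomerName", "customer_name");
        mapping.put("Amount", "amount");
        // Prints: SELECT Id, CustomerName, Amount FROM MyTopic
        System.out.println(selectSql("MyTopic", mapping));
        // Prints: INSERT INTO orders_copy (id, customer_name, amount) VALUES (?, ?, ?)
        System.out.println(insertSql("orders_copy", mapping));
    }
}
```

Because both statements iterate the same ordered map, each row read by the SELECT lines up, column for column, with the INSERT's parameters.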

With the mapping configured, you are ready to start integrating live Kafka data with any of the supported connections in Informatica Cloud. Download a free, 30-day trial of the CData JDBC Driver for Apache Kafka and start working with your live Kafka data in Informatica Cloud today.