Access Live Kafka Data in TIBCO Data Virtualization

Use the CData TIBCO DV Adapter for Kafka to create a Kafka data source in TIBCO Data Virtualization Studio and gain access to live Kafka data from your TDV Server.

TIBCO Data Virtualization (TDV) is an enterprise data virtualization solution that orchestrates access to multiple and varied data sources. When paired with the CData TIBCO DV Adapter for Kafka, it gives you federated access to live Kafka data directly within TIBCO Data Virtualization. This article walks through deploying the adapter and creating a new data source based on Kafka.

The CData TIBCO DV Adapter includes built-in optimized data processing for working with live Kafka data. When you issue complex SQL queries to Kafka, the adapter pushes supported SQL operations, such as filters and aggregations, directly to Kafka. Its built-in dynamic metadata querying lets you work with and analyze Kafka data using native data types.

Deploy the Kafka TIBCO DV Adapter

In a console, navigate to the bin folder in the TDV Server installation directory. If a version of the adapter is already installed, undeploy it first.

.\server_util.bat -server localhost -user admin -password ******** -undeploy -version 1 -name ApacheKafka

Extract the CData TIBCO DV Adapter to a local folder, then deploy the JAR file (tdv.apachekafka.jar) to the server from the extracted location.

.\server_util.bat -server localhost -user admin -password ******** -deploy -package /PATH/TO/tdv.apachekafka.jar
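If you redeploy the adapter often, you can script the two server_util calls above. The following is a minimal Python sketch. It assumes Python 3.9+ and that server_util.bat sits in the TDV Server bin folder. The helper names (`common_flags`, `undeploy_flags`, `deploy_flags`, `run_server_util`) and the `dry_run` switch are hypothetical. Only the server_util flags shown in the commands above are used.

```python
"""Sketch: script the undeploy/deploy steps with server_util.bat.

Hypothetical helpers; only server_util flags that appear in the manual
commands (-server, -user, -password, -undeploy, -version, -name,
-deploy, -package) are used.
"""
import subprocess
from pathlib import Path


def common_flags(server: str, user: str, password: str) -> list[str]:
    # Connection flags shared by every server_util call in this article.
    return ["-server", server, "-user", user, "-password", password]


def undeploy_flags(server: str, user: str, password: str,
                   name: str = "ApacheKafka", version: int = 1) -> list[str]:
    # Equivalent to: -undeploy -version 1 -name ApacheKafka
    return common_flags(server, user, password) + [
        "-undeploy", "-version", str(version), "-name", name]


def deploy_flags(server: str, user: str, password: str, jar: str) -> list[str]:
    # Equivalent to: -deploy -package /PATH/TO/tdv.apachekafka.jar
    return common_flags(server, user, password) + ["-deploy", "-package", jar]


def run_server_util(bin_dir: str, flags: list[str],
                    dry_run: bool = True) -> list[str]:
    # Build the path to server_util.bat inside the given bin folder.
    cmd = [str(Path(bin_dir) / "server_util.bat"), *flags]
    if not dry_run:
        # Raises CalledProcessError if server_util exits with a non-zero code.
        subprocess.run(cmd, check=True)
    return cmd
```

By default, `run_server_util(bin_dir, undeploy_flags("localhost", "admin", pw))` only returns the command list. Pass `dry_run=False` to execute it, and undeploy before deploying, as in the manual steps. Reading the password from an environment variable keeps it out of the script itself. As with the manual commands, though, the password is still passed on the command line.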

You may need to restart the server so that the new JAR file is loaded. To do so, run the composite.bat script located in C:\Program Files\TIBCO\TDV Server <version>\bin. After the server restarts, you must sign in to TDV Studio again.

Sample Restart Call

.\composite.bat monitor restart

Once you deploy the adapter, you can create a new data source for Kafka in TDV Studio.

Create a Kafka Data Source in TDV Studio

With the CData TIBCO DV Adapter for Kafka, you can easily create a data source for Kafka and introspect the data source to add resources to TDV.

Create the Data Source

- Right-click the folder you wish to add the data source to and select New -> New Data Source.

- Scroll until you find the adapter (e.g. Kafka) and click Next.

- Name the data source (e.g. CData Kafka Source).

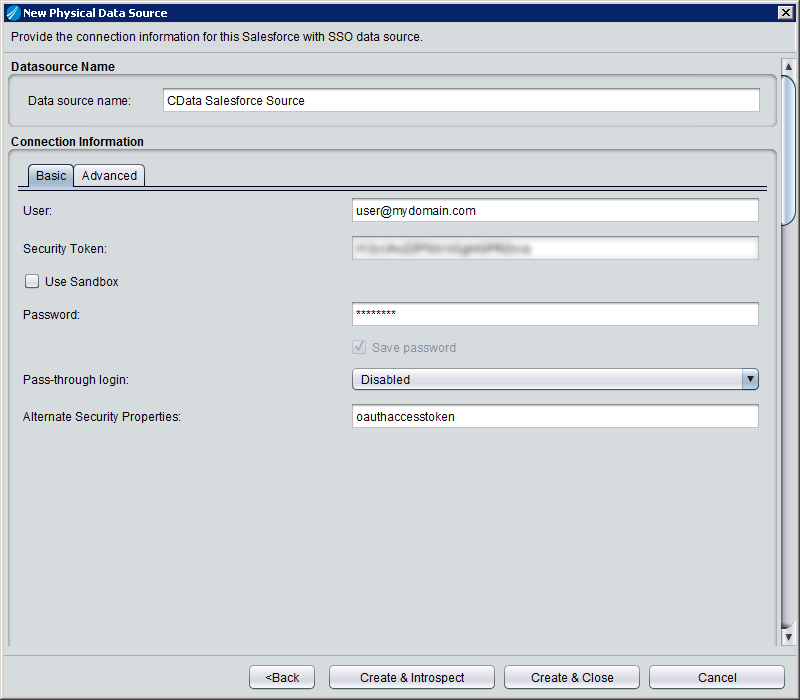

- Fill in the required connection properties.

Set the BootstrapServers and Topic properties to the address of your Apache Kafka server and the topic you want to interact with.

Authorization Mechanisms

- SASL Plain: The User and Password properties should be specified. AuthScheme should be set to 'Plain'.

- SASL SSL: The User and Password properties should be specified. AuthScheme should be set to 'Scram'. UseSSL should be set to true.

- SSL: The SSLCert and SSLCertPassword properties should be specified. UseSSL should be set to true.

- Kerberos: The User and Password properties should be specified. AuthScheme should be set to 'Kerberos'.

If you need to trust the server certificate, specify the TrustStorePath and, if necessary, the TrustStorePassword.


- Click Create & Close.

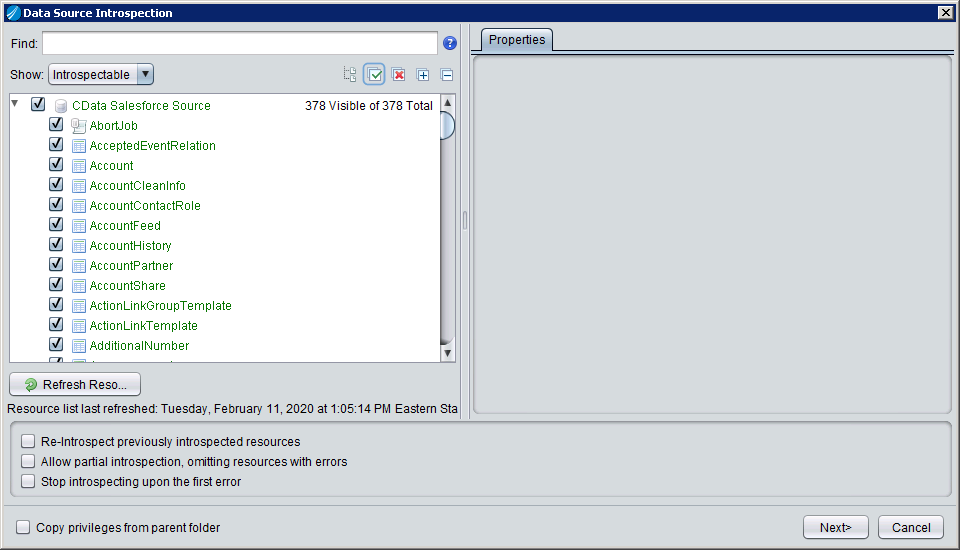
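You can capture the property rules listed under Authorization Mechanisms in a small lookup table. This is useful, for example, for sanity-checking a set of values before entering them in the Studio dialog. This is a minimal Python sketch. `BASE`, `RULES`, and `check_kafka_props` are hypothetical names, and the mechanism labels are taken from the list above. The optional TrustStorePath and TrustStorePassword properties are deliberately not checked.

```python
# Hypothetical checker for the Kafka connection-property rules above.
BASE = {"BootstrapServers", "Topic"}  # required for every mechanism

# mechanism -> (properties that must be non-empty, properties with fixed values)
RULES: dict[str, tuple[set[str], dict[str, str]]] = {
    "SASL Plain": ({"User", "Password"}, {"AuthScheme": "Plain"}),
    "SASL SSL": ({"User", "Password"}, {"AuthScheme": "Scram", "UseSSL": "true"}),
    "SSL": ({"SSLCert", "SSLCertPassword"}, {"UseSSL": "true"}),
    "Kerberos": ({"User", "Password"}, {"AuthScheme": "Kerberos"}),
}


def check_kafka_props(mechanism: str, props: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the rules are met."""
    if mechanism not in RULES:
        raise ValueError(f"unknown mechanism: {mechanism!r}")
    required, fixed = RULES[mechanism]
    problems = []
    # Every required or fixed-value property must be present and non-empty.
    for name in sorted(BASE | required | set(fixed)):
        if not props.get(name):
            problems.append(f"missing {name}")
    # Fixed-value properties are compared case-insensitively ("True" == "true").
    for name, expected in fixed.items():
        value = props.get(name)
        if value and value.lower() != expected.lower():
            problems.append(f"{name} should be {expected!r}, got {value!r}")
    return problems
```

For example, a SASL Plain configuration with BootstrapServers, Topic, User, Password, and AuthScheme=Plain yields an empty list. Checking the same values against "SASL SSL" reports the missing UseSSL setting and the wrong AuthScheme.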

Introspect the Data Source

Once the data source is created, you can introspect it: right-click the data source and select Open. In the dashboard, click Add/Remove Resources and select the Tables, Views, and Stored Procedures to include as part of the data source. Click Next, then Finish, to add the selected Kafka tables, views, and stored procedures as resources.

After creating and introspecting the data source, you are ready to work with Kafka data in TIBCO Data Virtualization just like you would any other relational data source. You can create views, query using SQL, publish the data source, and more.