Create a Data Access Object for Spark Data using JDBI

A brief overview of creating a SQL Object API for Spark data in JDBI.

JDBI is a SQL convenience library for Java that exposes two styles of API: a fluent style and a SQL Object style. The CData JDBC Driver for Spark provides connectivity to live Spark data from Java applications. Pairing these technologies gives you simple, programmatic access to Spark data. This article walks through building a basic Data Access Object (DAO) and the accompanying code to read and write Spark data.

Create a DAO for the Spark Customers Entity

The interface below declares the behavior of the SQL Object: one method for each SQL statement to be implemented.

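```java
// JDBI 2 (org.skife.jdbi.v2) annotations, matching the DBI class used to open the DAO
import org.skife.jdbi.v2.sqlobject.Bind;
import org.skife.jdbi.v2.sqlobject.SqlQuery;
import org.skife.jdbi.v2.sqlobject.SqlUpdate;

public interface MyCustomersDAO {

    // insert new data into Spark
    @SqlUpdate("INSERT INTO Customers (Country, Balance) VALUES (:country, :balance)")
    void insert(@Bind("country") String country, @Bind("balance") String balance);

    // request specific data from Spark (String type is used for simplicity)
    @SqlQuery("SELECT Balance FROM Customers WHERE Country = :country")
    String findBalanceByCountry(@Bind("country") String country);

    // a no-argument close() closes the underlying handle and its JDBC connection
    void close();
}
```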
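The SQL strings above use named parameters such as :country, and each @Bind annotation supplies the value for one name. Plain JDBC only understands positional ? placeholders, so a named statement has to be rewritten before it reaches a PreparedStatement; JDBI handles this translation internally. The sketch below only illustrates the idea: it is not JDBI's parser, the class name NamedParams is hypothetical, and the regular expression is a simplification that does not skip string literals or comments.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: rewrites ":name" placeholders into JDBC "?" markers
// and records the parameter names in the order they appear.
final class NamedParams {
    // Matches ":identifier"; simplified, so a colon inside a string literal would also match
    private static final Pattern NAMED = Pattern.compile(":([A-Za-z_][A-Za-z0-9_]*)");

    final String sql;          // SQL with positional "?" placeholders
    final List<String> names;  // parameter names, in positional order

    private NamedParams(String sql, List<String> names) {
        this.sql = sql;
        this.names = names;
    }

    static NamedParams parse(String namedSql) {
        Matcher m = NAMED.matcher(namedSql);
        StringBuffer out = new StringBuffer();
        List<String> names = new ArrayList<>();
        while (m.find()) {
            names.add(m.group(1));          // remember the name for this position
            m.appendReplacement(out, "?");  // replace ":name" with "?"
        }
        m.appendTail(out);
        return new NamedParams(out.toString(), names);
    }
}
```

Each entry in names corresponds to one positional index, so the value bound to names.get(i) would be passed to PreparedStatement.setString(i + 1, value).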

Open a Connection to Spark

Collect the necessary connection properties and construct the appropriate JDBC URL for connecting to Spark.

Set the Server, Database, User, and Password connection properties to connect to SparkSQL.

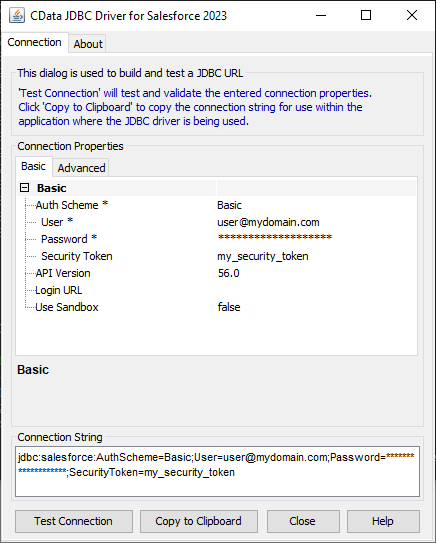

Built-in Connection String Designer

For help constructing the JDBC URL, use the connection string designer built into the Spark JDBC Driver. Either double-click the JAR file or run it from the command line:

```
java -jar cdata.jdbc.sparksql.jar
```

Fill in the connection properties and copy the connection string to the clipboard.

A connection string for Spark will typically look like the following:

```
jdbc:sparksql:Server=127.0.0.1;
```
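The example URL consists of the jdbc:sparksql: prefix followed by Name=Value; pairs. Assuming the other properties mentioned above (Server, Database, User, Password) follow the same pattern, a small helper can assemble the URL from a map of properties. SparkUrl is a hypothetical class, the sketch does not escape values containing ; or =, and the built-in connection string designer remains the reliable way to produce a valid URL.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: joins connection properties into a CData-style JDBC URL.
// Assumes the "jdbc:sparksql:" prefix and "Name=Value;" pairs seen in the example URL;
// values containing ';' or '=' are NOT escaped.
final class SparkUrl {
    static String build(Map<String, String> props) {
        StringBuilder url = new StringBuilder("jdbc:sparksql:");
        for (Map.Entry<String, String> e : props.entrySet()) {
            url.append(e.getKey()).append('=').append(e.getValue()).append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the properties in insertion order
        Map<String, String> props = new LinkedHashMap<>();
        props.put("Server", "127.0.0.1");
        System.out.println(build(props)); // prints jdbc:sparksql:Server=127.0.0.1;
    }
}
```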

Use the configured JDBC URL to obtain an instance of the DAO interface. The open method shown below opens a handle bound to the returned instance, so you must close the instance explicitly to release the handle and its JDBC connection.

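```java
import org.skife.jdbi.v2.DBI;

// create a DBI instance from the Spark JDBC URL
DBI dbi = new DBI("jdbc:sparksql:Server=127.0.0.1;");

// open(Class) returns a DAO bound to a newly opened handle
MyCustomersDAO dao = dbi.open(MyCustomersDAO.class);
try {
    // do stuff with the DAO
} finally {
    // release the handle and its JDBC connection, even if an exception was thrown
    dao.close();
}
```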
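Since the DAO keeps its handle open until close() is called, an exception thrown between opening and closing would otherwise leak the connection. The sketch below demonstrates the guaranteed-release pattern with try-with-resources on a hypothetical stand-in, FakeDao, that implements AutoCloseable and records whether it was closed, so it runs without Spark or JDBI. MyCustomersDAO as declared above does not extend AutoCloseable, so with that interface you would call dao.close() in a finally block instead.

```java
// Hypothetical stand-in for a DAO that owns a connection; needs neither Spark nor JDBI.
final class FakeDao implements AutoCloseable {
    boolean closed = false;

    String findBalanceByCountry(String country) {
        if (closed) {
            throw new IllegalStateException("DAO already closed");
        }
        if (country.isEmpty()) {
            throw new IllegalArgumentException("country must not be empty");
        }
        return "0"; // placeholder result
    }

    @Override
    public void close() {
        closed = true; // a real DAO would release its handle and JDBC connection here
    }

    // Runs one lookup; try-with-resources calls close() whether or not the lookup throws.
    static boolean lookupThenCheckClosed(FakeDao dao, String country) {
        try (FakeDao d = dao) {
            d.findBalanceByCountry(country);
        } catch (IllegalArgumentException e) {
            // this handler runs after the resource has already been closed
        }
        return dao.closed;
    }
}
```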

Read Spark Data

With the connection to Spark open, call the method defined earlier to retrieve data from the Customers entity.

```java
// display the result of the 'find' method
String balance = dao.findBalanceByCountry("US");
System.out.println(balance);
```

Write Spark Data

Writing data to Spark is just as simple: call the insert method defined earlier.

```java
// add a new entry to the Customers entity (example values)
String newCountry = "US";
String newBalance = "1000";
dao.insert(newCountry, newBalance);
```
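Because the read and write operations are ordinary interface methods, code that calls them can be tested without a Spark server by substituting an in-memory fake. The sketch assumes a hypothetical plain interface, CustomersDao, that mirrors the two data methods of MyCustomersDAO without JDBI annotations; InMemoryCustomersDao is likewise hypothetical and stores rows in a list instead of issuing SQL.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical plain interface mirroring MyCustomersDAO's data methods (no JDBI annotations).
interface CustomersDao {
    void insert(String country, String balance);
    String findBalanceByCountry(String country);
}

// Hypothetical in-memory fake: keeps rows in a list instead of sending SQL to Spark.
final class InMemoryCustomersDao implements CustomersDao {
    private final List<String[]> rows = new ArrayList<>(); // each row: {country, balance}

    @Override
    public void insert(String country, String balance) {
        rows.add(new String[] {country, balance});
    }

    // Returns the balance of the first stored row whose country matches, or null if none does.
    @Override
    public String findBalanceByCountry(String country) {
        for (String[] row : rows) {
            if (row[0].equals(country)) {
                return row[1];
            }
        }
        return null;
    }
}
```

If Customers can hold several rows per country, a real query may match more than one; the fake simply returns the first row it stored.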

Because JDBI works with any JDBC connection, you can produce a SQL Object API for Spark by pairing it with the CData JDBC Driver for Spark. Download a free trial and work with live Spark data in custom Java applications today.