How to work with Adobe Analytics Data in Apache Spark using SQL

Access and process Adobe Analytics Data in Apache Spark using the CData JDBC Driver.

Apache Spark is a fast and general engine for large-scale data processing. When paired with the CData JDBC Driver for Adobe Analytics, Spark can work with live Adobe Analytics data. This article describes how to connect to and query Adobe Analytics data from a Spark shell.

The CData JDBC Driver is built for fast access to live Adobe Analytics data through optimized data processing in the driver. When you issue complex SQL queries to Adobe Analytics, the driver pushes supported SQL operations, such as filters and aggregations, directly to Adobe Analytics and uses its embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. With built-in dynamic metadata querying, you can work with and analyze Adobe Analytics data using native data types.
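To make the split between pushed-down and client-side work more concrete, here is a toy Scala model. It is not the driver's actual planner: the operation types, and the assumption that only filters and aggregations can run remotely, are illustrative. It treats a query as an ordered pipeline of operations and sends the longest leading run of supported operations to the source.

```scala
// Toy model only: this is NOT the CData driver's real query planner.
object PushdownSketch {
  sealed trait Op
  final case class Filter(column: String, value: String) extends Op
  final case class Aggregate(function: String, column: String) extends Op
  final case class Join(otherTable: String) extends Op
  final case class ScalarFunction(name: String) extends Op

  // Assumption for illustration: the remote API can evaluate filters and
  // aggregations, but not joins or scalar SQL functions.
  def supportedRemotely(op: Op): Boolean = op match {
    case _: Filter | _: Aggregate => true
    case _                        => false
  }

  // Operations are applied in order. The leading run of supported operations
  // is sent to the source; once one operation must run locally, every later
  // operation also runs locally on the rows the source returned.
  def plan(ops: List[Op]): (List[Op], List[Op]) = ops.span(supportedRemotely)
}
```

For example, a filter on City followed by a SUM over PageViews and then an UPPER call would send the first two operations to the source and evaluate UPPER client-side. Using `span` rather than `partition` keeps the model honest about ordering: an aggregation that comes after a client-side JOIN cannot be pushed down, because its input only exists locally.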

Install the CData JDBC Driver for Adobe Analytics

Download the CData JDBC Driver for Adobe Analytics installer, unzip the package, and run the JAR file to install the driver.

Start a Spark Shell and Connect to Adobe Analytics Data

- Open a terminal and start the Spark shell, passing the CData JDBC Driver for Adobe Analytics JAR file to the --jars option. Quote the path, because it contains spaces:

$ spark-shell --jars "/CData/CData JDBC Driver for Adobe Analytics/lib/cdata.jdbc.adobeanalytics.jar"

- With the shell running, you can connect to Adobe Analytics with a JDBC URL and use the DataFrameReader load() function to read a table.

Adobe Analytics uses the OAuth authentication standard. To authenticate using OAuth, you will need to create an app to obtain the OAuthClientId, OAuthClientSecret, and CallbackURL connection properties. See the "Getting Started" section of the help documentation for a guide.

Retrieving GlobalCompanyId

GlobalCompanyId is a required connection property. If you do not know your Global Company ID, you can find it in the request URL for the users/me endpoint in the Swagger UI. After logging in to the Swagger UI, expand the users endpoint and click the GET users/me button. Click Try it out, then Execute. Your Global Company ID is the path segment in the Request URL that immediately precedes users/me.
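If you copy the Request URL rather than reading it off the screen, a small Scala helper can pull out the segment before users/me. This is a minimal sketch that assumes the Request URL has the shape https://<host>/<...>/<GlobalCompanyId>/users/me; the object and function names are hypothetical.

```scala
import java.net.URI

object GlobalCompanyIdSketch {
  // Returns the path segment immediately preceding "users/me", or None if the
  // URL does not contain users/me with a segment in front of it.
  def fromRequestUrl(requestUrl: String): Option[String] = {
    // getPath drops the scheme, host, and any query string.
    val segments = new URI(requestUrl).getPath.split("/").filter(_.nonEmpty).toList
    val idx = segments.indexOfSlice(List("users", "me"))
    if (idx > 0) Some(segments(idx - 1)) else None
  }
}
```

For a URL ending in /api/examplecompany/users/me, the helper returns Some("examplecompany"); the value "examplecompany" is a placeholder, not a real ID.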

Retrieving Report Suite Id

Report Suite ID (RSID) is also a required connection property. In the Adobe Analytics UI, navigate to Admin -> Report Suites to see a list of your report suites, with each suite's identifier shown next to its name.

After setting the GlobalCompanyId, RSID and OAuth connection properties, you are ready to connect to Adobe Analytics.

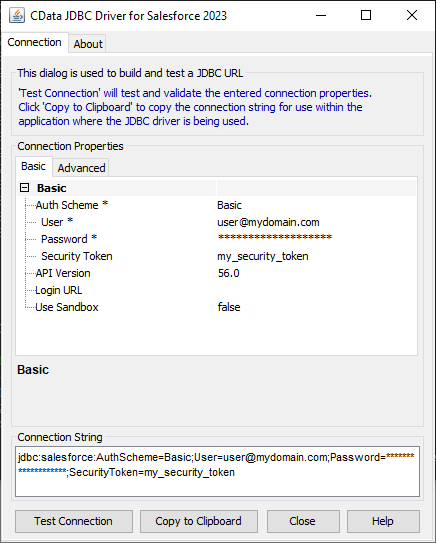

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Adobe Analytics JDBC Driver. Either double-click the JAR file or run it from the command line:

java -jar cdata.jdbc.adobeanalytics.jar

Fill in the connection properties and copy the connection string to the clipboard.

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()
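As an alternative to the designer, the URL can be assembled in code from a list of properties. The following is a minimal Scala sketch, not part of the CData driver: the object name is hypothetical, it checks only for the properties this article lists as required, and it assumes no value contains a semicolon (the driver's quoting rules for such values are not covered here).

```scala
object JdbcUrlSketch {
  // Connection properties this article lists as required.
  val Required: Seq[String] =
    Seq("GlobalCompanyId", "RSID", "OAuthClientId", "OAuthClientSecret", "CallbackURL")

  // Builds "jdbc:adobeanalytics:Key1=Value1;Key2=Value2;..." in input order.
  // Property names are compared case-insensitively when checking for required ones.
  def build(props: Seq[(String, String)]): Either[String, String] = {
    val present = props.map { case (k, _) => k.toLowerCase }.toSet
    val missing = Required.filterNot(r => present.contains(r.toLowerCase))
    // Assumption: values containing ';' would need quoting, which this sketch rejects.
    val withSemicolon = props.collect { case (k, v) if v.contains(";") => k }
    if (missing.nonEmpty) Left(s"Missing: ${missing.mkString(", ")}")
    else if (withSemicolon.nonEmpty) Left(s"Unsupported ';' in: ${withSemicolon.mkString(", ")}")
    else Right(props.map { case (k, v) => s"$k=$v;" }.mkString("jdbc:adobeanalytics:", "", ""))
  }
}
```

Returning an Either lets a missing property surface as a readable message before Spark ever attempts a connection.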

Configure the connection to Adobe Analytics using the connection string generated above:

scala> val adobeanalytics_df = spark.read.format("jdbc").option("url", "jdbc:adobeanalytics:GlobalCompanyId=myGlobalCompanyId;RSID=myRSID;OAuthClientId=myOAuthClientId;OAuthClientSecret=myOAuthClientSecret;CallbackURL=myCallbackURL;").option("dbtable", "AdsReport").option("driver", "cdata.jdbc.adobeanalytics.AdobeAnalyticsDriver").load()

- Once you connect and the data is loaded, you will see the table schema displayed.

Register the Adobe Analytics data as a temporary view:

scala> adobeanalytics_df.createOrReplaceTempView("adsreport")

Perform custom SQL queries against the data using commands like the one below. Note that string values in the WHERE clause must be quoted:

scala> spark.sql("SELECT Page, PageViews FROM adsreport WHERE City = 'Chapel Hill'").collect.foreach(println)

You will see the results displayed in the console, similar to the following:

![Data in Apache Spark (Salesforce is shown)]()
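When the filter value comes from user input, it has to be turned into a valid Spark SQL string literal. The following is a minimal sketch, assuming Spark SQL's default string-literal parsing (spark.sql.parser.escapedStringLiterals left at false), where a backslash escapes the next character. The object and function names are hypothetical.

```scala
object SparkSqlLiteralSketch {
  // Wraps a value in single quotes, escaping backslashes first and then
  // single quotes with a backslash.
  def quote(value: String): String =
    "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"

  // Hypothetical helper that builds this article's query for a given city.
  def pageViewsByCity(city: String): String =
    s"SELECT Page, PageViews FROM adsreport WHERE City = ${quote(city)}"
}
```

For example, SparkSqlLiteralSketch.pageViewsByCity("Chapel Hill") yields the same query string used in this article. If you would rather avoid building SQL text at all, the DataFrame API expresses the same filter without any quoting: adobeanalytics_df.filter(col("City") === "Chapel Hill"), with import org.apache.spark.sql.functions.col.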

Using the CData JDBC Driver for Adobe Analytics in Apache Spark, you can perform fast and complex analytics on Adobe Analytics data, combining the power and utility of Spark with your data. Download a free 30-day trial of any of the 200+ CData JDBC Drivers and get started today.