How to connect PolyBase to Databricks

Use CData drivers and PolyBase to create an external data source in SQL Server 2019 with access to live Databricks data.

PolyBase for SQL Server allows you to query external data by using the same Transact-SQL syntax used to query a database table. When paired with the CData ODBC Driver for Databricks, you get access to your Databricks data directly alongside your SQL Server data. This article describes creating an external data source and external tables to grant access to live Databricks data using T-SQL queries.

NOTE: PolyBase is only available on SQL Server 2019 and above, and only for Standard SQL Server.
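Before continuing, it can help to confirm that PolyBase is installed and enabled on the instance. The following is a minimal sketch using the built-in `SERVERPROPERTY` function and the `polybase enabled` server configuration option; it assumes you have permission to change server-level configuration.

```sql
-- Returns 1 if the PolyBase feature is installed on this instance, 0 otherwise.
SELECT SERVERPROPERTY('IsPolyBaseInstalled') AS IsPolyBaseInstalled;

-- Enable PolyBase if it is installed but not yet enabled.
EXEC sp_configure @configname = 'polybase enabled', @configvalue = 1;
RECONFIGURE;
```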

The CData ODBC drivers offer unmatched performance for interacting with live Databricks data using PolyBase due to optimized data processing built into the driver. When you issue complex SQL queries from SQL Server to Databricks, the driver pushes down supported SQL operations, like filters and aggregations, directly to Databricks and utilizes the embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. And with PolyBase, you can also join SQL Server data with Databricks data, using a single query to pull data from distributed sources.

Connect to Databricks

If you have not already, first specify connection properties in an ODBC DSN (data source name). This is the last step of the driver installation. You can use the Microsoft ODBC Data Source Administrator to create and configure ODBC DSNs. To create an external data source in SQL Server using PolyBase, configure a System DSN (CData Databricks Sys is created automatically).

To connect to a Databricks cluster, set the properties as described below.

Note: You can find the required values in your Databricks instance by navigating to Clusters, selecting the desired cluster, and opening the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).

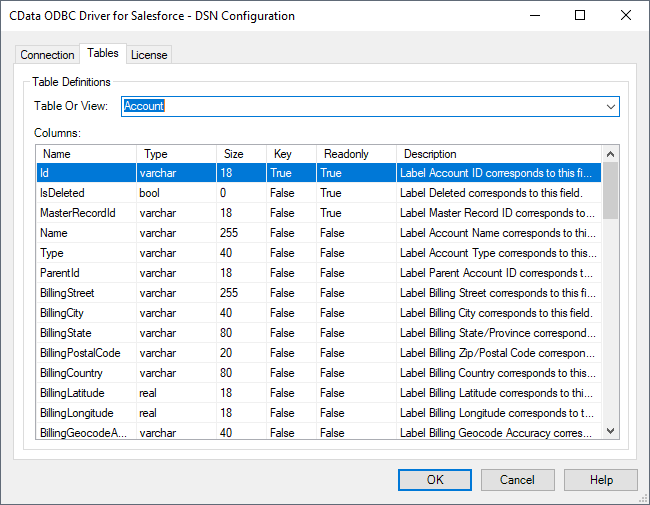

Click "Test Connection" to ensure that the DSN is connected to Databricks properly. Navigate to the Tables tab to review the table definitions for Databricks.

Create an External Data Source for Databricks Data

After configuring the connection, you need to create a master encryption key and a database scoped credential for the external data source.

Creating a Master Encryption Key

Execute the following SQL command to create a database master key, which is used to encrypt the credentials for the external data source. Replace 'password' with a strong password of your own.

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'password';
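A database can have only one master key, so the statement above fails if a key already exists. As a sketch, the creation can be guarded with a check against the `sys.symmetric_keys` catalog view, where the database master key appears under the fixed name `##MS_DatabaseMasterKey##`. The password shown is a placeholder.

```sql
-- Create the database master key only if the current database does not have one yet.
IF NOT EXISTS (
    SELECT 1
    FROM sys.symmetric_keys
    WHERE name = '##MS_DatabaseMasterKey##'
)
BEGIN
    -- 'password' is a placeholder; substitute a strong password.
    CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'password';
END;
```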

Creating a Database Scoped Credential

Execute the following SQL command to create credentials for the external data source connected to Databricks data.

NOTE: IDENTITY and SECRET correspond to the User and Password properties for Databricks.

CREATE DATABASE SCOPED CREDENTIAL databricks_creds WITH IDENTITY = 'databricks_username', SECRET = 'databricks_password';
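To confirm that the credential was created, you can query the `sys.database_scoped_credentials` catalog view. This is an optional check and assumes the credential name `databricks_creds` used above; the secret itself is never exposed by this view.

```sql
-- List the credential created above; only the identity is visible, not the secret.
SELECT name, credential_identity, create_date
FROM sys.database_scoped_credentials
WHERE name = 'databricks_creds';
```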

Create an External Data Source for Databricks

Execute a CREATE EXTERNAL DATA SOURCE SQL command to create an external data source for Databricks with PolyBase:

- Set the LOCATION parameter, using the DSN and credentials configured earlier.

NOTE: SERVERNAME and PORT correspond to the Server and Port connection properties for Databricks. PUSHDOWN is set to ON by default, meaning the ODBC Driver can leverage server-side processing for complex queries.

CREATE EXTERNAL DATA SOURCE cdata_databricks_source
WITH (
    LOCATION = 'odbc://SERVERNAME[:PORT]',
    CONNECTION_OPTIONS = 'DSN=CData Databricks Sys',
    -- PUSHDOWN = ON | OFF,
    CREDENTIAL = databricks_creds
);
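As an optional check, the `sys.external_data_sources` catalog view shows whether the data source was registered and which location it points to. The sketch below assumes the name `cdata_databricks_source` from the statement above.

```sql
-- Confirm that the external data source exists and inspect its location.
SELECT name, location, type_desc
FROM sys.external_data_sources
WHERE name = 'cdata_databricks_source';
```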

Create External Tables for Databricks

After creating the external data source, use CREATE EXTERNAL TABLE statements to link to Databricks data from your SQL Server instance. The table column definitions must match those exposed by the CData ODBC Driver for Databricks. You can refer to the Tables tab of the DSN Configuration Wizard to see the table definition.

Sample CREATE EXTERNAL TABLE Statement

The statement to create an external table based on a Databricks Customers table would look similar to the following:

CREATE EXTERNAL TABLE Customers(
    City [nvarchar](255) NULL,
    CompanyName [nvarchar](255) NULL,
    ...
) WITH (
    LOCATION='Customers',
    DATA_SOURCE=cdata_databricks_source
);
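Once the external table exists, it can be queried like any local table. The queries below are an illustrative sketch rather than part of the required setup. The `City` and `CompanyName` columns come from the sample definition above, while the local table `dbo.Orders`, its `CompanyName` and `OrderTotal` columns, and the filter value are hypothetical. The last query uses the `FORCE EXTERNALPUSHDOWN` query hint to ask SQL Server to push computation to the external source for that statement.

```sql
-- Query live Databricks data through the external table.
SELECT TOP (10) City, CompanyName
FROM Customers;

-- Join remote Databricks data with a local SQL Server table.
-- dbo.Orders, o.CompanyName, and o.OrderTotal are hypothetical names.
SELECT c.CompanyName, c.City, SUM(o.OrderTotal) AS TotalSales
FROM Customers AS c
JOIN dbo.Orders AS o
    ON o.CompanyName = c.CompanyName
GROUP BY c.CompanyName, c.City;

-- Request pushdown of the filter and aggregate for this query.
-- N'Seattle' is an illustrative value.
SELECT COUNT(*) AS CustomerCount
FROM Customers
WHERE City = N'Seattle'
OPTION (FORCE EXTERNALPUSHDOWN);
```

To compare behavior with pushdown turned off for a single statement, the `DISABLE EXTERNALPUSHDOWN` hint can be used instead.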

Having created external tables for Databricks in your SQL Server instance, you are now able to query local and remote data simultaneously. Thanks to built-in query processing in the CData ODBC Driver, you know that as much query processing as possible is being pushed to Databricks, freeing up local resources and computing power. Download a free, 30-day trial of the ODBC Driver for Databricks and start working with live Databricks data alongside your SQL Server data today.