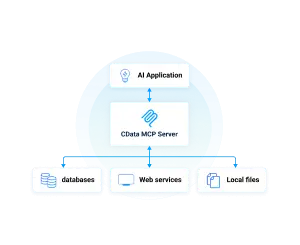

Large language models (LLMs) are only as useful as the data they can access. While the Model Context Protocol (MCP) provides a standard for linking LLMs to external systems, the utility of that connection depends entirely on the quality and architecture of the server implementation. For enterprise teams seeking scalable, secure, and high-performance data access through AI, CData MCP Servers provide a powerful solution rooted in our decade-long investment in enterprise-grade data connectivity.

This post offers a technical look at how CData MCP Servers are constructed, how they expose enterprise data to AI tools, and why their architecture matters for secure and efficient AI deployments.

Architecture: Native integration with CData Drivers

CData MCP Servers are local services—Windows executables that wrap a CData driver and expose an MCP-compliant interface designed for use with Claude Desktop (compatibility with other AI clients is in the works). Each server leverages the driver’s existing SQL engine and enterprise capabilities for session management, query processing, and API integration.

Tool exposure and alignment

CData MCP Servers implement a core set of MCP tools modeled on standard database operations (metadata discovery, querying, and procedure execution), including:

get_tables

get_columns

get_primarykeys

get_procedures

get_procedure_params

run_query

run_procedure

These tools are uniform across all supported sources, and no custom extensions are required. Because of this consistency, AI clients can discover and interact with entities using the same logic everywhere, without custom engineering to handle special cases on a per-source basis.

The tool interface is backed directly by the driver’s capabilities. For example, when an AI issues a get_procedure_params call, the MCP Server uses the driver's metadata discovery engine to return the actual parameters required by the procedure to perform a specific action, dynamically scoped to the connected system.
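To make the tool interface concrete, here is a minimal sketch of the JSON-RPC 2.0 message an MCP client sends to invoke a tool. The `tools/call` method and the `name`/`arguments` fields follow the MCP specification. The argument key `procedure` and the procedure name `SendMail` are hypothetical placeholders, since this post does not list the tools' input schemas.

```python
import json
from itertools import count

_request_ids = count(1)  # each JSON-RPC request in a session needs its own id


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical argument key and procedure name, for illustration only.
request = build_tool_call("get_procedure_params", {"procedure": "SendMail"})
print(json.dumps(request, indent=2))
```

Because every source exposes the same tool names, a client could reuse a helper like this unchanged across connections; only the argument values would differ.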

Dynamic schema discovery and metadata exposure

Metadata is retrieved per connection, reflecting the active user’s permissions and the current state of the source system. In most cases, schema information is fetched in real time, giving the LLM access to custom fields, dynamic reports, virtual views, and other runtime-defined objects.

To improve performance, some MCP Servers use cached metadata or schema files to reduce repeated lookups. This balance between live introspection and cached discovery ensures a responsive AI experience while minimizing API overhead.
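The trade-off between live introspection and cached discovery can be sketched with a small time-to-live (TTL) cache. This is an illustrative pattern, not CData's implementation: the `MetadataCache` class, the `fake_fetch` function, and the 60-second TTL are all assumptions made for the example.

```python
import time
from typing import Callable, Dict, List, Tuple


class MetadataCache:
    """Cache column lookups per table for a fixed time-to-live (TTL)."""

    def __init__(self, fetch: Callable[[str], List[str]], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, List[str]]] = {}

    def get_columns(self, table: str) -> List[str]:
        now = self._clock()
        cached = self._entries.get(table)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]          # still fresh: skip the live lookup
        columns = self._fetch(table)  # live introspection against the source
        self._entries[table] = (now, columns)
        return columns


# Stand-in for a live metadata call; records how often the source is hit.
calls: List[str] = []


def fake_fetch(table: str) -> List[str]:
    calls.append(table)
    return ["Id", "Name"]


cache = MetadataCache(fake_fetch, ttl_seconds=60.0)
cache.get_columns("Account")
cache.get_columns("Account")  # served from the cache
print(len(calls))
```

Since metadata reflects the active user's permissions, a cache like this would need to be kept per connection rather than shared across users.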

Data and action access through SQL and procedures

CData MCP Servers expose enterprise data as a tabular model, regardless of the source’s underlying data structure. Business systems (like CRMs or ERPs) are translated into a consistent SQL interface that LLMs can natively understand and query.

Read operations are performed via standard SELECT statements. Write operations—such as inserting a lead, updating a status, or deleting a record—use INSERT, UPDATE, and DELETE semantics.

For non-query-based interactions, such as sending emails or triggering workflows, each MCP Server exposes stored procedures. These procedures are discovered via the get_procedures tool and executed with run_procedure.

Procedure names and parameter structures are passed through unchanged from the driver level, so the AI can introspect and call them without vendor-specific translation logic.
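The statements an LLM would send through `run_query` are ordinary SQL. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a driver-backed source, purely to show the statement shapes. The `Leads` table and its columns are hypothetical; real table names and supported operations depend on the connected system. Stored procedures are not shown because SQLite does not have them.

```python
import sqlite3

# In-memory database standing in for a business system exposed as tables.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Leads (Id INTEGER PRIMARY KEY, Name TEXT, Status TEXT)")

# Write operations: insert two leads, update one status, delete one record.
conn.execute("INSERT INTO Leads (Name, Status) VALUES (?, ?)", ("Ada Lovelace", "New"))
conn.execute("INSERT INTO Leads (Name, Status) VALUES (?, ?)", ("Alan Turing", "New"))
conn.execute("UPDATE Leads SET Status = ? WHERE Name = ?", ("Qualified", "Ada Lovelace"))
conn.execute("DELETE FROM Leads WHERE Name = ?", ("Alan Turing",))

# Read operation: a standard SELECT over the tabular model.
rows = conn.execute("SELECT Name, Status FROM Leads ORDER BY Id").fetchall()
print(rows)
conn.close()
```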

Optimized execution for LLM-driven workloads

MCP Servers are optimized for performance by leveraging advanced features in the underlying drivers:

Query pushdown: SQL filters, joins, and aggregations are translated and pushed to the source system whenever possible. This minimizes processing in the MCP Server itself and reduces latency.

Parallel paging: Results are retrieved in parallel where supported, speeding up large query responses.

Streaming mode: For large datasets, records are streamed incrementally to avoid memory bottlenecks.

These optimizations are critical for interactive AI use cases, where users expect real-time responsiveness even when querying complex or high-volume systems.
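Parallel paging and streaming can be combined in a few lines. The following is a generic sketch of the pattern, not the drivers' internals: `fetch_page`, `PAGE_SIZE`, and the in-memory `DATA` list are hypothetical stand-ins for a paged source API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterator, List

PAGE_SIZE = 3
DATA = list(range(10))  # stand-in for records held by a remote source


def fetch_page(page: int) -> List[int]:
    """Hypothetical page fetch; a real one would call the source API."""
    start = page * PAGE_SIZE
    return DATA[start:start + PAGE_SIZE]


def stream_records(page_count: int, workers: int = 4) -> Iterator[int]:
    """Fetch pages concurrently and yield records one at a time, in page order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() runs the fetches in parallel but returns results in input order.
        for page in pool.map(fetch_page, range(page_count)):
            yield from page


page_count = -(-len(DATA) // PAGE_SIZE)  # ceiling division
records = list(stream_records(page_count))
print(records)
```

The consumer sees a single ordered stream and never needs the full result set as one list. Note that `map()` submits every page up front, so a production version would likely bound the number of pages in flight.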

Enterprise-grade security, by design

Every data request made through a CData MCP Server is scoped by the permissions and identity of the authenticated user. The server accesses data in place—never moving or copying it—ensuring compliance with enterprise data governance standards.

CData supports multiple enterprise authentication schemes, including:

OAuth

SSO (e.g., Okta, Azure AD)

Kerberos

Basic auth (API keys, username/password)

Because authentication is configured once per server, admins can centrally enforce credential policies while exposing access to multiple AI tools.

Additionally, the CData platform is:

This allows enterprise IT teams to adopt MCP-based AI solutions with full confidence in the platform’s security posture.

Deployment and diagnostics

The initial release of CData MCP Servers is available as a Windows desktop service, tested with Claude Desktop and compatible with any LLM client that supports MCP. Servers run locally, and each instance can be configured independently to connect to a specific source system using user-level or service-level accounts.

For monitoring and diagnostics, servers provide text-based logging configurable per instance. Logs include session activity, tool invocations, query execution traces, and error reporting—giving administrators insight into AI activity and system performance.

Build your own MCP Server with CData JDBC Drivers

Not only are we launching a free beta for our own MCP Servers, but we’re also showcasing how easy it is to create your own MCP server on top of a CData JDBC Driver. We’ve started with an open-source, read-only server on GitHub that you are free to fork and modify for your own experimentation. Check out the repository to see how our connectivity model lets a single MCP server reach the entirety of an enterprise data source, without needing dozens of tools to retrieve data from your business systems: cdata-jdbc-mcp-server (GitHub).

Ask questions with confidence

CData MCP Servers offer a technically rigorous, production-ready implementation of the Model Context Protocol—fueled by proven driver infrastructure and decades of experience in secure, standards-based data integration.

If your AI tools are ready to work with enterprise data, CData provides the architecture to make that connection seamless, performant, and safe.

To explore the technology hands-on, download a free MCP Server or visit the CData Community to share insights, ask questions, and help shape the future of enterprise-ready AI.