In April 2020, at the height of the COVID-19 pandemic, the world saw an unforeseen surge in consumer demand for a commodity we had all taken for granted: the humble toilet paper roll. With toilet paper sales up 213% by May, many retailers were caught flat-footed by a spike that no economist had predicted. Amazon was the exception.

By then, the retail giant had already perfected a predictive forecasting model that processed thousands of input variables to recognize, extrapolate, and respond to this kind of skyrocketing demand. Amazon’s data-driven demand forecasting and inventory allocation model has delivered faster delivery times, lower operating costs, and the agility to meet demand spikes like this one. That has given the company a competitive edge and a coveted position as the largest e-commerce company by market cap.

Amazon is not alone. Household names in retail such as Walmart, Nordstrom, and Costco have one thing in common: they have prioritized investment in a data infrastructure that cleans and prepares mountains of operational data and delivers it efficiently to analytics teams. Those teams turn the data into insights and automations that power supply chain optimization, marketing spend allocation, and customized omnichannel customer experiences that build loyalty and market share.

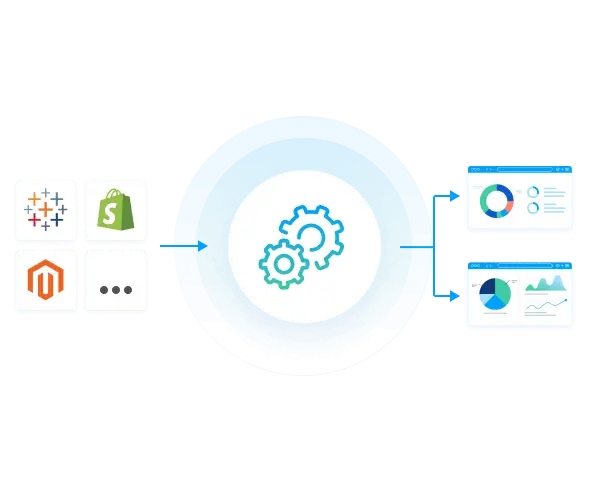

Through our work with data teams at many of these retailers, we at CData have a unique vantage point on the data foundations behind their success. These organizations have built a modern data architecture that intelligently automates data engineering to create a central, governed source of truth. Their analytics teams can access that source of truth efficiently to build a competitive advantage for their organizations. Here, we take a closer look at each element of this data foundation.

A modern data architecture

According to MuleSoft, 62% of IT leaders say their organizations lack the data access they need to fully leverage AI. That sentiment is understandable given the explosion of applications and technology ecosystems (Microsoft, Oracle, AWS, Salesforce, etc.) that make up most enterprise technology stacks. Harmonizing data across these systems is a foundational step retailers must take before they can implement the kind of sophisticated predictive analytics that contributed to Amazon’s success during the pandemic. Yet fewer than half of retailers consider themselves successful at it.

The problem is compounded by the fact that modernization doesn’t happen overnight. Individual components of a tech stack are modernized according to the CIO’s priorities and budget. This creates a growing gap between the legacy and modern systems in an organization’s tech stack. According to Forrester’s Mike Gualtieri, speaking at CData Foundations, 68% of IT organizations feel the pain of this integration challenge. Given the rate of change in enterprise technology, this legacy-modern divide is here to stay.

This leaves data architects and IT teams with a dilemma. Integrated data access across systems is a prerequisite for realizing the promise of enterprise AI. However, the variety of ecosystems, the many styles of data access, and the legacy-modern divide all stand in the way of that integration. The solution is a modern data architecture that is agnostic to ecosystem, data access pattern, and deployment model (e.g., cloud or on-premises, legacy or modern).

Take CData customer Office Depot. When they came to us, they were in the middle of modernizing to a Snowflake data warehouse. They had invested significant effort in developing SSAS cubes that were no longer compatible with their new central source of truth in Snowflake. Why? Because SSAS belongs to the Microsoft ecosystem and is deployed on-premises. They needed an integration that could cross both the ecosystem divide and the cloud/on-premises divide. CData helped refactor these SSAS cubes to work with Snowflake. This allowed Office Depot to maintain business continuity, with a 10x performance boost compared with native connectivity to Snowflake.

Retailers that prioritize a modern data access layer will be best equipped to take advantage of the promise of enterprise AI. That layer must integrate across ecosystems and bridge the cloud/on-premises divide.

Central, governed, efficient data access

It’s not enough simply to bridge data ecosystems. Successful data-driven analytics also requires that this data be made available to data science, business intelligence (BI), and analytics teams.

Here, we see another divide that needs bridging. According to a recent CData report on data connectivity, 57% of IT professionals spend more than half of their workweek servicing data requests. Meanwhile, 63% of analytics teams wait weeks to months for their data. The status quo isn’t working for those who own and architect data systems. Nor is it working for those charged with turning that data into business outcomes.

The problem is especially acute for systems that are inherently difficult to extract and prepare data from. Take Workday and SAP, which many retailers use for people operations and supply chain management respectively. Both are notoriously hard for analytics teams to access. This creates significant barriers for organizations looking to operationalize data from these mission-critical systems of record.

The answer for both sides is a central, governed data access layer that keeps operations efficient from end to end. That layer should automate data preparation, the step on which analytics teams spend up to 80% of their time.

CData customer BJ’s Wholesale shows what can be achieved here. BJ’s People Analytics team faced a manual, time-consuming process of exporting data from Workday and other sources to build a full picture of workforce health. By automating this data preparation step, they saved time for both their analytics and IT teams. They shared the resulting insights with 1,500 leaders across the organization. They also used this central data access layer to boost employee retention by 10%.

Automation of data engineering

In some cases, the extensive data engineering required to process and blend data makes a central access layer hard to build. That processing must happen before the data can feed an analytics process. Today, many organizations rely on a separate custom-code integration for each system of record or data source they need to extract and blend data from. These integrations are typically built with development tools like Azure Data Factory, or simply written in Python or Java. This manual data engineering is a significant liability because it often turns into technical debt that must be maintained quarter after quarter, year after year, in perpetuity.

These homegrown processes also offer no central visibility into the health of the data pipelines that power business outcomes. Because of the intricate dependencies across pipelines, the impact of a broken pipeline isn’t obvious until someone runs a thorough analysis. The cost of this manual data engineering shows up as inflated engineering headcount, delayed project timelines, and missed opportunities to optimize the business.
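To make the dependency problem concrete, here is a minimal Python sketch. It assumes pipeline dependencies are recorded centrally as a simple mapping from each pipeline to the pipelines that consume its output. All pipeline names and the `impacted_by` helper are hypothetical and for illustration only. Once that metadata lives in one place, finding everything downstream of a broken pipeline becomes a breadth-first traversal rather than a manual investigation.

```python
from collections import deque

# Hypothetical pipeline names (illustration only).
# Each key maps to the pipelines that consume its output.
DOWNSTREAM: dict[str, list[str]] = {
    "sap_inventory_extract": ["inventory_staging"],
    "workday_hr_extract": ["workforce_staging"],
    "inventory_staging": ["demand_forecast", "replenishment_report"],
    "workforce_staging": ["retention_dashboard"],
    "demand_forecast": ["replenishment_report"],
    "replenishment_report": [],
    "retention_dashboard": [],
}


def impacted_by(broken: str, graph: dict[str, list[str]]) -> list[str]:
    """Return every pipeline downstream of `broken`, in breadth-first order."""
    seen: set[str] = {broken}
    order: list[str] = []
    queue = deque([broken])
    while queue:
        current = queue.popleft()
        for consumer in graph.get(current, []):
            # A pipeline can be reachable via several paths; report it once.
            if consumer not in seen:
                seen.add(consumer)
                order.append(consumer)
                queue.append(consumer)
    return order


if __name__ == "__main__":
    print(impacted_by("sap_inventory_extract", DOWNSTREAM))
```

In this toy graph, a failure in the SAP extract is flagged as affecting the staging table, the demand forecast, and the replenishment report. The report is listed once even though it depends on both the staging table and the forecast. In practice, this graph would come from an integration or orchestration tool's metadata rather than a hand-written dictionary.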

IT organizations that automate as much repetitive, manual data engineering work as possible will be set up to enable transformative outcomes for the business. These include lower costs (in the engineer-hours required to extract and prepare data for analysis), a faster analytics roadmap, and higher-quality insights that drive the business. Our customers have collectively shaved years off their data warehouse implementations and achieved millions of dollars in annual savings. They have also enabled business users to handle data engineering tasks through self-service.

The path forward

The retail landscape has fundamentally shifted, and the divide between data-driven organizations and their competitors continues to widen. The success stories of Amazon, Walmart, and other retail giants demonstrate that investing in robust data infrastructure isn't just a competitive advantage—it's becoming a prerequisite for survival in modern retail.

Retailers must focus on three critical elements:

- Building a modern, ecosystem-agnostic data architecture that bridges the legacy/modern divide.

- Establishing a central, governed data access layer that democratizes data across the organization.

- Automating data engineering processes to reduce technical debt and accelerate time-to-insight.

The retailers who succeed in implementing these foundational elements will be better positioned to respond rapidly to unexpected market shifts, optimize their supply chains with predictive analytics, and deliver personalized customer experiences at scale.

As we look to the future, the question isn't whether to invest in data infrastructure but rather how quickly organizations can transform their data operations to stay competitive in an increasingly data-driven retail landscape. Those who delay risk falling further behind, while those who embrace this transformation will be well-positioned to thrive in the next era of retail. The technology and solutions exist—it's up to retail leaders to prioritize and implement them effectively.
