Build Semantic Layer Views for Lakebase Data in APOS Live Data Gateway

APOS Live Data Gateway (LDG) is a data connection and data transformation solution that enables live data connectivity and expanded data source options for SAP Analytics Cloud and other SAP solutions. When paired with CData Connectors, users can build semantic layer views for live Lakebase data, enabling real-time analytics on Lakebase just as they would with any relational database.

With built-in optimized data processing, the CData Connector delivers high performance when working with live Lakebase data. When you issue complex SQL queries to Lakebase, the driver pushes supported SQL operations, such as filters and aggregations, directly to Lakebase and uses its embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. Built-in dynamic metadata querying lets you work with and analyze Lakebase data using native data types.

Download and Install the Connector Files

To access Lakebase data through the APOS Live Data Gateway, download the connector files from APOS and install them on the machine hosting the Live Data Gateway. An APOS representative can provide the necessary files.

- Install the CData DLL file (System.Data.CData.Lakebase.dll) to the APOS Live Data Gateway installation directory (C:\Program Files\Live Data Gateway\Admin\ by default).

- Install the CData JAR file (cdata.jdbc.lakebase.jar) to the ConnectionTest_lib folder in the installation directory (C:\Program Files\Live Data Gateway\Admin\ConnectionTest_lib\ by default).

- Install the CData JAR file (cdata.jdbc.lakebase.jar) to the lib folder in the Web UI installation directory (e.g.: C:\LDG_WebUI\lib\).
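Before moving on, it can help to confirm that all three files landed in the right places. The following is a minimal Java sketch, not an APOS or CData tool: it checks for the file names listed above under the default Admin directory and the example Web UI directory (C:\LDG_WebUI), both of which are assumptions you should replace with your actual installation paths.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Sketch: report which of the three CData Lakebase connector files are missing.
// The directories used in main() are the default/example paths from the steps
// above; pass your own paths as arguments if LDG or the Web UI live elsewhere.
class ConnectorFileCheck {

    static List<Path> missingFiles(Path ldgAdminDir, Path webUiDir) {
        List<Path> expected = List.of(
            ldgAdminDir.resolve("System.Data.CData.Lakebase.dll"),
            ldgAdminDir.resolve("ConnectionTest_lib").resolve("cdata.jdbc.lakebase.jar"),
            webUiDir.resolve("lib").resolve("cdata.jdbc.lakebase.jar"));
        List<Path> missing = new ArrayList<>();
        for (Path file : expected) {
            // A file counts as installed only if it exists as a regular file.
            if (!Files.isRegularFile(file)) {
                missing.add(file);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        Path ldgAdminDir = Path.of(args.length > 0 ? args[0] : "C:\\Program Files\\Live Data Gateway\\Admin");
        Path webUiDir = Path.of(args.length > 1 ? args[1] : "C:\\LDG_WebUI");
        List<Path> missing = missingFiles(ldgAdminDir, webUiDir);
        if (missing.isEmpty()) {
            System.out.println("All connector files are in place.");
        } else {
            missing.forEach(file -> System.out.println("Missing: " + file));
        }
    }
}
```

The check only confirms that the files exist; it does not verify their versions or that the LDG services have picked them up.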

Configuring the Lakebase Connection String

Before establishing the connection to Lakebase from the APOS Live Data Gateway, you need to configure the Lakebase JDBC Connection String.

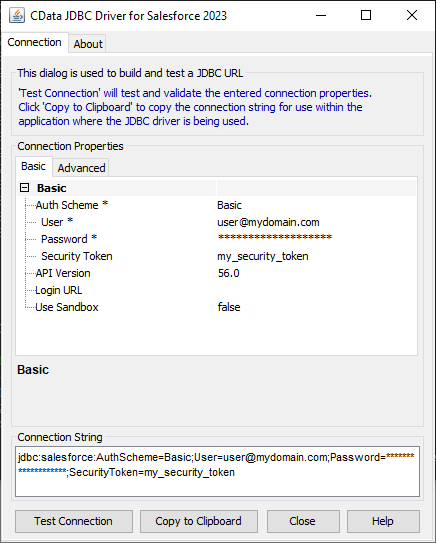

Built-in Connection String Designer

For help constructing the JDBC connection string, use the connection string designer built into the Lakebase JDBC Driver. Either double-click the JAR file or run it from the command line:

java -jar cdata.jdbc.lakebase.jar

Fill in the connection properties and copy the connection string to the clipboard.

To connect to Databricks Lakebase, start by setting the following properties:

- DatabricksInstance: The Databricks instance or server hostname, provided in the format instance-abcdef12-3456-7890-abcd-abcdef123456.database.cloud.databricks.com.

- Server: The host name or IP address of the server hosting the Lakebase database.

- Port (optional): The port of the server hosting the Lakebase database. Defaults to 5432.

- Database (optional): The database to connect to after authenticating to the Lakebase server. Defaults to the authenticating user's default database.

OAuth Client Authentication

To authenticate using OAuth client credentials, you need to configure an OAuth client in your service principal. In short, you need to:

- Create and configure a new service principal

- Assign permissions to the service principal

- Create an OAuth secret for the service principal

For more information, refer to the Setting Up OAuthClient Authentication section in the Help documentation.

OAuth PKCE Authentication

To authenticate using the OAuth code type with PKCE (Proof Key for Code Exchange), set the following properties:

- AuthScheme: OAuthPKCE.

- User: The authenticating user's user ID.

For more information, refer to the Help documentation.
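The designer's output is the `jdbc:Lakebase:` prefix followed by `Name=Value;` pairs. If you prefer to assemble the string in code (for example, to keep it in a deployment script), the following minimal Java sketch does so using only the property names described in this article. The values are placeholders, and the helper does not escape or quote values that contain `;` or `=`; treat it as an illustration, not a replacement for the designer.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch: assemble a Lakebase JDBC URL from the properties described above.
// Limitation: values are written verbatim, so a value containing ';' or '='
// is not escaped or quoted by this helper.
class LakebaseUrlBuilder {

    static String build(Map<String, String> props) {
        StringBuilder url = new StringBuilder("jdbc:Lakebase:");
        for (Map.Entry<String, String> prop : props.entrySet()) {
            String value = prop.getValue();
            // Skip optional properties that were left empty.
            if (value == null || value.isBlank()) {
                continue;
            }
            url.append(prop.getKey()).append('=').append(value).append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the properties in insertion order in the URL.
        Map<String, String> props = new LinkedHashMap<>();
        props.put("DatabricksInstance",
            "instance-abcdef12-3456-7890-abcd-abcdef123456.database.cloud.databricks.com");
        props.put("Server", "127.0.0.1");
        props.put("Port", "5432");            // optional
        props.put("Database", "my_database"); // optional
        props.put("AuthScheme", "OAuthPKCE"); // only for OAuth PKCE authentication
        props.put("User", "my_user");         // placeholder user ID
        System.out.println(build(props));
    }
}
```

Skipping blank values mirrors the "optional" properties above: leaving Port or Database empty simply omits them, so the driver's defaults apply.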

Your connection string will look similar to the following:

jdbc:Lakebase:DatabricksInstance=lakebase;Server=127.0.0.1;Port=5432;Database=my_database;InitiateOAuth=GETANDREFRESH;
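Because the string is pasted by hand into the Live Data Gateway Manager, a quick sanity check can catch typos before you test the connection. This minimal Java sketch splits a connection string into its properties and flags DatabricksInstance or Server if they are absent (the two properties not marked optional above). It assumes unquoted values without embedded `;` or `=`, and it compares property names exactly as written, which may be stricter than the driver itself.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch: parse "jdbc:Lakebase:Name=Value;Name=Value;" and check for the
// properties this article does not mark as optional. Simplified: assumes no
// quoted values containing ';' or '=' and exact-case property names.
class LakebaseUrlCheck {

    static final String PREFIX = "jdbc:Lakebase:";

    static Map<String, String> parse(String url) {
        if (!url.startsWith(PREFIX)) {
            throw new IllegalArgumentException("URL must start with " + PREFIX);
        }
        Map<String, String> props = new LinkedHashMap<>();
        for (String part : url.substring(PREFIX.length()).split(";")) {
            if (part.isBlank()) {
                continue; // tolerate the trailing ';'
            }
            int eq = part.indexOf('=');
            if (eq < 0) {
                throw new IllegalArgumentException("Malformed property: " + part);
            }
            props.put(part.substring(0, eq).trim(), part.substring(eq + 1).trim());
        }
        return props;
    }

    public static void main(String[] args) {
        String url = "jdbc:Lakebase:DatabricksInstance=lakebase;Server=127.0.0.1;Port=5432;"
            + "Database=my_database;InitiateOAuth=GETANDREFRESH;";
        Map<String, String> props = parse(url);
        props.forEach((name, value) -> System.out.println(name + " = " + value));
        for (String required : new String[] {"DatabricksInstance", "Server"}) {
            if (!props.containsKey(required)) {
                System.out.println("Missing required property: " + required);
            }
        }
    }
}
```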

Connecting to Lakebase & Creating a Semantic Layer View

After installing the connector files and configuring the connection string, you are ready to connect to Lakebase in the Live Data Gateway Admin tool and build a semantic layer view in the Live Data Gateway Web UI.

Configuring the Connection to Lakebase

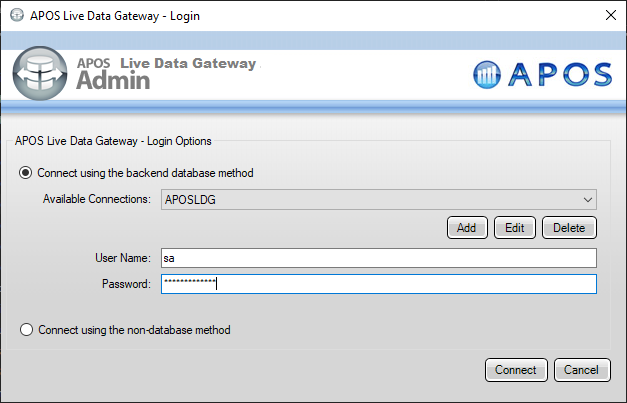

- Log in to your APOS Live Data Gateway Manager

- If you haven't already, update your APOS LDG license file:

  - Click File -> Configurations

  - Click the "..." menu for the License

  - Select the license file from the APOS team that includes your CData Connector license

- In the APOS Live Data Gateway Manager, click "Add"

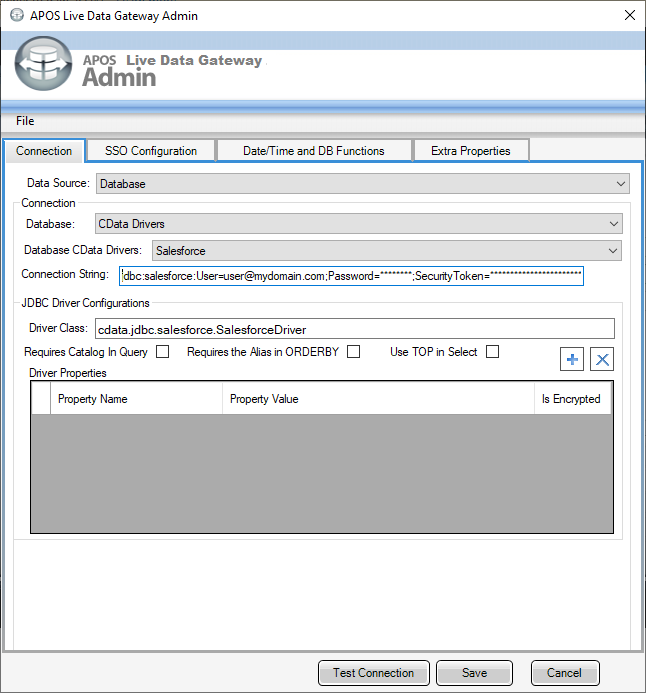

- On the Connection tab, configure the connection:

  - Set Data Source to "Database"

  - Set Database to "CData Drivers"

  - Set Database CData Drivers to "Lakebase"

  - Set Connection String to the connection string configured earlier, for example:

    jdbc:Lakebase:DatabricksInstance=lakebase;Server=127.0.0.1;Port=5432;Database=my_database;InitiateOAuth=GETANDREFRESH;

  - Set Driver Class to "cdata.jdbc.lakebase.LakebaseDriver" (this should be set by default)

- Click Test Connection

- Click Save

- Give your connection a unique prefix (e.g. "lakebase")

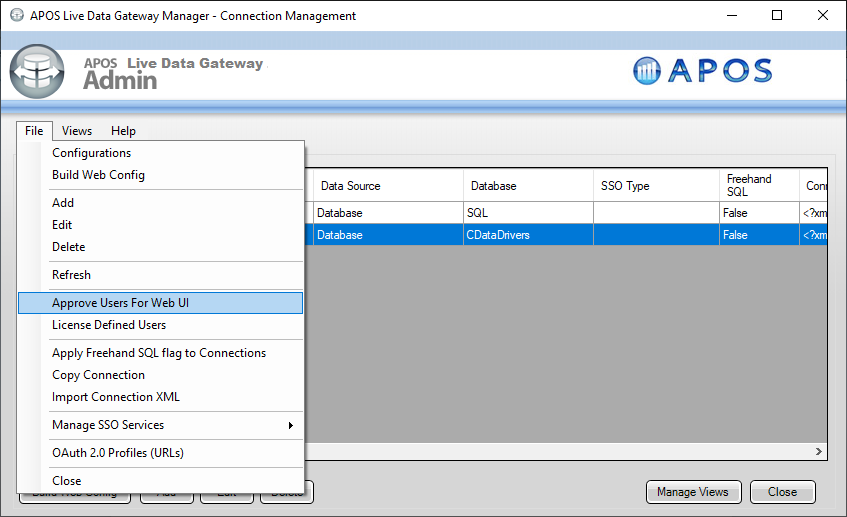

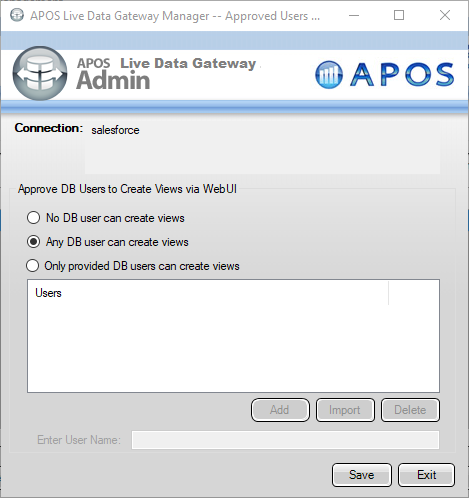

- Highlight the newly created connection and click File -> "Approve Users For Web UI"

- Approve the appropriate DB users to create views and click "Save"
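If Test Connection fails, it can be useful to try the same connection string outside the Manager to separate driver or credential problems from LDG configuration. The following minimal Java sketch loads the driver class named in the Driver Class field and opens a JDBC connection with plain `java.sql` calls; it is not how LDG performs its own test. It assumes cdata.jdbc.lakebase.jar is on the classpath, and with InitiateOAuth=GETANDREFRESH or OAuthPKCE the driver may start an interactive OAuth flow.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

// Sketch: open a JDBC connection with the same URL and driver class used in
// the LDG connection settings. Requires cdata.jdbc.lakebase.jar on the classpath.
class LakebaseConnectionCheck {

    static String test(String url) {
        try {
            // Driver class name as shown in the Driver Class field.
            Class.forName("cdata.jdbc.lakebase.LakebaseDriver");
        } catch (ClassNotFoundException e) {
            return "Driver not found: add cdata.jdbc.lakebase.jar to the classpath";
        }
        // try-with-resources closes the connection as soon as it has been opened.
        try (Connection conn = DriverManager.getConnection(url)) {
            return "Connection opened successfully";
        } catch (SQLException e) {
            return "Connection failed: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        String url = args.length > 0 ? args[0]
            : "jdbc:Lakebase:DatabricksInstance=lakebase;Server=127.0.0.1;Port=5432;"
              + "Database=my_database;InitiateOAuth=GETANDREFRESH;";
        System.out.println(test(url));
    }
}
```

Run it with something like `java -cp cdata.jdbc.lakebase.jar:. LakebaseConnectionCheck "<connection string>"` (use `;` instead of `:` as the classpath separator on Windows).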

At this point, you are ready to build the semantic layer view in the Live Data Gateway Web UI.

Creating a Semantic Layer View

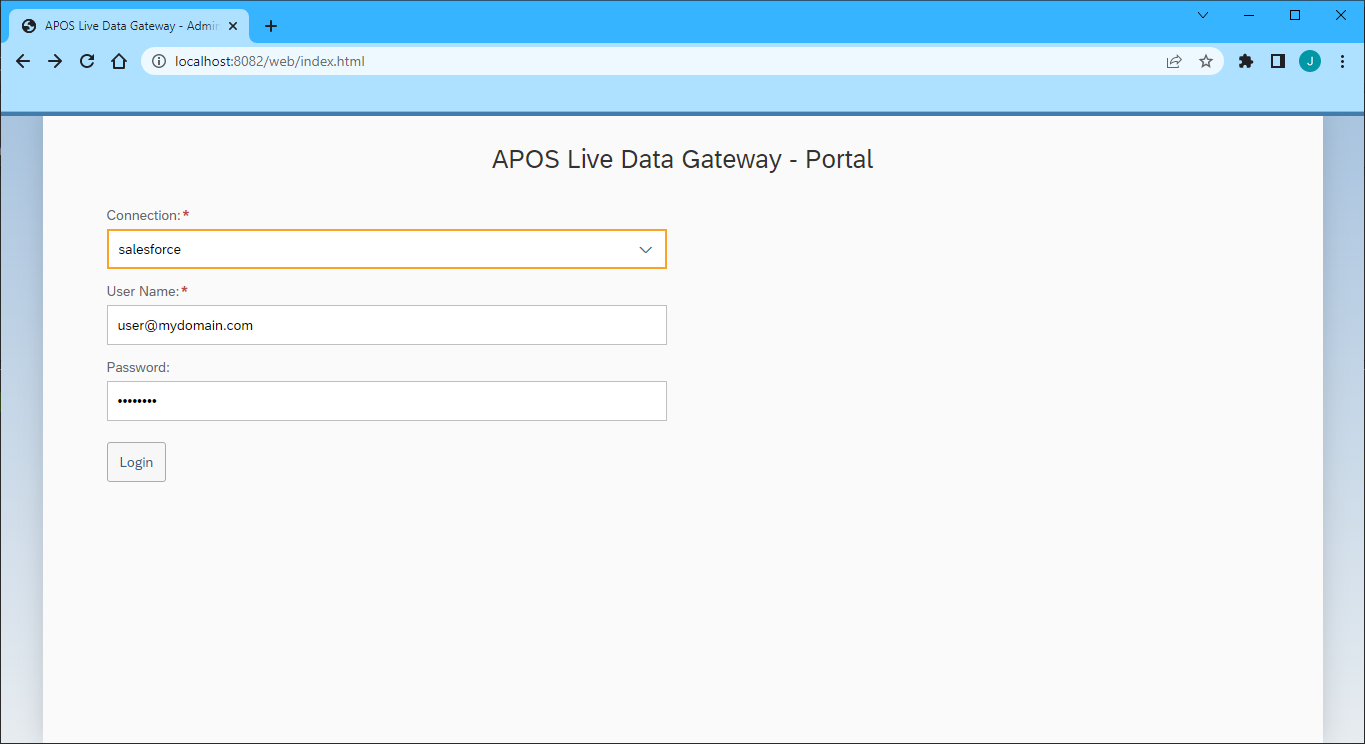

- In your browser, navigate to the APOS Live Data Gateway Portal

- Select a Connection (e.g. "lakebase")

- Because Lakebase does not require a user name or password to authenticate, you may enter any values for User Name and Password

- Click "Login"

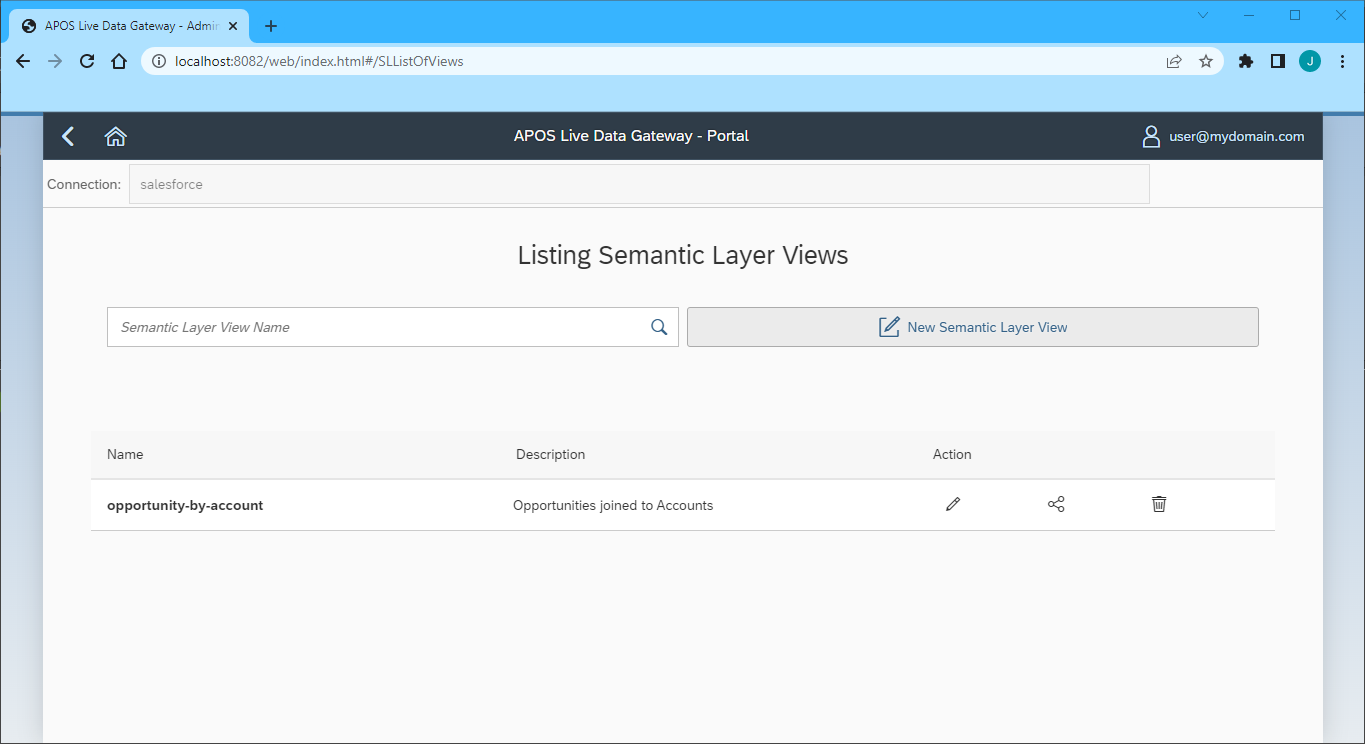

- Once connected, click "Semantic Layer" to create a new semantic layer view

- Click "New Semantic Layer View"

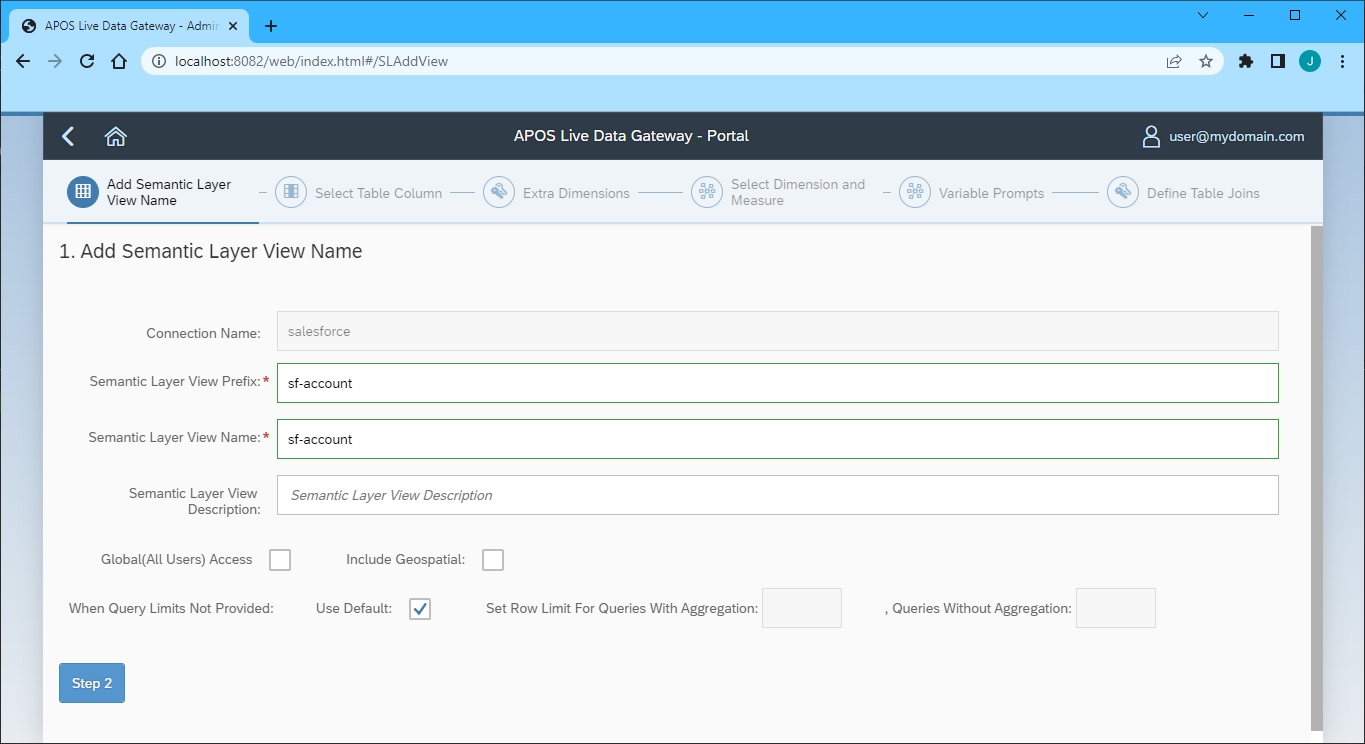

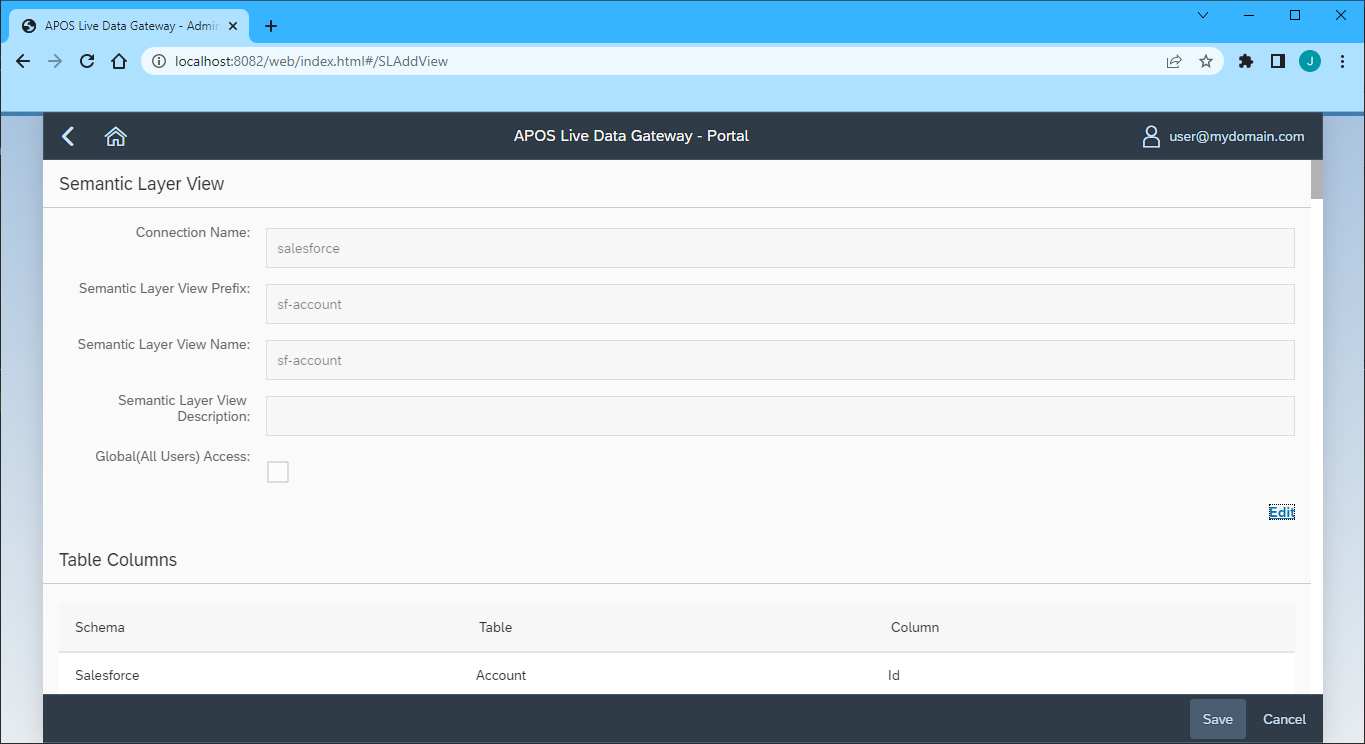

- Set the Semantic Layer View Prefix and Semantic Layer View Name

- Click "Step 2"

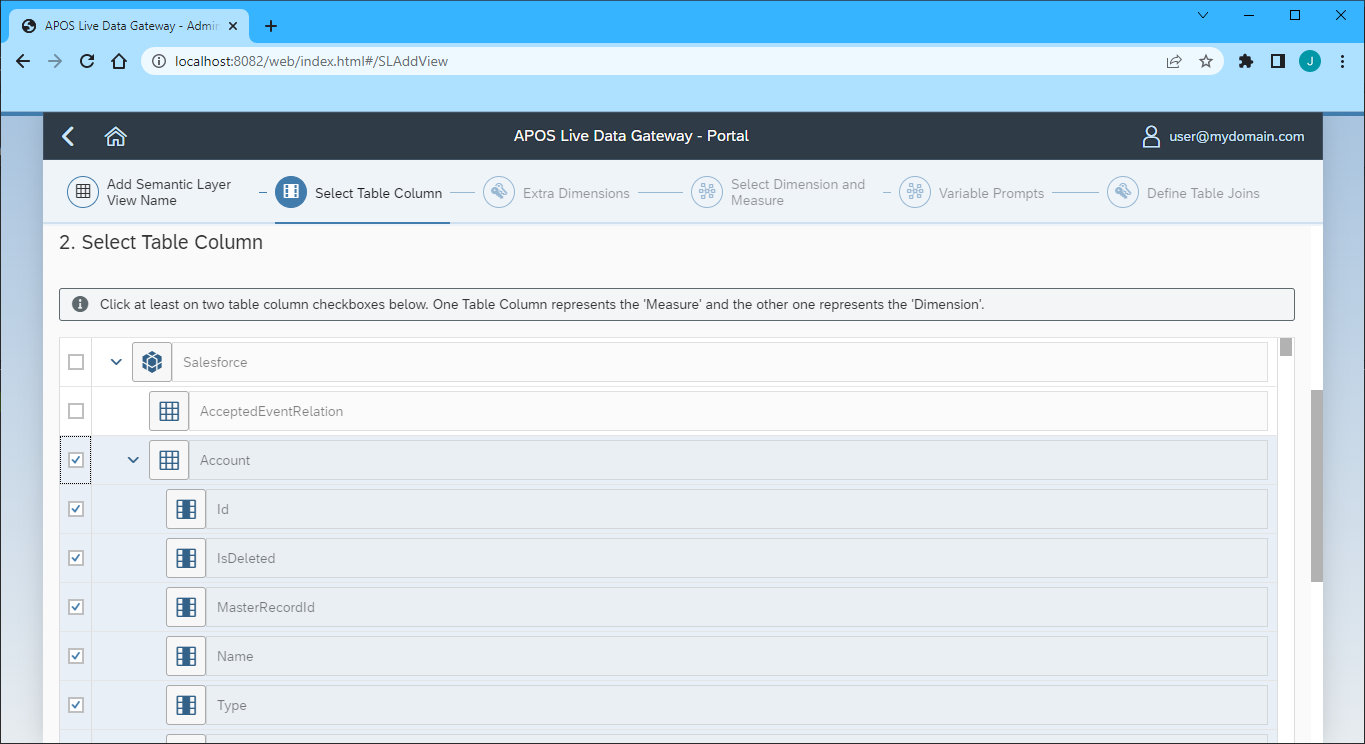

- Select the table(s) and column(s) you wish to include in your view

- Click "Step 3"

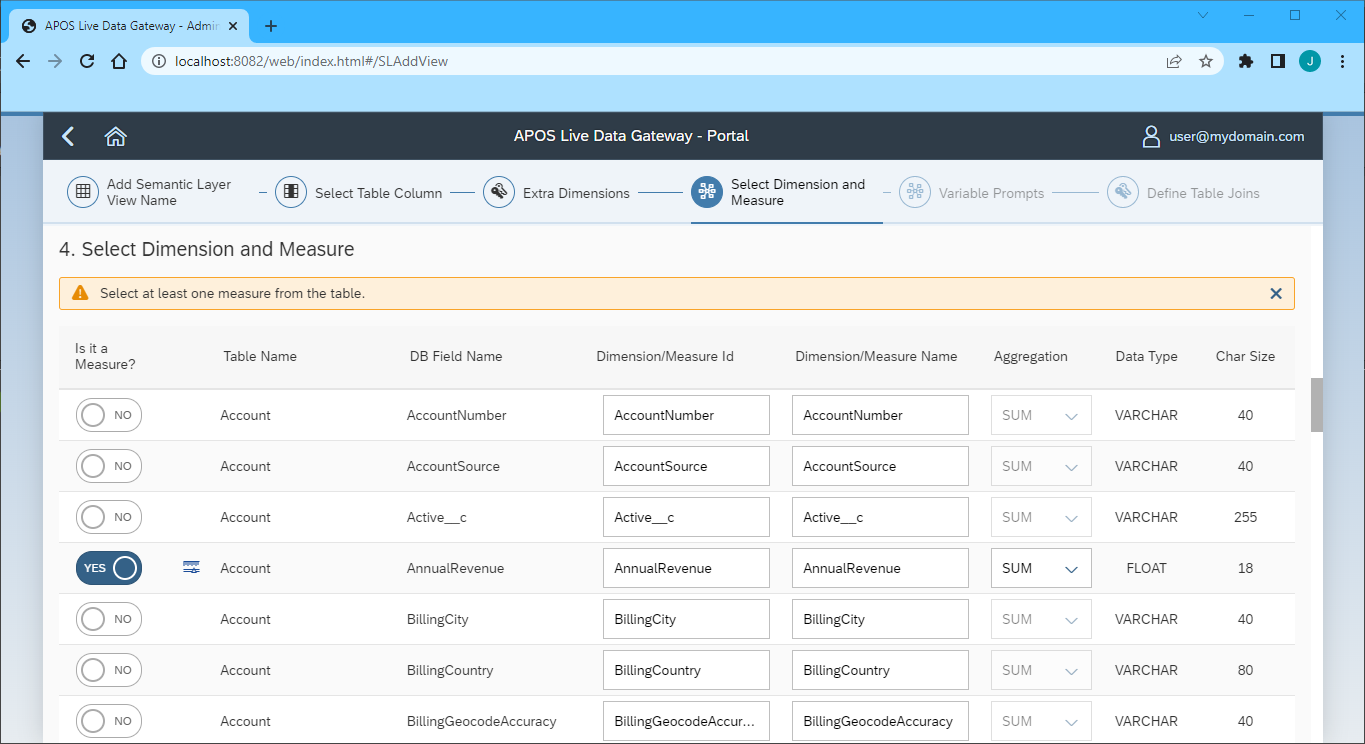

- Select the Measures from the available table columns

- Click "Step 5" (this example skips Step 4, "Extra Dimensions")

- Add any Variable Prompts

- Click "Step 6"

- Define any Table Joins

- Click "Review"

- Review your semantic layer view and click "Save"
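Conceptually, the wizard's choices map onto a familiar SQL shape: selected columns act as dimensions, measures are aggregated, and table joins become JOIN clauses. The Java sketch below only illustrates that mapping. The `Measure` and `Join` classes, the table and column names, and the use of SUM are all hypothetical, Variable Prompts are not modeled, and this is not the query LDG actually generates.

```java
import java.util.List;
import java.util.StringJoiner;

// Hypothetical model of the wizard's choices (not LDG's API): dimensions are
// plain columns, measures are aggregated columns, joins are ON conditions.
class SemanticViewSketch {

    static final class Measure {
        final String column;
        final String aggregate; // e.g. "SUM"; the aggregation used is an assumption
        Measure(String column, String aggregate) {
            this.column = column;
            this.aggregate = aggregate;
        }
    }

    static final class Join {
        final String table;
        final String condition;
        Join(String table, String condition) {
            this.table = table;
            this.condition = condition;
        }
    }

    static String toSql(String baseTable, List<String> dimensions,
                        List<Measure> measures, List<Join> joins) {
        StringJoiner columns = new StringJoiner(", ");
        dimensions.forEach(columns::add);
        for (Measure m : measures) {
            columns.add(m.aggregate + "(" + m.column + ")");
        }
        StringBuilder sql = new StringBuilder("SELECT ").append(columns)
            .append(" FROM ").append(baseTable);
        for (Join j : joins) {
            sql.append(" JOIN ").append(j.table).append(" ON ").append(j.condition);
        }
        // Dimensions become grouping keys only when something is aggregated.
        if (!dimensions.isEmpty() && !measures.isEmpty()) {
            sql.append(" GROUP BY ").append(String.join(", ", dimensions));
        }
        return sql.toString();
    }

    public static void main(String[] args) {
        // Hypothetical tables and columns, for illustration only.
        String sql = toSql(
            "orders",
            List.of("customers.region"),
            List.of(new Measure("orders.amount", "SUM")),
            List.of(new Join("customers", "orders.customer_id = customers.id")));
        System.out.println(sql);
    }
}
```

Since the connector pushes filters and aggregations down to Lakebase where it can, a view shaped like this can often be computed largely server-side, with joins handled by the driver's embedded engine when needed.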

With the Semantic Layer View created, you are ready to access your Lakebase data through the APOS Live Data Gateway, enabling real-time data connectivity to Lakebase data from SAP Analytics Cloud and other SAP solutions.

More Information & Free Evaluation

Please visit APOS Systems - APOS Solutions - Request Evaluation Software to request evaluation software or email [email protected] for more information on working with your live Lakebase data in APOS Live Data Gateway.