Connect to and Visualize Live Lakebase Data in Tableau Prep

Tableau is a visual analytics platform that transforms the way businesses use data to solve problems. Paired with the CData Tableau Connector for Lakebase, Tableau Prep gives you access to live Lakebase data. This article shows how to connect to Lakebase in Tableau Prep, then clean, join, and export the data for visualization in Tableau.

The CData Tableau Connectors enable high-speed access to live Lakebase data in Tableau. Once you install the connector, you authenticate with Lakebase and can immediately start building responsive, dynamic visualizations and dashboards. The connectors surface Lakebase data using native Tableau data types and handle complex filters, aggregations, and other operations automatically.

NOTE: The CData Tableau Connectors support Tableau Prep Builder 2020.4.1 or higher. If you are using an older version of Tableau Prep Builder, you will need to use the CData JDBC Driver.

Install the CData Tableau Connector

When you install the CData Tableau Connector for Lakebase, the installer should copy the TACO and JAR files to the appropriate directories. If your data source does not appear in the connection steps below, you will need to copy two files:

- Copy the TACO file (cdata.lakebase.taco) found in the lib folder of the connector's installation location (C:\Program Files\CData\CData Tableau Connector for Lakebase 20XX\lib on Windows) to the Tableau Prep Builder repository:

  - Windows: C:\Users\[Windows User]\Documents\My Tableau Prep Repository\Connectors

  - macOS: /Users/[macOS User]/Documents/My Tableau Prep Repository/Connectors

- Copy the JAR file (cdata.tableau.lakebase.jar) found in the same lib folder to the Tableau drivers directory, typically [Tableau installation location]\Drivers.
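If you prefer to script the manual copy, the two steps above can be sketched as follows. This is a minimal sketch, not part of the CData installer: the function name `copy_connector_files` and its parameters are hypothetical, and you supply the three directories yourself (the connector's lib folder, the Tableau Prep Connectors folder, and the Tableau Drivers folder) because they vary by machine and version.

```python
import shutil
from pathlib import Path

# File names as given in this article.
TACO_FILE = "cdata.lakebase.taco"
JAR_FILE = "cdata.tableau.lakebase.jar"


def copy_connector_files(lib_dir, prep_connectors_dir, tableau_drivers_dir):
    """Copy the TACO and JAR files out of the connector's lib folder.

    All three arguments are directories chosen by the caller (assumption:
    they match the locations described in the steps above).
    Returns the list of destination paths that were written.
    """
    lib_dir = Path(lib_dir)
    targets = {
        TACO_FILE: Path(prep_connectors_dir),
        JAR_FILE: Path(tableau_drivers_dir),
    }
    copied = []
    for file_name, dest_dir in targets.items():
        source = lib_dir / file_name
        if not source.is_file():
            raise FileNotFoundError(f"Expected connector file not found: {source}")
        # Create the destination folder if it does not exist yet.
        dest_dir.mkdir(parents=True, exist_ok=True)
        copied.append(Path(shutil.copy2(source, dest_dir / file_name)))
    return copied
```

Restart Tableau Prep Builder after copying so that it rescans the Connectors folder.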

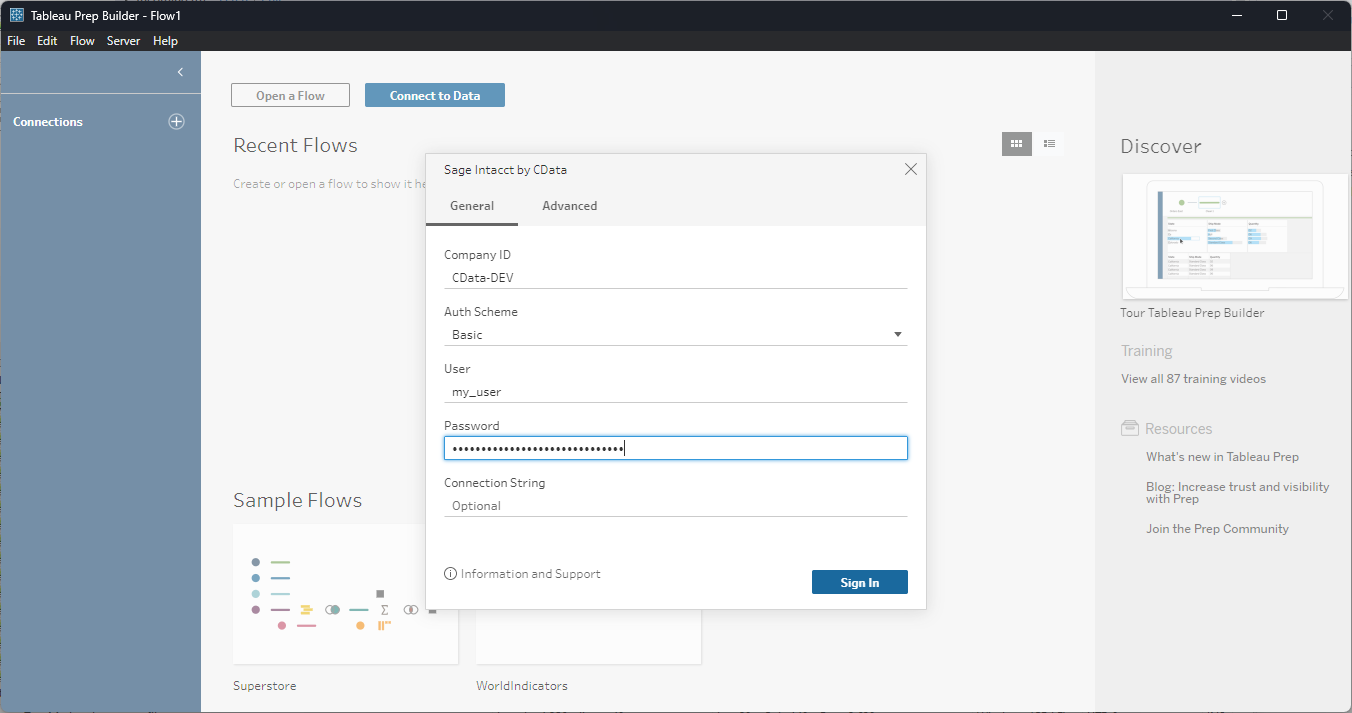

Connect to Lakebase in Tableau Prep Builder

Open Tableau Prep Builder and click "Connect to Data" and search for "Lakebase by CData." Configure the connection and click "Sign In."

To connect to Databricks Lakebase, start by setting the following properties:

- DatabricksInstance: The Databricks instance or server hostname, provided in the format instance-abcdef12-3456-7890-abcd-abcdef123456.database.cloud.databricks.com.

- Server: The host name or IP address of the server hosting the Lakebase database.

- Port (optional): The port of the server hosting the Lakebase database, set to 5432 by default.

- Database (optional): The database to connect to after authenticating to the Lakebase Server, set to the authenticating user's default database by default.
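To make the required and optional properties concrete, the sketch below collects them into a dictionary and applies the default port from the list above. It is illustrative only: the helper names are hypothetical, the semicolon-separated `Key=Value` rendering is an assumed display format, and in Tableau Prep Builder you enter these values in the connection dialog rather than in code.

```python
def build_connection_settings(databricks_instance, server, port=5432, database=None):
    """Collect the connection properties described above.

    DatabricksInstance and Server are required; Port defaults to 5432;
    Database is left out when not given, so the authenticating user's
    default database applies.
    """
    if not databricks_instance or not server:
        raise ValueError("DatabricksInstance and Server are required")
    if not 1 <= int(port) <= 65535:
        raise ValueError(f"Port out of range: {port}")
    settings = {
        "DatabricksInstance": databricks_instance,
        "Server": server,
        "Port": str(int(port)),
    }
    if database:
        settings["Database"] = database
    return settings


def to_connection_string(settings):
    """Render the settings as Key=Value pairs (assumed format, for display)."""
    return "".join(f"{key}={value};" for key, value in settings.items())
```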

OAuth Client Authentication

To authenticate using OAuth client credentials, you need to configure an OAuth client for your service principal. In short, you need to do the following:

- Create and configure a new service principal

- Assign permissions to the service principal

- Create an OAuth secret for the service principal

For more information, refer to the Setting Up OAuthClient Authentication section in the Help documentation.

OAuth PKCE Authentication

To authenticate using the OAuth code type with PKCE (Proof Key for Code Exchange), set the following properties:

- AuthScheme: OAuthPKCE.

- User: The authenticating user's user ID.

For more information, refer to the Help documentation.
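For background on what PKCE adds to the OAuth code flow, the sketch below generates a code verifier and its S256 code challenge as defined in RFC 7636. The connector performs this exchange for you when AuthScheme is OAuthPKCE; the code is a generic illustration of the mechanism, not the connector's implementation, and the function names are hypothetical.

```python
import base64
import hashlib
import secrets


def _b64url(data):
    """Base64url-encode bytes without '=' padding, as RFC 7636 requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def challenge_for(verifier):
    """Derive the S256 code challenge: BASE64URL(SHA256(verifier))."""
    return _b64url(hashlib.sha256(verifier.encode("ascii")).digest())


def make_pkce_pair(num_bytes=32):
    """Return (code_verifier, code_challenge).

    32 random bytes encode to a 43-character verifier, the minimum
    length the RFC allows (the range is 43 to 128 characters).
    """
    verifier = _b64url(secrets.token_bytes(num_bytes))
    return verifier, challenge_for(verifier)
```

The client sends the challenge with the authorization request and the verifier with the token request, so an intercepted authorization code cannot be redeemed without the verifier.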

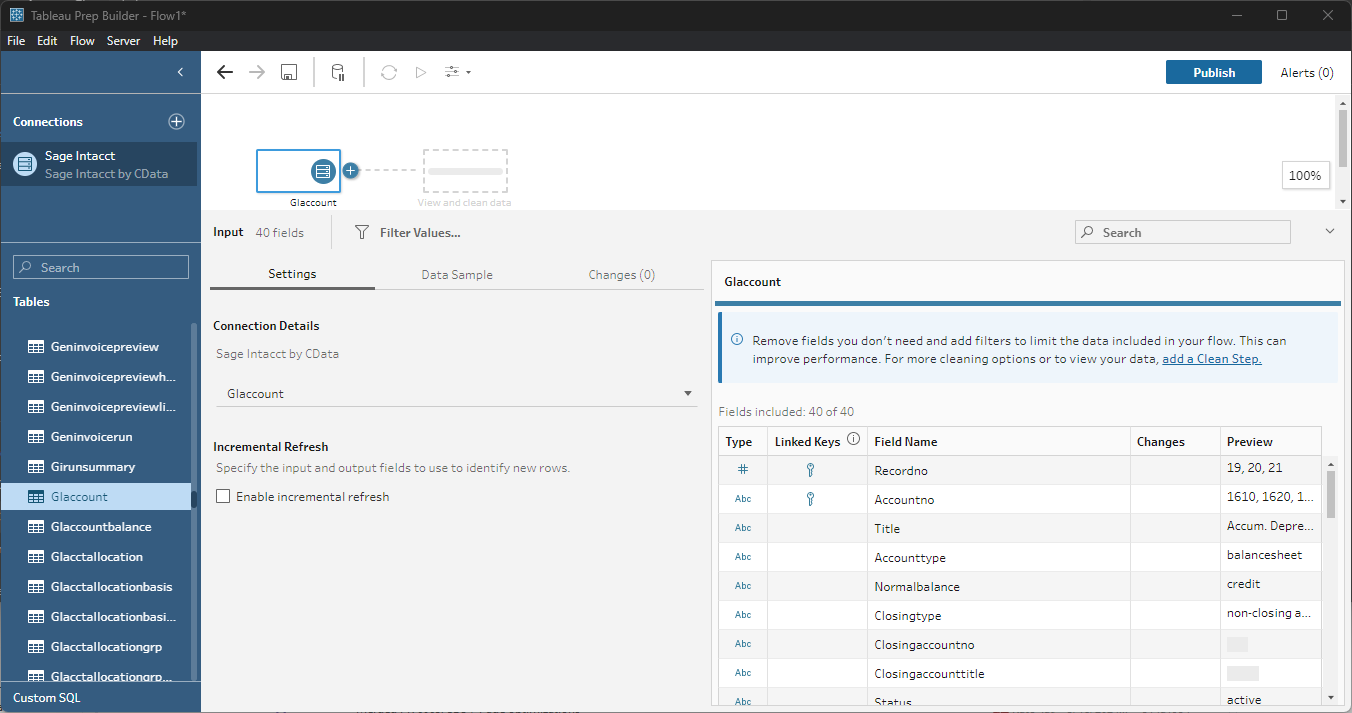

Discover and Prep Data

Drag the tables and views you wish to work with onto the canvas. You can include multiple tables.

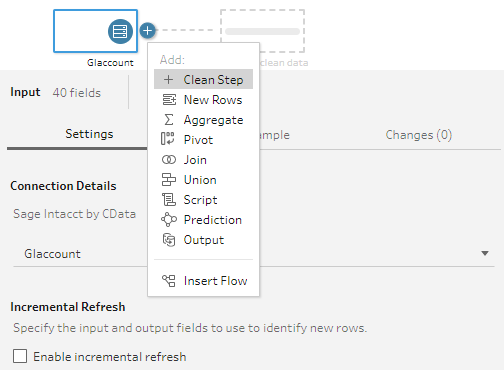

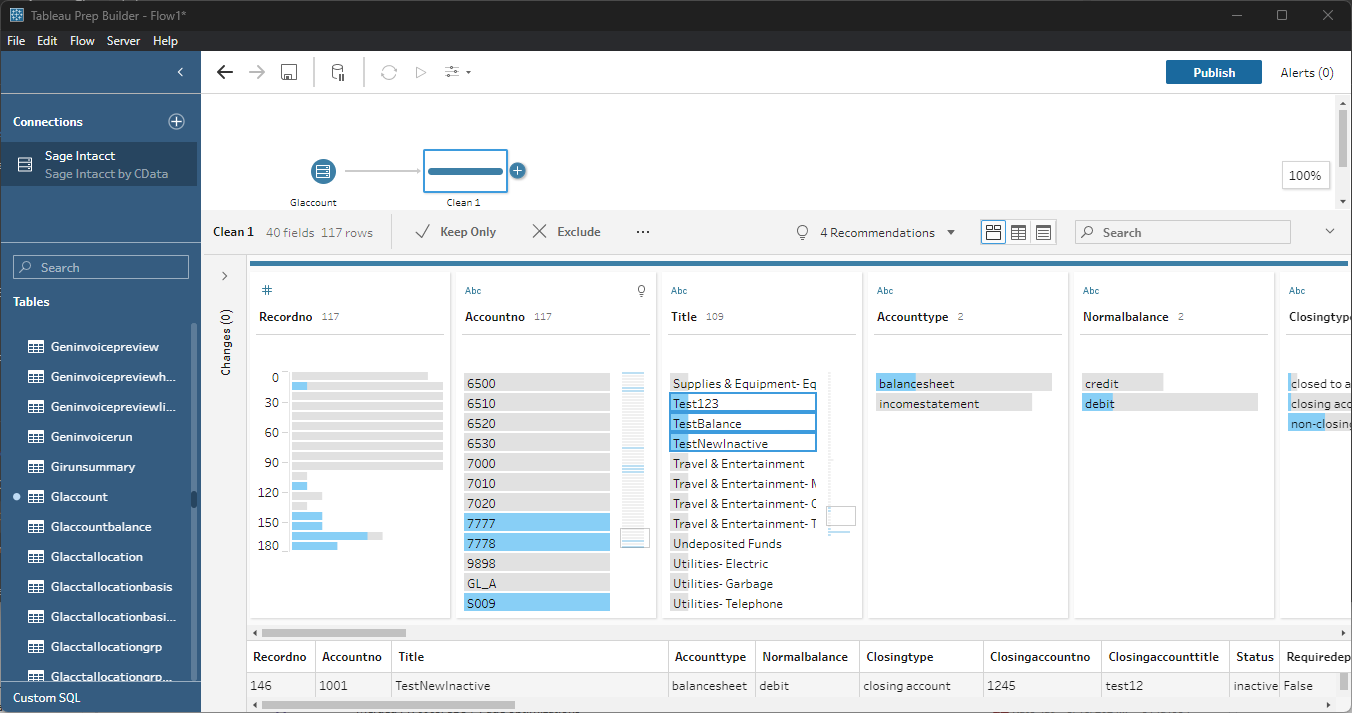

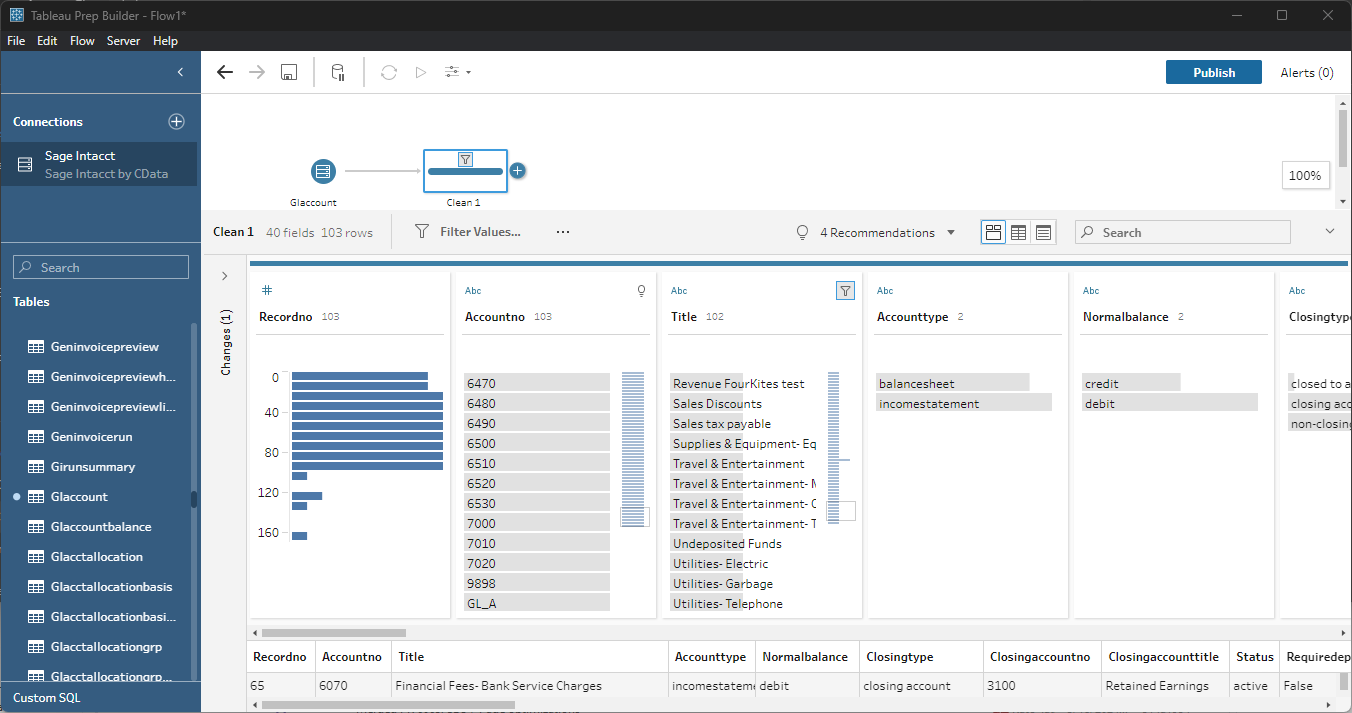

Data Cleansing & Filtering

To further prepare the data, you can implement filters, remove duplicates, modify columns and more.

- Start by clicking on the plus next to your table and selecting the Clean Step option.

- Select the field values to filter by. As you select values, you can see how your selections impact other fields.

- Choose "Keep Only" or "Exclude" for the selected values, and the data updates in response.
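The difference between the two options can be sketched in a few lines of Python. The rows and the `status` field below are made-up sample data, and the functions only mirror the semantics of the Clean step; they are not how Tableau Prep implements it.

```python
def keep_only(rows, field, values):
    """Keep rows whose field value is one of the selected values."""
    selected = set(values)
    return [row for row in rows if row.get(field) in selected]


def exclude(rows, field, values):
    """Drop rows whose field value is one of the selected values."""
    selected = set(values)
    return [row for row in rows if row.get(field) not in selected]


# Hypothetical sample rows standing in for a Lakebase table.
orders = [
    {"order_id": 1, "status": "shipped"},
    {"order_id": 2, "status": "cancelled"},
    {"order_id": 3, "status": "shipped"},
    {"order_id": 4, "status": "pending"},
]

shipped = keep_only(orders, "status", ["shipped"])
not_cancelled = exclude(orders, "status", ["cancelled"])
```

The two results are complementary for the same selection: every row lands in exactly one of `keep_only(...)` and `exclude(...)`.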

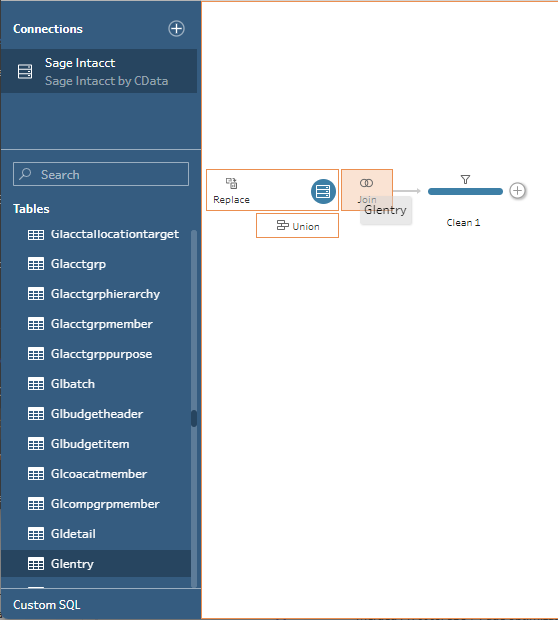

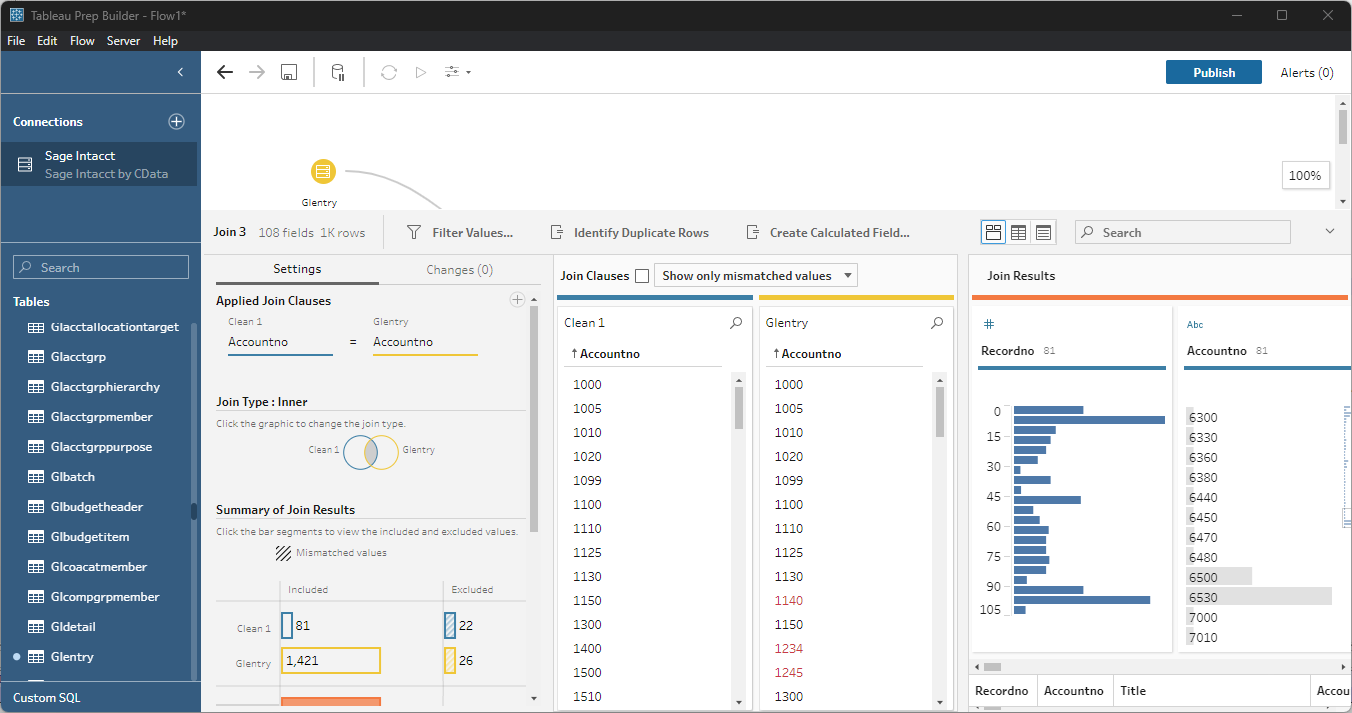

Data Joins and Unions

Data joining involves combining data from two or more related tables based on a common field or key.

- To join multiple tables, drag a related table next to an existing table in the canvas and place it in the Join box.

- Select the key fields that the two tables have in common.
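As a rough picture of what a join on a common key produces, here is a minimal inner join in plain Python. The `customers` and `orders` rows and the `customer_id` key are hypothetical sample data; Tableau Prep performs the join for you, and this sketch only illustrates the row-matching logic.

```python
def inner_join(left, right, key):
    """Combine rows from two tables where the key field matches.

    Rows without a match on the other side are dropped. If both rows
    share a non-key field name, the value from the left row wins.
    """
    # Index the right table by key so each left row is matched quickly.
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)

    joined = []
    for left_row in left:
        for right_row in index.get(left_row[key], []):
            joined.append({**right_row, **left_row})
    return joined


# Hypothetical sample tables.
customers = [
    {"customer_id": 10, "name": "Acme"},
    {"customer_id": 20, "name": "Globex"},
    {"customer_id": 30, "name": "Initech"},
]
orders = [
    {"order_id": 1, "customer_id": 10},
    {"order_id": 2, "customer_id": 10},
    {"order_id": 3, "customer_id": 20},
    {"order_id": 4, "customer_id": 99},
]

customer_orders = inner_join(customers, orders, "customer_id")
```

Here the customer with no orders and the order with an unknown customer both drop out of the result, which is the behavior to expect from an inner join.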

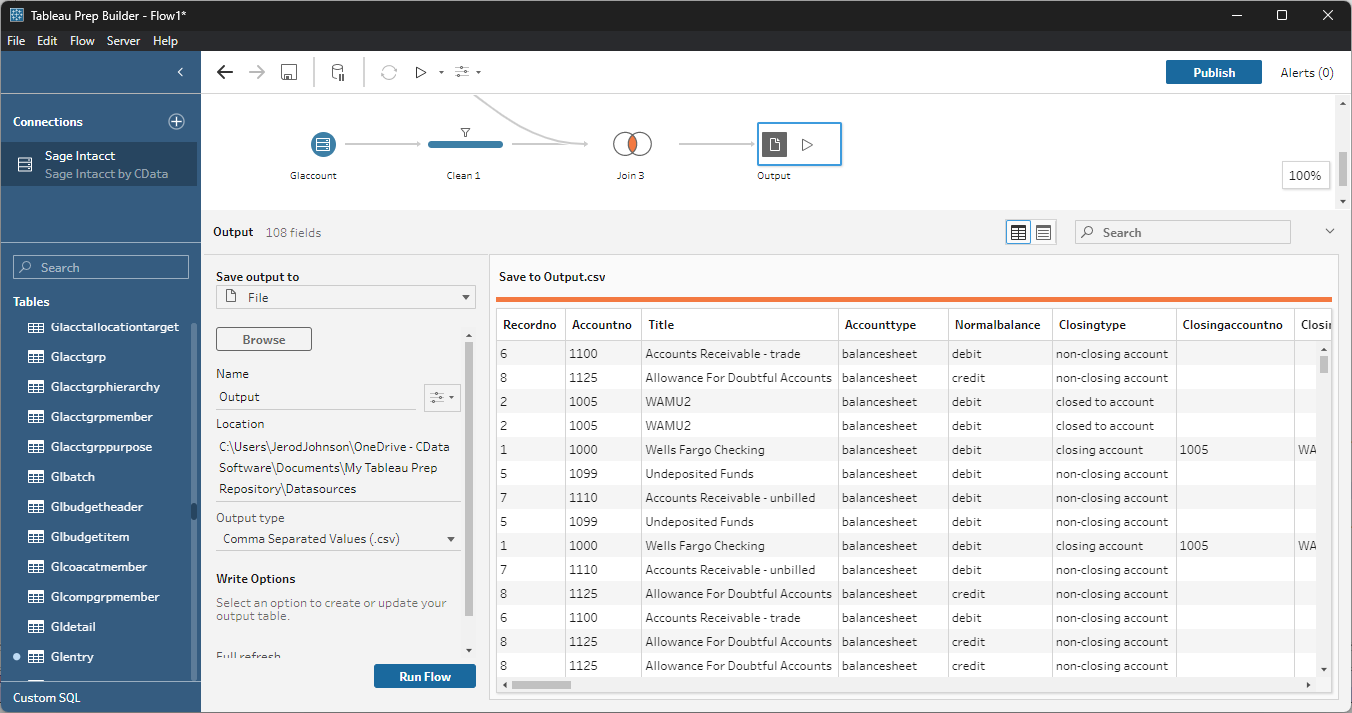

Exporting Prepped Data

After you perform any cleansing, filtering, transformations, and joins, you can export the data for visualization in Tableau.

- Add any other transformations you need, then insert an Output node at the end of the flow.

- Configure the node to save to a file in the format of your choice.

Once the output data is saved, you can work with it in Tableau, just like you would any other file source.

Using the CData Tableau Connector for Lakebase with Tableau Prep Builder, you can easily join, cleanse, filter, and aggregate Lakebase data for visualizations and reports in Tableau. Download a free, 30-day trial and get started today.