Connect and Query Live Amazon Athena Data in Databricks with CData Connect AI

Databricks is a leading cloud-native data and AI platform that unifies data engineering, machine learning, and analytics at scale. Its lakehouse architecture combines the performance of data warehouses with the flexibility of data lakes. Integrating Databricks with CData Connect AI gives organizations live, real-time access to Amazon Athena data without complex ETL pipelines or data duplication, streamlining operations and reducing time to insight.

In this article, we'll walk through how to configure a secure, live connection from Databricks to Amazon Athena using CData Connect AI. Once configured, you'll be able to access Amazon Athena data directly from Databricks notebooks using standard SQL—enabling unified, real-time analytics across your data ecosystem.

About Amazon Athena Data Integration

CData provides the easiest way to access and integrate live data from Amazon Athena. Customers use CData connectivity to:

- Authenticate securely using a variety of methods, including IAM credentials, access keys, and Instance Profiles, catering to diverse security needs and simplifying the authentication process.

- Streamline their setup and quickly resolve issues with detailed error messaging.

- Enhance performance and minimize strain on client resources with server-side query execution.

Users frequently connect Athena to tools like Tableau, Power BI, and Excel for in-depth analysis in the environments they already use.

To learn more about unique Amazon Athena use cases with CData, check out our blog post: https://www.cdata.com/blog/amazon-athena-use-cases.

Getting Started

Overview

Here is an overview of the simple steps:

- Step 1 — Connect and Configure: In CData Connect AI, create a connection to your Amazon Athena source, configure user permissions, and generate a Personal Access Token (PAT).

- Step 2 — Query from Databricks: Install the CData JDBC driver in Databricks, configure your notebook with the connection details, and run SQL queries to access live Amazon Athena data.

Prerequisites

Before you begin, make sure you have the following:

- An AWS account with access to Amazon Athena.

- A CData Connect AI account. You can log in or sign up for a free trial here.

- A Databricks account. Sign up or log in here.

Step 1: Connect and Configure an Amazon Athena Connection in CData Connect AI

1.1 Add a Connection to Amazon Athena

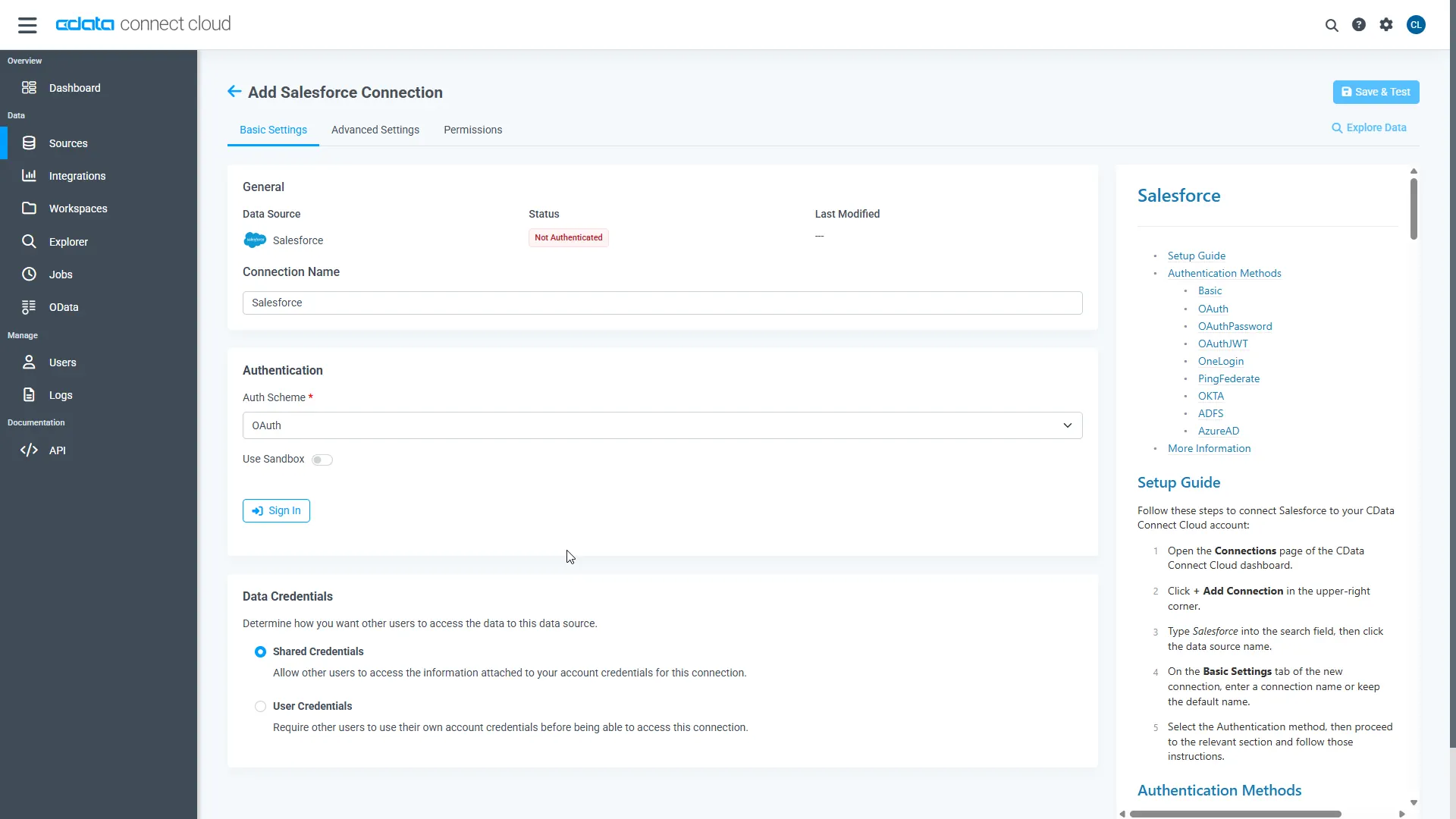

CData Connect AI uses a straightforward, point-and-click interface to connect to available data sources.

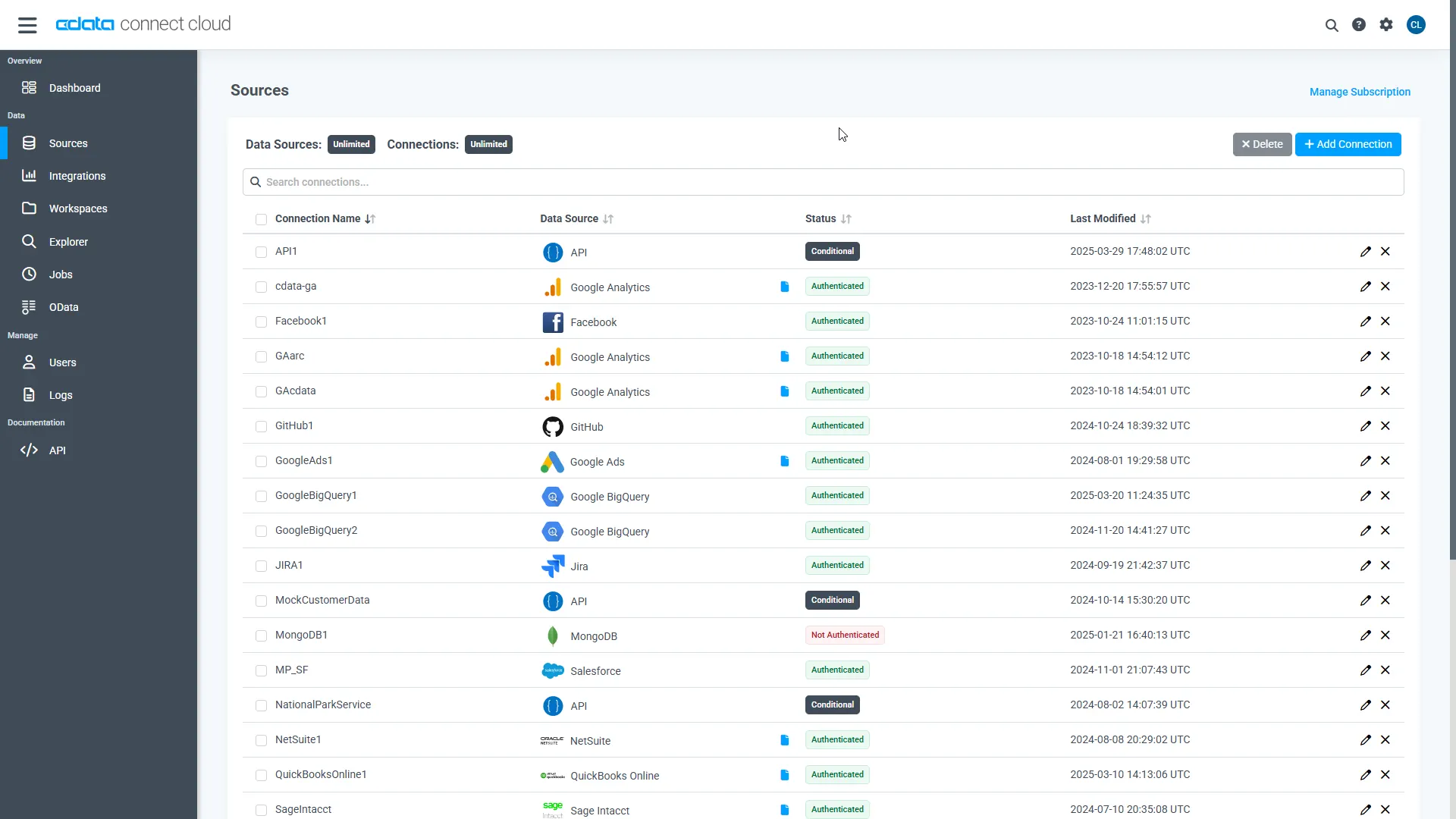

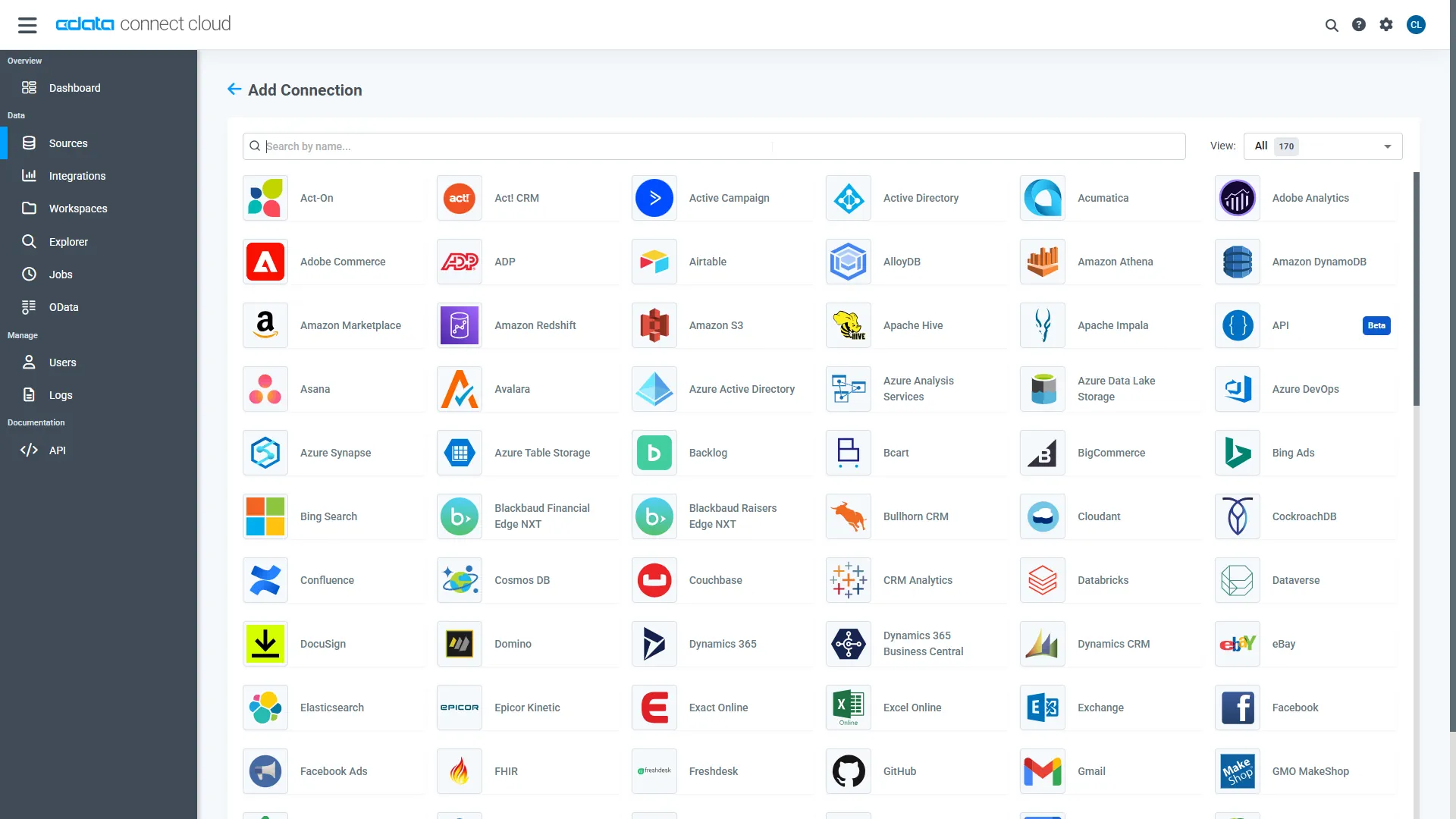

- Log in to Connect AI, click Sources on the left, and then click Add Connection in the top-right.

- Select "Amazon Athena" from the Add Connection panel.

- Enter the necessary authentication properties to connect to Amazon Athena.

Authenticating to Amazon Athena

To authorize Amazon Athena requests, provide the credentials for an administrator account or for an IAM user with custom permissions: set AccessKey to the access key ID and SecretKey to the secret access key.

Note: Though you can connect as the AWS account administrator, it is recommended to use IAM user credentials to access AWS services.

Obtaining the Access Key

To obtain the credentials for an IAM user, follow the steps below:

- Sign into the IAM console.

- In the navigation pane, select Users.

- To create or manage the access keys for a user, select the user and then select the Security Credentials tab.

To obtain the credentials for your AWS root account, follow the steps below:

- Sign into the AWS Management console with the credentials for your root account.

- Select your account name or number and select My Security Credentials in the menu that is displayed.

- Click Continue to Security Credentials and expand the Access Keys section to manage or create root account access keys.

Authenticating from an EC2 Instance

If you are using the data provider from an EC2 instance that has an IAM role assigned, you can use that role to authenticate. To do so, set UseEC2Roles to true and leave AccessKey and SecretKey empty. The data provider automatically obtains the IAM role credentials and authenticates with them.

Authenticating as an AWS Role

In many situations it may be preferable to authenticate with an IAM role instead of the direct security credentials of an AWS root user. To use a role, specify the RoleARN; the data provider then attempts to retrieve credentials for that role. If you are connecting to AWS from outside (rather than from an environment such as an EC2 instance), you must also specify the AccessKey and SecretKey of an IAM user that can assume the role. Roles cannot be used with the AccessKey and SecretKey of an AWS root user.

Authenticating with MFA

For users and roles that require multi-factor authentication (MFA), specify the MFASerialNumber and MFAToken connection properties. The data provider then submits the MFA credentials in a request to retrieve temporary authentication credentials. The lifetime of these temporary credentials is controlled by the TemporaryTokenDuration property (default: 3600 seconds).

Connecting to Amazon Athena

In addition to the AccessKey and SecretKey properties, specify Database, S3StagingDirectory and Region. Set Region to the region where your Amazon Athena data is hosted. Set S3StagingDirectory to a folder in S3 where you would like to store the results of queries.

If Database is not set in the connection, the data provider connects to the default database set in Amazon Athena.

- Click Save & Test in the top-right.

-

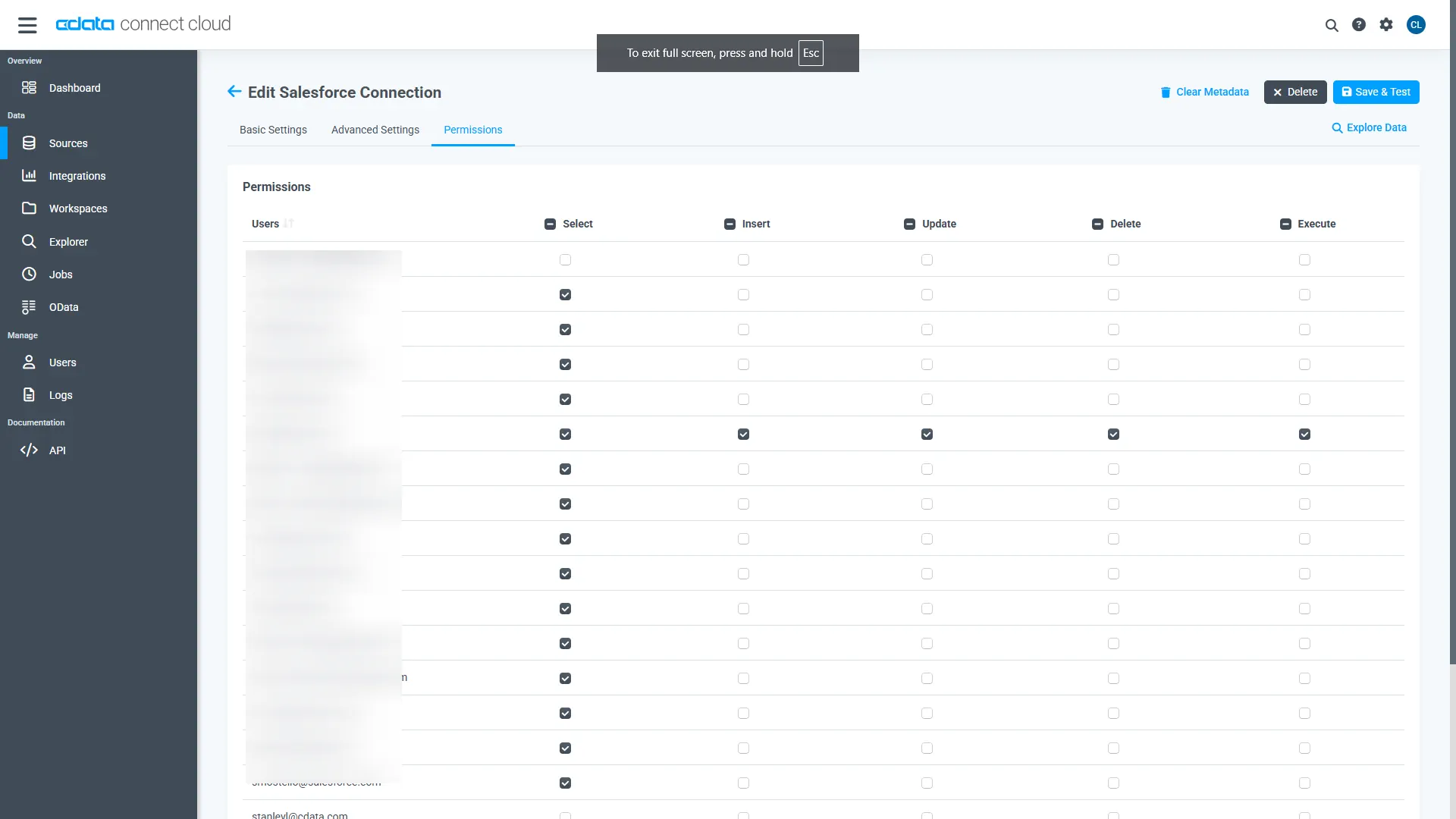

Navigate to the Permissions tab on the Amazon Athena Connection page

and update the user-based permissions based on your preferences.
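The authentication and connection rules described above can be summarized as a small pre-flight check before you click Save & Test. The sketch below is a hypothetical helper, not part of CData Connect AI or its API: it takes the connection properties as a plain Python dict using the property names from this article and reports missing or conflicting settings. The s3:// format check is an assumption about how S3StagingDirectory is usually written.

```python
def check_athena_properties(props: dict) -> list[str]:
    """Return problems found in a dict of Amazon Athena connection properties.

    Hypothetical helper: it encodes the rules described in this article and is
    not validation logic taken from CData Connect AI.
    """
    problems = []
    has_keys = bool(props.get("AccessKey")) and bool(props.get("SecretKey"))
    uses_ec2_role = str(props.get("UseEC2Roles", "false")).lower() == "true"

    # Credentials: an access key pair, or an IAM role attached to an EC2 instance.
    if uses_ec2_role and (props.get("AccessKey") or props.get("SecretKey")):
        problems.append("Leave AccessKey and SecretKey empty when UseEC2Roles is true.")
    elif not uses_ec2_role and not has_keys:
        # RoleARN outside EC2 also needs the keys of an IAM user to assume the role.
        problems.append("Set AccessKey and SecretKey (also needed with RoleARN), "
                        "or set UseEC2Roles to true.")

    # MFA needs both the device serial number and a current token.
    if bool(props.get("MFASerialNumber")) != bool(props.get("MFAToken")):
        problems.append("Set MFASerialNumber and MFAToken together.")

    # Region and S3StagingDirectory are required; Database falls back to the default.
    for name in ("Region", "S3StagingDirectory"):
        if not props.get(name):
            problems.append(f"{name} is required.")

    # Assumed format: an S3 URI such as s3://bucket/prefix/.
    staging = props.get("S3StagingDirectory") or ""
    if staging and not staging.startswith("s3://"):
        problems.append("S3StagingDirectory should look like s3://bucket/prefix/.")
    return problems


# Placeholder values; substitute your own credentials, region, and bucket.
props = {
    "AccessKey": "your_access_key",
    "SecretKey": "your_secret_key",
    "Region": "us-east-1",
    "S3StagingDirectory": "s3://your-bucket/athena-results/",
}
print(check_athena_properties(props))  # []
```

An empty list means the combination matches the rules above; it does not prove the credentials themselves are valid, which only Save & Test can confirm.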

1.2 Generate a Personal Access Token (PAT)

When connecting to Connect AI through the REST API, the OData API, or the Virtual SQL Server, you authenticate with a Personal Access Token (PAT). A PAT is an alternative to your login credentials that provides secure, token-based authentication. As a best practice, create a separate PAT for each service so you can manage access for each one independently.

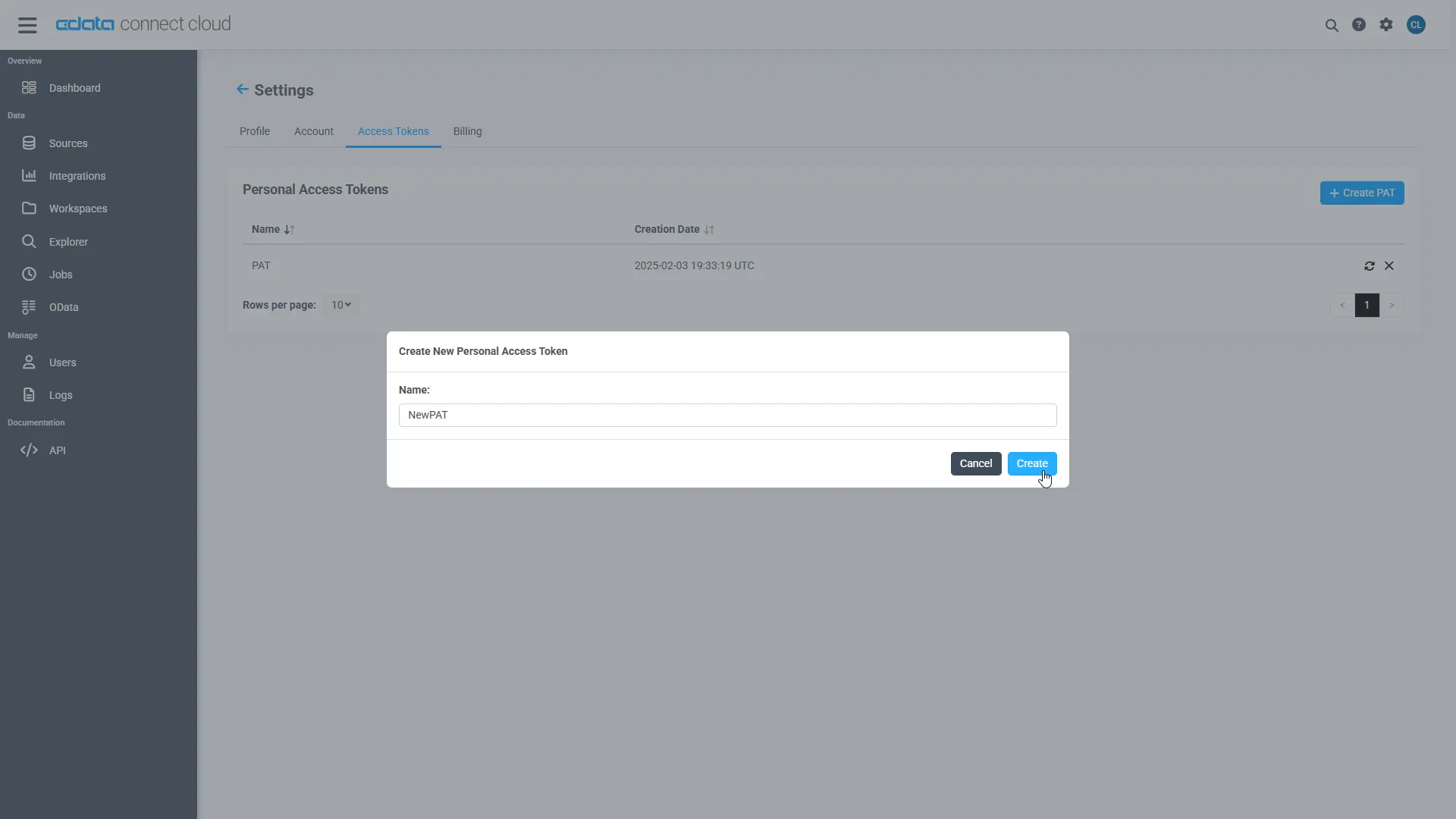

- Click the gear icon at the top right of the Connect AI app to open the Settings page.

- On the Settings page, go to the Access Tokens section and click Create PAT.

- Give the PAT a name and click Create.

- Note: The personal access token is only visible at creation, so be sure to copy it and store it securely for future use.
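Because the PAT stands in for your password, a client typically sends it the same way the JDBC URL in Step 2 does: as the password in HTTP Basic authentication, alongside your username. The sketch below assumes the REST and OData APIs accept that scheme; check the Connect AI API documentation before relying on it. It also reads the credentials from environment variables (CONNECT_AI_USER and CONNECT_AI_PAT are hypothetical names) so the token is never hard-coded.

```python
import base64
import os


def basic_auth_header(username: str, pat: str) -> dict[str, str]:
    """Build an HTTP Basic Authorization header from a username and a PAT."""
    credentials = f"{username}:{pat}".encode("utf-8")
    return {"Authorization": "Basic " + base64.b64encode(credentials).decode("ascii")}


# Hypothetical environment variable names: keep the PAT out of notebooks and scripts.
username = os.environ.get("CONNECT_AI_USER", "your_username")
pat = os.environ.get("CONNECT_AI_PAT", "your_pat")
headers = basic_auth_header(username, pat)
```

Basic authentication only encodes the credentials, it does not encrypt them, so send the header over HTTPS only.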

Step 2: Connect and Query Amazon Athena Data in Databricks

Follow these steps to establish a connection from Databricks to Amazon Athena. You'll install the CData JDBC Driver for Connect AI, add the JAR file to your cluster, configure your notebook, and run SQL queries to access live Amazon Athena data.

2.1 Install the CData JDBC Driver for Connect AI

- In CData Connect AI, click the Integrations page on the left. Search for JDBC or Databricks, click Download, and select the installer for your operating system.

- Once downloaded, run the installer and follow the instructions:

- For Windows: Run the setup file and follow the installation wizard.

- For Mac/Linux: Unpack the archive and move the folder to /opt or /Applications. Make sure you have execute permissions.

- After installation, locate the JAR file in the installation directory:

- Windows:

C:\Program Files\CData\CData JDBC Driver for Connect AI\lib\cdata.jdbc.connect.jar

- Mac/Linux:

/Applications/CData/CData JDBC Driver for Connect AI/lib/cdata.jdbc.connect.jar

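If you script the upload to Databricks, a small helper can check the default locations listed above. This is a minimal sketch: the /opt/CData prefix is an assumption for Linux users who moved the folder to /opt, and the folder name may differ with your installer version.

```python
import platform
from pathlib import Path, PurePath, PurePosixPath, PureWindowsPath
from typing import Optional

JAR_NAME = "cdata.jdbc.connect.jar"
DRIVER_DIR = "CData JDBC Driver for Connect AI"


def candidate_jar_paths(system: Optional[str] = None) -> list[PurePath]:
    """Return likely driver JAR locations for an OS name from platform.system()."""
    system = system or platform.system()
    if system == "Windows":
        return [PureWindowsPath(r"C:\Program Files\CData") / DRIVER_DIR / "lib" / JAR_NAME]
    # Mac/Linux: /Applications as listed above; /opt/CData is an assumed
    # location for Linux installs that were moved to /opt instead.
    return [PurePosixPath(root) / DRIVER_DIR / "lib" / JAR_NAME
            for root in ("/Applications/CData", "/opt/CData")]


def find_driver_jar(system: Optional[str] = None) -> Optional[Path]:
    """Return the first candidate that exists on this machine, or None."""
    for candidate in candidate_jar_paths(system):
        path = Path(str(candidate))
        if path.is_file():
            return path
    return None


print(find_driver_jar() or "JAR not found in the default locations")
```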

2.2 Install the JAR File on Databricks

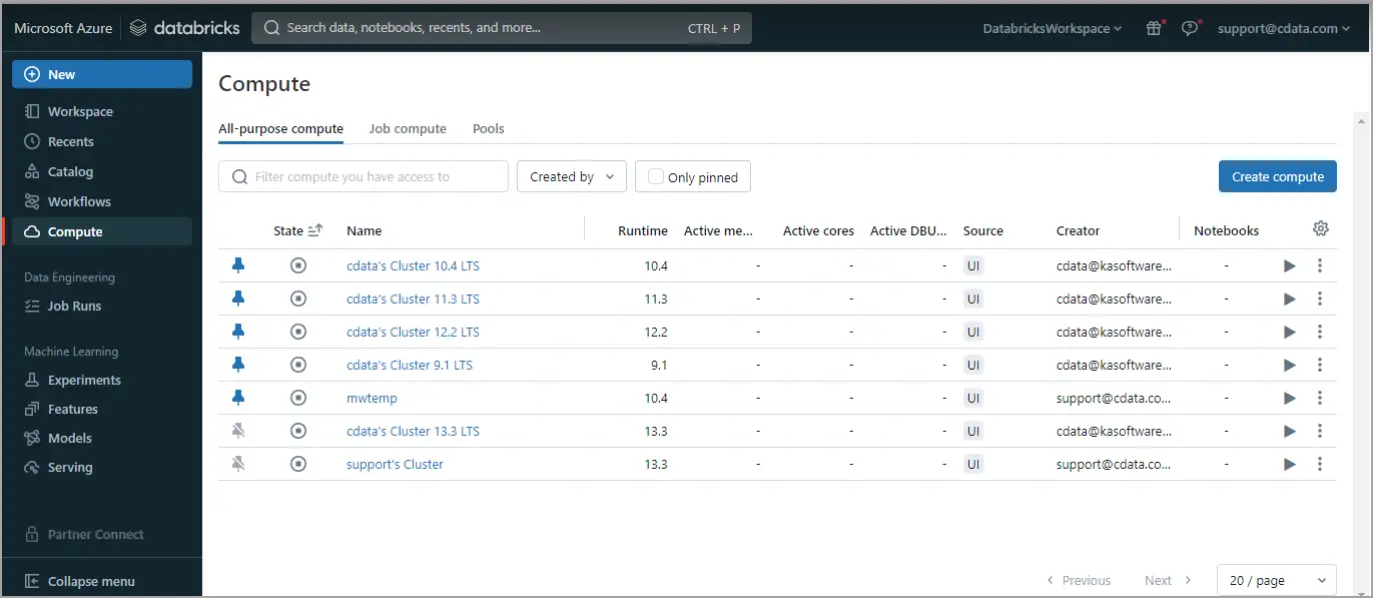

-

Log in to Databricks. In the navigation pane, click Compute on the left. Start or create a compute cluster.

-

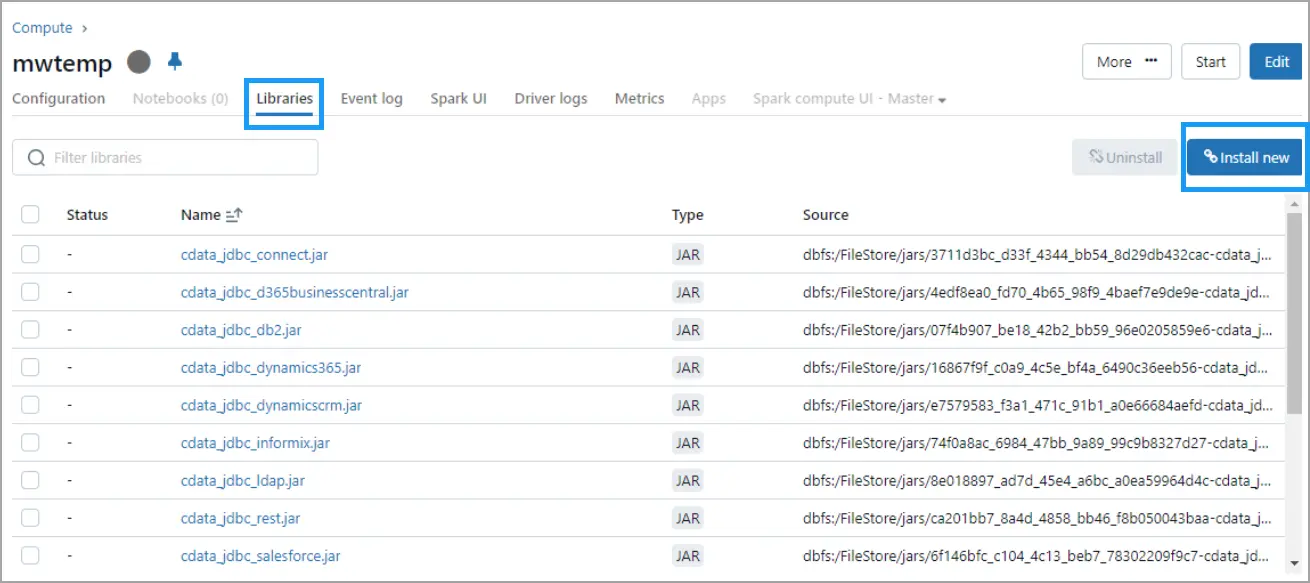

Click on the running cluster, go to the Libraries tab, and click Install New at the top right.

-

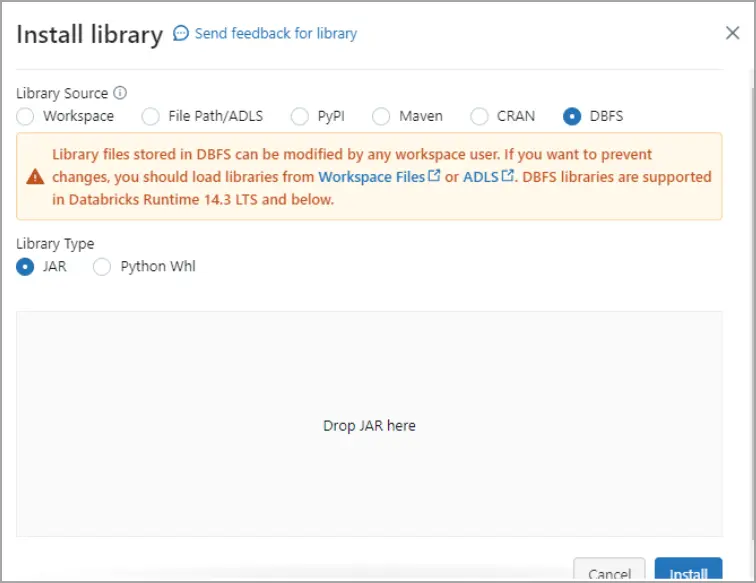

In the Install Library dialog, select DBFS, and drag and drop the

cdata.jdbc.connect.jar file. Click Install.

2.3 Query Amazon Athena Data in a Databricks Notebook

Notebook Script 1 — Define JDBC Connection:

- Paste the following script into the notebook cell:

# Driver class and connection URL for the CData JDBC Driver for Connect AI
driver = "cdata.jdbc.connect.ConnectDriver"
url = "jdbc:connect:AuthScheme=Basic;User=your_username;Password=your_pat;URL=https://cloud.cdata.com/api/;DefaultCatalog=Your_Connection_Name;"

- Replace:

- your_username - With your CData Connect AI username

- your_pat - With your CData Connect AI Personal Access Token (PAT)

- Your_Connection_Name - With the name of your Connect AI data source, from the Sources page

- Run the script.
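Rather than editing the URL by hand, you can assemble it from its parts. The helper below is a hypothetical convenience function that produces the same URL as Script 1; CONNECT_AI_USER and CONNECT_AI_PAT are hypothetical environment variable names. In Databricks, storing the PAT in a secret scope and reading it with dbutils.secrets.get(scope="your_scope", key="your_key") (placeholder names) also keeps it out of the notebook.

```python
import os


def build_connect_jdbc_url(username: str, pat: str, catalog: str,
                           api_url: str = "https://cloud.cdata.com/api/") -> str:
    """Assemble a CData Connect AI JDBC URL from its connection properties."""
    props = {
        "AuthScheme": "Basic",
        "User": username,
        "Password": pat,            # the Personal Access Token
        "URL": api_url,
        "DefaultCatalog": catalog,  # connection name from the Sources page
    }
    # The URL is the jdbc:connect: prefix followed by Name=Value; pairs.
    return "jdbc:connect:" + "".join(f"{name}={value};" for name, value in props.items())


driver = "cdata.jdbc.connect.ConnectDriver"
url = build_connect_jdbc_url(
    os.environ.get("CONNECT_AI_USER", "your_username"),
    os.environ.get("CONNECT_AI_PAT", "your_pat"),
    "Your_Connection_Name",
)
```

This sketch does not escape values that contain semicolons.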

Notebook Script 2 — Load DataFrame from Amazon Athena data:

- Add a new cell for this second script. From the menu on the right side of your notebook, click Add cell below.

- Paste the following script into the new cell:

remote_table = spark.read.format("jdbc") \
    .option("driver", "cdata.jdbc.connect.ConnectDriver") \
    .option("url", "jdbc:connect:AuthScheme=Basic;User=your_username;Password=your_pat;URL=https://cloud.cdata.com/api/;DefaultCatalog=Your_Connection_Name;") \
    .option("dbtable", "YOUR_SCHEMA.YOUR_TABLE") \
    .load()

- Replace:

- your_username - With your CData Connect AI username

- your_pat - With your CData Connect AI Personal Access Token (PAT)

- Your_Connection_Name - With the name of your Connect AI data source, from the Sources page

- YOUR_SCHEMA.YOUR_TABLE - With your schema and table, for example, AmazonAthena.Customers

- Run the script.
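Script 2 reads an entire table through the dbtable option. Spark's JDBC data source also accepts a query option (but not both at once), which lets you send a filtered SELECT through the driver instead of loading every row. The helper below is a hypothetical sketch that builds either set of options; the table and column names reuse this article's examples, and it assumes the driver accepts the subquery Spark wraps around a query.

```python
from typing import Optional


def jdbc_read_options(url: str, dbtable: Optional[str] = None,
                      query: Optional[str] = None,
                      driver: str = "cdata.jdbc.connect.ConnectDriver") -> dict[str, str]:
    """Build options for spark.read.format("jdbc").options(**opts).load()."""
    # Spark's JDBC source rejects dbtable and query together, so require exactly one.
    if (dbtable is None) == (query is None):
        raise ValueError("Specify exactly one of dbtable or query.")
    opts = {"driver": driver, "url": url}
    if dbtable is not None:
        opts["dbtable"] = dbtable
    else:
        opts["query"] = query
    return opts


url = "jdbc:connect:AuthScheme=Basic;User=your_username;Password=your_pat;URL=https://cloud.cdata.com/api/;DefaultCatalog=Your_Connection_Name;"
opts = jdbc_read_options(
    url,
    query="SELECT Name, TotalDue FROM AmazonAthena.Customers WHERE TotalDue > 100",
)
# In a Databricks notebook, where `spark` is predefined:
# filtered = spark.read.format("jdbc").options(**opts).load()
```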

Notebook Script 3 — Preview Columns:

- Similarly, add a new cell for this third script.

- Paste the following script into the new cell:

display(remote_table.select("ColumnName1", "ColumnName2"))

- Replace ColumnName1 and ColumnName2 with actual column names from your Amazon Athena table (for example, Name or TotalDue).

- Run the script.

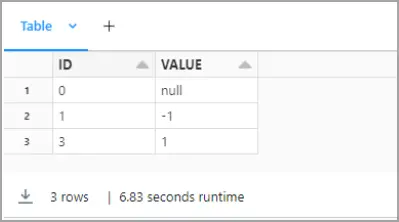

You can now explore, join, and analyze live Amazon Athena data directly within Databricks notebooks—without needing to know the complexities of the back-end API and without replicating Amazon Athena data.

Try CData Connect AI Free for 14 Days

Ready to simplify real-time access to Amazon Athena data? Start your free 14-day trial of CData Connect AI today and experience seamless, live connectivity from Databricks to Amazon Athena.

Low code, zero infrastructure, zero replication — just seamless, secure access to your most critical data and insights.