Integrating LangChain with Databricks Data via CData Connect AI

LangChain is a framework used by developers, data engineers, and AI practitioners for building AI-powered applications and workflows by combining reasoning models (LLMs), tools, APIs, and data connectors. By integrating LangChain with CData Connect AI through the built-in MCP Server, workflows can effortlessly access and interact with live Databricks data in real time.

CData Connect AI offers a secure, low-code environment to connect Databricks and other data sources, removing the need for complex ETL and enabling seamless automation across business applications with live data.

This article outlines how to configure Databricks connectivity in CData Connect AI, register the MCP server with LangChain, and build a workflow that queries Databricks data in real time.

Prerequisites

- An account in CData Connect AI

- Python version 3.10 or higher, to install the LangChain and LangGraph packages

- An OpenAI API key, generated and saved

- Visual Studio Code installed on your system

About Databricks Data Integration

Accessing and integrating live data from Databricks has never been easier with CData. Customers rely on CData connectivity to:

- Access all versions of Databricks from Runtime Versions 9.1 - 13.X to both the Pro and Classic Databricks SQL versions.

- Leave Databricks in their preferred environment thanks to compatibility with any hosting solution.

- Securely authenticate in a variety of ways, including personal access token, Azure Service Principal, and Azure AD.

- Upload data to Databricks using Databricks File System, Azure Blob Storage, and AWS S3 Storage.

While many customers use CData's solutions to migrate data from different systems into their Databricks data lakehouse, several use our live connectivity solutions to federate connectivity between their databases and Databricks. These customers use SQL Server Linked Servers or PolyBase to get live access to Databricks from within their existing RDBMS.

Read more about common Databricks use-cases and how CData's solutions help solve data problems in our blog: What is Databricks Used For? 6 Use Cases.

Getting Started

Step 1: Configure Databricks Connectivity for LangChain

Before LangChain can access Databricks, a Databricks connection must be created in CData Connect AI. This connection is then exposed to LangChain through the remote MCP server.

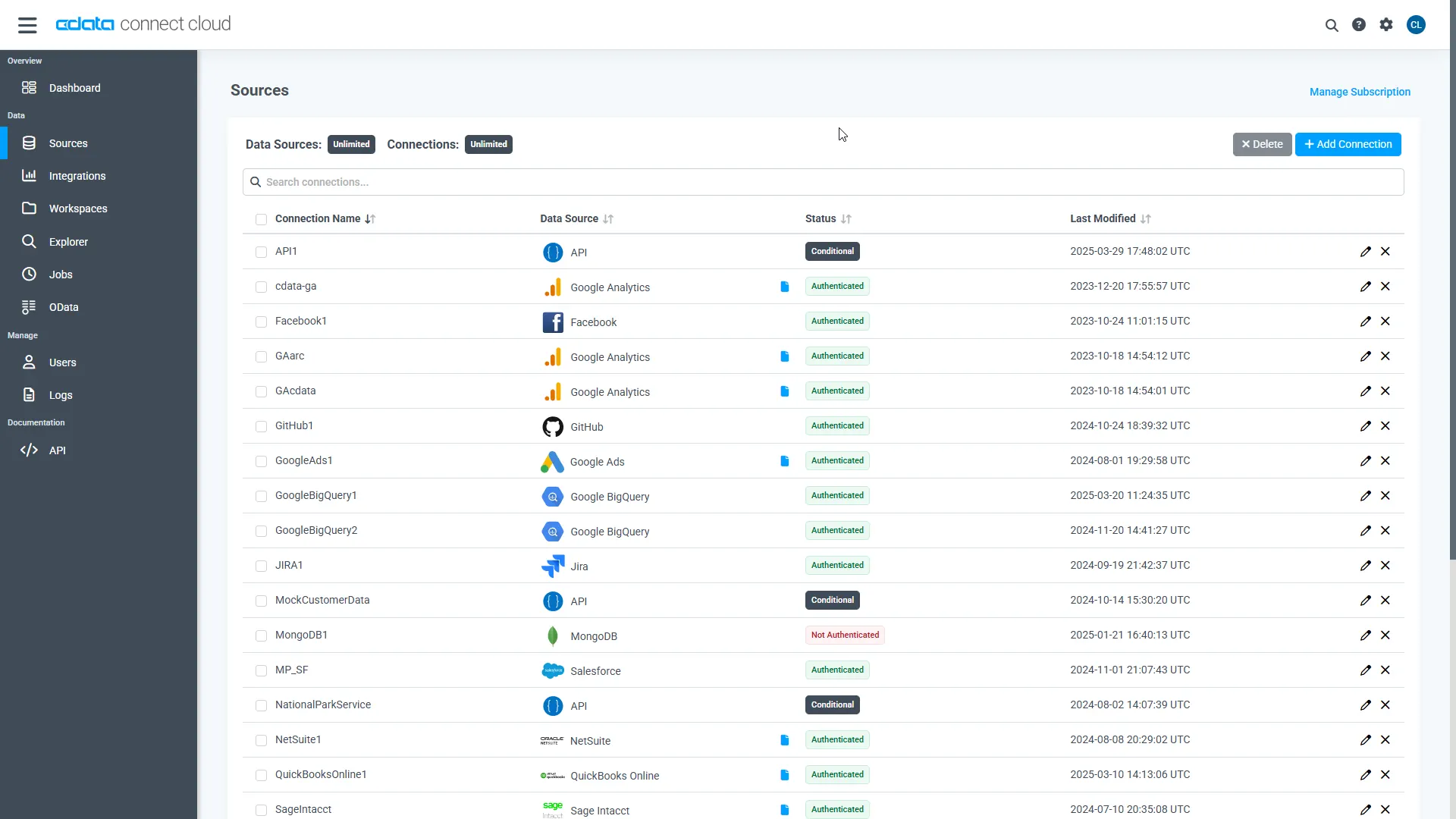

- Log in to Connect AI, click Sources, and then click + Add Connection

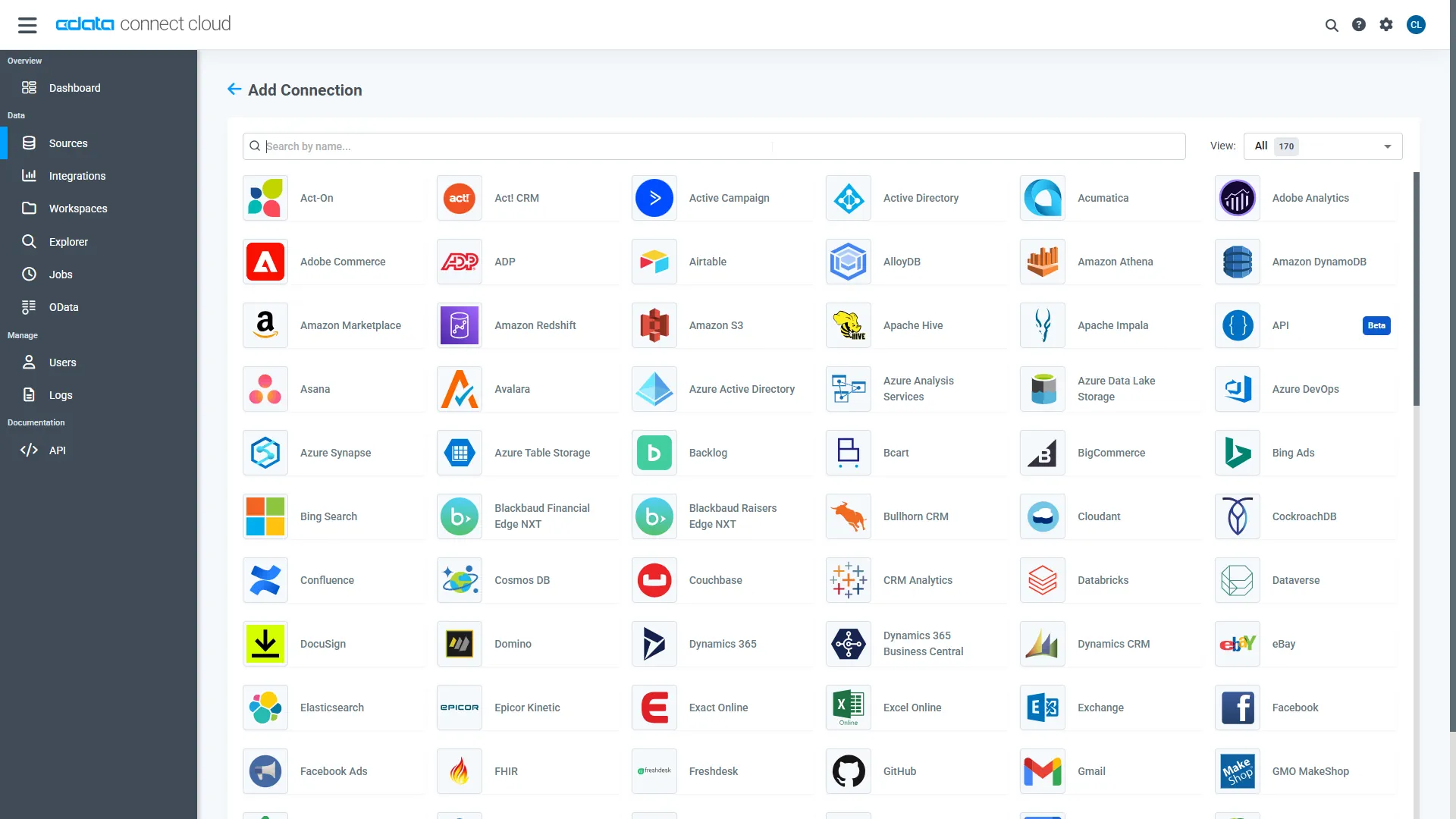

- From the available data sources, choose Databricks

-

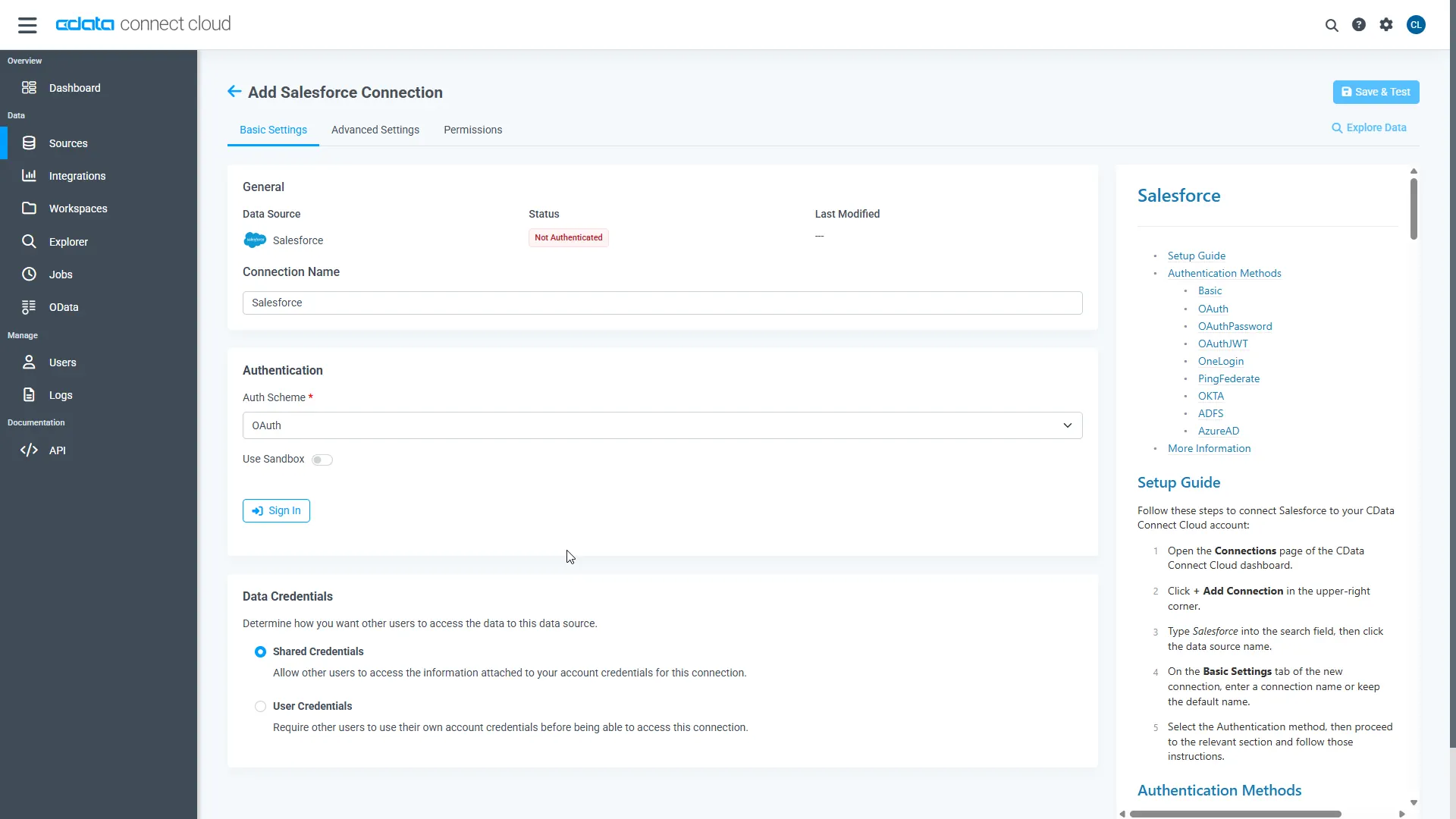

Enter the necessary authentication properties to connect to Databricks

To connect to a Databricks cluster, set the properties as described below.

Note: The needed values can be found in your Databricks instance by navigating to Clusters, selecting the desired cluster, and opening the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).

- Click Save & Test

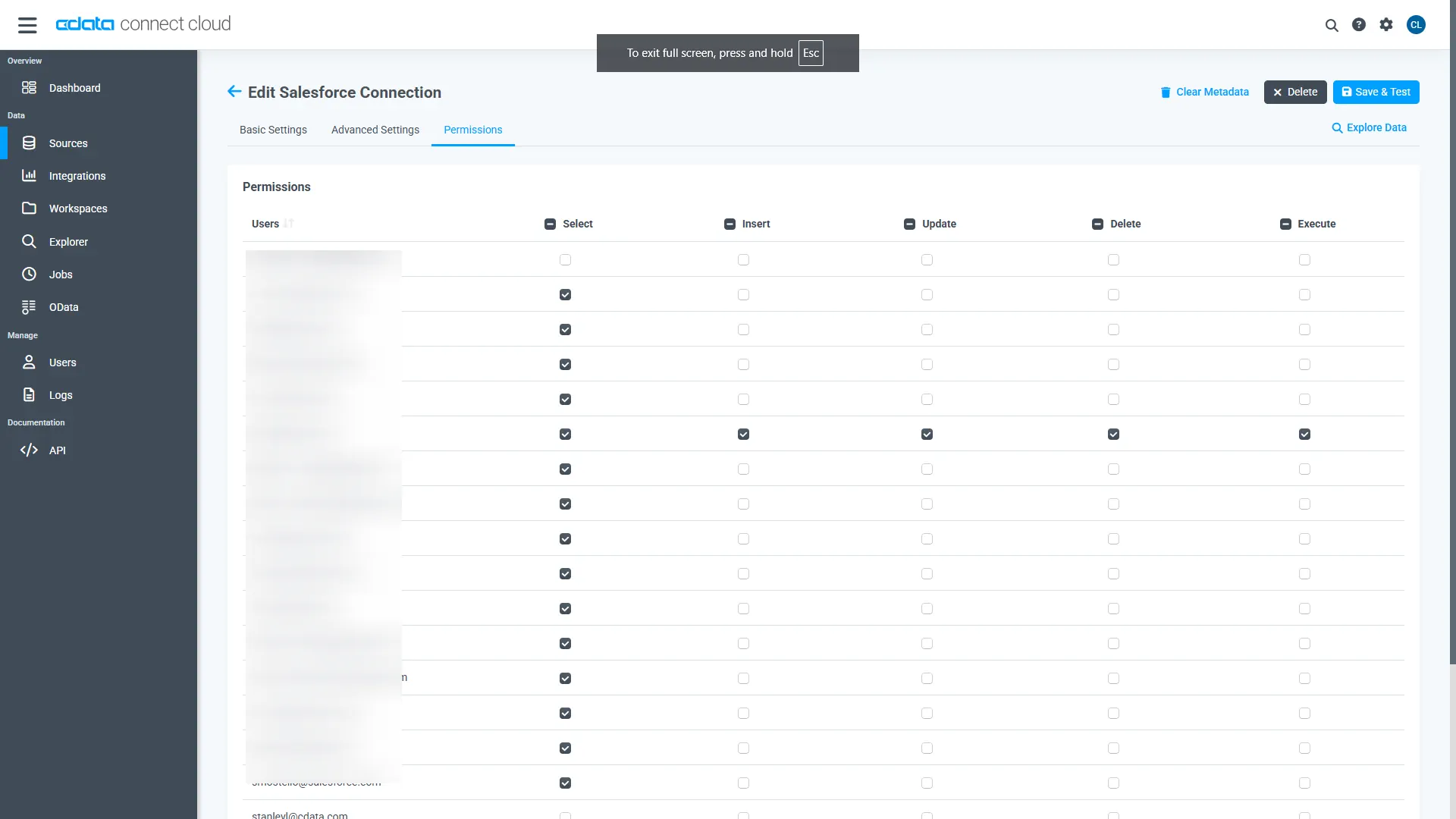

- Once authenticated, open the Permissions tab in the Databricks connection and configure user-based permissions as required

Generate a Personal Access Token (PAT)

LangChain authenticates to Connect AI using an account email and a Personal Access Token (PAT). Creating separate PATs for each integration is recommended to maintain access control granularity.

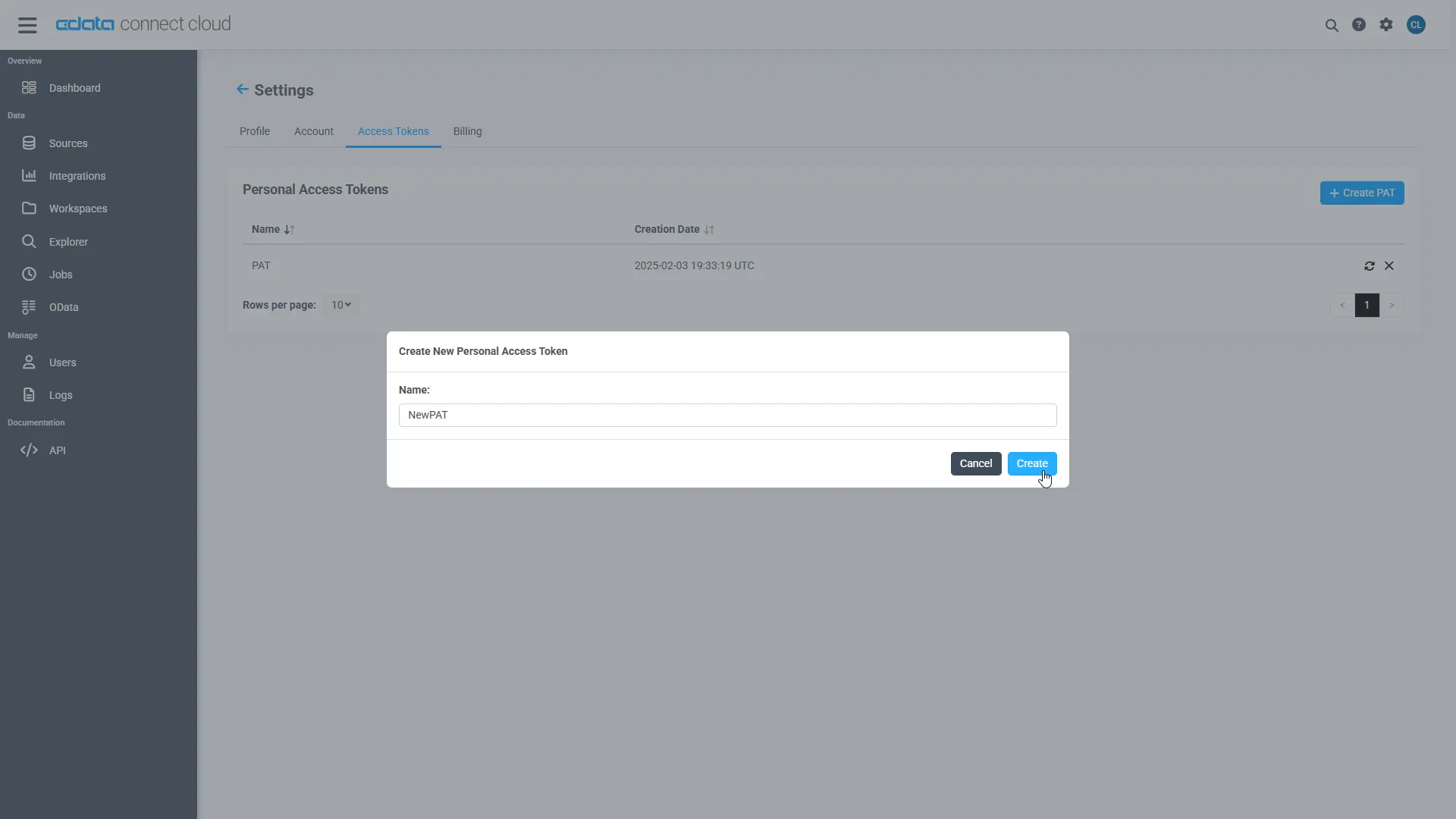

- In Connect AI, select the Gear icon in the top-right to open Settings

- Under Access Tokens, select Create PAT

- Provide a descriptive name for the token and select Create

- Copy the token and store it securely. The PAT will only be visible during creation

With the Databricks connection configured and a PAT generated, LangChain is prepared to connect to Databricks data through the CData MCP server.

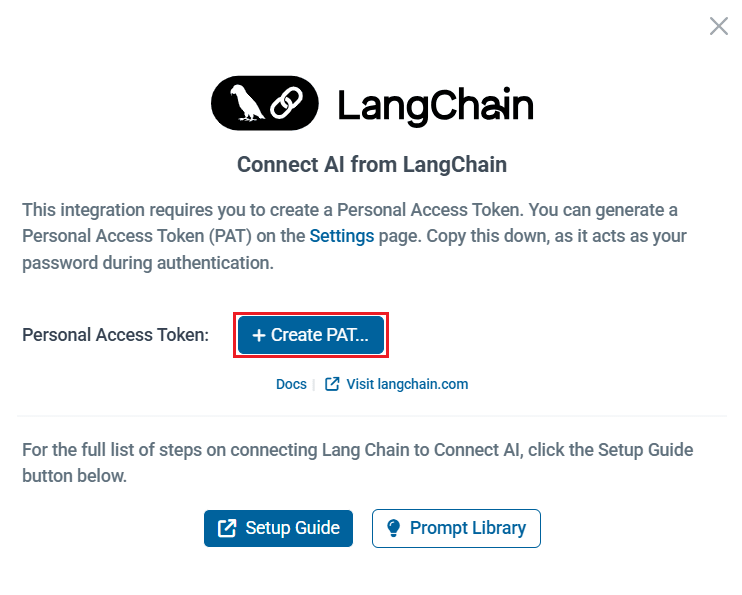

Note: You can also generate a PAT from LangChain in the Integrations section of Connect AI. Simply click Connect --> Create PAT to generate it.

Step 2: Connect to the MCP server in LangChain

To connect LangChain to the CData Connect AI Remote MCP Server and use OpenAI (ChatGPT) for reasoning, configure your MCP server endpoint and authentication values in a config.py file. These values allow LangChain to call the MCP server tools, while OpenAI handles the natural language reasoning.

- Create a folder for LangChain MCP

- Create two Python files within the folder: config.py and langchain.py

- In config.py, create a class Config to define your MCP server URL and authentication. Provide the Base64-encoded form of your CData Connect AI email and PAT (generated in Step 1), joined as EMAIL:PAT:

    class Config:
        MCP_BASE_URL = "https://mcp.cloud.cdata.com/mcp"  # MCP Server URL
        MCP_AUTH = "base64encoded(EMAIL:PAT)"  # Base64 encoded Connect AI Email:PAT

Note: You can create the base64 encoded version of MCP_AUTH using any Base64 encoding tool.
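As one alternative to a standalone encoding tool, the MCP_AUTH value can be produced with Python's standard base64 module. The following is a minimal sketch; the function name encode_basic_auth and the sample email and token are placeholders for illustration, not values issued by Connect AI.

```python
import base64


def encode_basic_auth(email: str, pat: str) -> str:
    """Return the Base64 encoding of "email:pat", suitable for MCP_AUTH."""
    raw = f"{email}:{pat}".encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


if __name__ == "__main__":
    # Placeholder credentials; substitute your Connect AI email and PAT.
    print(encode_basic_auth("user@example.com", "YOUR_PAT"))
```

The printed string is what would be pasted into MCP_AUTH in config.py. Treat it like a password: Base64 is an encoding, not encryption, and can be decoded by anyone who sees it.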

- In langchain.py, set up your MCP server and MCP client to call the tools and prompts:

    """
    Integrates a LangChain ReAct agent with CData Connect AI MCP server.
    The script demonstrates fetching, filtering, and using tools with an LLM for agent-based reasoning.
    """
    import asyncio

    from langchain_mcp_adapters.client import MultiServerMCPClient
    from langchain_openai import ChatOpenAI
    from langgraph.prebuilt import create_react_agent

    from config import Config


    async def main():
        # Initialize MCP client with one or more server URLs
        mcp_client = MultiServerMCPClient(
            connections={
                "default": {  # you can name this anything
                    "transport": "streamable_http",
                    "url": Config.MCP_BASE_URL,
                    "headers": {"Authorization": f"Basic {Config.MCP_AUTH}"},
                }
            }
        )

        # Load remote MCP tools exposed by the server
        all_mcp_tools = await mcp_client.get_tools()
        print("Discovered MCP tools:", [tool.name for tool in all_mcp_tools])

        # Create and run the ReAct style agent
        llm = ChatOpenAI(
            model="gpt-4o",
            temperature=0.2,
            api_key="YOUR_OPEN_API_KEY",  # Use your OpenAI API Key here, this can be found here: https://platform.openai.com/
        )
        agent = create_react_agent(llm, all_mcp_tools)

        user_prompt = "How many tables are available in Databricks1?"  # Change prompts as per need
        print(f" User prompt: {user_prompt}")

        # Send a prompt asking the agent to use the MCP tools
        response = await agent.ainvoke(
            {"messages": [{"role": "user", "content": user_prompt}]}
        )

        # Print out the agent's final response
        final_msg = response["messages"][-1].content
        print("Agent final response:", final_msg)


    if __name__ == "__main__":
        asyncio.run(main())
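Hardcoding the OpenAI API key in langchain.py is convenient for a first run, but keeping secrets out of source files is usually safer. The sketch below shows one way to do that, assuming the key has been exported in an environment variable named OPENAI_API_KEY. The helper name get_openai_api_key is hypothetical and not part of the original script.

```python
import os


def get_openai_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the OpenAI API key from the environment, failing fast if it is unset."""
    # OPENAI_API_KEY is an assumed variable name; use whatever name you exported.
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable before running the script."
        )
    return key
```

The returned value could then be passed as api_key=get_openai_api_key() when constructing ChatOpenAI, in place of the literal placeholder string.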

Step 3: Install the LangChain and LangGraph packages

Since this workflow uses LangChain together with CData Connect AI MCP and integrates OpenAI for reasoning, you need to install the required Python packages.

Run the following command in your project terminal:

pip install langchain-mcp-adapters langchain-openai langgraph
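To confirm the environment before running the script, a quick check along these lines may help. It is a sketch that assumes the three packages are importable under the names langchain_mcp_adapters, langchain_openai, and langgraph (the names used by the import statements in langchain.py). The helper missing_modules is hypothetical.

```python
import importlib.util
import sys

# Import names assumed from the import statements in langchain.py.
REQUIRED_MODULES = ("langchain_mcp_adapters", "langchain_openai", "langgraph")


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level module names that this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # The prerequisites call for Python 3.10 or higher.
    if sys.version_info < (3, 10):
        print("Python 3.10 or higher is required.")
    missing = missing_modules()
    print("Missing packages:", missing if missing else "none")
```

If any names are reported as missing, re-run the pip command in the same virtual environment that will run the script.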

Step 4: Prompt Databricks using LangChain (via the MCP server)

- When the installation finishes, run python langchain.py to execute the script

- The script connects to the MCP server and discovers the CData Connect AI MCP tools available for querying your connected data

- The script sends the prompt stored in the user_prompt variable (e.g., "How many tables are available in Databricks?"); edit this variable to ask a different question

- The agent responds with the results
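The script hands every discovered tool to the agent. If only a subset is wanted, the list can be narrowed by name before the agent is created. The following is a minimal sketch, assuming each tool object exposes a name attribute (which the script's discovery print statement already relies on). The helper select_tools and the tool names in the usage comment are hypothetical; use the names printed by your own run.

```python
from typing import Any, Iterable, List


def select_tools(tools: Iterable[Any], allowed_names: Iterable[str]) -> List[Any]:
    """Keep only the tools whose .name is in allowed_names, preserving order."""
    allowed = set(allowed_names)
    return [tool for tool in tools if tool.name in allowed]


# Hypothetical usage inside main(), after get_tools():
#   tools = select_tools(all_mcp_tools, {"toolA", "toolB"})
#   agent = create_react_agent(llm, tools)
```

Restricting the tool list keeps the agent's choices focused on the operations a given workflow actually needs.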

Get CData Connect AI

To get live data access to 300+ SaaS, Big Data, and NoSQL sources directly from your cloud applications, try CData Connect AI today!