How to Connect to Live Databricks Data from OpenAI Python Applications (via CData Connect AI)

OpenAI's Python SDK provides powerful capabilities for building AI applications that can interact with various data sources. When combined with CData Connect AI Remote MCP, you can build intelligent chat applications that interact with your Databricks data in real time through natural language queries. This article outlines the process of connecting to Databricks using Connect AI Remote MCP and configuring an OpenAI-powered Python application to interact with your Databricks data through conversational AI.

CData Connect AI offers a dedicated cloud-to-cloud interface for connecting to Databricks data. The CData Connect AI Remote MCP Server enables secure communication between OpenAI applications and Databricks, allowing your AI assistants to read from and take actions on your live Databricks data. Connect AI pushes all supported SQL operations, including filters and JOINs, down to Databricks, so the work is processed server-side and the requested Databricks data is returned quickly.

In this article, we show how to configure an OpenAI-powered Python application to conversationally explore (or Vibe Query) your data using natural language. With Connect AI you can build AI assistants with access to live Databricks data, plus hundreds of other sources.

About Databricks Data Integration

Accessing and integrating live data from Databricks has never been easier with CData. Customers rely on CData connectivity to:

- Access Databricks Runtime versions 9.1 through 13.X, as well as both the Pro and Classic Databricks SQL versions.

- Leave Databricks in their preferred environment thanks to compatibility with any hosting solution.

- Authenticate securely in a variety of ways, including personal access token, Azure Service Principal, and Azure AD.

- Upload data to Databricks using Databricks File System, Azure Blob Storage, and AWS S3 Storage.

While many customers use CData's solutions to migrate data from different systems into their Databricks data lakehouse, several customers use our live connectivity solutions to federate connectivity between their databases and Databricks. These customers use SQL Server Linked Servers or PolyBase to get live access to Databricks from within their existing RDBMSs.

Read more about common Databricks use-cases and how CData's solutions help solve data problems in our blog: What is Databricks Used For? 6 Use Cases.

Getting Started

Step 1: Configure Databricks Connectivity for OpenAI Applications

Connectivity to Databricks from OpenAI applications is made possible through CData Connect AI Remote MCP. To interact with Databricks data from your OpenAI assistant, we start by creating and configuring a Databricks connection in CData Connect AI.

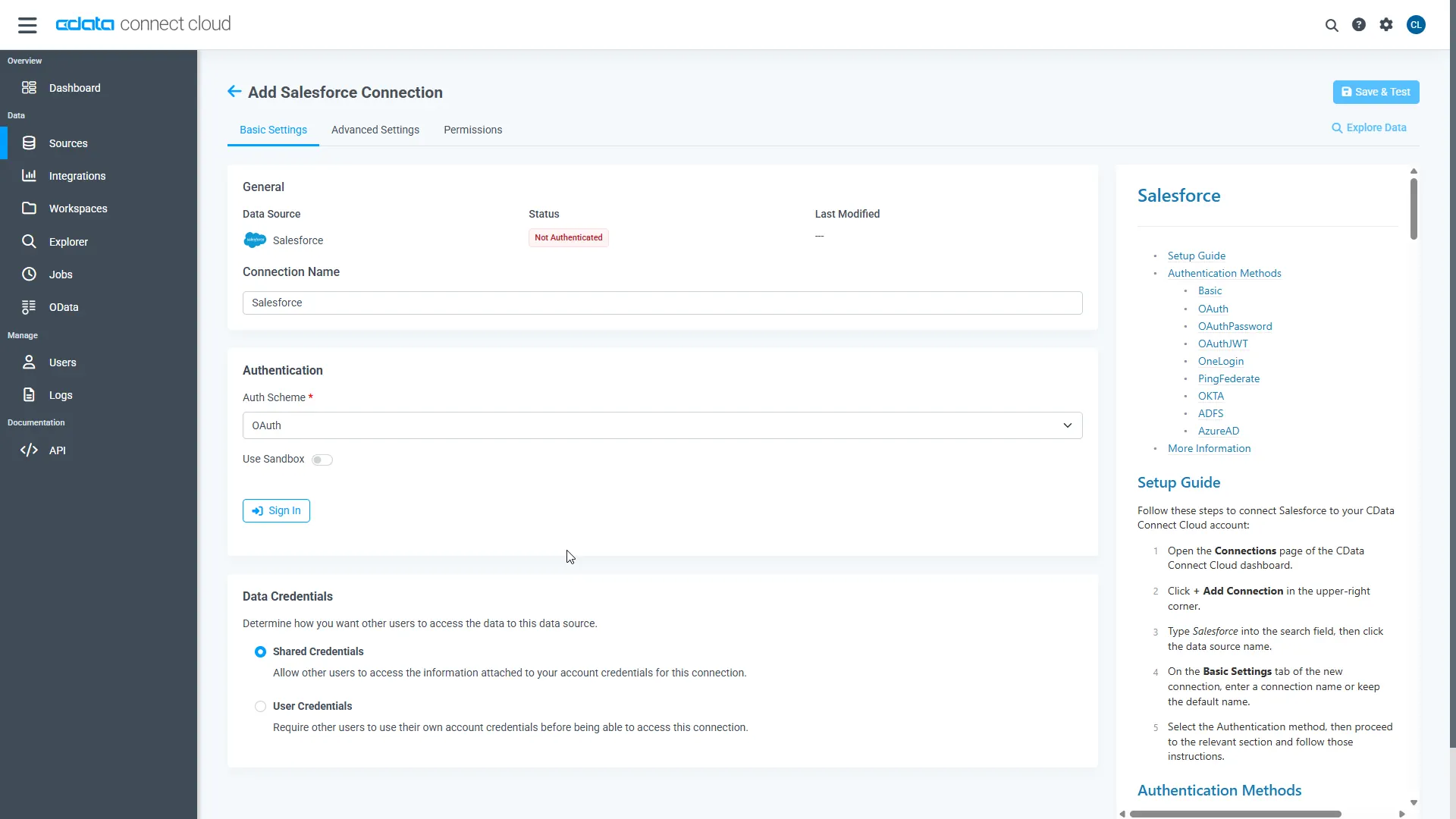

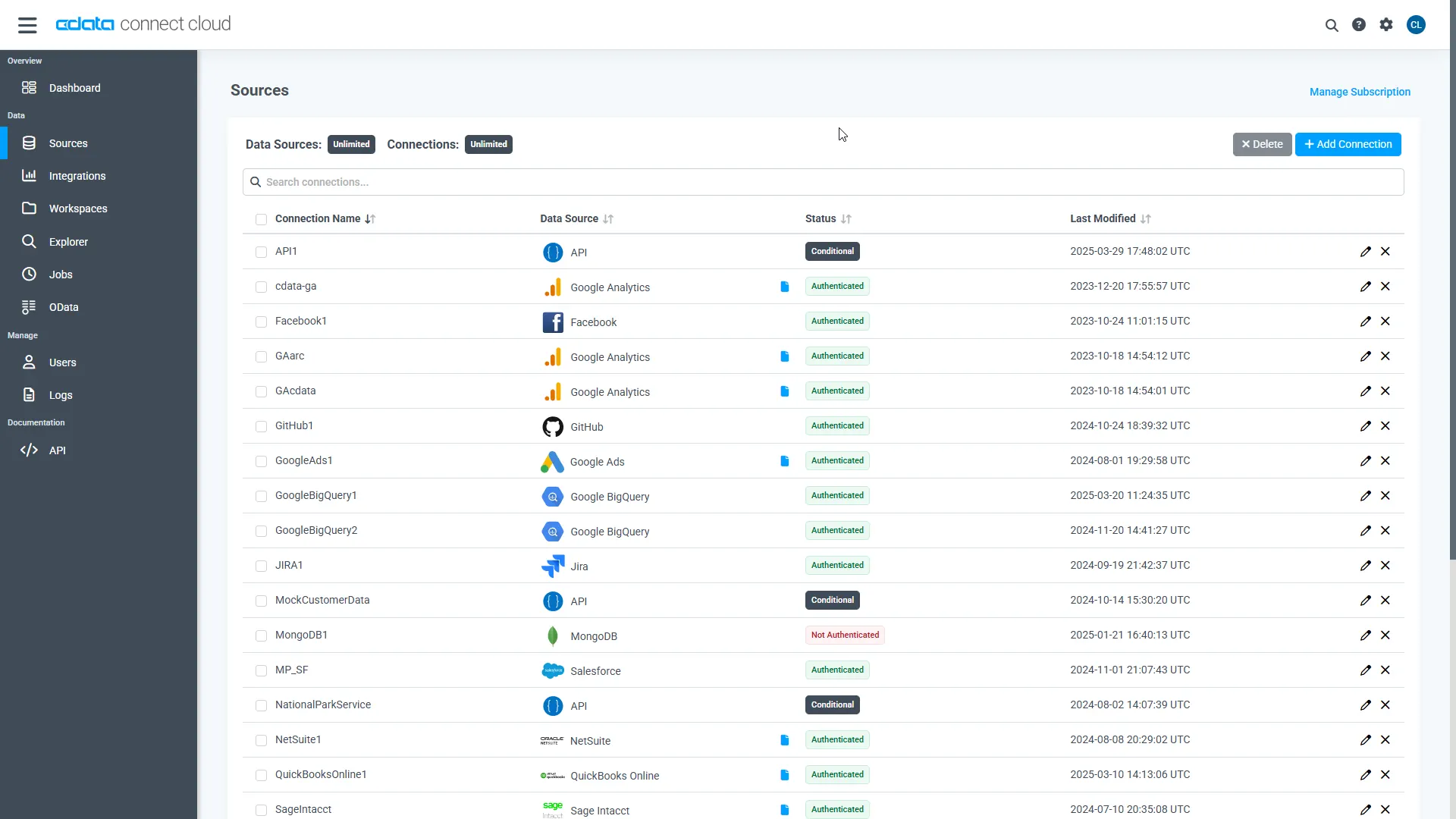

- Log in to Connect AI, click Sources, and then click Add Connection

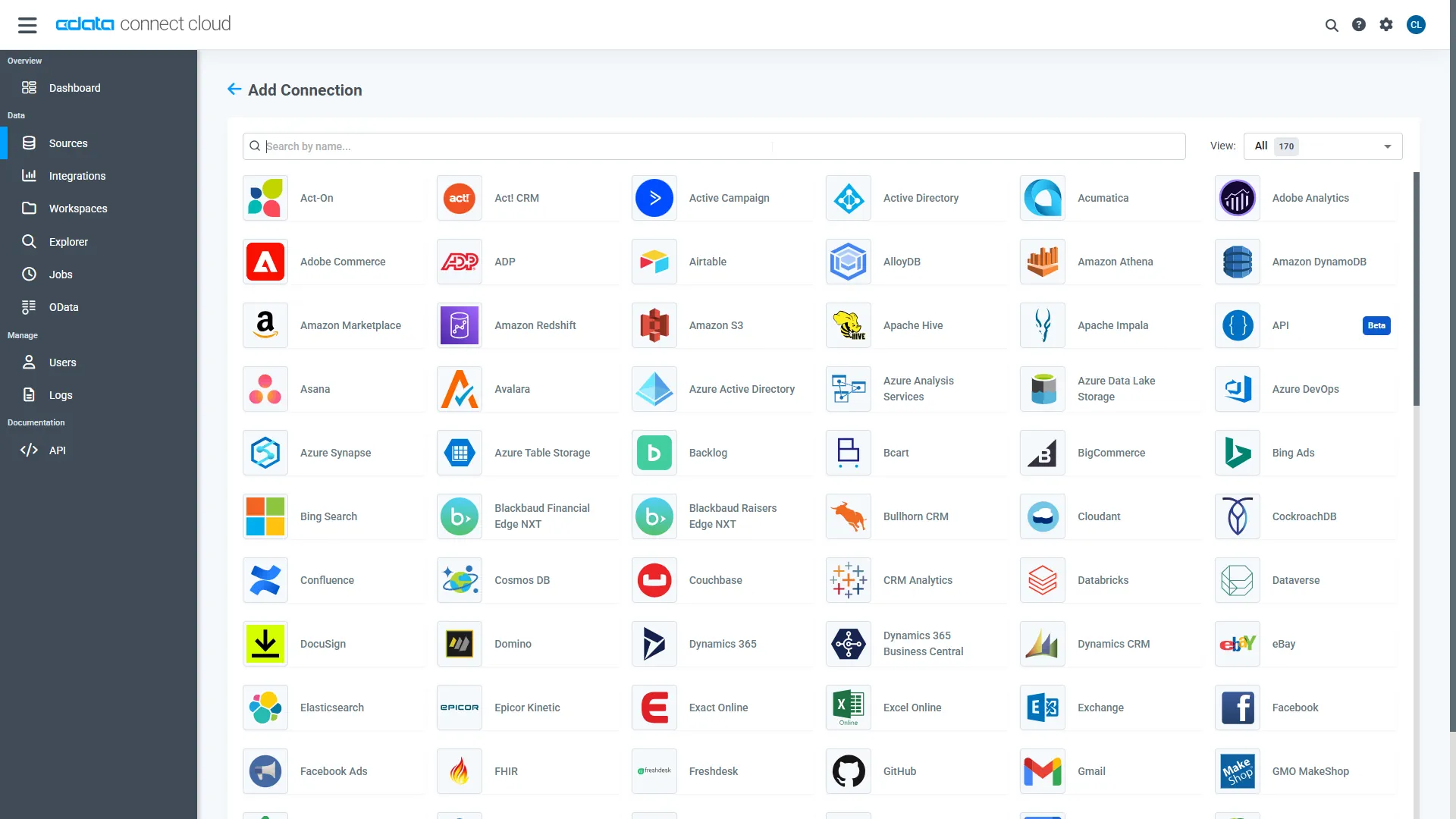

- Select "Databricks" from the Add Connection panel

- Enter the necessary authentication properties to connect to Databricks.

To connect to a Databricks cluster, set the properties as described below.

Note: You can find these values in your Databricks instance by navigating to Clusters, selecting the desired cluster, and opening the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your Databricks personal access token (obtain this value by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).

- Click Save & Test

-

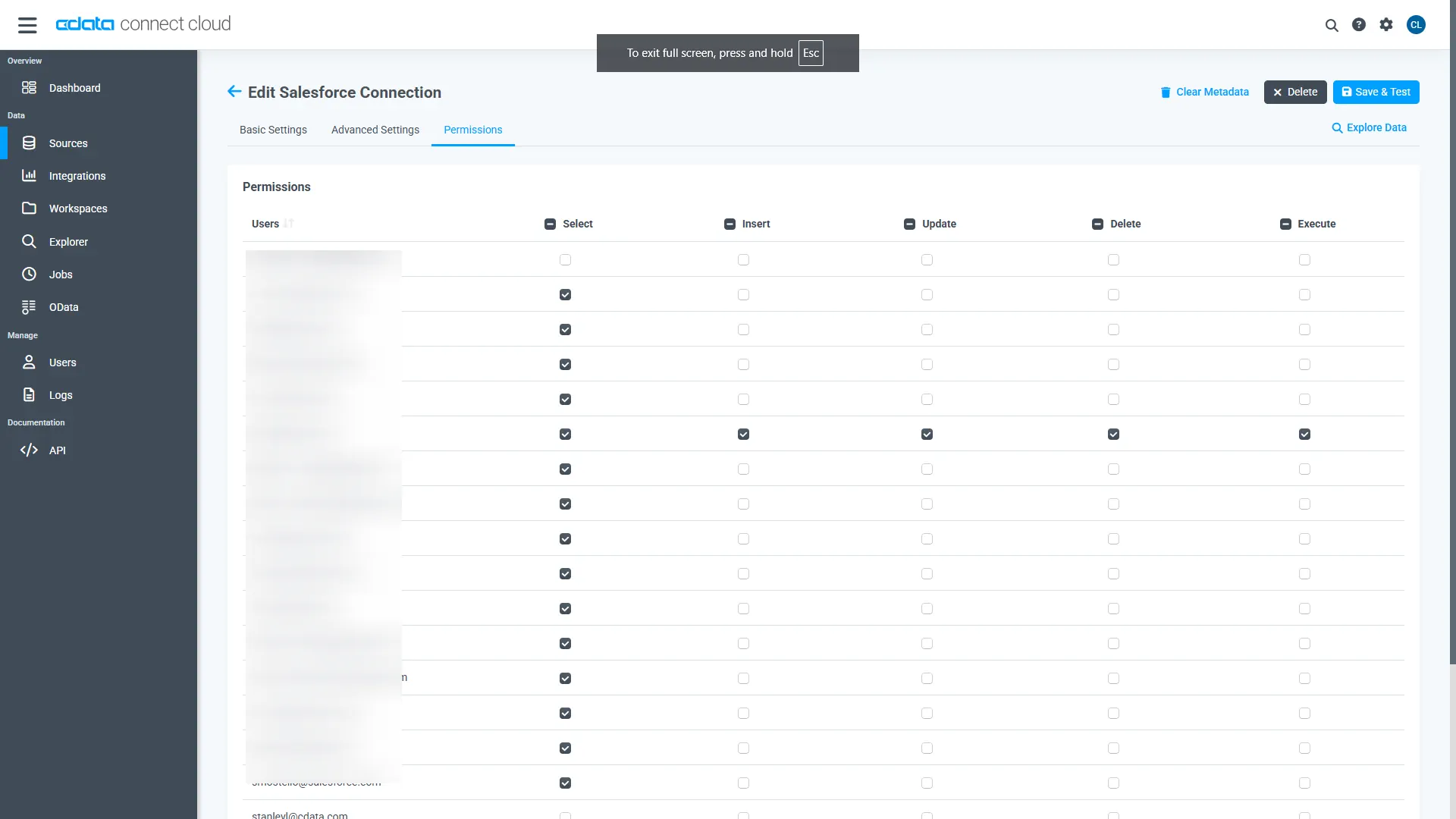

Navigate to the Permissions tab in the Add Databricks Connection page and update the User-based permissions.

Add a Personal Access Token

A Personal Access Token (PAT) is used to authenticate the connection to Connect AI from your OpenAI application. It is best practice to create a separate PAT for each service to maintain granularity of access.

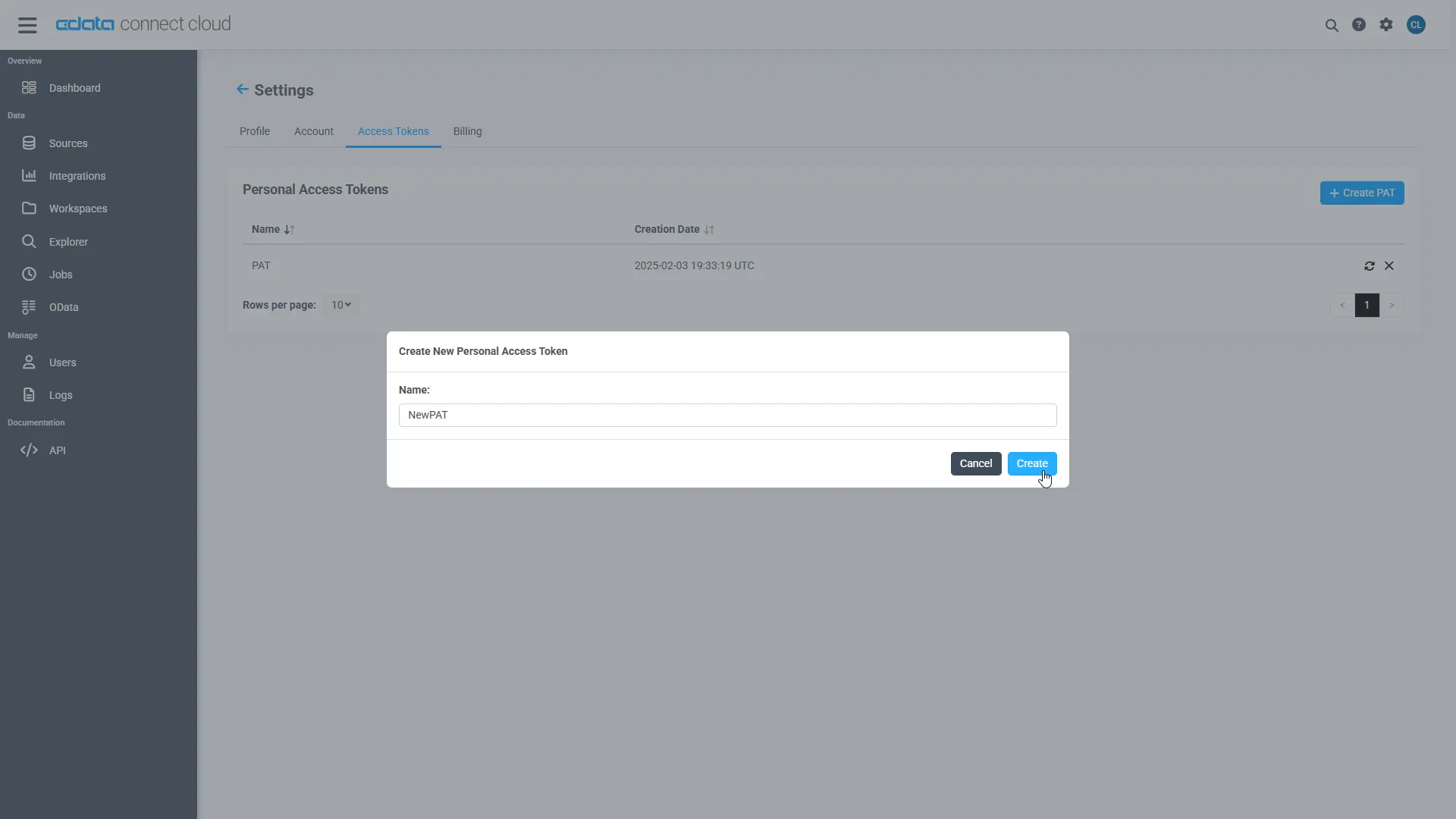

- Click the Gear icon at the top right of the Connect AI app to open the settings page.

- On the Settings page, go to the Access Tokens section and click Create PAT.

- Give the PAT a name and click Create.

- The personal access token is only visible at creation, so be sure to copy it and store it securely for future use.
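Connect AI accepts the PAT in place of a password: the client code in Step 2 joins your Connect AI email and the PAT as "email:PAT", Base64-encodes the pair, and sends it in an HTTP Basic Authorization header. A minimal standalone sketch of that encoding follows; the helper name basic_auth_header is hypothetical, and the email and token values are placeholders.

```python
import base64


def basic_auth_header(email: str, pat: str) -> dict:
    """Build an HTTP Basic Authorization header from a Connect AI email and PAT.

    Hypothetical helper that mirrors the header construction in the Step 2
    client: the credentials are joined as "email:pat" and Base64-encoded.
    """
    if not email or not pat:
        raise ValueError("Both the Connect AI email and the PAT are required")
    token = base64.b64encode(f"{email}:{pat}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


if __name__ == "__main__":
    # Placeholder values for illustration only; never hard-code a real PAT.
    print(basic_auth_header("user@example.com", "example-pat"))
```

Because the PAT effectively acts as a password, load it from the environment (as the client does with MCP_PASSWORD) rather than embedding it in source code.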

With the connection configured and a PAT generated, we are ready to connect to Databricks data from your OpenAI application.

Step 2: Configure Your OpenAI Python Application for CData Connect AI

Follow these steps to configure your OpenAI Python application to connect to CData Connect AI. You can use our pre-built client as a starting point, available at https://github.com/CDataSoftware/openai-mcp-client, or follow the instructions below to create your own.

- Ensure you have Python 3.8+ installed and install the required dependencies:

pip install openai python-dotenv httpx

- Clone or download the OpenAI MCP client from GitHub:

git clone https://github.com/CDataSoftware/openai-mcp-client.git
cd openai-mcp-client

- Set up your environment variables. Create a .env file in your project root with the following variables:

OPENAI_API_KEY=YOUR_OPENAI_API_KEY
MCP_SERVER_URL=https://mcp.cloud.cdata.com/mcp
MCP_USERNAME=YOUR_EMAIL
MCP_PASSWORD=YOUR_PAT
OPENAI_MODEL=gpt-4

Replace YOUR_OPENAI_API_KEY with your OpenAI API key, YOUR_EMAIL with your Connect AI email address, and YOUR_PAT with the Personal Access Token created in Step 1.

- If you are creating your own application, here is the core implementation for connecting to the CData Connect AI MCP Server (saved as client.py):

import os
import asyncio
import base64

from dotenv import load_dotenv
# MCPServerStreamableHttp and MCPAgent come from a local mcp_client module,
# not from the openai package.
from mcp_client import MCPServerStreamableHttp, MCPAgent

# Load environment variables from the .env file
load_dotenv()


async def main():
    """Main chat loop for interacting with Databricks data."""
    # Get configuration
    api_key = os.getenv('OPENAI_API_KEY')
    mcp_url = os.getenv('MCP_SERVER_URL', 'https://mcp.cloud.cdata.com/mcp')
    username = os.getenv('MCP_USERNAME', '')
    password = os.getenv('MCP_PASSWORD', '')
    model = os.getenv('OPENAI_MODEL', 'gpt-4')

    # Create the HTTP Basic auth header (Connect AI email + PAT) for the MCP server
    headers = {}
    if username and password:
        auth = base64.b64encode(f"{username}:{password}".encode()).decode()
        headers = {"Authorization": f"Basic {auth}"}

    # Connect to the CData MCP Server
    async with MCPServerStreamableHttp(
        name="CData MCP Server",
        params={
            "url": mcp_url,
            "headers": headers,
            "timeout": 30,
            "verify_ssl": True
        }
    ) as mcp_server:
        # Create an AI agent with access to Databricks data
        agent = MCPAgent(
            name="data_assistant",
            model=model,
            mcp_servers=[mcp_server],
            instructions="""You are a data query assistant with access to Databricks data through CData Connect AI.
            You can help users explore and query their Databricks data in real-time.

            Use the available MCP tools to:
            - List available databases and schemas
            - Explore table structures
            - Execute SQL queries
            - Provide insights about the data

            Always explain what you're doing and format results clearly.""",
            api_key=api_key
        )

        await agent.initialize()
        print(f"Connected! {len(agent._tools_cache)} tools available.")
        print("\nChat with your Databricks data (type 'exit' to quit):\n")

        # Interactive chat loop; the full history is sent on every turn
        conversation = []
        while True:
            user_input = input("You: ")
            if user_input.strip().lower() in ['exit', 'quit']:
                break
            if not user_input.strip():
                continue  # ignore empty lines

            conversation.append({"role": "user", "content": user_input})
            print("Assistant: ", end="", flush=True)
            response = await agent.run(conversation)
            print(response["content"])
            conversation.append({"role": "assistant", "content": response["content"]})


if __name__ == "__main__":
    asyncio.run(main())

- Run your OpenAI application:

python client.py

- Start interacting with your Databricks data through natural language queries. Your OpenAI assistant now has access to your Databricks data through the CData Connect AI MCP Server.
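The client reads its settings with os.getenv() and simply skips the Authorization header when MCP_USERNAME or MCP_PASSWORD is empty, which likely surfaces later as an authentication error from the server rather than a clear message at startup. A minimal fail-fast sketch for the .env settings follows, assuming the same variable names and defaults as the client code; load_config is a hypothetical helper, not part of the CData client.

```python
import os
from typing import Dict, Mapping

# Defaults mirror the os.getenv() fallbacks in the client code.
DEFAULTS = {
    "MCP_SERVER_URL": "https://mcp.cloud.cdata.com/mcp",
    "OPENAI_MODEL": "gpt-4",
}
# Without these, the client would run with no OpenAI key or no MCP auth header.
REQUIRED = ("OPENAI_API_KEY", "MCP_USERNAME", "MCP_PASSWORD")


def load_config(env: Mapping[str, str]) -> Dict[str, str]:
    """Collect the client's settings, raising if a required value is missing or empty."""
    missing = [key for key in REQUIRED if not env.get(key)]
    if missing:
        raise RuntimeError("Missing settings in .env: " + ", ".join(missing))
    # Optional settings that are unset or empty fall back to the defaults.
    config = {key: env.get(key) or default for key, default in DEFAULTS.items()}
    config.update({key: env[key] for key in REQUIRED})
    return config


if __name__ == "__main__":
    try:
        from dotenv import load_dotenv  # python-dotenv, installed earlier
        load_dotenv()
    except ImportError:
        pass  # rely on variables already exported in the shell
    try:
        settings = load_config(os.environ)
    except RuntimeError as err:
        print(err)
    else:
        print(f"Model {settings['OPENAI_MODEL']} via {settings['MCP_SERVER_URL']}")
```

Calling load_config(os.environ) right after load_dotenv() in the client would stop the program with a list of the missing variables before any connection is attempted.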

Step 3: Build Intelligent Applications with Live Databricks Data Access

With your OpenAI Python application configured and connected to CData Connect AI, you can now build sophisticated AI assistants that interact with your Databricks data using natural language. The MCP integration provides your applications with powerful data access capabilities through OpenAI's advanced language models.

Available MCP Tools for Your Assistant

Your OpenAI assistant has access to the following CData Connect AI MCP tools:

- queryData: Execute SQL queries against connected data sources and retrieve results

- getCatalogs: Retrieve a list of available connections from CData Connect AI

- getSchemas: Retrieve database schemas for a specific catalog

- getTables: Retrieve database tables for a specific catalog and schema

- getColumns: Retrieve column metadata for a specific table

- getProcedures: Retrieve stored procedures for a specific catalog and schema

- getProcedureParameters: Retrieve parameter metadata for stored procedures

- executeProcedure: Execute stored procedures with parameters
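Under the hood, the Model Context Protocol uses JSON-RPC 2.0 messages: a client discovers tools with a tools/list request and invokes one with tools/call, passing the tool name and an arguments object. The MCP client classes used in Step 2 presumably build and send these messages for you; the sketch below only shows their shape. It does not perform the initialize handshake or the HTTP transport, and the "query" argument name for queryData is hypothetical. Check each tool's inputSchema in the tools/list response for its actual parameters.

```python
import itertools
import json
from typing import Any, Dict, Optional

# Monotonically increasing ids for JSON-RPC 2.0 requests.
_ids = itertools.count(1)


def mcp_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object in the form MCP uses."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def tool_call(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Build an MCP tools/call request for a named tool such as getCatalogs."""
    return mcp_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    # Discover tools first; each returned tool lists its inputSchema.
    print(json.dumps(mcp_request("tools/list")))
    print(json.dumps(tool_call("getCatalogs", {})))
    # HYPOTHETICAL: the "query" argument name and the SQL text are illustrative only.
    print(json.dumps(tool_call("queryData", {"query": "SELECT 1"})))
```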

Example Use Cases

Here are some examples of what your OpenAI-powered applications can do with live Databricks data access:

- Conversational Analytics: Build chat interfaces that answer complex business questions using natural language

- Automated Reporting: Generate dynamic reports and summaries based on real-time data queries

- Data Discovery Assistant: Help users explore and understand their data structure without SQL knowledge

- Intelligent Data Monitor: Create AI assistants that proactively identify trends and anomalies

- Custom Query Builder: Enable users to create complex queries through conversational interactions

Interacting with Your Assistant

Once running, you can interact with your OpenAI assistant through natural language. Example queries include:

- "Show me all available databases"

- "What tables are in the sales database?"

- "List the top 10 customers by revenue"

- "Find all orders from the last month"

- "Analyze the trend in sales over the past quarter"

- "What's the structure of the customer table?"

Your OpenAI assistant will automatically translate these natural language queries into appropriate SQL queries and execute them against your Databricks data through the CData Connect AI MCP Server, providing intelligent insights without requiring users to write complex SQL or understand the underlying data structure.

Advanced Features

The OpenAI MCP integration supports advanced capabilities:

- Context Awareness: The assistant maintains conversation context for follow-up questions

- Multi-turn Conversations: Build complex queries through iterative dialogue

- Intelligent Error Handling: Get helpful suggestions when queries encounter issues

- Data Insights: Leverage GPT's analytical capabilities to identify patterns and trends

- Format Flexibility: Request results in various formats (tables, summaries, JSON, etc.)
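The client in Step 2 passes the entire conversation list to agent.run() on every turn, so a long session keeps growing the prompt sent to the model. One simple mitigation, sketched here as an assumption rather than part of the CData client, is to send only a recent window of messages. trim_history is a hypothetical helper, and counting messages is only a rough stand-in for counting tokens.

```python
from typing import Dict, List

Message = Dict[str, str]


def trim_history(conversation: List[Message], max_messages: int = 20) -> List[Message]:
    """Keep only the most recent messages so long chats stay within the model's context.

    The window is shifted forward, if needed, so it starts on a user turn and
    never begins with an orphaned assistant reply.
    """
    if max_messages <= 0:
        return []
    recent = conversation[-max_messages:]
    while recent and recent[0]["role"] != "user":
        recent = recent[1:]
    return recent


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "What tables are in the sales database?"},
        {"role": "assistant", "content": "(table list)"},
        {"role": "user", "content": "What's the structure of the customer table?"},
        {"role": "assistant", "content": "(column list)"},
    ]
    print(trim_history(history, max_messages=3))
```

In the chat loop this would become response = await agent.run(trim_history(conversation)). The trade-off is that older turns are dropped, which limits how far back follow-up questions can reach.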

Get CData Connect AI

To get live data access to 300+ SaaS, Big Data, and NoSQL sources directly from your OpenAI applications, try CData Connect AI today!