Connect to Azure Data Lake Storage Data in HULFT Integrate

Connect to Azure Data Lake Storage as a JDBC data source in HULFT Integrate

HULFT Integrate is a modern data integration platform that provides a drag-and-drop user interface to create cooperation flows, data conversion, and processing so that complex data connections are easier than ever to execute. When paired with the CData JDBC Driver for Azure Data Lake Storage, HULFT Integrate can work with live Azure Data Lake Storage data. This article walks through connecting to Azure Data Lake Storage and moving the data into a CSV file.

With built-in optimized data processing, the CData JDBC Driver offers unmatched performance for interacting with live Azure Data Lake Storage data. When you issue complex SQL queries to Azure Data Lake Storage, the driver pushes supported SQL operations, like filters and aggregations, directly to Azure Data Lake Storage and utilizes the embedded SQL engine to process unsupported operations client-side (often SQL functions and JOIN operations). Its built-in dynamic metadata querying allows you to work with and analyze Azure Data Lake Storage data using native data types.

Enable Access to Azure Data Lake Storage

To enable access to Azure Data Lake Storage data from HULFT Integrate projects:

- Copy the CData JDBC Driver JAR file, cdata.jdbc.adls.jar, and the license file, cdata.jdbc.adls.lic (if it exists), to the jdbc_adapter subfolder for the Integrate Server

- Restart the HULFT Integrate Server and launch HULFT Integrate Studio
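If the driver does not appear in Integrate Studio after the restart, it can help to confirm that the JAR itself is intact by loading the driver class from a standalone JVM. The following is a minimal sketch, not part of HULFT Integrate: the class `DriverCheck` and its method are hypothetical names, and it assumes you run it with cdata.jdbc.adls.jar on the classpath (for example via `java -cp`).

```java
final class DriverCheck {
    /** Returns true if the named class can be loaded from the current classpath. */
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // Class is missing, or it was found but could not be linked or initialized.
            return false;
        }
    }

    public static void main(String[] args) {
        // Driver class name as given in the connection settings of this article.
        String driver = "cdata.jdbc.adls.ADLSDriver";
        System.out.println(driver + " available: " + isOnClasspath(driver));
    }
}
```

This only checks that the class loads in a plain JVM; it says nothing about whether the Integrate Server has picked up the file from its jdbc_adapter folder.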

Build a Project with Access to Azure Data Lake Storage Data

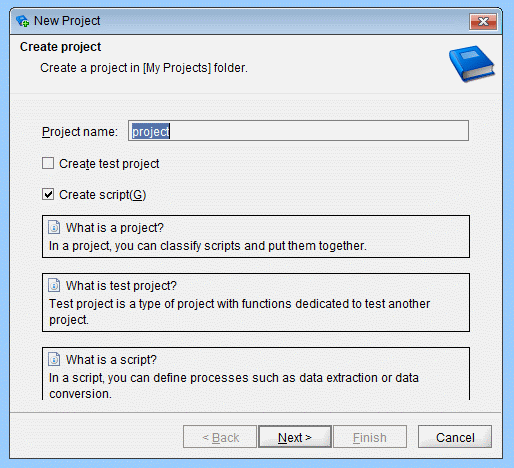

Once you copy the JAR files, you can create a project with access to Azure Data Lake Storage data. Start by opening Integrate Studio and creating a new project.

- Name the project

- Ensure the "Create script" checkbox is checked

- Click Next


- Name the script (e.g., ADLStoCSV)

Once you create the project, add components to the script to copy Azure Data Lake Storage data to a CSV file.

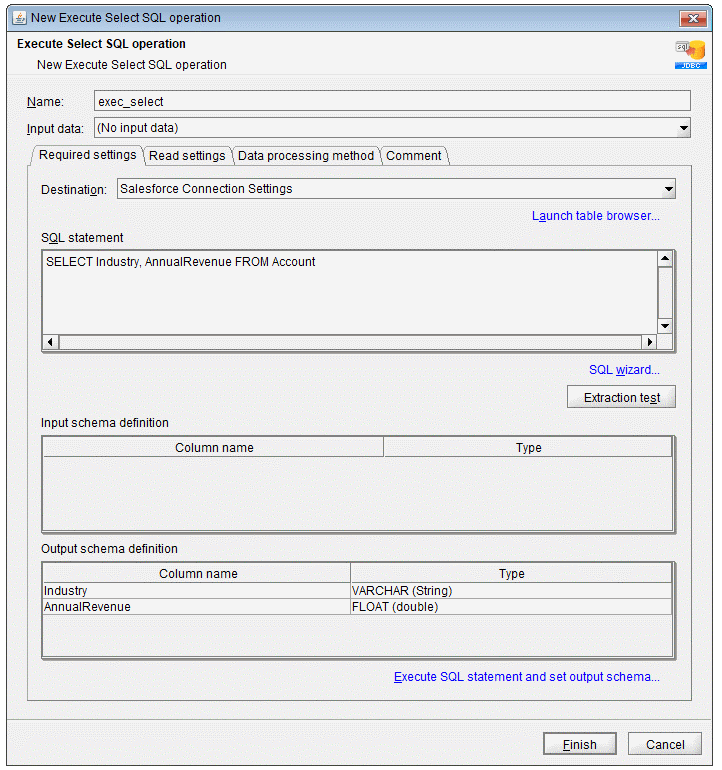

Configure an Execute Select SQL Component

Drag an "Execute Select SQL" component from the Tool Palette (Database -> JDBC) into the Script workspace.

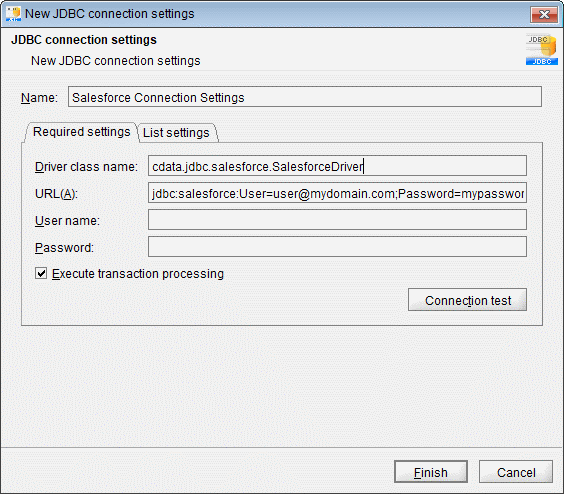

- In the "Required settings" tab for the Destination, click "Add" to create a new connection for Azure Data Lake Storage. Set the following properties:

- Name: Azure Data Lake Storage Connection Settings

- Driver class name: cdata.jdbc.adls.ADLSDriver

- URL: jdbc:adls:Schema=ADLSGen2;Account=myAccount;FileSystem=myFileSystem;AccessKey=myAccessKey;InitiateOAuth=GETANDREFRESH


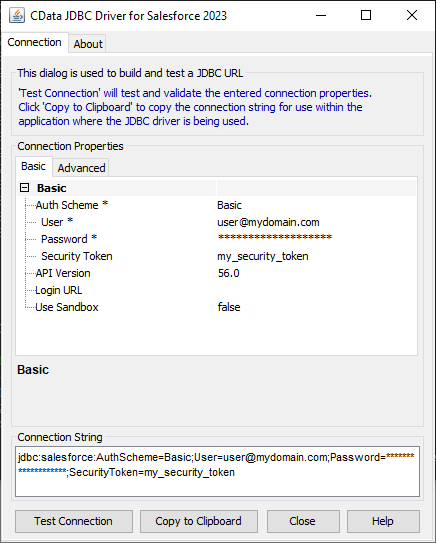

Built-in Connection String Designer

For assistance constructing the JDBC URL, use the connection string designer built into the Azure Data Lake Storage JDBC Driver. Either double-click the JAR file or execute it from the command line:

java -jar cdata.jdbc.adls.jar

Fill in the connection properties and copy the connection string to the clipboard.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication, which requires an Active Directory web application.

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the TenantId property in the driver documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key which will be used to authenticate the calls to the API. See the AccessKey property in the driver documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
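The Gen 1 and Gen 2 property lists above map directly onto the semicolon-separated JDBC URL shown earlier. As a rough sketch, assuming the URL is simply `jdbc:adls:` followed by `Name=Value` pairs, the URL could be assembled like this. The class `AdlsJdbcUrl` and its methods are hypothetical helpers, not part of the CData driver; the property names are the ones listed in this article.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

final class AdlsJdbcUrl {
    /** Joins non-empty properties as Name=Value pairs after the jdbc:adls: prefix. */
    static String build(Map<String, String> props) {
        StringJoiner url = new StringJoiner(";", "jdbc:adls:", "");
        for (Map.Entry<String, String> e : props.entrySet()) {
            String value = e.getValue();
            // Optional properties (such as Directory) are skipped when unset.
            // Assumption: values contain no ';' characters; no escaping is attempted.
            if (value != null && !value.isEmpty()) {
                url.add(e.getKey() + "=" + value);
            }
        }
        return url.toString();
    }

    /** Gen 2 accounts: account name, file system, and access key. */
    static String gen2(String account, String fileSystem, String accessKey, String directory) {
        Map<String, String> p = new LinkedHashMap<>(); // keeps insertion order
        p.put("Schema", "ADLSGen2");
        p.put("Account", account);
        p.put("FileSystem", fileSystem);
        p.put("AccessKey", accessKey);
        p.put("Directory", directory);
        return build(p);
    }

    /** Gen 1 accounts: OAuth application credentials and tenant. */
    static String gen1(String account, String clientId, String clientSecret,
                       String tenantId, String directory) {
        Map<String, String> p = new LinkedHashMap<>();
        p.put("Schema", "ADLSGen1");
        p.put("Account", account);
        p.put("OAuthClientId", clientId);
        p.put("OAuthClientSecret", clientSecret);
        p.put("TenantId", tenantId);
        p.put("Directory", directory);
        return build(p);
    }
}
```

For example, `AdlsJdbcUrl.gen2("myAccount", "myFileSystem", "myAccessKey", null)` yields the Gen 2 URL from the connection settings above without the `InitiateOAuth` property, which this sketch leaves for you to append if your setup needs it.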


- Write your SQL statement. For example:

SELECT FullPath, Permission FROM Resources

- Click "Extraction test" to ensure the connection and query are configured properly

- Click "Execute SQL statement and set output schema"

- Click "Finish"
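Outside of Integrate Studio, the same connection and query can be exercised with plain JDBC, which is roughly what the "Extraction test" button verifies. This is a sketch under assumptions: the class `ResourcesQuery` and its methods are hypothetical, the driver JAR must be on the classpath, and the URL must be a working one such as the example above.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.List;

final class ResourcesQuery {
    // The example statement from this article.
    static final String SQL = "SELECT FullPath, Permission FROM Resources";

    /** Runs the query and returns every row as an array of column strings. */
    static List<String[]> fetch(Connection conn, String sql) throws SQLException {
        List<String[]> rows = new ArrayList<>();
        try (Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(sql)) {
            int columnCount = rs.getMetaData().getColumnCount();
            while (rs.next()) {
                String[] row = new String[columnCount];
                for (int i = 0; i < columnCount; i++) {
                    row[i] = rs.getString(i + 1); // JDBC columns are 1-based
                }
                rows.add(row);
            }
        }
        return rows;
    }

    /** Opens a connection for the given JDBC URL and runs the example query. */
    static List<String[]> extractionTest(String jdbcUrl) throws SQLException {
        // Throws SQLException if no registered driver accepts the URL
        // or if the connection or query fails.
        try (Connection conn = DriverManager.getConnection(jdbcUrl)) {
            return fetch(conn, SQL);
        }
    }
}
```

A `SQLException` from `extractionTest` usually points to either a missing driver JAR or an incorrect connection property, which are the same things to check when the extraction test fails in Studio.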


Configure a Write CSV File Component

Drag a "Write CSV File" component from the Tool Palette (File -> CSV) onto the workspace.

- Set a file to write the query results to (e.g. Resources.csv)

- Set "Input data" to the "Select SQL" component

- Add columns for each field selected in the SQL query

- In the "Write settings" tab, check the checkbox to "Insert column names into first row"

- Click "Finish"
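To make the expected output concrete, the following sketch shows the file shape these settings describe: a first row of column names, then one line per query result. It is not how the Write CSV File component is implemented. The class `CsvSketch` is hypothetical, and the quoting rules and CRLF line endings are assumptions in the style of RFC 4180; the component's actual defaults may differ.

```java
import java.util.List;

final class CsvSketch {
    /** Quotes a field if it contains a comma, quote, or line break; doubles inner quotes. */
    static String escape(String field) {
        if (field == null) {
            return "";
        }
        boolean needsQuotes = field.contains(",") || field.contains("\"")
                || field.contains("\n") || field.contains("\r");
        String escaped = field.replace("\"", "\"\"");
        return needsQuotes ? "\"" + escaped + "\"" : escaped;
    }

    /** Renders a header row followed by the data rows, one CSV line each. */
    static String toCsv(List<String> header, List<List<String>> rows) {
        StringBuilder out = new StringBuilder();
        appendLine(out, header); // "Insert column names into first row"
        for (List<String> row : rows) {
            appendLine(out, row);
        }
        return out.toString();
    }

    private static void appendLine(StringBuilder out, List<String> fields) {
        for (int i = 0; i < fields.size(); i++) {
            if (i > 0) {
                out.append(',');
            }
            out.append(escape(fields.get(i)));
        }
        out.append("\r\n"); // assumed line ending
    }
}
```

With the header `FullPath, Permission`, each row returned by the example query becomes one line in Resources.csv.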

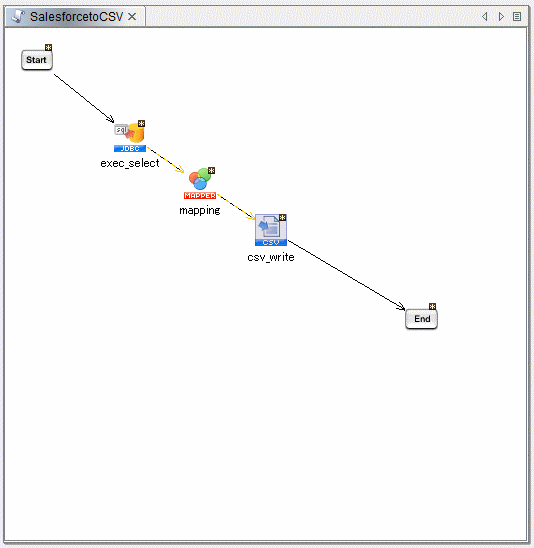

Map Azure Data Lake Storage Fields to the CSV Columns

Map each column from the "Select" component to the corresponding column for the "CSV" component.

Finish the Script

Drag the "Start" component onto the "Select" component and the "CSV" component onto the "End" component. Build and run the script to move Azure Data Lake Storage data into a CSV file.

Download a free, 30-day trial of the CData JDBC Driver for Azure Data Lake Storage and start working with your live Azure Data Lake Storage data in HULFT Integrate. Reach out to our Support Team if you have any questions.