How to Access Live Azure Data Lake Storage Data in Power Automate Desktop via ODBC

The CData ODBC Driver for Azure Data Lake Storage enables you to integrate Azure Data Lake Storage data into workflows built using Microsoft Power Automate Desktop.

This article shows how to build a simple workflow in Power Automate Desktop that queries live Azure Data Lake Storage data and writes the results to a CSV file.

Through optimized data processing, CData ODBC Drivers offer high performance for interacting with live Azure Data Lake Storage data in Microsoft Power Automate. When you issue complex SQL queries from Power Automate to Azure Data Lake Storage, the driver pushes supported SQL operations, such as filters and aggregations, directly to Azure Data Lake Storage and uses its embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side.

Connect to Azure Data Lake Storage as an ODBC Data Source

If you have not already, first specify connection properties in an ODBC DSN (data source name). This is the last step of the driver installation. You can use the Microsoft ODBC Data Source Administrator to create and configure ODBC DSNs.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication.

This requires an Active Directory web application, which you need to create before you configure the properties below.

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the TenantId property in the driver documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
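As a rough illustration, the Gen 1 properties above can be assembled into the property portion of an ODBC connection string, which is essentially what the DSN stores on your behalf. This is a minimal sketch under stated assumptions: the helper name `build_connection_string` and every value shown are hypothetical placeholders, and it assumes the driver accepts these property names as connection string keys.

```python
def build_connection_string(properties):
    """Join key/value pairs into a semicolon-delimited ODBC connection string."""
    parts = []
    for key, value in properties.items():
        text = str(value)
        # A value containing a semicolon must be wrapped in braces so the
        # semicolon is not read as a separator; a literal "}" is doubled.
        if ";" in text:
            text = "{" + text.replace("}", "}}") + "}"
        parts.append(f"{key}={text}")
    return ";".join(parts)


# Placeholder values only; substitute the details of your own account and app.
gen1_properties = {
    "Schema": "ADLSGen1",
    "Account": "myaccount",
    "OAuthClientId": "my-application-id",
    "OAuthClientSecret": "example;secret",  # contains ";" to show the brace quoting
    "TenantId": "my-tenant-id",
    "Directory": "/exports",
}

connection_string = build_connection_string(gen1_properties)
print(connection_string)
```

Keeping the properties in a dictionary makes it easy to leave out optional entries such as Directory, in which case the root directory is used.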

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key which will be used to authenticate the calls to the API. See the AccessKey property in the driver documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
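Because the two generations need different properties, it can help to check a set of settings before entering them in the DSN. The sketch below is one possible way to do that, not part of the driver: the names `REQUIRED_PROPERTIES` and `missing_properties` are hypothetical, the sample values are placeholders, and Directory is treated as optional because the root directory is used when it is omitted.

```python
# Properties each schema needs, taken from the two lists above.
REQUIRED_PROPERTIES = {
    "ADLSGen1": ["Account", "OAuthClientId", "OAuthClientSecret", "TenantId"],
    "ADLSGen2": ["Account", "FileSystem", "AccessKey"],
}


def missing_properties(settings):
    """Return the required property names that are absent or empty."""
    schema = settings.get("Schema")
    if schema not in REQUIRED_PROPERTIES:
        raise ValueError(
            f"Schema must be one of {sorted(REQUIRED_PROPERTIES)}, got {schema!r}"
        )
    return [name for name in REQUIRED_PROPERTIES[schema] if not settings.get(name)]


# Placeholder Gen 2 settings with the access key deliberately left out.
gen2_settings = {
    "Schema": "ADLSGen2",
    "Account": "myaccount",
    "FileSystem": "myfilesystem",
}

print(missing_properties(gen2_settings))
```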

When you configure the DSN, you may also want to set the Max Rows connection property. This will limit the number of rows returned, which is especially helpful for improving performance when designing workflows.
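The idea of capping rows during design can also be sketched outside the driver. The example below is not the Max Rows property itself; it is a client-side analogue that uses the standard Python DB-API `fetchmany` call. An in-memory SQLite table stands in for Azure Data Lake Storage data, and the helper name `fetch_preview`, the column names, and the rows are all invented for illustration.

```python
import sqlite3


def fetch_preview(connection, sql, max_rows):
    """Run a query and return at most max_rows rows."""
    cursor = connection.cursor()
    try:
        cursor.execute(sql)
        return cursor.fetchmany(max_rows)
    finally:
        cursor.close()


# Stand-in data source: an in-memory SQLite table with ten made-up rows.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE Resources (Name TEXT, SizeBytes INTEGER)")
connection.executemany(
    "INSERT INTO Resources VALUES (?, ?)",
    [(f"file{i}.csv", i * 100) for i in range(10)],
)

preview = fetch_preview(connection, "SELECT * FROM Resources", max_rows=3)
connection.close()
print(len(preview))
```

A driver-side limit such as Max Rows is generally preferable when available, since rows beyond the limit never have to be retrieved at all.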

Integrate Azure Data Lake Storage Data into Power Automate Workflows

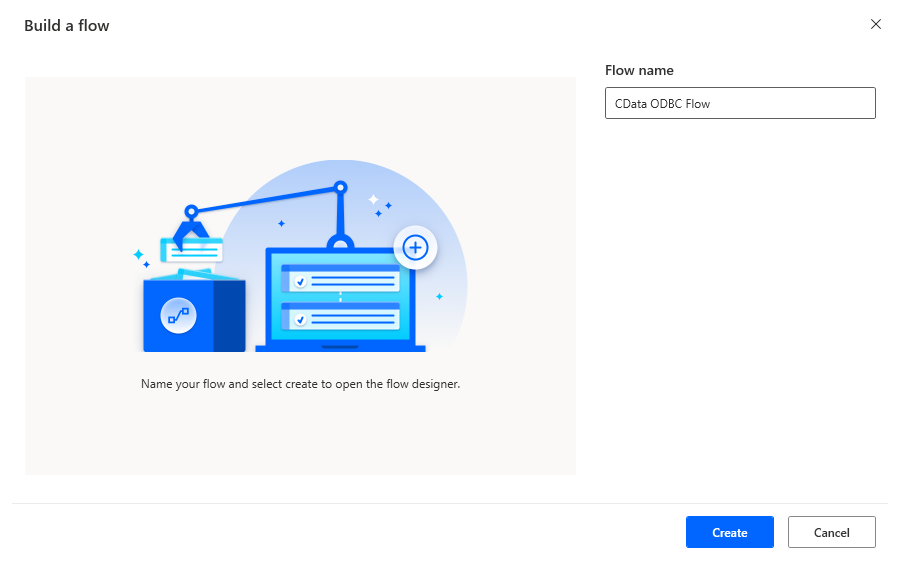

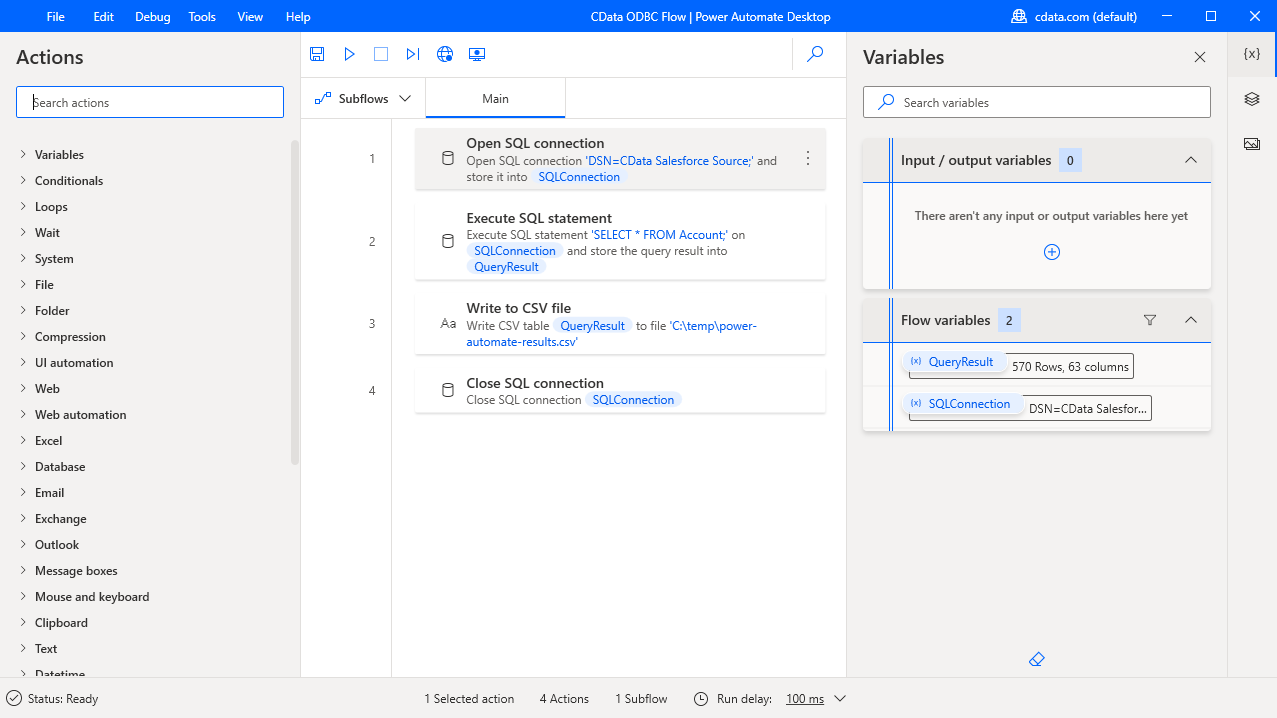

After configuring the DSN for Azure Data Lake Storage, you are ready to integrate Azure Data Lake Storage data into your Power Automate workflows. Open Microsoft Power Automate, add a new flow, and name the flow.

In the flow editor, you can add the actions to connect to Azure Data Lake Storage, query Azure Data Lake Storage using SQL, and write the query results to a CSV document.

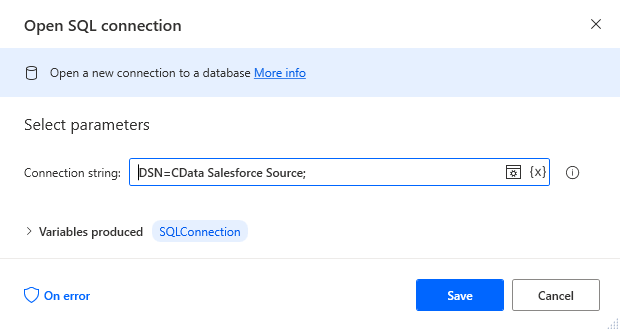

Add an Open SQL Connection Action

Add an "Open SQL connection" action (Actions -> Database) and configure the properties.

- Connection string: DSN=CData Azure Data Lake Storage Source

After configuring the action, click Save.

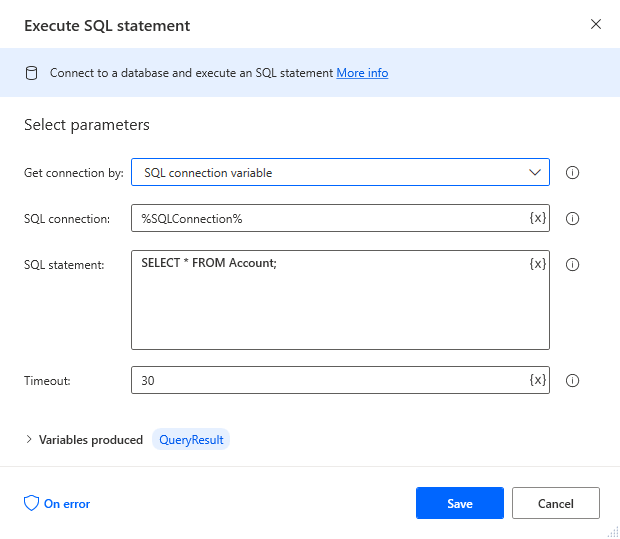

Add an Execute SQL Statement Action

Add an "Execute SQL statement" action (Actions -> Database) and configure the properties.

- Get connection by: SQL connection variable

- SQL connection: %SQLConnection% (the variable from the "Open SQL connection" action above)

- SQL statement: SELECT * FROM Resources

After configuring the action, click Save.

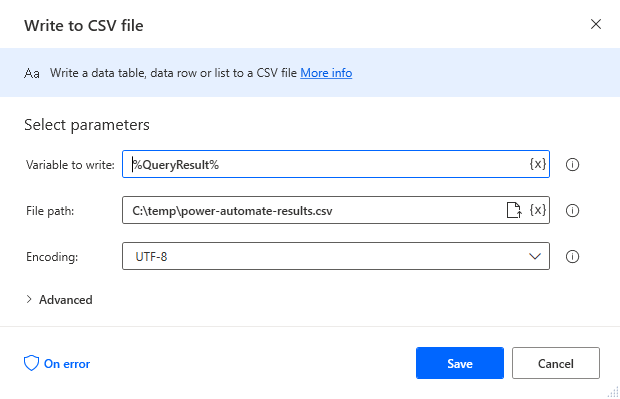

Add a Write to CSV File Action

Add a "Write to CSV file" action (Actions -> File) and configure the properties.

- Variable to write to: %QueryResult% (the variable from the "Execute SQL statement" action above)

- File path: set to a file on disk

- Configure Advanced settings as needed.

After configuring the action, click Save.

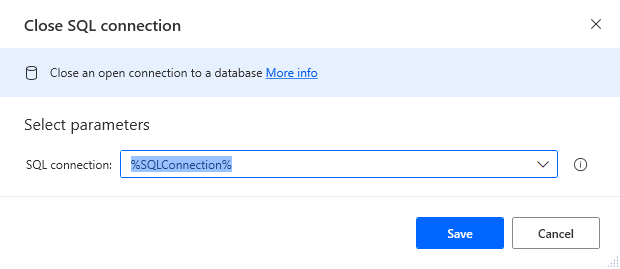

Add a Close SQL Connection Action

Add a "Close SQL connection" action (Actions -> Database) and configure the properties.

- SQL connection: %SQLConnection% (the variable from the "Open SQL connection" action above)

After configuring the action, click Save.

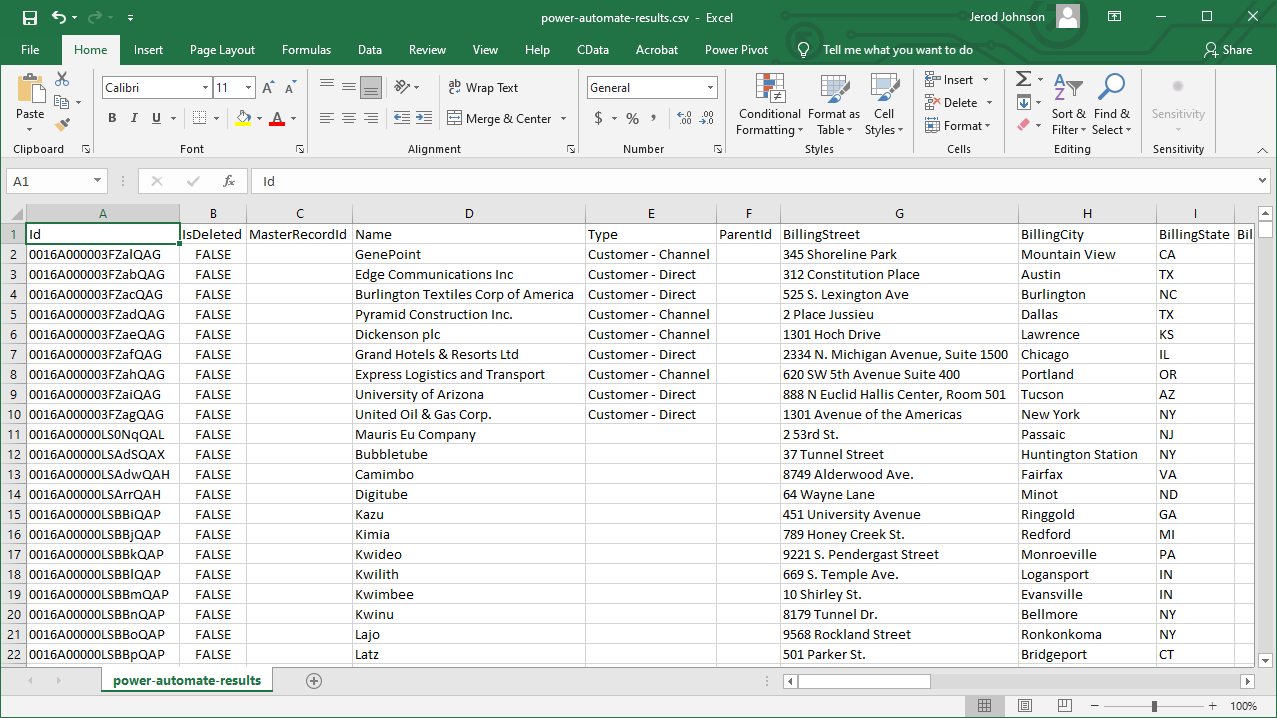

Save & Run the Flow

Once you have configured all the actions for the flow, click the disk icon to save the flow. Click the play icon to run the flow.

Now you have a workflow to move Azure Data Lake Storage data into a CSV file.
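For readers who think in code, the four actions in this flow correspond to a short script: open a connection, execute a statement, write the result to CSV, and close the connection. The sketch below is an illustration only, not something Power Automate generates. It uses an in-memory SQLite database as a stand-in data source so that it runs anywhere; the function names, column names, and sample rows are hypothetical.

```python
import csv
import os
import sqlite3
import tempfile


def export_query_to_csv(open_connection, sql, csv_path):
    """Mirror the flow: open, execute, write CSV, close. Returns the row count."""
    connection = open_connection()  # "Open SQL connection"
    try:
        cursor = connection.cursor()
        cursor.execute(sql)  # "Execute SQL statement"
        column_names = [column[0] for column in cursor.description]
        rows = cursor.fetchall()
        # "Write to CSV file": header row first, then the query result.
        with open(csv_path, "w", newline="", encoding="utf-8") as handle:
            writer = csv.writer(handle)
            writer.writerow(column_names)
            writer.writerows(rows)
        return len(rows)
    finally:
        connection.close()  # "Close SQL connection"


def open_stand_in_connection():
    """Create a made-up Resources table in an in-memory SQLite database."""
    connection = sqlite3.connect(":memory:")
    connection.execute("CREATE TABLE Resources (Name TEXT, SizeBytes INTEGER)")
    connection.executemany(
        "INSERT INTO Resources VALUES (?, ?)",
        [("a.csv", 120), ("b.json", 2048)],
    )
    return connection


csv_path = os.path.join(tempfile.mkdtemp(), "resources.csv")
row_count = export_query_to_csv(
    open_stand_in_connection, "SELECT * FROM Resources", csv_path
)
print(row_count, csv_path)
```

Assuming the third-party pyodbc package is installed and the DSN is configured, passing `lambda: pyodbc.connect("DSN=CData Azure Data Lake Storage Source")` as `open_connection` would point the same function at the driver instead of the stand-in.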

With the CData ODBC Driver for Azure Data Lake Storage, you get live connectivity to Azure Data Lake Storage data within your Microsoft Power Automate workflows.
