Automated Continuous Azure Data Lake Storage Replication to Apache Kafka

Use CData Sync for automated, continuous, customizable Azure Data Lake Storage replication to Apache Kafka.

Always-on applications rely on automatic failover capabilities and real-time data access. CData Sync integrates live Azure Data Lake Storage data into your Apache Kafka instance, allowing you to consolidate all of your data into a single location for archiving, reporting, analytics, machine learning, artificial intelligence and more.

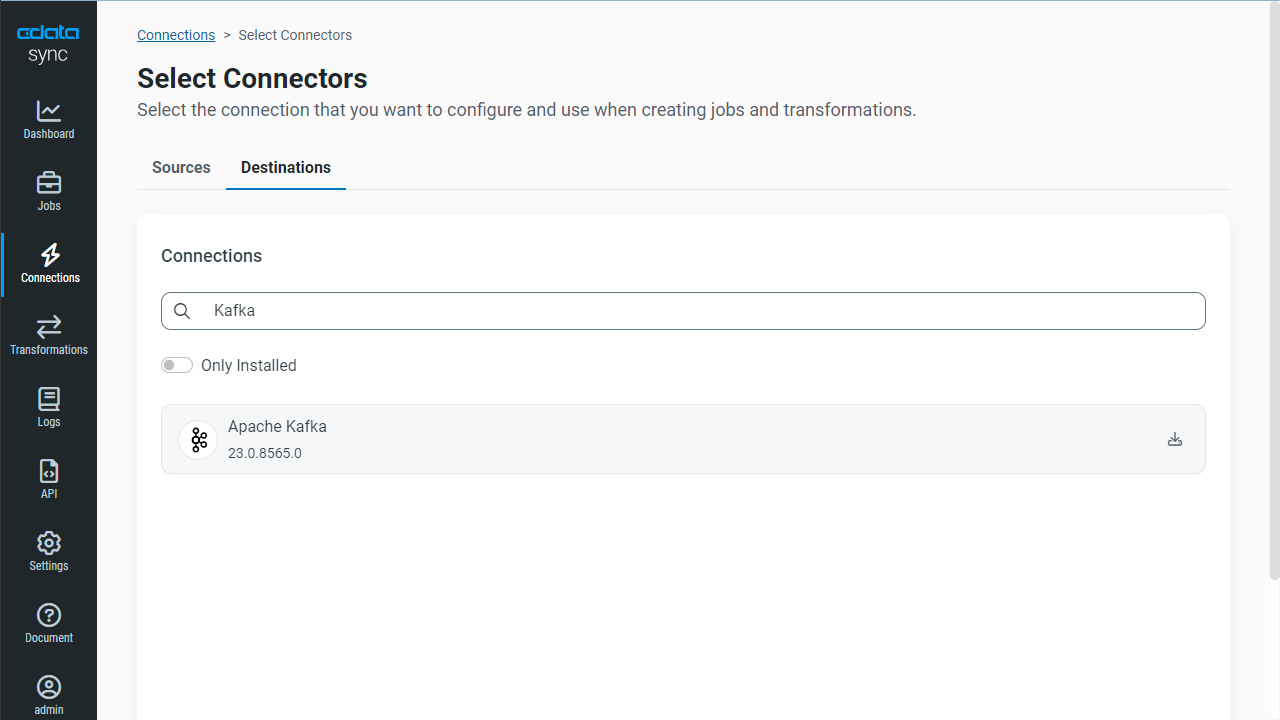

Configure Apache Kafka as a Replication Destination

Using CData Sync, you can replicate Azure Data Lake Storage data to Kafka. To add a replication destination, navigate to the Connections tab.

- Click Add Connection.

- Select Apache Kafka as a destination.

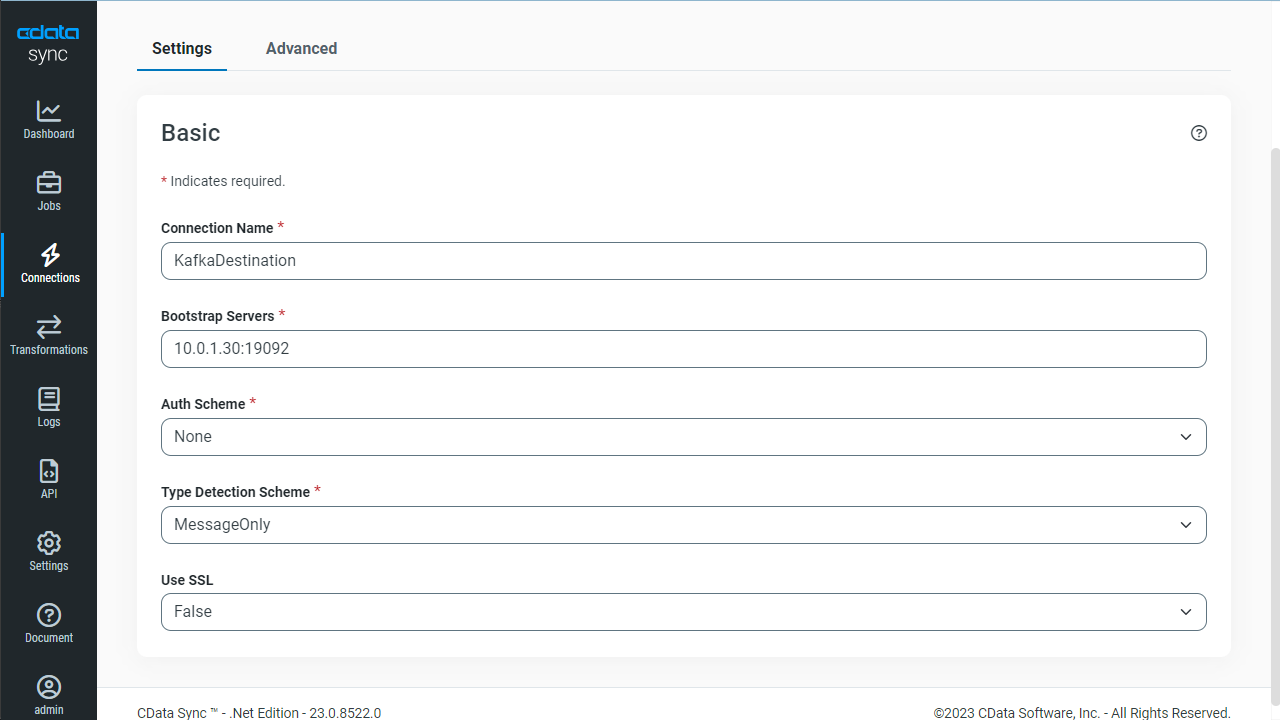

- Enter the necessary connection properties:

  - Bootstrap Servers - Enter the address of the Apache Kafka bootstrap servers to which you want to connect.

  - Auth Scheme - Select the authentication scheme. Plain is the default setting. For this setting, specify your login credentials:

    - User - Enter the username that you use to authenticate to Apache Kafka.

    - Password - Enter the password that you use to authenticate to Apache Kafka.

  - Type Detection Scheme - Specify the type-detection scheme (None, RowScan, SchemaRegistry, or MessageOnly) that you want to use. The default is None.

  - Use SSL - Specify whether you want to use the Secure Sockets Layer (SSL) protocol. The default value is False.

- Click Test Connection to ensure that the connection is configured properly.

- Click Save Changes.

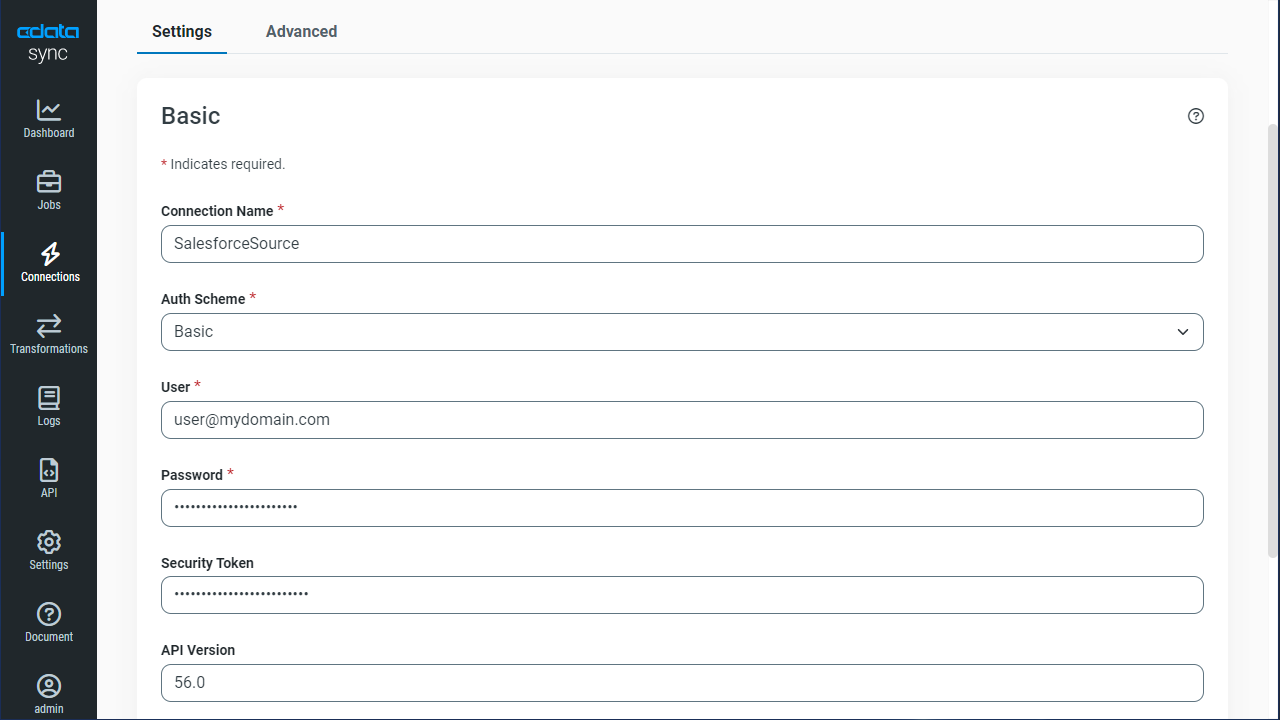
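The destination properties listed above can be summarized as a small checklist in code. The following is only an illustrative sketch: `KafkaDestinationSettings` and its `problems` method are hypothetical names, not part of CData Sync, and the broker address in the usage note is a placeholder. It assumes that the Plain scheme needs both User and Password, as the steps above describe, and it applies the defaults stated there (Plain, None, False).

```python
from dataclasses import dataclass
from typing import List, Optional

# Type detection schemes named in the connection dialog.
TYPE_DETECTION_SCHEMES = {"None", "RowScan", "SchemaRegistry", "MessageOnly"}


@dataclass
class KafkaDestinationSettings:
    """Hypothetical container mirroring the fields of the connection form."""
    bootstrap_servers: str
    auth_scheme: str = "Plain"          # default per the steps above
    user: Optional[str] = None
    password: Optional[str] = None
    type_detection_scheme: str = "None"  # default per the steps above
    use_ssl: bool = False                # default per the steps above

    def problems(self) -> List[str]:
        """Return a list of human-readable issues; empty means the form looks complete."""
        issues = []
        if not self.bootstrap_servers.strip():
            issues.append("Bootstrap Servers is required")
        if self.auth_scheme == "Plain":
            if not self.user:
                issues.append("User is required for the Plain auth scheme")
            if not self.password:
                issues.append("Password is required for the Plain auth scheme")
        if self.type_detection_scheme not in TYPE_DETECTION_SCHEMES:
            issues.append("Unknown Type Detection Scheme: " + self.type_detection_scheme)
        return issues
```

For example, `KafkaDestinationSettings("broker1:9092", user="sync", password="secret").problems()` returns an empty list, while omitting the credentials reports them as missing. Clicking Test Connection in CData Sync remains the authoritative check.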

Configure the Azure Data Lake Storage Connection

To add a connection to your Azure Data Lake Storage account, navigate to the Connections tab.

- Click Add Connection.

- Select a source (Azure Data Lake Storage).

- Configure the connection properties.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication.

This requires an Active Directory web application.

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the documentation for the TenantId property for information on how to obtain it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key used to authenticate calls to the API. See the documentation for the AccessKey property for information on how to obtain it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

- Click Connect to ensure that the connection is configured properly.

- Click Save Changes.

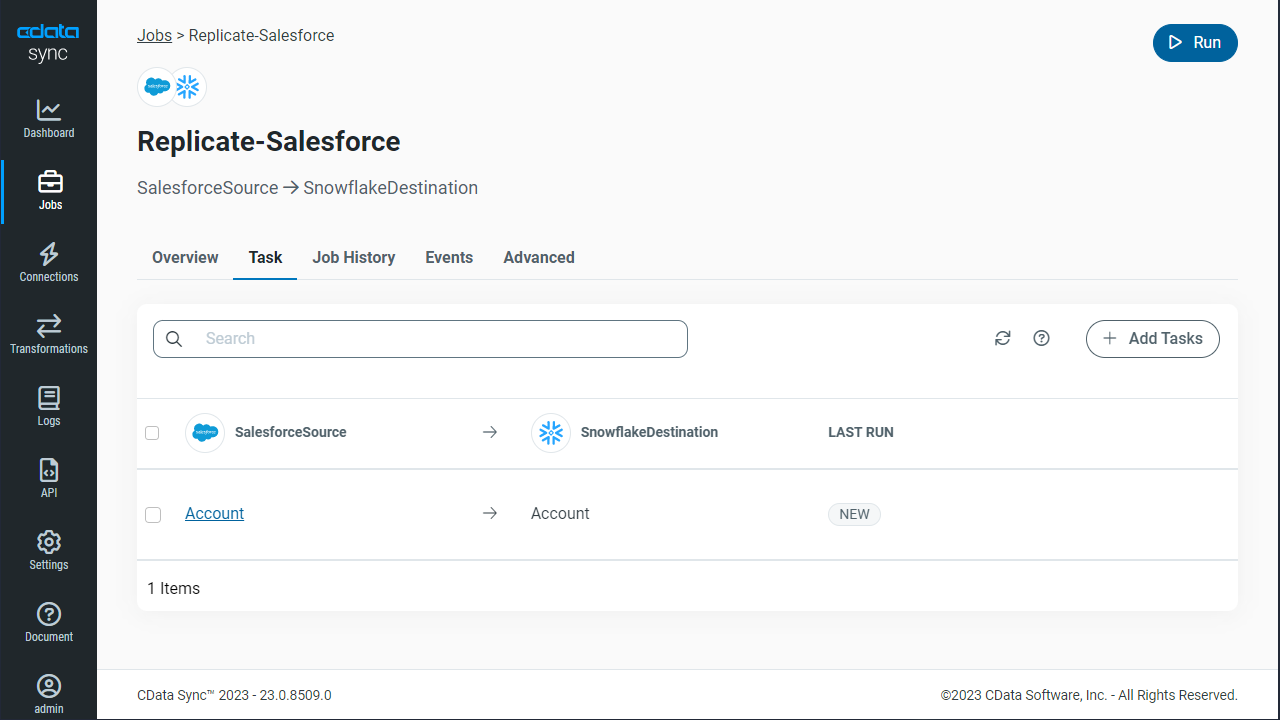

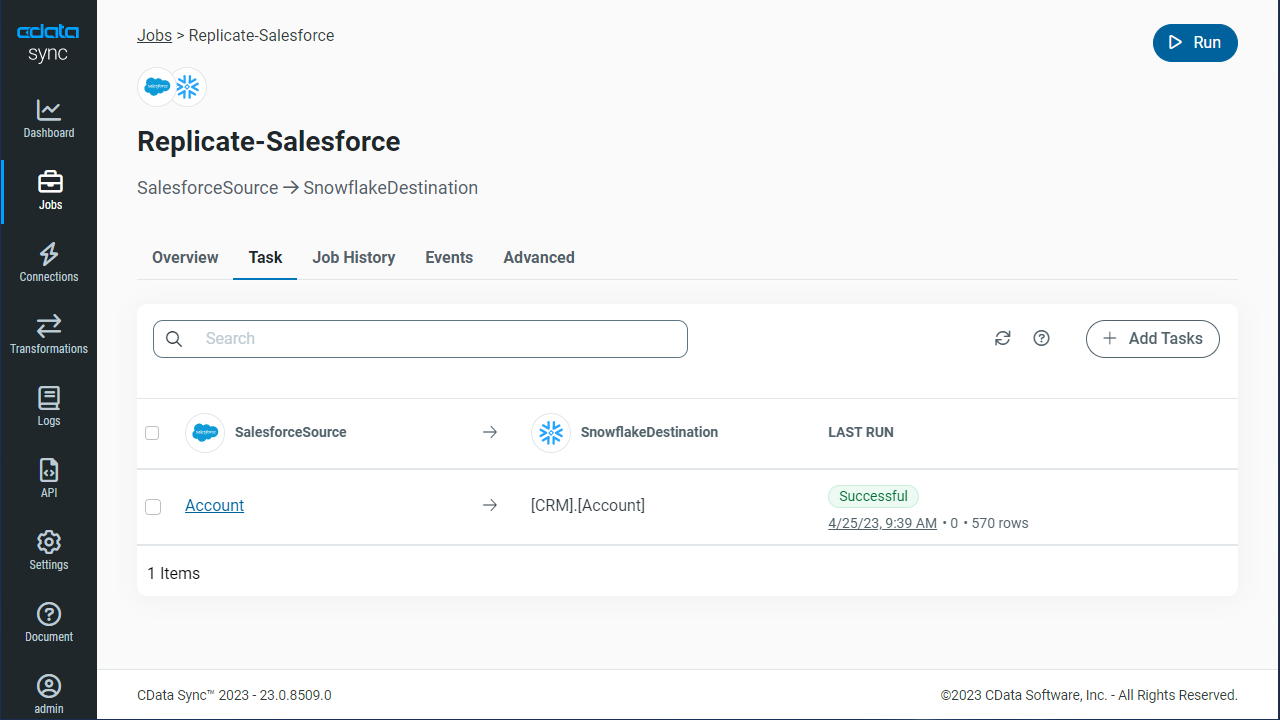
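Because the Gen 1 and Gen 2 accounts need different properties, it can help to check a planned configuration against the two lists before entering it. The sketch below is a hypothetical helper, not a CData Sync API: `REQUIRED_PROPERTIES` and `missing_properties` are illustrative names. It treats Directory as optional, since the text above says the root directory is used when it is not specified.

```python
from typing import Dict, List

# Properties each schema needs, taken from the two lists above.
# Directory is left out because it falls back to the root directory.
REQUIRED_PROPERTIES: Dict[str, List[str]] = {
    "ADLSGen1": ["Account", "OAuthClientId", "OAuthClientSecret", "TenantId"],
    "ADLSGen2": ["Account", "FileSystem", "AccessKey"],
}


def missing_properties(props: Dict[str, str]) -> List[str]:
    """Return the required property names that are absent or empty for the chosen Schema."""
    schema = props.get("Schema")
    if schema not in REQUIRED_PROPERTIES:
        raise ValueError("Schema must be ADLSGen1 or ADLSGen2")
    return [name for name in REQUIRED_PROPERTIES[schema] if not props.get(name)]
```

Calling `missing_properties({"Schema": "ADLSGen1", "Account": "myaccount"})` returns the three OAuth-related properties that still need values. The account name here is a placeholder.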

Configure Replication Queries

CData Sync enables you to control replication with a point-and-click interface and with SQL queries. For each replication you wish to configure, navigate to the Jobs tab and click Add Job. Select the Source and Destination for your replication.

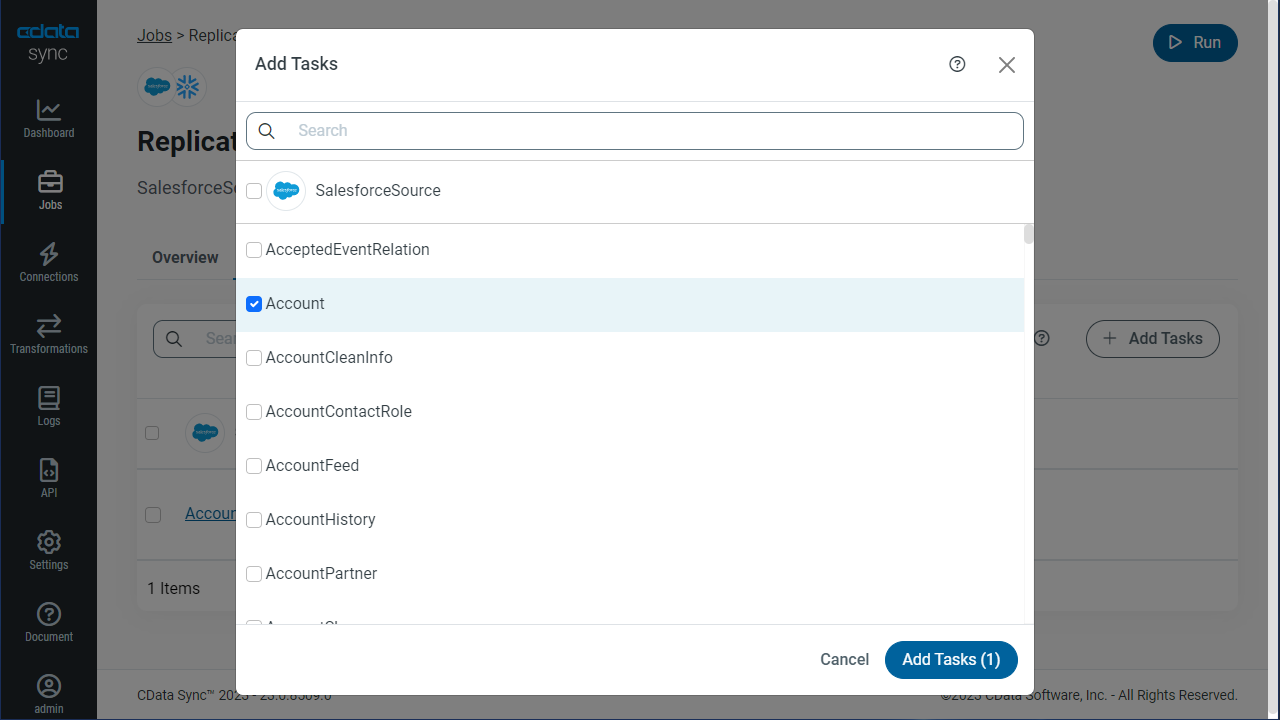

Replicate Entire Tables

To replicate an entire table, click Add Tables in the Tables section, choose the table(s) you wish to replicate, and click Add Selected Tables.

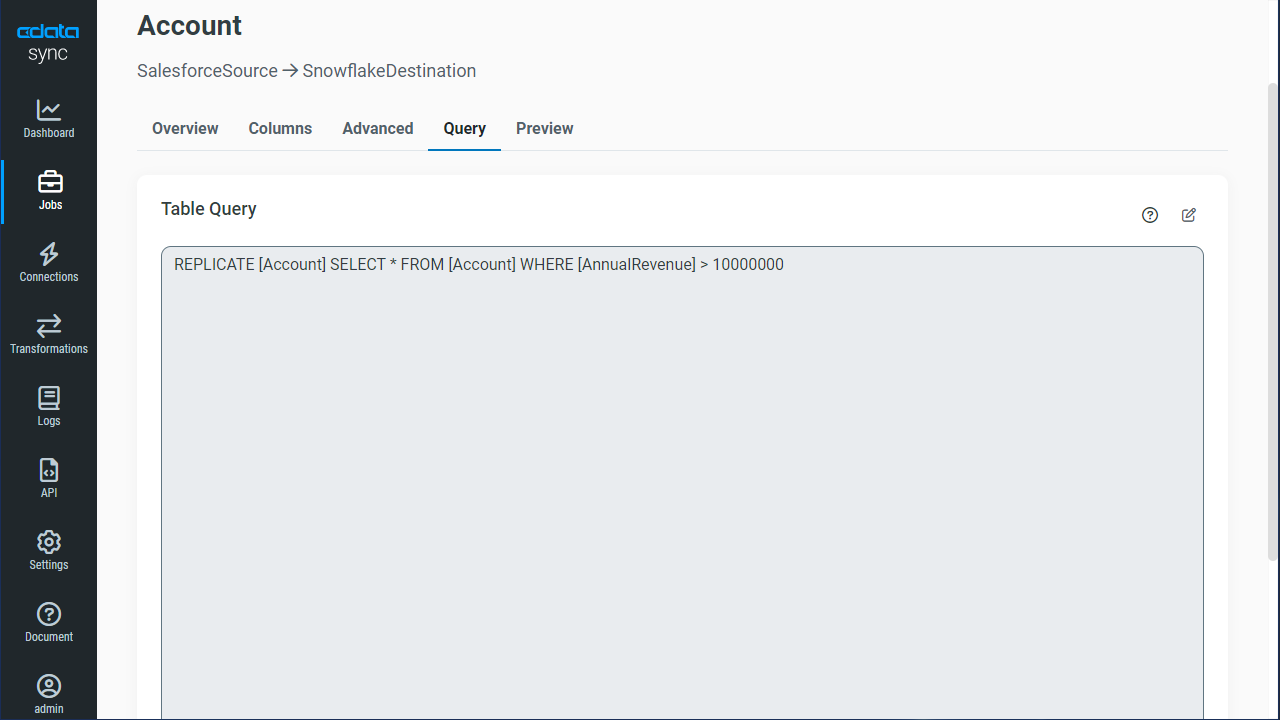

Customize Your Replication

You can use the Columns and Query tabs of a task to customize your replication. The Columns tab allows you to specify which columns to replicate, rename the columns at the destination, and even perform operations on the source data before replicating. The Query tab allows you to add filters, grouping, and sorting to the replication.
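To make the effect of these two tabs concrete, the sketch below applies the same kinds of changes to a few in-memory rows. It only illustrates the concepts and does not show how CData Sync works internally. The sample rows, the column names (`Id`, `Name`, `SizeBytes`), and the `customize` function are all hypothetical.

```python
from typing import Dict, List

# Hypothetical source rows standing in for a replicated table.
SOURCE_ROWS: List[Dict] = [
    {"Id": 1, "Name": "alpha", "SizeBytes": 2048},
    {"Id": 2, "Name": "beta", "SizeBytes": 512},
    {"Id": 3, "Name": "gamma", "SizeBytes": 4096},
]


def customize(rows: List[Dict]) -> List[Dict]:
    # Query tab analogue: filter the rows, then sort them.
    kept = [r for r in rows if r["SizeBytes"] >= 1024]
    kept.sort(key=lambda r: r["SizeBytes"], reverse=True)
    # Columns tab analogue: choose columns, rename them,
    # and apply an operation to the source values.
    return [
        {
            "file_id": r["Id"],                 # renamed column
            "name": r["Name"].upper(),          # operation on source data
            "size_kb": r["SizeBytes"] / 1024,   # derived value
        }
        for r in kept
    ]
```

With the sample rows, `customize(SOURCE_ROWS)` keeps the two rows of at least 1024 bytes, orders them largest first, and emits them under the renamed columns.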

Schedule Your Replication

In the Schedule section, you can schedule a job to run automatically at a specified interval, ranging from once every 10 minutes to once every month.

Once you have configured the replication job, click Save Changes. You can configure any number of jobs to manage the replication of your Azure Data Lake Storage data to Kafka.