Build an OLAP Cube in SSAS from Databricks Data

Establish a connection to Databricks data from SQL Server Analysis Services, and use the Databricks Data Provider to build OLAP cubes for use in analytics and reporting.

SQL Server Analysis Services (SSAS) is an analytical data engine used in decision support and business analytics. It provides high-level semantic data models for business reports and client applications such as Power BI, Excel, Reporting Services reports, and other data visualization tools. Combined with the CData ADO.NET Provider for Databricks, SSAS can build cubes directly from Databricks data, so you can analyze that data with the same models and tools.

In this article, we walk through developing and deploying a multidimensional model of Databricks data by creating an Analysis Services project in Visual Studio. Before you begin, make sure you have access to an SSAS instance and have installed the CData ADO.NET Provider for Databricks.

Creating a Data Source for Databricks

Start by creating a new Analysis Services Multidimensional and Data Mining Project in Visual Studio. Next, create a Data Source for Databricks data in the project.

- In the Solution Explorer, right-click Data Sources and select New Data Source.

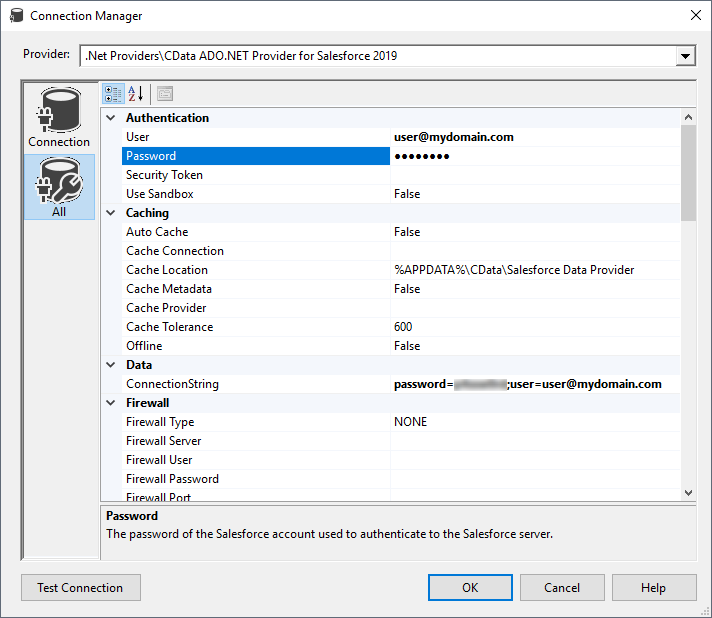

- Opt to create a data source based on an existing or new connection and click New.

- In the Connection Manager, select CData ADO.NET Provider for Databricks, enter the necessary connection properties, and click Next.

To connect to a Databricks cluster, set the properties as described below.

Note: You can find the required values in your Databricks instance: navigate to Clusters, select the desired cluster, and open the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).

When you configure the connection, you may also want to set the Max Rows connection property. It limits the number of rows returned, which can noticeably improve performance while you design reports and visualizations.
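Behind the Connection Manager dialog, these provider settings amount to a connection string. The Python sketch below shows one way to assemble such a string from the properties listed above, which can help if you script connection setup or want to sanity-check values before entering them. It assumes the provider accepts semicolon-delimited `Key=Value;` pairs using the property names given in this article; `build_connection_string` is a hypothetical helper, the host, path, and token are placeholders, and the exact property spellings should be confirmed against the provider's documentation.

```python
from typing import Optional


def build_connection_string(server: str, http_path: str, token: str,
                            max_rows: Optional[int] = None) -> str:
    """Join the article's connection properties into Key=Value; pairs (assumed format)."""
    props = {"Server": server, "HTTPPath": http_path, "Token": token}
    if max_rows is not None:
        if max_rows <= 0:
            raise ValueError("max_rows must be a positive integer")
        # Spelling follows the article ("Max Rows"); verify it for your provider version.
        props["Max Rows"] = str(max_rows)
    for key, value in props.items():
        if not value:
            raise ValueError(f"{key} must not be empty")
        # No quoting is implemented here, so values containing ';' are rejected.
        if ";" in value:
            raise ValueError(f"{key} must not contain ';'")
    return "".join(f"{key}={value};" for key, value in props.items())


# Placeholder values: substitute those from your cluster's JDBC/ODBC tab.
print(build_connection_string(
    server="my-workspace.cloud.databricks.com",
    http_path="sql/protocolv1/o/0/my-cluster",
    token="MY_TOKEN",
    max_rows=100,
))
# Server=my-workspace.cloud.databricks.com;HTTPPath=sql/protocolv1/o/0/my-cluster;Token=MY_TOKEN;Max Rows=100;
```

Rejecting `;` rather than quoting keeps the sketch short; a production version would follow the provider's quoting rules instead.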


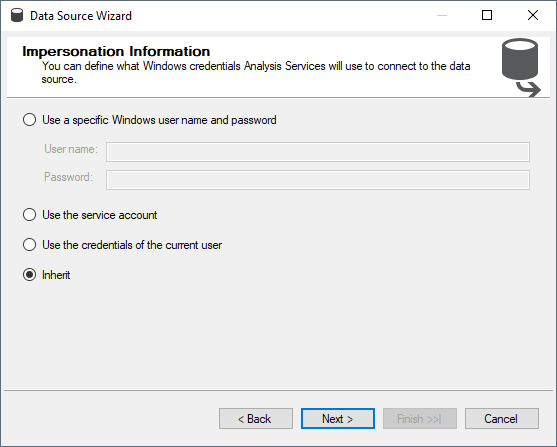

- Set the impersonation method to Inherit and click Next.


- Name the data source (CData Databricks Source) and click Finish.

Creating a Data Source View

After you create the data source, create the data source view.

- In the Solution Explorer, right-click Data Source Views and select New Data Source View.

- Select the data source you just created (CData Databricks Source) and click Next.

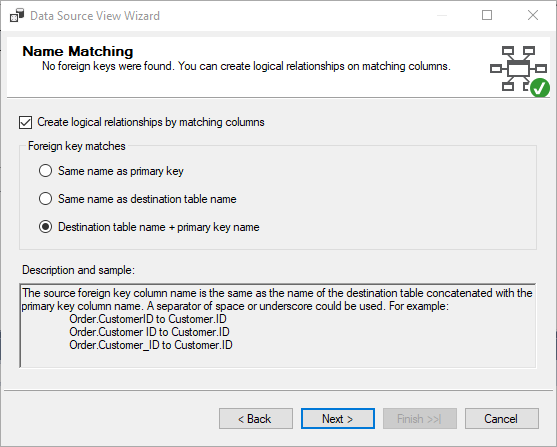

- Choose a foreign key match pattern that matches your underlying data source and click Next.


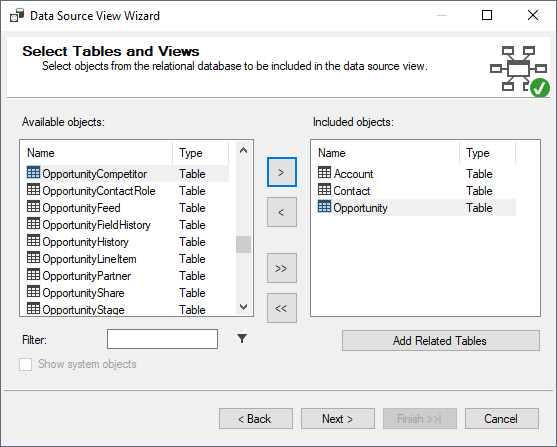

- Select Databricks tables to add to the view and click Next.

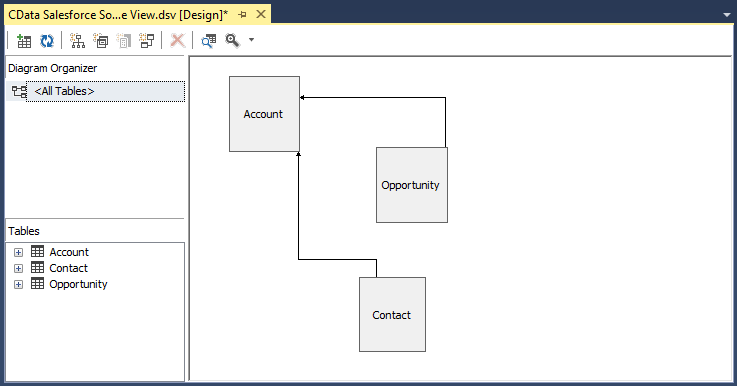


- Name the view and click Finish.

Based on the foreign key match pattern you chose, relationships in the underlying data are detected automatically. You can view and edit these relationships by double-clicking the data source view in Solution Explorer.
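The automatic detection described above relies on column naming conventions. As a rough illustration only, the Python sketch below infers relationships by name under three patterns loosely modeled on the wizard's name-matching options: a referencing column named like the target's primary key, like the target table, or like the table name followed by the primary key. It is not how SSAS implements matching internally; the `Table` type, the pattern labels, and the sample tables are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Table:
    name: str
    primary_key: str
    columns: list


def candidate_fk_name(target: Table, pattern: str) -> str:
    """Column name a referencing table would use to point at `target` under `pattern`."""
    if pattern == "same_as_primary_key":
        return target.primary_key
    if pattern == "same_as_table_name":
        return target.name
    if pattern == "table_name_plus_primary_key":
        return target.name + target.primary_key
    raise ValueError(f"unknown pattern: {pattern}")


def detect_relationships(tables, pattern):
    """Return (source_table, column, target_table) triples inferred purely by name.

    Comparison is case-sensitive in this sketch; a real tool may behave differently.
    """
    found = []
    for target in tables:
        fk = candidate_fk_name(target, pattern)
        for source in tables:
            if source is not target and fk in source.columns:
                found.append((source.name, fk, target.name))
    return found


# Hypothetical tables: Orders.CustomerId matches the Customers primary key.
customers = Table("Customers", "CustomerId", ["CustomerId", "Name"])
orders = Table("Orders", "OrderId", ["OrderId", "CustomerId", "Amount"])
print(detect_relationships([customers, orders], "same_as_primary_key"))
# [('Orders', 'CustomerId', 'Customers')]
```

This also shows why the pattern matters: if every table used a generic key such as `Id`, the primary-key pattern would link every table to every other, while the table-name-plus-key pattern would not.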

Note that adding a secondary data source to the Data Source View is not supported. When working with multiple data sources, SSAS requires both sources to support remote queries via OpenRowset, which the ADO.NET Provider does not support.

Creating a Cube for Databricks

The last step before you can process the project and deploy Databricks data to SSAS is creating the cubes.

- In the Solution Explorer, right-click Cubes and select New Cube.

- Select "Use existing tables" and click Next.

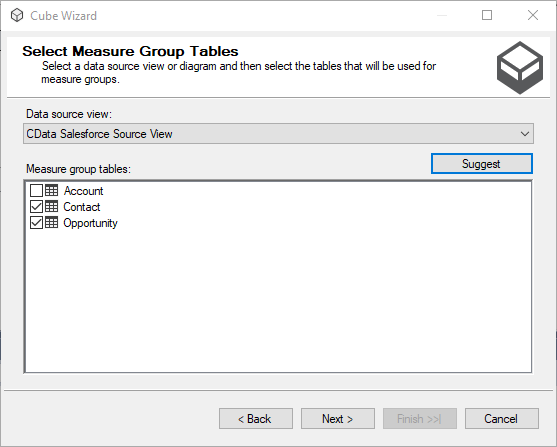

- Select the tables to use as measure group tables and click Next.


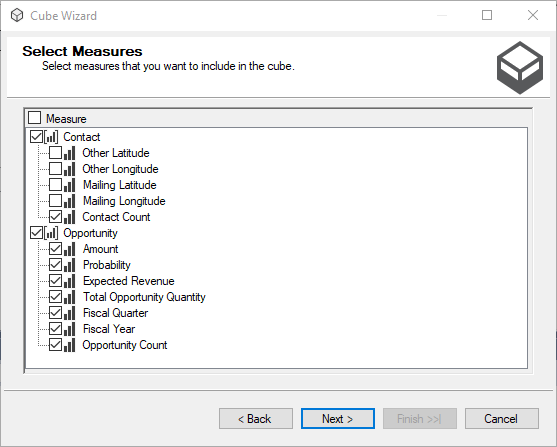

- Select the measures you want to include in the cube and click Next.


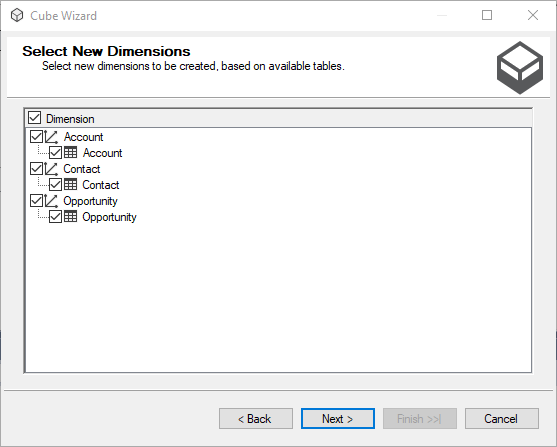

- Select the dimensions to be created, based on the available tables, and click Next.


- Review all of your selections and click Finish.
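Conceptually, a cube aggregates each measure across the attributes of its dimensions so that queries can slice totals quickly. The toy Python sketch below computes those sums in memory for a hypothetical fact table with a `Amount` measure and `Region` and `Year` dimension attributes. SSAS stores and pre-aggregates data very differently, so treat this only as an illustration of what "a measure grouped by dimensions" means, not as a model of the engine.

```python
from collections import defaultdict


def aggregate(facts, dimensions, measure):
    """Sum `measure` over every combination of values of the given dimension attributes."""
    totals = defaultdict(float)
    for row in facts:
        key = tuple(row[d] for d in dimensions)
        totals[key] += row[measure]
    return dict(totals)


# Hypothetical fact rows; in the cube these would come from a measure group table.
facts = [
    {"Region": "East", "Year": 2023, "Amount": 100.0},
    {"Region": "East", "Year": 2024, "Amount": 150.0},
    {"Region": "West", "Year": 2024, "Amount": 80.0},
]

print(aggregate(facts, ["Region"], "Amount"))  # {('East',): 250.0, ('West',): 80.0}
print(aggregate(facts, ["Year"], "Amount"))    # {(2023,): 100.0, (2024,): 230.0}
```

Passing an empty dimension list returns the grand total, which corresponds to viewing the measure with no dimension applied.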

Process the Project

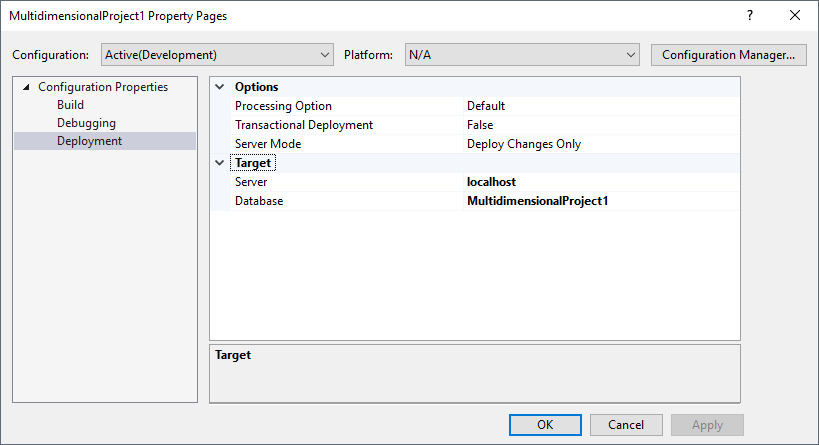

With the data source, data source view, and cube created, you are ready to deploy the cube to SSAS. To configure the target server and database, right-click the project and select Properties. Navigate to Deployment and configure the Server and Database properties in the Target section.

After configuring the target server and database, right-click the project and select Process. You may need to build and deploy the project as part of this step. Once the project is built and deployed, click Run in the Process Database wizard.
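Processing can also be scripted. SSAS accepts XMLA commands, and a `Process` command whose `Type` is `ProcessFull` fully processes a database; such a command can be run from an XMLA query window in SQL Server Management Studio. The Python sketch below only generates that command text. `process_full_command` is a hypothetical helper, and the value you pass should be the ID of your deployed database (visible in its properties in Management Studio), which is not guaranteed to match the name entered in the Deployment settings.

```python
import xml.etree.ElementTree as ET

# Namespace used by Analysis Services XMLA engine commands.
XMLA_NS = "http://schemas.microsoft.com/analysisservices/2003/engine"


def process_full_command(database_id: str) -> str:
    """Build an XMLA Batch containing a ProcessFull command for one database."""
    batch = ET.Element(f"{{{XMLA_NS}}}Batch")
    process = ET.SubElement(batch, f"{{{XMLA_NS}}}Process")
    target = ET.SubElement(process, f"{{{XMLA_NS}}}Object")
    ET.SubElement(target, f"{{{XMLA_NS}}}DatabaseID").text = database_id
    ET.SubElement(process, f"{{{XMLA_NS}}}Type").text = "ProcessFull"
    # default_namespace emits xmlns="..." on Batch instead of ns0: prefixes.
    return ET.tostring(batch, encoding="unicode", default_namespace=XMLA_NS)


# Hypothetical database ID; replace it with the ID of your deployed database.
print(process_full_command("CData Databricks Cube"))
```

Generating the XML with `ElementTree` rather than string formatting ensures that special characters in the database ID are escaped correctly.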

You now have an OLAP cube for Databricks data in your SSAS instance, ready for analysis and reporting. Get started with a free, 30-day trial of the CData ADO.NET Provider for Databricks.