Access Databricks Data in Mule Applications Using the CData JDBC Driver

Create a simple Mule Application that uses HTTP and SQL with CData JDBC drivers to create a JSON endpoint for Databricks data.

The CData JDBC Driver for Databricks connects Databricks data to Mule applications, enabling read, write, update, and delete operations through familiar SQL queries. With the driver, you can build Mule applications that back up, transform, report on, and analyze Databricks data.

This article demonstrates how to use the CData JDBC Driver for Databricks inside a Mule project to create a Web interface for Databricks data. The resulting application lets you request Databricks data with an HTTP request and returns the results as JSON. The same procedure works with any CData JDBC Driver, so you can create a Web interface for any of the 200+ available data sources.

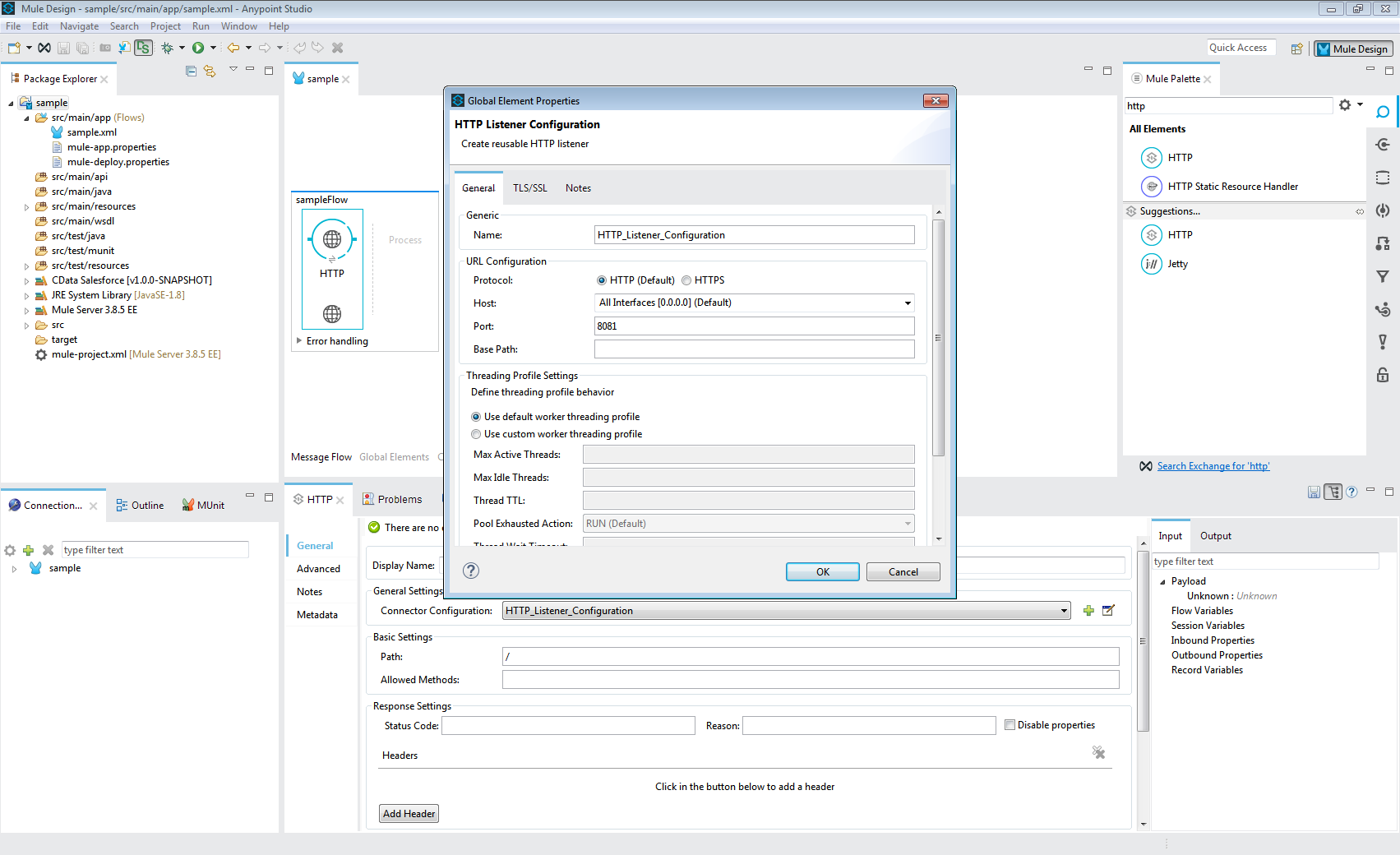

- Create a new Mule Project in Anypoint Studio.

- Add an HTTP Connector to the Message Flow.

- Configure the address for the HTTP Connector.

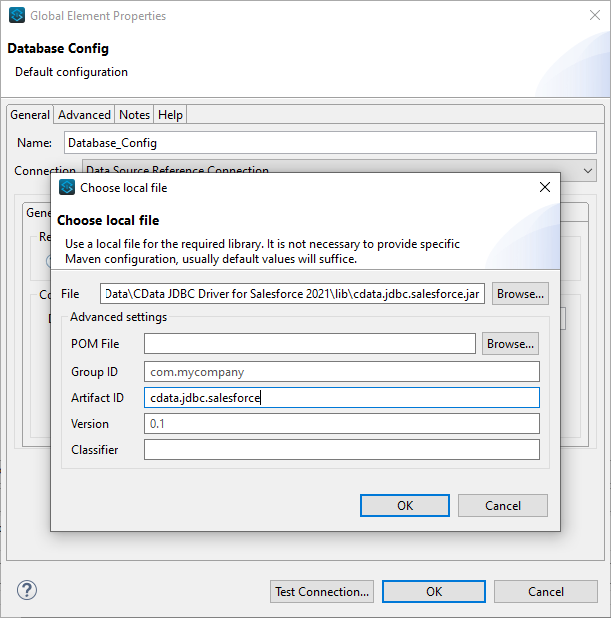

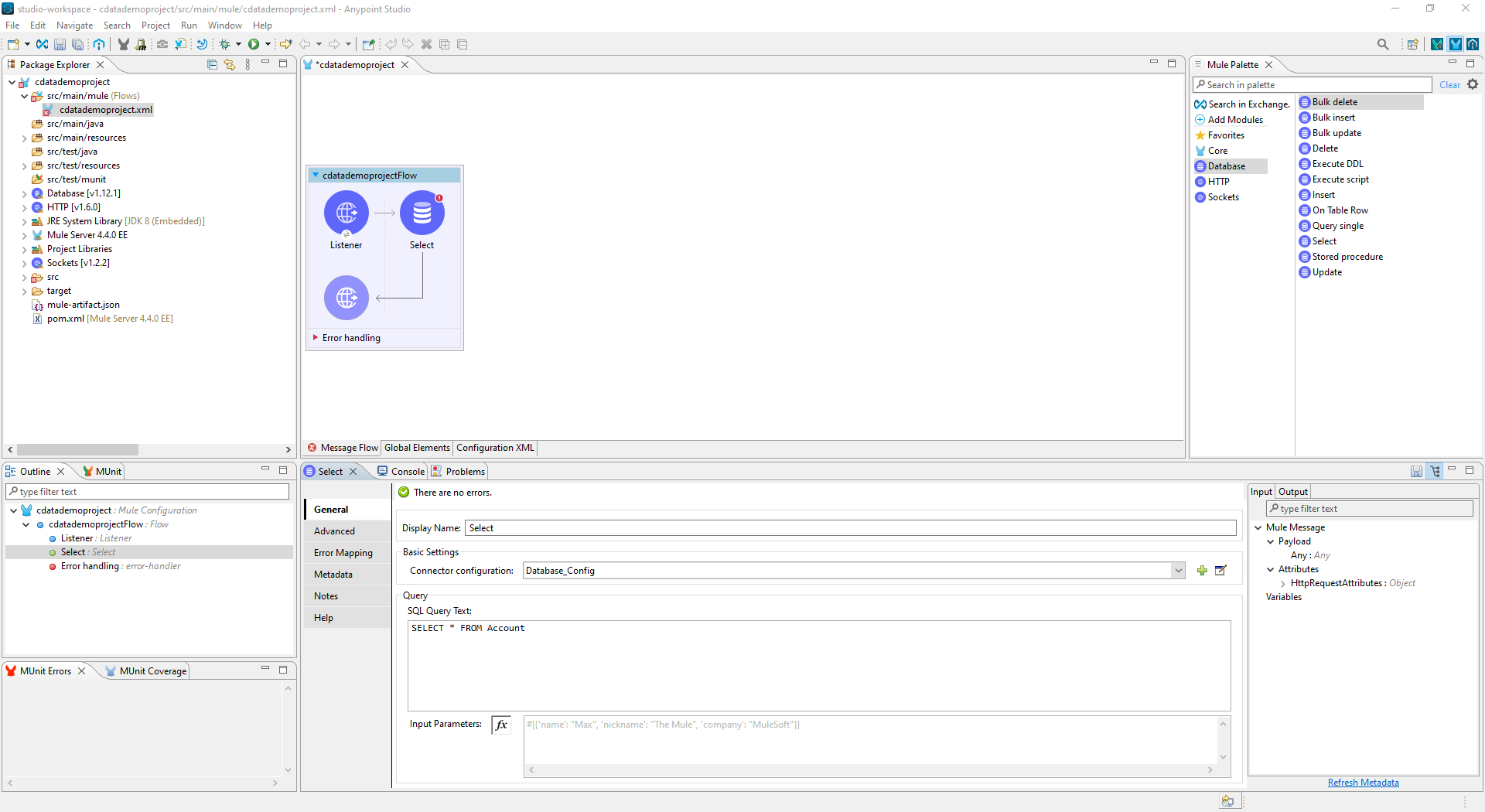

- Add a Database Select Connector to the same flow, after the HTTP Connector.

- Create a new Connection (or edit an existing one) and configure the properties.

- Set Connection to "Generic Connection".

- Select the CData JDBC Driver JAR file in the Required Libraries section (e.g. cdata.jdbc.databricks.jar).

- Set the URL to the connection string for Databricks.

To connect to a Databricks cluster, set the properties as described below.

Note: You can find these values in your Databricks instance by navigating to Clusters, selecting the desired cluster, and opening the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).
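The three properties above end up in a single JDBC URL. The sketch below assembles one in plain Java. It assumes the `jdbc:databricks:` prefix and the semicolon-separated `Name=Value;` pairs that CData connection strings use, so compare its output with what the connection string designer produces before relying on it. `DatabricksUrl`, the `DATABRICKS_TOKEN` environment variable, and the hostname and path values are hypothetical placeholders.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class DatabricksUrl {
    // Assumed URL prefix for the CData Databricks driver; confirm it with the connection string designer.
    static final String PREFIX = "jdbc:databricks:";

    // Builds "jdbc:databricks:Name=Value;Name=Value;" from the properties, in insertion order.
    static String build(Map<String, String> props) {
        StringBuilder url = new StringBuilder(PREFIX);
        for (Map.Entry<String, String> e : props.entrySet()) {
            String value = e.getValue();
            // This sketch does not quote values, so reject ones that would break the Name=Value; format.
            if (value == null || value.indexOf(';') >= 0) {
                throw new IllegalArgumentException("Unsupported value for " + e.getKey());
            }
            url.append(e.getKey()).append('=').append(value).append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("Server", "my-workspace.cloud.databricks.com");   // hypothetical Server Hostname
        props.put("HTTPPath", "sql/protocolv1/o/0/my-cluster-id");  // hypothetical HTTP Path
        // Read the personal access token from the environment instead of hard-coding it.
        props.put("Token", System.getenv().getOrDefault("DATABRICKS_TOKEN", "placeholder-token"));
        System.out.println(build(props));
    }
}
```

The designer may add properties this sketch knows nothing about, so treat its output, not this helper, as the authoritative connection string.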

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Databricks JDBC Driver. Either double-click the JAR file or run it from the command line.

```shell
java -jar cdata.jdbc.databricks.jar
```

Fill in the connection properties and copy the connection string to the clipboard.

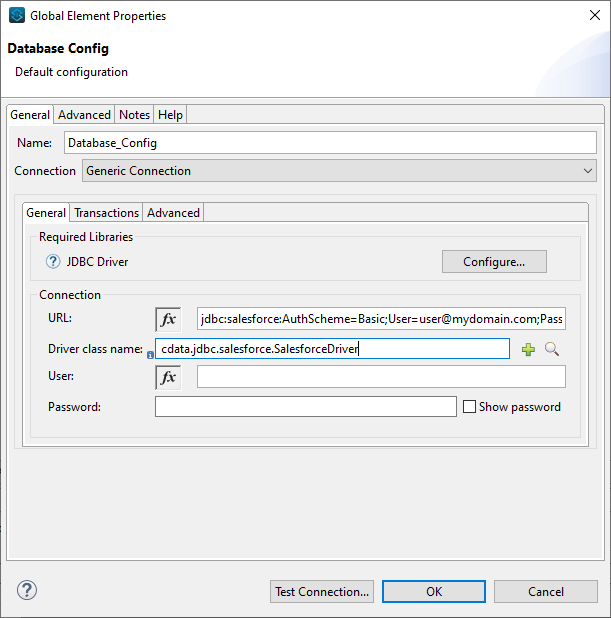

- Set the Driver class name to cdata.jdbc.databricks.DatabricksDriver.

- Click Test Connection.
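If you want to check the driver and URL outside Anypoint Studio before wiring them into a flow, a plain-Java equivalent of Test Connection is only a few lines. This is a minimal sketch, assuming the driver JAR is on the classpath; `ConnectionCheck` and `testConnection` are hypothetical names, and the URL is passed on the command line so the token stays out of source.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

class ConnectionCheck {
    // Driver class name given in the article; the CData JAR must be on the classpath.
    static final String DRIVER_CLASS = "cdata.jdbc.databricks.DatabricksDriver";

    // Returns true if a connection opens and passes a validity check within timeoutSeconds.
    static boolean testConnection(String url, int timeoutSeconds) {
        try (Connection conn = DriverManager.getConnection(url)) {
            return conn.isValid(timeoutSeconds);
        } catch (SQLException e) {
            System.err.println("Connection failed: " + e.getMessage());
            return false;
        }
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.err.println("usage: ConnectionCheck <jdbc-url>");
            return;
        }
        // Register the driver explicitly in case the JAR does not self-register.
        Class.forName(DRIVER_CLASS);
        System.out.println(testConnection(args[0], 10) ? "Connection OK" : "Connection failed");
    }
}
```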

- Set the SQL Query Text to a SQL query to request Databricks data. For example:

```sql
SELECT City, CompanyName FROM Customers WHERE Country = 'US'
```

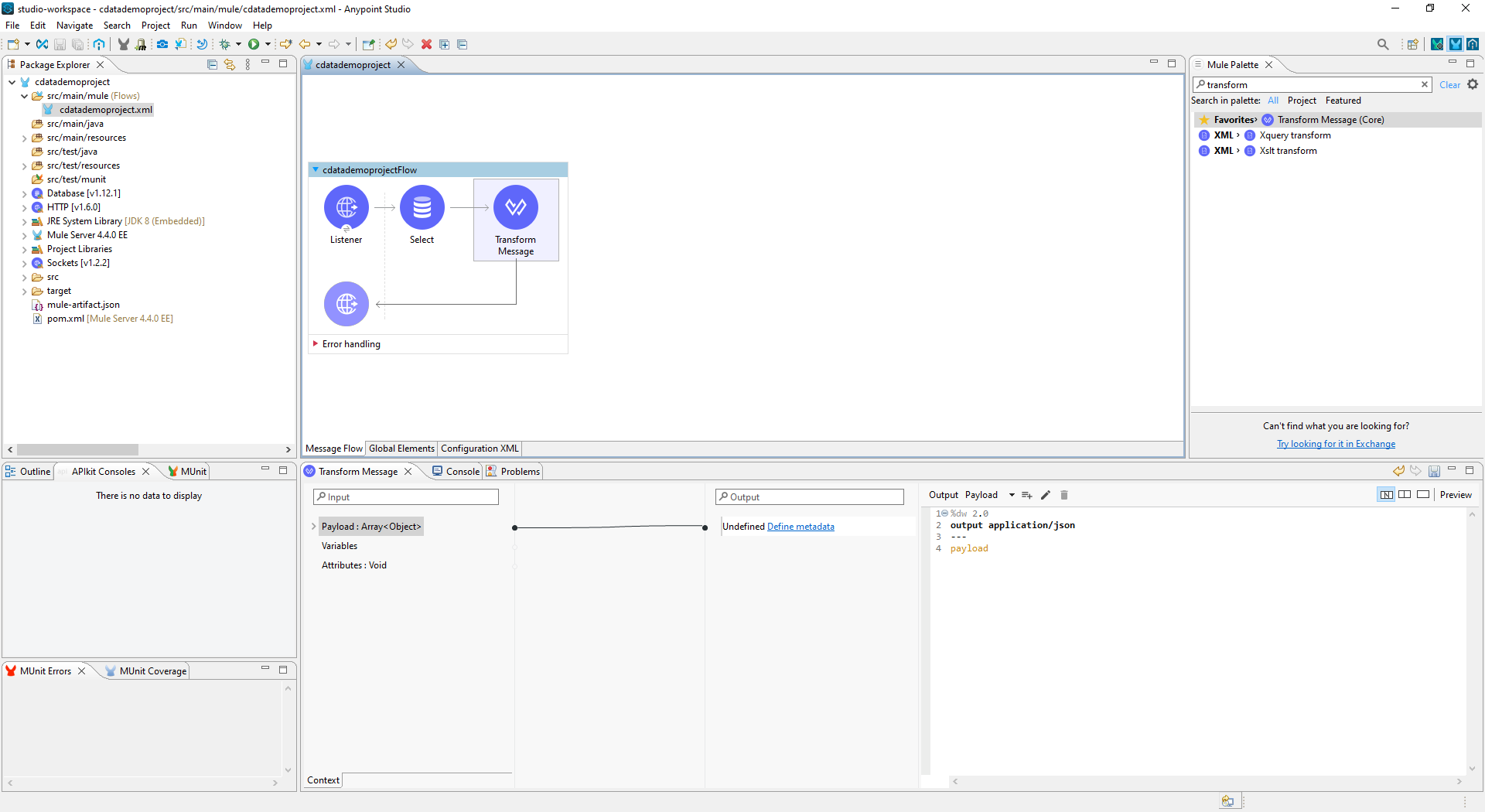
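The Select operation runs this query for you. For reference, the plain-Java sketch below runs the same query through JDBC, binding the country as a `?` parameter rather than a literal, and collects each row into an ordered column-to-value map, which is roughly the shape of data the Transform Message step works with. It assumes the driver supports standard prepared-statement parameters; `CustomerQuery`, `rowsToMaps`, and `customersIn` are hypothetical names, and `Customers` is simply the example table from the query above.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class CustomerQuery {
    // Same query as above, with the country supplied as a bind parameter.
    static final String SQL = "SELECT City, CompanyName FROM Customers WHERE Country = ?";

    // Copies every remaining row into an ordered map keyed by column label (one map per row).
    static List<Map<String, Object>> rowsToMaps(ResultSet rs) throws SQLException {
        ResultSetMetaData md = rs.getMetaData();
        int cols = md.getColumnCount();
        List<Map<String, Object>> rows = new ArrayList<>();
        while (rs.next()) {
            Map<String, Object> row = new LinkedHashMap<>();
            for (int i = 1; i <= cols; i++) {
                row.put(md.getColumnLabel(i), rs.getObject(i));
            }
            rows.add(row);
        }
        return rows;
    }

    // Binds the country instead of concatenating it into the SQL text.
    static List<Map<String, Object>> customersIn(Connection conn, String country) throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(SQL)) {
            ps.setString(1, country);
            try (ResultSet rs = ps.executeQuery()) {
                return rowsToMaps(rs);
            }
        }
    }

    public static void main(String[] args) throws SQLException {
        if (args.length == 0) {
            System.err.println("usage: CustomerQuery <jdbc-url>");
            return;
        }
        try (Connection conn = DriverManager.getConnection(args[0])) {
            customersIn(conn, "US").forEach(System.out::println);
        }
    }
}
```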

- Add a Transform Message Component to the flow.

- Set the Output script to the following to convert the payload to JSON:

```
%dw 2.0
output application/json
---
payload
```
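The DataWeave script does all of the conversion inside the flow. To show what "convert the payload to JSON" amounts to for a list of row maps, here is a small hand-rolled serializer in plain Java. It is an illustration only: `JsonWriter` is a hypothetical name, values it does not recognize (for example dates) fall back to their string form, and production Java code would normally use a library such as Jackson instead.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class JsonWriter {
    // Serializes lists, maps, strings, numbers, booleans, and null to compact JSON.
    static String toJson(Object value) {
        StringBuilder sb = new StringBuilder();
        write(value, sb);
        return sb.toString();
    }

    private static void write(Object v, StringBuilder sb) {
        if (v == null) {
            sb.append("null");
        } else if (v instanceof String) {
            writeString((String) v, sb);
        } else if (v instanceof Double && !Double.isFinite((Double) v)
                || v instanceof Float && !Float.isFinite((Float) v)) {
            sb.append("null"); // JSON has no NaN or Infinity
        } else if (v instanceof Number || v instanceof Boolean) {
            sb.append(v);
        } else if (v instanceof Map) {
            sb.append('{');
            boolean first = true;
            for (Map.Entry<?, ?> e : ((Map<?, ?>) v).entrySet()) {
                if (!first) sb.append(',');
                first = false;
                writeString(String.valueOf(e.getKey()), sb);
                sb.append(':');
                write(e.getValue(), sb);
            }
            sb.append('}');
        } else if (v instanceof List) {
            sb.append('[');
            boolean first = true;
            for (Object item : (List<?>) v) {
                if (!first) sb.append(',');
                first = false;
                write(item, sb);
            }
            sb.append(']');
        } else {
            writeString(v.toString(), sb); // fallback for dates and other types
        }
    }

    // Writes a quoted JSON string, escaping quotes, backslashes, and control characters.
    private static void writeString(String s, StringBuilder sb) {
        sb.append('"');
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        sb.append('"');
    }

    public static void main(String[] args) {
        Map<String, Object> row = new LinkedHashMap<>();
        row.put("City", "Raleigh");    // hypothetical sample row
        row.put("CompanyName", "Acme");
        System.out.println(toJson(List.of(row)));
    }
}
```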

- To view your Databricks data, navigate to the address you configured for the HTTP Connector (localhost:8081 by default): http://localhost:8081. The Databricks data is available as JSON in your Web browser and any other tools capable of consuming JSON endpoints.
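To consume the endpoint from code rather than a browser, the JDK's built-in `java.net.http.HttpClient` (Java 11 and later) is enough. This sketch assumes the default `http://localhost:8081/` address from the step above; `EndpointClient` and `fetchJson` are hypothetical names, and the timeouts are arbitrary choices.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

class EndpointClient {
    // Plain HTTP/1.1 client for a local, unencrypted endpoint.
    private static final HttpClient CLIENT = HttpClient.newBuilder()
            .version(HttpClient.Version.HTTP_1_1)
            .connectTimeout(Duration.ofSeconds(5))
            .build();

    // GETs the endpoint and returns the response body, failing on any non-2xx status.
    static String fetchJson(URI uri) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(uri)
                .header("Accept", "application/json")
                .timeout(Duration.ofSeconds(30))
                .GET()
                .build();
        HttpResponse<String> response = CLIENT.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() / 100 != 2) {
            throw new IOException("HTTP " + response.statusCode() + " from " + uri);
        }
        return response.body();
    }

    public static void main(String[] args) throws Exception {
        // Default listener address; pass a different URL if you changed the host, port, or path.
        URI uri = URI.create(args.length > 0 ? args[0] : "http://localhost:8081/");
        System.out.println(fetchJson(uri));
    }
}
```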

At this point, you have a simple Web interface for working with Databricks data (as JSON) in custom apps and a wide variety of BI, reporting, and ETL tools. Download a free, 30-day trial of the JDBC Driver for Databricks and see the CData difference in your Mule applications today.