Since the first integrated database management system (DBMS) was born in 1960, computerized databases have evolved from handling numerical or statistical data tied to physical records to managing that data in one central place.

In the mid-1980s, databases became relational (RDBMS). They gained the ability to index data, creating tables and views that exposed new relationships between data. By the 1990s, those databases supported a huge profusion of data types, distributed among multiple machines.

The different types of data had to be transformed into a format that could be analyzed and stored in a unified environment, such as a data warehouse or data lake. This gave rise to ETL.

ETL (Extract, Transform, and Load) is a process that:

- Extracts and replicates data from the source system;

- Transforms it so it can interoperate with other data at the destination data warehouse or data lake; and finally

- Loads it into the destination data warehouse or data lake.
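The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: it assumes two in-memory SQLite databases stand in for the source system and the warehouse, and the table names (`orders`, `orders_fact`) and the cents-to-currency transformation are hypothetical.

```python
import sqlite3


def extract(source: sqlite3.Connection) -> list:
    """Extract: copy the raw rows out of the source system."""
    return source.execute("SELECT id, amount_cents FROM orders").fetchall()


def transform(rows: list) -> list:
    """Transform: convert cents to currency units to match the warehouse format."""
    return [(order_id, cents / 100) for order_id, cents in rows]


def load(warehouse: sqlite3.Connection, rows: list) -> None:
    """Load: write the transformed copy into the destination table."""
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS orders_fact (id INTEGER PRIMARY KEY, amount REAL)"
    )
    warehouse.executemany("INSERT INTO orders_fact VALUES (?, ?)", rows)
    warehouse.commit()


def run_etl(source: sqlite3.Connection, warehouse: sqlite3.Connection) -> int:
    """Run the three steps in order and report how many rows were copied."""
    rows = transform(extract(source))
    load(warehouse, rows)
    return len(rows)


# Hypothetical source system with two orders (assumed sample data).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount_cents INTEGER)")
source.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 1250), (2, 400)])
source.commit()

# Hypothetical destination warehouse.
warehouse = sqlite3.connect(":memory:")
copied = run_etl(source, warehouse)
```

Note that after `run_etl` finishes, every order exists twice: once in the source and once, in a different shape, in the warehouse.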

There’s also a variant of ETL called ELT, where the transformation happens after the replicated data is loaded into the data warehouse or data lake. And there are other approaches available to facilitate data integration workflows, such as Change Data Capture (CDC) and Stream Data Integration (SDI). Both of these can be used to move data to a data warehouse or data lake in real time.

However, in all of these methodologies, the data is still being copied, transformed, and migrated. The processing required to fuel the pipeline and the difficulty of using the resulting data in near-real-time use cases both persist.

The effort to eliminate or reduce data duplication, and to cut down on processing overhead, led to the creation of Zero ETL, also known as zero integration.

What is Zero ETL?

Zero ETL is a methodology for storing and analyzing data within its source system in its original format, without any need for transformation or data movement. Modern data warehouses and data lakes, which are already located in the cloud, can use integrated services of cloud providers to analyze the data directly from their original sources.

No duplication, extraction, or transformation is required!

How Zero ETL works

In Zero ETL, data stays in place, but is made accessible through data virtualization and data federation technologies, without further manipulation. With zero integration, data is made available for real-time transformation and analysis.
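As a rough illustration of querying data where it lives, the sketch below uses SQLite's `ATTACH DATABASE` statement as a stand-in for a federation layer. It is an analogy under stated assumptions, not how any particular Zero ETL product is implemented: the two database files, the `customers` and `invoices` tables, and the sample rows are all hypothetical.

```python
import os
import sqlite3
import tempfile


def create_source(path, ddl, insert_sql, rows):
    """Create one independent source database that owns its own data."""
    conn = sqlite3.connect(path)
    conn.execute(ddl)
    conn.executemany(insert_sql, rows)
    conn.commit()
    conn.close()


workdir = tempfile.mkdtemp()
crm_path = os.path.join(workdir, "crm.db")
billing_path = os.path.join(workdir, "billing.db")

# Two separate "source systems" (assumed sample data).
create_source(
    crm_path,
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)",
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "Acme"), (2, "Globex")],
)
create_source(
    billing_path,
    "CREATE TABLE invoices (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)",
    "INSERT INTO invoices VALUES (?, ?, ?)",
    [(1, 1, 100.0), (2, 1, 50.0), (3, 2, 75.0)],
)

# The "hub" holds no tables of its own; it only attaches the sources.
hub = sqlite3.connect(":memory:")
hub.execute("ATTACH DATABASE ? AS crm", (crm_path,))
hub.execute("ATTACH DATABASE ? AS billing", (billing_path,))

# One query joins both sources in place; nothing is copied into the hub.
totals = hub.execute(
    """
    SELECT c.name, SUM(i.amount)
    FROM crm.customers AS c
    JOIN billing.invoices AS i ON i.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
```

The join runs across both sources at query time, so the result always reflects whatever the source files contain at that moment.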

Since there is no ETL pipeline required to gain access to data, Zero ETL solves some operational issues inherent in conventional ETL:

| | ETL | Zero ETL |
| --- | --- | --- |
| Data quality | Errors can be introduced while moving or transferring data. These errors can propagate through the data pipeline, leading to inaccurate analyses. | Zero ETL leaves data in place in the original system or service. This lowers the risk of data quality degradation. |
| Multiple types of data | Since data needs to be “cleansed” before loading, having multiple types of data in the mix can make the transformation process much more complex and error-prone. | Zero ETL naturally handles a diverse pool of data sources, including structured and unstructured data, without needing to cleanse it first. |
| Performance and scalability | ETL data pipelines can be difficult to scale. Traditional ETL tools may not be able to handle increases in data volume or complexity. | Zero ETL allows for more flexibility in handling diverse data types. This makes it easier to adapt to changes in data structure and format without worrying about whether the repository can handle it. |
| Time and resource constraints | Transformation requires time to map and convert data into a form that is suitable for analysis. ETL processes require skilled professionals who understand both the ETL tools themselves, and the business data’s needs. | By eliminating the need for manual data transformation, Zero ETL frees up time for data professionals to focus on more high-value tasks like analysis and interpretation. |
| Compliance and security | If your company is governed by strict data regulations, all ETL processes must be compliant. Moving and transforming sensitive data can introduce the risk of data breaches. | No need to move or transform sensitive data. Leaving data in place lessens the risk of a data breach. |

Benefits of Zero ETL

- Interact with data without moving or transforming it. By eliminating the need for an ETL-style data pipeline, Zero ETL also:

  - Eliminates the errors that can come with duplication

  - Eliminates data latency

  - Helps facilitate near-real-time analysis of data

- Gain greater agility via speedy data transfers, streamlined analytics processes, and real-time access to insights.

- Save time and money with simplified, self-serve implementation and cost efficiency.

Challenges of Zero ETL

- Limited data transformation capability. If your organization relies on data that requires cleaning, standardization, or other complex transformations before it can be consumed, that data cannot be routed through a Zero ETL system.

- Compromised data governance. Traditional ETL solutions often come equipped with controls and safeguards to uphold the quality and integrity of data transfers. Zero ETL operates without such controls, relying on the systems that house the data to perform those important tasks.

- Restricted integration potential. Zero ETL systems may not integrate seamlessly with standard ETL ecosystems because the mechanisms that support duplication and transfer are not relevant. If you want to retain access to legacy data sources, you might wind up with two parallel connectivity methodologies: one for legacy data, and one for everything else.

Should I apply zero integration?

If you’re ready to find out more about Zero ETL and what it might bring to your data operations, ask yourself the following questions:

- Does my organization require real-time processing? If so, can it ensure data quality without pre-transformation?

Zero ETL is best for situations where speed is critical and data formats are consistent enough that the data can be used without extensive transformation first.

- How hard will it be to integrate Zero ETL with our existing systems?

Analyze how your current databases, sources, warehouses, and lakes deal with the typical ETL data pipeline. Can your current systems handle schema-on-read? What other integration challenges might ensue with current and legacy systems?

- What new automation and orchestration tools will we need?

Zero ETL requires automation and orchestration tools that can manage real-time data flows and transformations. This includes scheduling, monitoring, and maintaining more agile data processes efficiently.

- How much training will our data team need to implement and maintain Zero ETL?

Zero ETL performs data transformation on-demand and creates an extremely agile data pipeline. It entails managing and querying raw data. If your team does not have the skill sets or additional training required, you’ll need to ensure that they get it.
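To give a sense of what schema-on-read and on-demand transformation of raw data involve, here is a minimal Python sketch. It assumes raw records arrive as JSON strings with inconsistent fields; the `Purchase` schema, the `read_purchases` helper, and the sample records are hypothetical and chosen only for illustration.

```python
import json
from dataclasses import dataclass
from datetime import date

# Raw records stay exactly as the source produced them (assumed sample data).
RAW_EVENTS = [
    '{"user": "ana", "amount": "19.50", "day": "2024-03-01"}',
    '{"user": "ben", "amount": 5, "day": "2024-03-02", "coupon": "SPRING"}',
    '{"user": "cy", "day": "2024-03-02"}',  # no amount: does not fit the schema
]


@dataclass
class Purchase:
    """The schema is defined by the reader, not enforced when data is written."""
    user: str
    amount: float
    day: date


def read_purchases(raw_lines):
    """Apply the schema at read time and skip records that do not fit it."""
    for line in raw_lines:
        record = json.loads(line)
        try:
            yield Purchase(
                user=str(record["user"]),
                amount=float(record["amount"]),
                day=date.fromisoformat(record["day"]),
            )
        except (KeyError, ValueError, TypeError):
            # A real system would likely log or quarantine the record instead.
            continue


purchases = list(read_purchases(RAW_EVENTS))
total = sum(p.amount for p in purchases)
```

Because the typing and validation happen in the reader, the team querying the data owns the handling of malformed records, which is part of the skill set this question asks about.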

Discover CData Zero ETL solutions

CData is the only bi-modal data connectivity provider in the market. This means that we not only provide robust ETL/ELT solutions with CData Sync, but we also offer Zero ETL capabilities with a robust library of drivers and connectors and CData Connect AI, an enterprise-ready software-as-a-service (SaaS) offering.

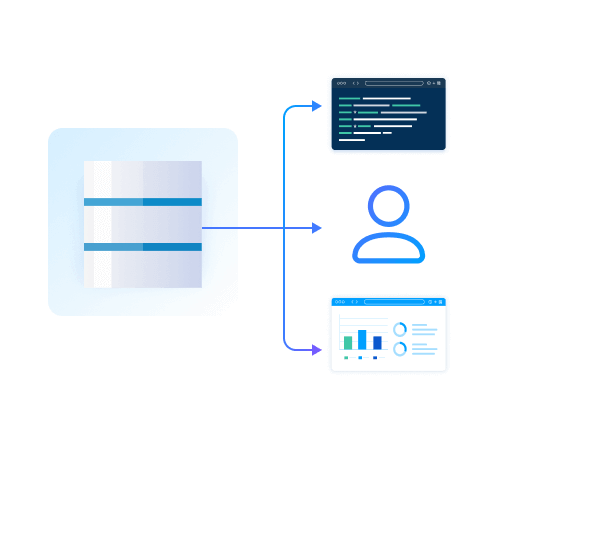

- CData Drivers and Connectors: Universal, embeddable, standards-based connectivity for real-time access to 300+ SaaS, big data, and NoSQL sources from popular BI, reporting, and analytics tools and from custom applications.

- CData Connect AI: Data virtualization for the cloud, providing governed access to hundreds of cloud applications, databases, and warehouses for live data consumption and analysis with your favorite tools.

Get a free 30-day trial of any of our real-time connectivity solutions, and get Zero ETL access to enterprise data in your preferred tools and applications today!
