This article explains the differences between data pipelines and ETL (extract, transform, load) pipelines. We'll cover the attributes and functions of each so that you can make an informed choice for your organization, whether it’s a startup or an established business.

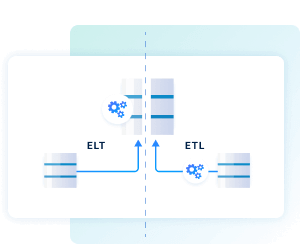

Before we get started, let’s go over the terms we’re using here. “Data pipeline” and “ETL pipeline” are sometimes used interchangeably, but there are important differences between them. We’ll describe each in more detail below.

What is an ETL pipeline?

An ETL pipeline is a specific kind of data pipeline that extracts data from various sources, transforms it to improve its quality and consistency, and loads it into a data warehouse or data lake. It is particularly suited to batch processing, complex data transformations, business intelligence applications, and workloads with compliance requirements. An ETL pipeline comprises three primary steps:

Extraction

Raw data is gathered from various sources, including databases, flat files, and web services. Extraction can be full (all data is pulled each time) or partial (only new or updated records are pulled).
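The difference between full and partial extraction can be sketched in a few lines of Python. This is a minimal illustration, not a production pattern: the `orders` table, its columns, and the `since_id` watermark are all hypothetical, and an in-memory SQLite database stands in for a real source system.

```python
import sqlite3

def extract(conn, since_id=None):
    """Return every row (full extraction) or only rows with id > since_id (partial)."""
    if since_id is None:
        query, params = "SELECT id, amount FROM orders ORDER BY id", ()
    else:
        query, params = "SELECT id, amount FROM orders WHERE id > ? ORDER BY id", (since_id,)
    return conn.execute(query, params).fetchall()

# Hypothetical source table, created in memory for illustration only.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
source.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 25.5), (3, 7.25)])

full_rows = extract(source)             # full extraction: every row
new_rows = extract(source, since_id=2)  # partial extraction: rows added after id 2
```

In a real pipeline, the last extracted `id` (or a timestamp) would be stored between runs so that each partial extraction picks up where the previous one stopped.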

Transformation

The extracted data is cleaned, normalized, and integrated, which makes it more usable and prepares it for specific use cases.

Loading

The transformed data is moved to a data warehouse for analysis. The load step should preserve data integrity and system performance so that the data is ready for practical business applications.
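To make the three steps concrete, here is a small end-to-end sketch using only the Python standard library. Everything specific in it is an assumption made for illustration: the CSV content, the `sales` table, and the cleaning rules. An in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in practice this would come from a file, database, or API.
RAW_CSV = """customer,amount
 Alice ,10.50
bob,4
,3.00
"""

def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim and normalize names, cast amounts, drop rows without a customer."""
    cleaned = []
    for row in rows:
        name = row["customer"].strip().title()
        if not name:
            continue  # skip records that fail the basic quality check
        cleaned.append((name, float(row["amount"])))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a warehouse table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(transform(extract(RAW_CSV)), warehouse)
loaded = warehouse.execute("SELECT customer, amount FROM sales ORDER BY customer").fetchall()
```

Keeping each step as a separate function makes it possible to test or replace one stage without touching the others.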

5 benefits of ETL pipelines

Modern ETL pipelines improve data quality and accuracy, increase operational efficiency, and save time by automating manual data-handling tasks. They scale to accommodate growing data volumes and help maintain data security and regulatory compliance, all of which support thorough analysis and reporting.

Data quality

ETL pipelines prioritize data quality through data cleaning, transformation, and enrichment steps, yielding accurate, consistent data with fewer errors. This is crucial for reliable analytics and reporting, and it makes ETL a good fit for scenarios that demand accuracy, such as financial reporting and customer data analysis.

Complex data transformations

ETL pipelines suit environments that require extensive data transformation. They handle complex operations such as converting data formats, aggregating data from multiple sources, and applying business logic. This makes them a good fit for applications that need refined, structured data in a data warehouse or data lake for analytics and downstream use.
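As a rough illustration of those three kinds of transformation, the sketch below converts two differently formatted sources to a common shape, aggregates them, and applies a simple business rule. The source layouts, field names, and the 500-cent threshold are invented for the example.

```python
from collections import defaultdict
from datetime import datetime

# Two hypothetical sources that report the same kind of data in different formats.
web_orders = [{"day": "2024-01-05", "total_usd": "19.99"}, {"day": "2024-01-05", "total_usd": "5.01"}]
store_orders = [{"date": "05/01/2024", "cents": 1500}, {"date": "06/01/2024", "cents": 250}]

def normalize(web, store):
    """Format conversion: yield (ISO date, amount in cents) from both sources."""
    for row in web:
        yield row["day"], round(float(row["total_usd"]) * 100)
    for row in store:
        iso = datetime.strptime(row["date"], "%d/%m/%Y").date().isoformat()
        yield iso, row["cents"]

def daily_revenue(records, minimum_cents=500):
    """Aggregation plus an example business rule: ignore orders below a threshold."""
    totals = defaultdict(int)
    for day, cents in records:
        if cents >= minimum_cents:
            totals[day] += cents
    return dict(totals)

revenue = daily_revenue(normalize(web_orders, store_orders))
```

Here the 250-cent store order is excluded by the rule, so only one day appears in the result.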

Business intelligence

Tailored for business intelligence (BI) and data warehousing needs, ETL pipelines transform raw data from many sources into structured datasets in a data warehouse. These structured datasets support in-depth analysis, historical reporting, and strategic decision-making, helping businesses derive meaningful insights.

Batch processing

ETL pipelines are well suited to batch processing, in which data is extracted, transformed, and loaded into a data warehouse or data lake at set intervals. This approach fits scenarios where real-time processing isn’t essential and periodic updates are sufficient, and it makes efficient use of resources when handling large datasets.
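A batch job of this kind can be sketched as follows. The chunking helper, the doubling "transformation", and the list used as a warehouse are placeholders chosen for illustration; in practice a scheduler (for example, a cron job) would trigger `run_batch_job` at each interval.

```python
from itertools import islice

def batches(records, size):
    """Yield successive lists of at most `size` records."""
    iterator = iter(records)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk

def run_batch_job(records, size, load):
    """Transform and load records one batch at a time; return how many were processed."""
    processed = 0
    for chunk in batches(records, size):
        load([value * 2 for value in chunk])  # placeholder transformation
        processed += len(chunk)
    return processed

warehouse = []  # stand-in for a warehouse table
count = run_batch_job(range(7), size=3, load=warehouse.append)
```

Processing in fixed-size chunks keeps memory use bounded even when the input is much larger than this toy example.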

Security and compliance

ETL pipelines use a structured approach to support data security and regulatory compliance throughout the process. ETL tools can maintain data lineage, enforce data privacy, and support data governance policies, which makes them well suited to handling sensitive or regulated data.
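One way to picture privacy enforcement and lineage tracking inside a transformation step is the sketch below. It is an assumption-laden illustration: the record layout, the `_lineage` field, and the hard-coded salt are hypothetical, and a salted hash on its own should not be assumed to satisfy any particular regulation.

```python
import hashlib
from datetime import datetime, timezone

def pseudonymize(value, salt):
    """Replace a sensitive value with a salted SHA-256 digest."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()

def protect(record, source, salt="example-salt"):
    """Mask the email field and attach simple lineage metadata (illustrative only)."""
    safe = dict(record)  # copy so the original record is left untouched
    safe["email"] = pseudonymize(record["email"], salt)
    safe["_lineage"] = {
        "source": source,
        "processed_at": datetime.now(timezone.utc).isoformat(),
    }
    return safe

row = protect({"id": 1, "email": "ada@example.com"}, source="crm_export")
```

In a real deployment the salt would be managed as a secret rather than written into the code.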

What is a data pipeline?

A data pipeline refers to the end-to-end process of extracting data from various data sources (such as databases, applications, or IoT devices) and moving it to a destination like a data warehouse or data lake for analysis and storage. While ETL pipelines or ELT (extract, load, transform) processes are often part of a data pipeline, they represent only a subset of its functionality.

Functioning as conduits for data integration and data processing, data pipelines guide data through every stage of the data lifecycle, from collection and transformation to analysis and reporting. They are critical components in an organization’s data infrastructure, centralizing data across sources to provide a unified, comprehensive view of operations.

This unified approach supports everything from business intelligence and real-time analytics to machine learning initiatives, transforming raw data into valuable, actionable insights. Data pipelines thus provide the foundational data flow that drives data-driven decision-making across an organization.

Learn more about data pipelines

4 benefits of data pipelines

Data pipelines enable structured, automated connections and transformations of data, removing data silos and improving both accuracy and reliability.

Versatility

Data pipelines, including ETL pipelines, manage diverse types of data, from structured to unstructured, and integrate multiple data sources such as databases, APIs, and cloud platforms. This adaptability makes them ideal for various environments, from simple data transfers to complex data integration tasks.

Real-time data

Data pipelines can process data in real time (streaming) or near real time, enabling organizations to act on the most current data for timely decision-making. This matters in fraud detection, market trend analysis, and operational monitoring, where immediate data availability can offer critical security insights or a competitive advantage.
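The streaming idea can be illustrated with Python generators, which handle one event at a time instead of waiting for a full batch. The event fields and the 5000 limit are made up for the example, and `event_stream` is only a stand-in for a real source such as a message queue.

```python
def event_stream():
    """Stand-in for a real event source such as a message queue."""
    yield {"account": "A", "amount": 40}
    yield {"account": "B", "amount": 9500}
    yield {"account": "A", "amount": 12000}

def flag_suspicious(events, limit=5000):
    """Examine each event as it arrives and yield the ones above the limit."""
    for event in events:
        if event["amount"] > limit:
            yield event

alerts = list(flag_suspicious(event_stream()))
```

Because each event is checked as soon as it is produced, an alert could be raised immediately rather than at the end of a scheduled run.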

Scalability

Designed for managing large data volumes, data pipelines scale to meet growing big data needs, which is crucial for businesses expanding their data footprint. This scalability keeps data processing efficient, allowing data from diverse sources to be transformed and integrated without performance loss.

Flexibility

Data pipelines can route data to various end systems, such as data warehouses for structured, analysis-ready data, data lakes for raw or unstructured data, or real-time analytics platforms. This flexibility allows organizations to customize their pipelines to meet specific data storage and analysis needs, supporting a wide range of business applications.
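Routing to multiple destinations might look something like the following sketch. The routing rules, the `urgent` flag, and the in-memory lists standing in for end systems are all assumptions made for the example rather than features of any particular tool.

```python
def route(record):
    """Pick a destination name based on the record's shape (hypothetical rules)."""
    if record.get("urgent"):
        return "realtime"
    if isinstance(record.get("payload"), dict):
        return "warehouse"  # structured records
    return "lake"           # everything else is kept in raw form

# In-memory stand-ins for the real end systems.
destinations = {"warehouse": [], "lake": [], "realtime": []}

records = [
    {"payload": {"order_id": 1, "total": 20}},
    {"payload": "free-form log line"},
    {"payload": {"sensor": 7}, "urgent": True},
]
for record in records:
    destinations[route(record)].append(record)

counts = {name: len(items) for name, items in destinations.items()}
```

Keeping the routing decision in one small function makes it easy to add a destination later without changing the rest of the pipeline.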

Data pipeline vs. ETL: 6 differences

Data pipelines and ETL pipelines differ in several ways. The key ones are:

| Aspect | Data Pipeline | ETL Pipeline |
| --- | --- | --- |
| Data processing | Handles data deduplication, filtering, migration, and enrichment. Supports both batch and real-time processing. | Focuses on extracting, transforming, and loading data into a data warehouse. Typically uses batch processing. |
| Flexibility and adaptability | Highly flexible; adapts to various data sources, formats, and destinations. Handles real-time and batch processing. | Less flexible; follows structured workflows. Limited adaptability to new data sources or formats. |
| Latency | Offers low latency with real-time processing options. Suitable for real-time data applications. | Has higher latency due to batch processing. Data is processed in chunks at scheduled intervals. |
| Transformation focus | Transformation is optional; the focus is on data movement. Transformation can be minimal or extensive. | Transformation is a central component. Ensures data is suitable for analysis in a data warehouse. |
| Focus and use cases | Broad use cases: real-time analytics, data migration, continuous integration. | Focused on data warehousing and business intelligence. Centralizes data for analysis and reporting. |
| Scalability | Highly scalable; adjusts to varying data volumes and processing demands. | Scalable but limited by batch processing constraints. Requires additional resources for scaling. |

How to choose between data pipelines and ETL

Now that we’ve gone through the basics of both types of pipelines, which should you choose? That depends on your organization’s circumstances and needs. Keep these questions in mind as you evaluate:

What kind of data do you have, and how much?

Data pipelines are ideal for managing diverse data types and large volumes, especially for streaming data. In contrast, ETL pipelines excel in scenarios requiring significant data transformation before storage and analysis, particularly in batch processing environments.

How complex is your data?

If you want to move data efficiently from source to destination with minimal processing, data pipelines are ideal. In contrast, ETL pipelines focus on the data transformation aspect, making them suitable for scenarios requiring extensive cleaning, normalization, and enrichment of extracted data before loading it into a data warehouse or data lake.

What do you want to do with your data?

Data pipelines are essential for quickly and efficiently making data available for various uses, such as operational reporting and real-time analytics. ETL pipelines are commonly utilized for data warehousing and scenarios requiring extensive data analysis for business intelligence and strategic decision-making.

Other considerations

- Performance and scalability: Consider the performance impact of both options, particularly regarding data volume and the complexity of the processing involved. Scalability is essential if you anticipate growth in data sources or data processing needs over time.

- Data quality and consistency: Ensure that the selected approach maintains high data quality standards and consistency across your data integration and processing.

- Integration with existing systems: Evaluate how well each option will integrate with your existing data ecosystem, focusing on compatibility with current data sources and data warehouses.

- Cost and resource implications: Evaluate the comparative costs of implementing and maintaining each option, including necessary resources such as hardware, software, and human expertise.

- Compliance and security needs: Ensure your data handling approach complies with relevant data privacy and security regulations at every stage, from extraction to storage in your data warehouse or data lake.

- Flexibility and future-proofing: Consider how adaptable your pipelines need to be for future changes in data sources, formats, and business requirements.

Elevate your pipelines with CData Sync

CData Sync provides a single tool to empower users—even those with limited technical experience—to easily build their own data pipelines, simplifying the consolidation of data across cloud and on-premises systems. Sync’s intuitive interface takes the complexity out of data integration, enabling you to focus on making informed, data-driven decisions for your business.

Discover CData Sync