Build Azure Data Lake Storage-Connected Visualizations in datapine

Use CData Connect Cloud and datapine to build visualizations and dashboards with access to live Azure Data Lake Storage data.

datapine is a browser-based business intelligence platform. When paired with CData Connect Cloud, it gives you access to your Azure Data Lake Storage data directly from your datapine visualizations and dashboards. This article describes connecting to Azure Data Lake Storage in CData Connect Cloud and building a simple Azure Data Lake Storage-connected visualization in datapine.

CData Connect Cloud provides a pure SQL Server interface for Azure Data Lake Storage, allowing you to query data from Azure Data Lake Storage without replicating the data to a natively supported database. Using optimized data processing out of the box, CData Connect Cloud pushes all supported SQL operations (filters, JOINs, etc.) directly to Azure Data Lake Storage, leveraging server-side processing to return the requested Azure Data Lake Storage data quickly.

Configure Azure Data Lake Storage Connectivity for datapine

Connectivity to Azure Data Lake Storage from datapine is made possible through CData Connect Cloud. To work with Azure Data Lake Storage data from datapine, we start by creating and configuring an Azure Data Lake Storage connection.

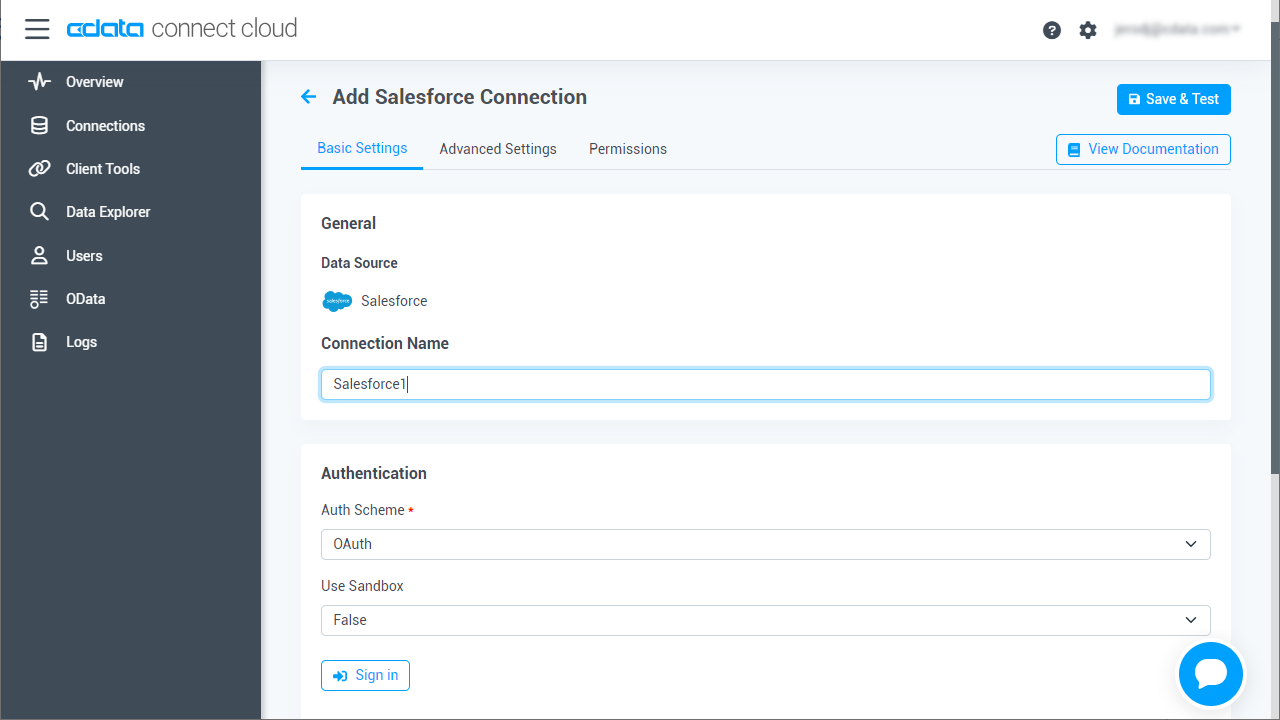

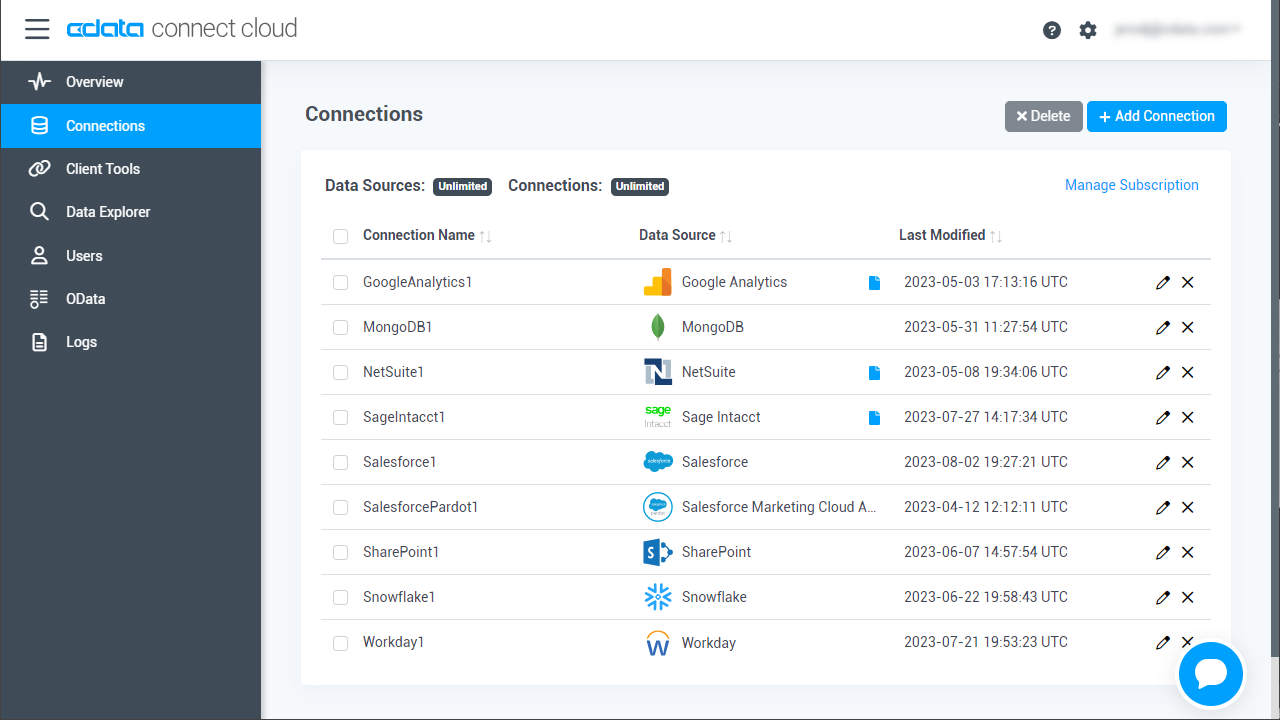

- Log into Connect Cloud, click Connections and click Add Connection

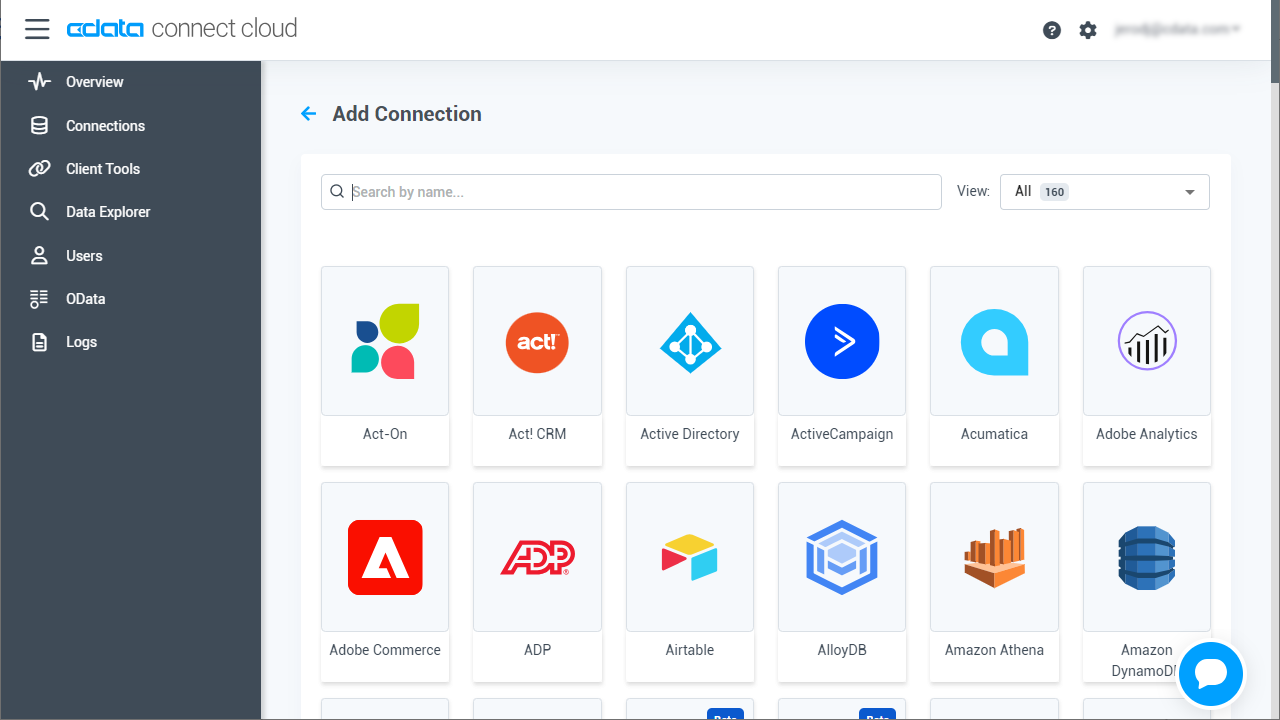

- Select "Azure Data Lake Storage" from the Add Connection panel

- Enter the necessary authentication properties to connect to Azure Data Lake Storage.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication.

For this, an Active Directory web application is required. You can create one as follows:

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the documentation for the TenantId property for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key which will be used to authenticate the calls to the API. See the documentation for the AccessKey property for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

![Configuring a connection (Salesforce is shown)]()

- Click Create & Test

-

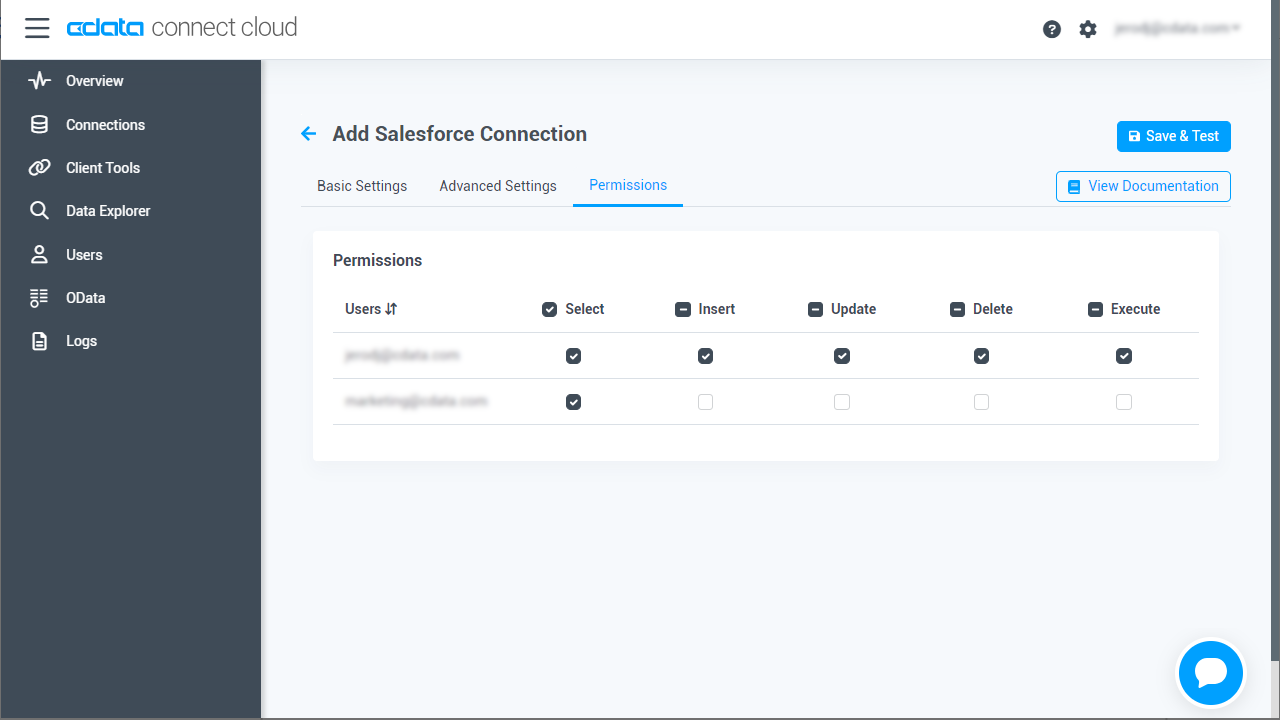

Navigate to the Permissions tab in the Add Azure Data Lake Storage Connection page and update the User-based permissions.

![Updating permissions]()

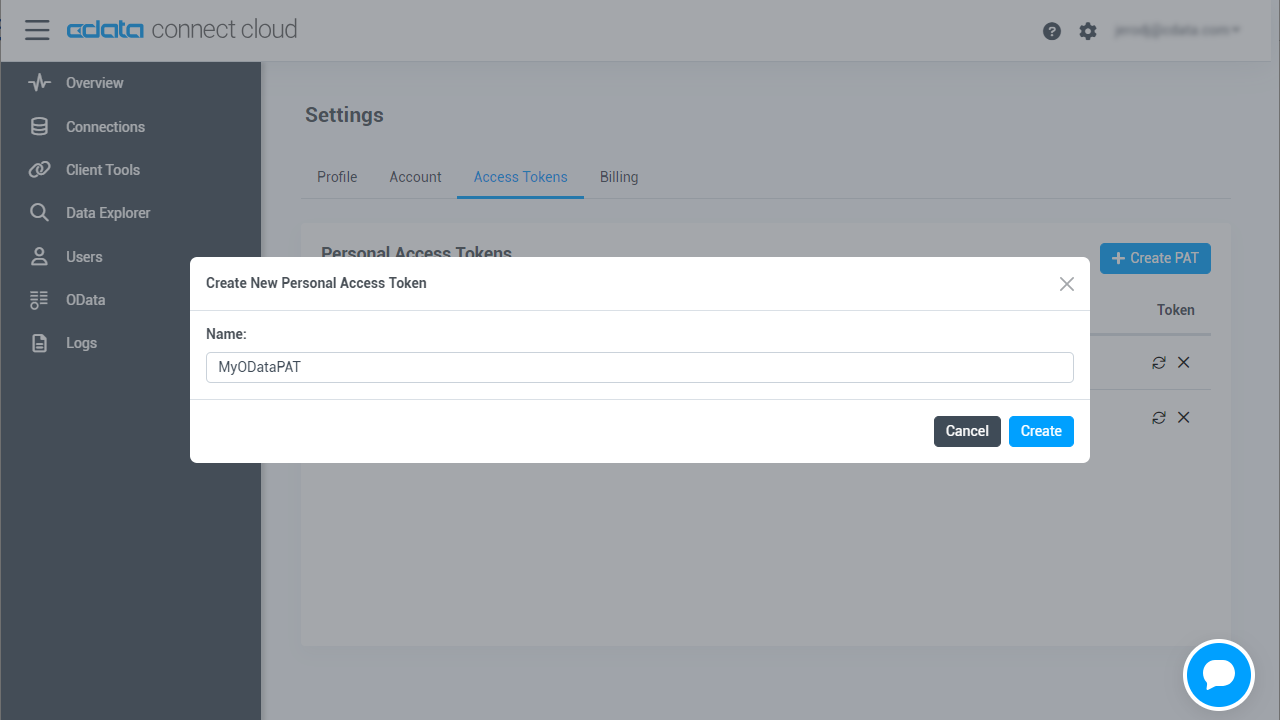
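Before entering the connection properties in Connect Cloud, it can help to confirm that nothing required is missing. The following is a minimal sketch that only encodes the property lists given above for Gen 1 and Gen 2 accounts; the function name `missing_properties` and the placeholder values are hypothetical, and the check runs locally without contacting Connect Cloud or Azure.

```python
# Required connection properties per schema, as listed in this article.
# Directory is optional (the root directory is used when it is omitted).
REQUIRED_PROPERTIES = {
    "ADLSGen1": ("Account", "OAuthClientId", "OAuthClientSecret", "TenantId"),
    "ADLSGen2": ("Account", "FileSystem", "AccessKey"),
}


def missing_properties(props: dict) -> list:
    """Return the required property names that are absent or empty."""
    schema = props.get("Schema")
    if schema not in REQUIRED_PROPERTIES:
        raise ValueError(
            f"Schema must be one of {sorted(REQUIRED_PROPERTIES)}, got {schema!r}"
        )
    return [name for name in REQUIRED_PROPERTIES[schema] if not props.get(name)]


# Placeholder values for illustration only (hypothetical account names).
gen2 = {"Schema": "ADLSGen2", "Account": "myaccount", "FileSystem": "myfilesystem"}
print(missing_properties(gen2))  # ['AccessKey']
```

An empty list means every property the article marks as required for that schema has a value.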

Add a Personal Access Token

If you are connecting from a service, application, platform, or framework that does not support OAuth authentication, you can create a Personal Access Token (PAT) to use for authentication. As a best practice, create a separate PAT for each service to maintain granularity of access.

- Click on your username at the top right of the Connect Cloud app and click User Profile.

- On the User Profile page, scroll down to the Personal Access Tokens section and click Create PAT.

- Give your PAT a name and click Create.

- The personal access token is only visible at creation, so be sure to copy it and store it securely for future use.

Connecting to Azure Data Lake Storage from datapine

Once you configure your connection to Azure Data Lake Storage in Connect Cloud, you are ready to connect to Azure Data Lake Storage from datapine.

- Log into datapine

- Click Connect to navigate to the "Connect" page

- Select MS SQL Server as the data source

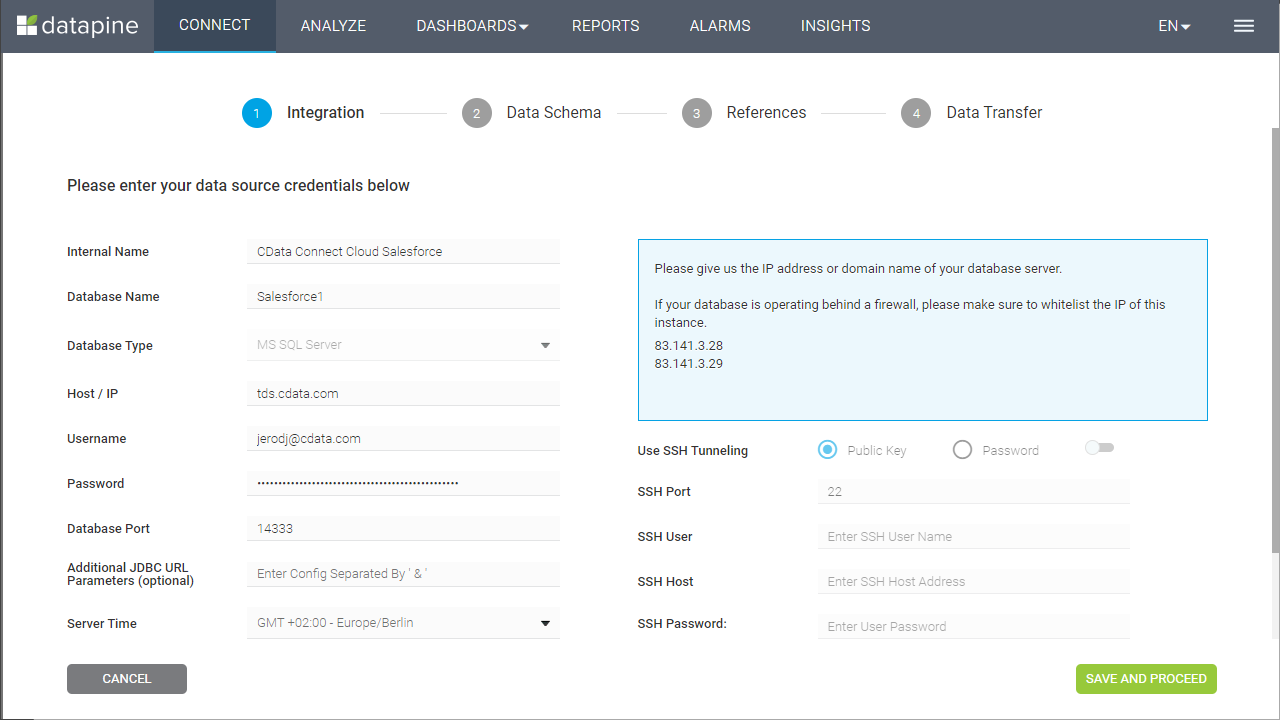

- In the Integration step, fill in the connection properties and click "Save and Proceed"

  - Set the Internal Name

  - Set Database Name to the name of the connection we just configured (e.g. ADLS1)

  - Set Host / IP to "tds.cdata.com"

  - Set Username to your Connect Cloud username (e.g. user@mydomain.com)

  - Set Password to the corresponding PAT

  - Set Database Port to "14333"

![Configuring the connection to CData Connect Cloud]()

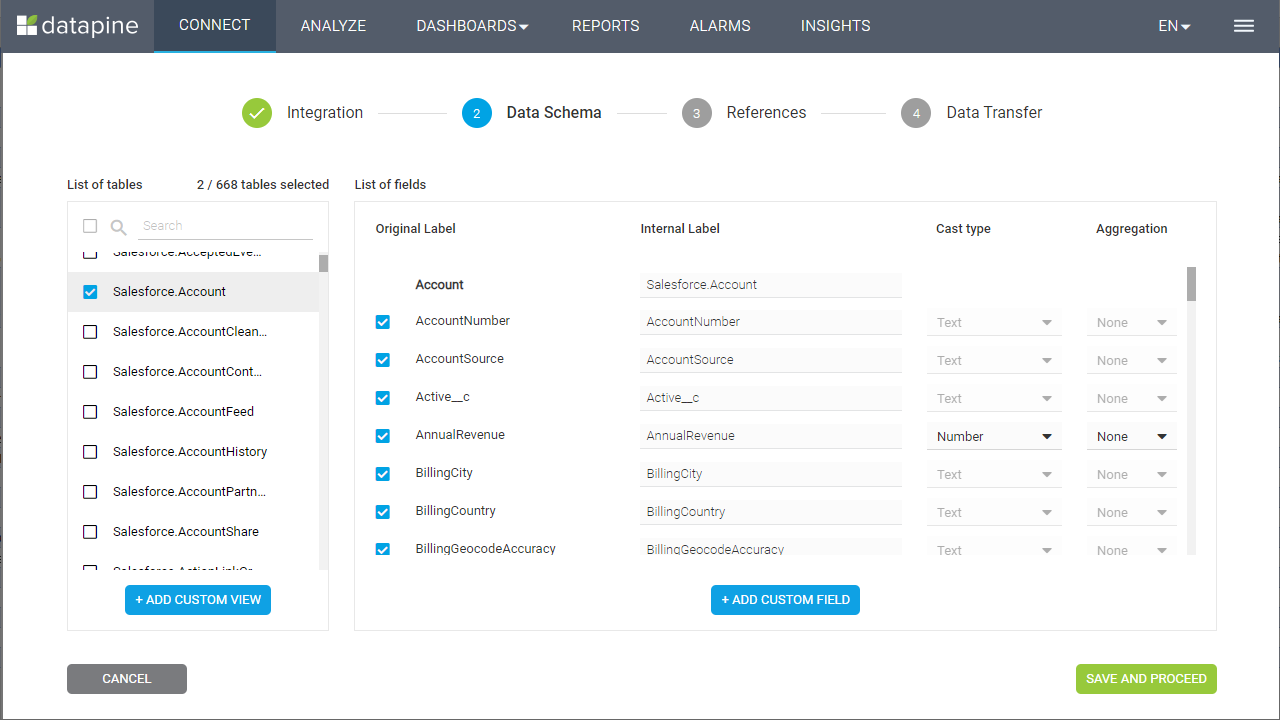

- In the Data Schema step, select the tables and fields to visualize and click "Save and Proceed"

![Selecting tables and fields to visualize (Salesforce is shown)]()

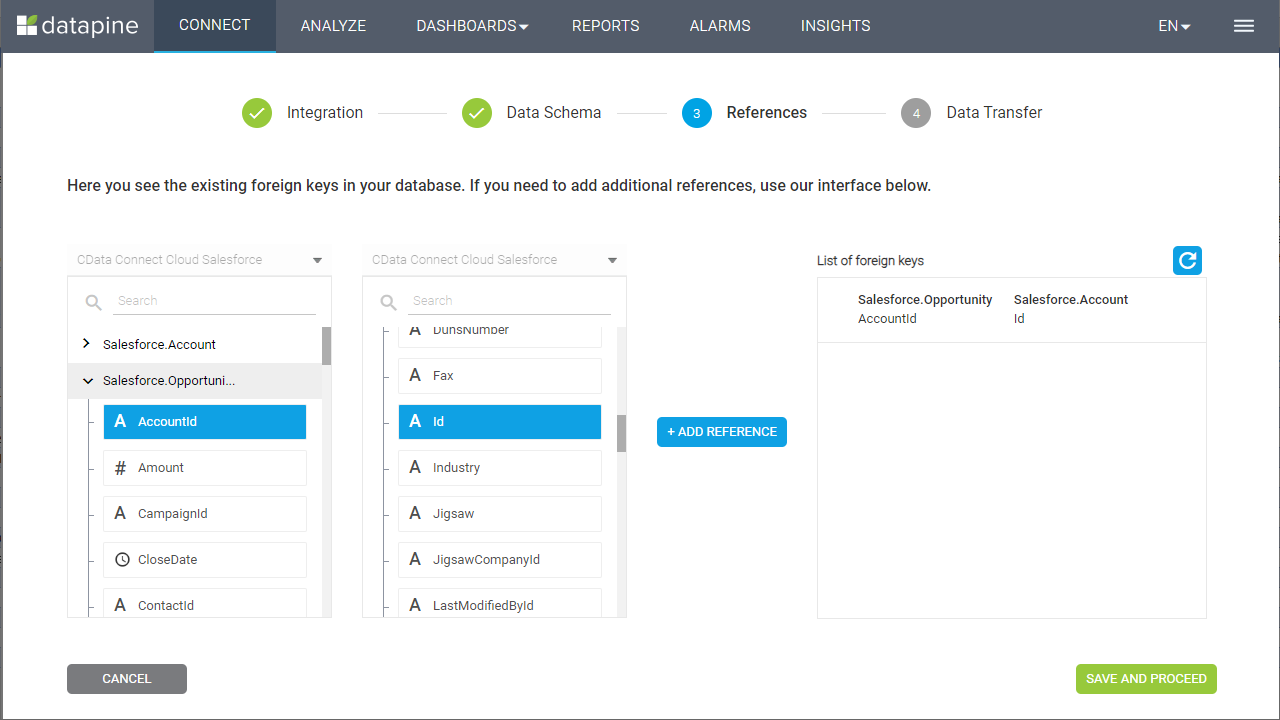

- In the References step, define any relationships between your selected tables and click "Save and Proceed"

![Defining foreign key relationships]()

- In the Data Transfer step, click "Go to Analyzer"
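The values entered in the Integration step (host, port, database name, username, and PAT) can also be checked from outside datapine with any SQL Server client. The sketch below is one possible way to do that, not part of the datapine workflow. It assumes the third-party `pyodbc` package and a Microsoft ODBC driver are installed, that the endpoint expects an encrypted connection, and that it answers a trivial `SELECT 1`. The function names and the `CONNECT_CLOUD_PAT` environment variable are hypothetical.

```python
import os


def build_connection_string(database: str, username: str, pat: str,
                            host: str = "tds.cdata.com", port: int = 14333,
                            driver: str = "ODBC Driver 17 for SQL Server") -> str:
    """Assemble an ODBC connection string from the values used in datapine."""
    # SQL Server ODBC drivers expect "host,port" in the SERVER keyword.
    # Encrypt=yes is an assumption; adjust it to what your endpoint requires.
    # A PAT containing ';' or braces would need ODBC escaping (not handled here).
    return (
        f"DRIVER={{{driver}}};"
        f"SERVER={host},{port};"
        f"DATABASE={database};"
        f"UID={username};"
        f"PWD={pat};"
        "Encrypt=yes;"
    )


def check_connection(conn_str: str) -> bool:
    """Open a connection and run a trivial query; requires pyodbc."""
    import pyodbc  # imported lazily so the builder works without it

    conn = pyodbc.connect(conn_str, timeout=30)
    try:
        cursor = conn.cursor()
        cursor.execute("SELECT 1")
        return cursor.fetchone() is not None
    finally:
        conn.close()


# Read the PAT from an environment variable rather than hard-coding it.
conn_str = build_connection_string(
    database="ADLS1",
    username="user@mydomain.com",
    pat=os.environ.get("CONNECT_CLOUD_PAT", "<your PAT>"),
)
```

Calling `check_connection(conn_str)` with real credentials attempts the same login that datapine performs, which can help separate credential problems from datapine configuration problems.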

Visualize Azure Data Lake Storage Data in datapine

After connecting to CData Connect Cloud, you are ready to visualize your Azure Data Lake Storage data in datapine. Simply select the dimensions and measures you wish to visualize!

Having connected to Azure Data Lake Storage from datapine, you are now able to visualize and analyze real-time Azure Data Lake Storage data no matter where you are. To get live data access to 100+ SaaS, Big Data, and NoSQL sources directly from datapine, try CData Connect Cloud today!