Connect to Databricks Data as an External Data Source using PolyBase

Use CData Connect Cloud and PolyBase to create an external data source in SQL Server with access to live Databricks data.

PolyBase for SQL Server allows you to query external data by using the same Transact-SQL syntax used to query a database table. When paired with CData Connect Cloud, it gives you access to your Databricks data directly alongside your SQL Server data. This article describes how to create an external data source and external tables that grant access to live Databricks data through T-SQL queries.

NOTE: PolyBase is only available on SQL Server 2019 and above, and only for Standard SQL Server.
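PolyBase also has to be installed and enabled on the instance before any external objects can be created. The following is a minimal sketch for checking and enabling it, assuming the PolyBase feature was already selected during SQL Server setup:

```sql
-- Returns 1 if the PolyBase feature is installed on this instance.
SELECT SERVERPROPERTY('IsPolyBaseInstalled') AS IsPolyBaseInstalled;

-- Turn PolyBase on for the instance and apply the setting.
EXEC sp_configure @configname = 'polybase enabled', @configvalue = 1;
RECONFIGURE;
```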

CData Connect Cloud provides a pure SQL Server interface for Databricks, allowing you to query data from Databricks without replicating the data to a natively supported database. Using optimized data processing out of the box, CData Connect Cloud pushes all supported SQL operations (filters, JOINs, etc.) directly to Databricks, leveraging server-side processing to return the requested Databricks data quickly.

Configure Databricks Connectivity for PolyBase

Connectivity to Databricks from PolyBase is made possible through CData Connect Cloud. To work with Databricks data from PolyBase, we start by creating and configuring a Databricks connection.

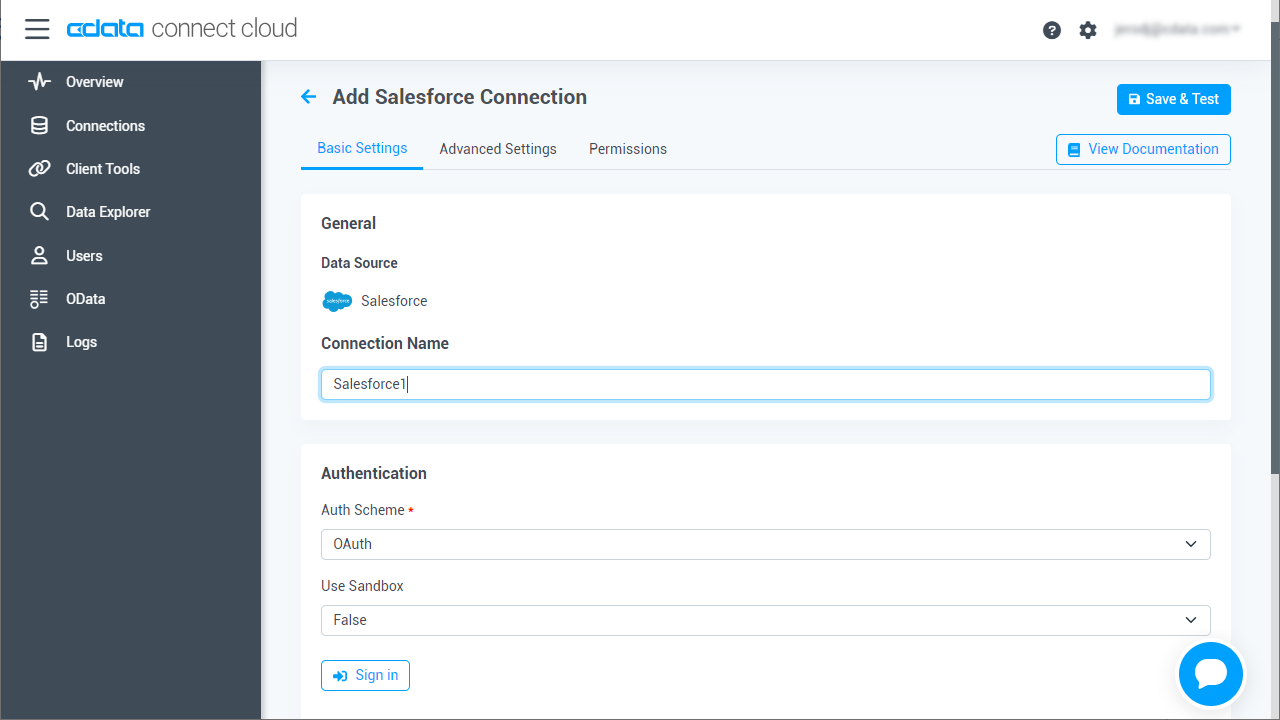

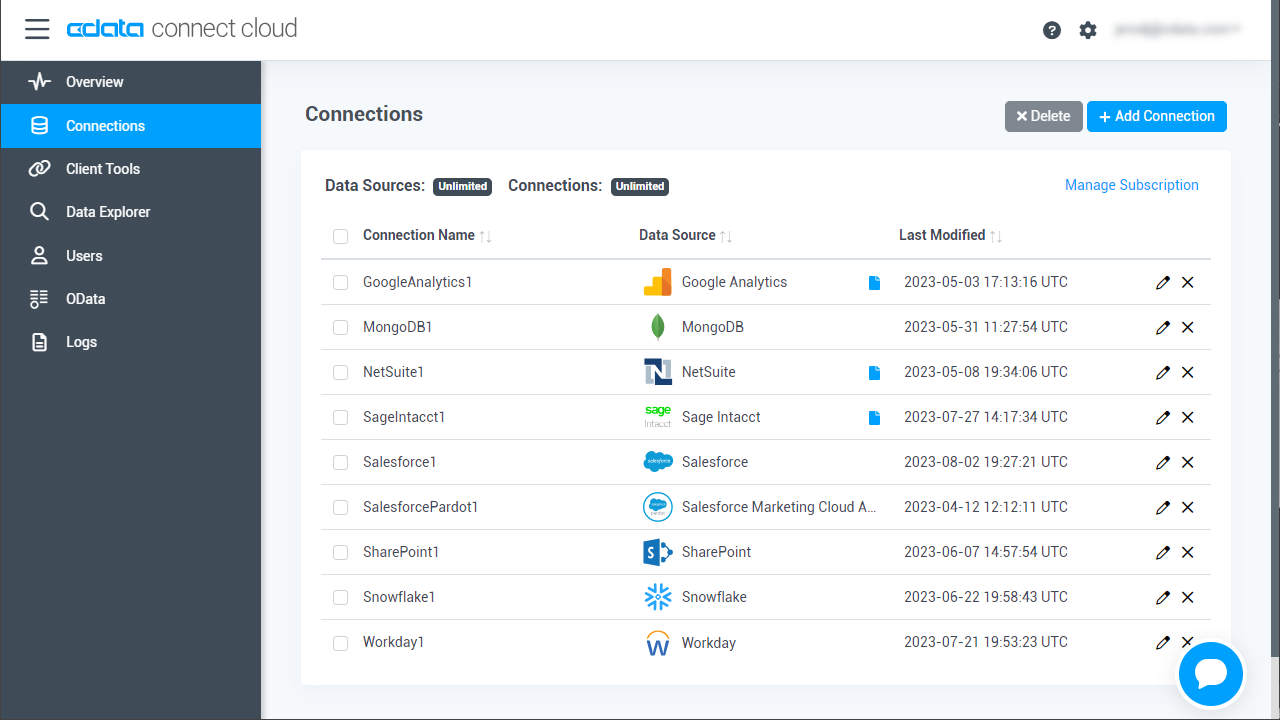

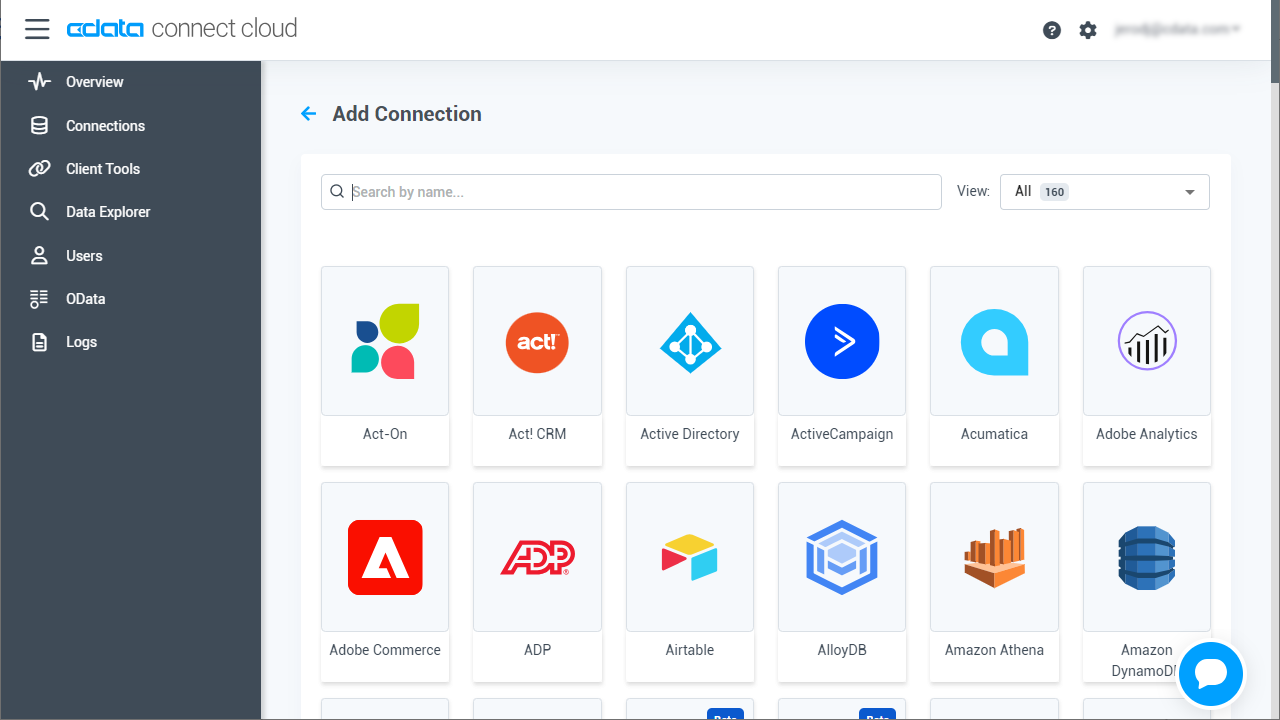

- Log into Connect Cloud, click Connections and click Add Connection

- Select "Databricks" from the Add Connection panel

- Enter the necessary authentication properties to connect to Databricks.

To connect to a Databricks cluster, set the properties as described below.

Note: The needed values can be found in your Databricks instance by navigating to Clusters, selecting the desired cluster, and selecting the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).

![Configuring a connection (Salesforce is shown)]()

- Click Create & Test

-

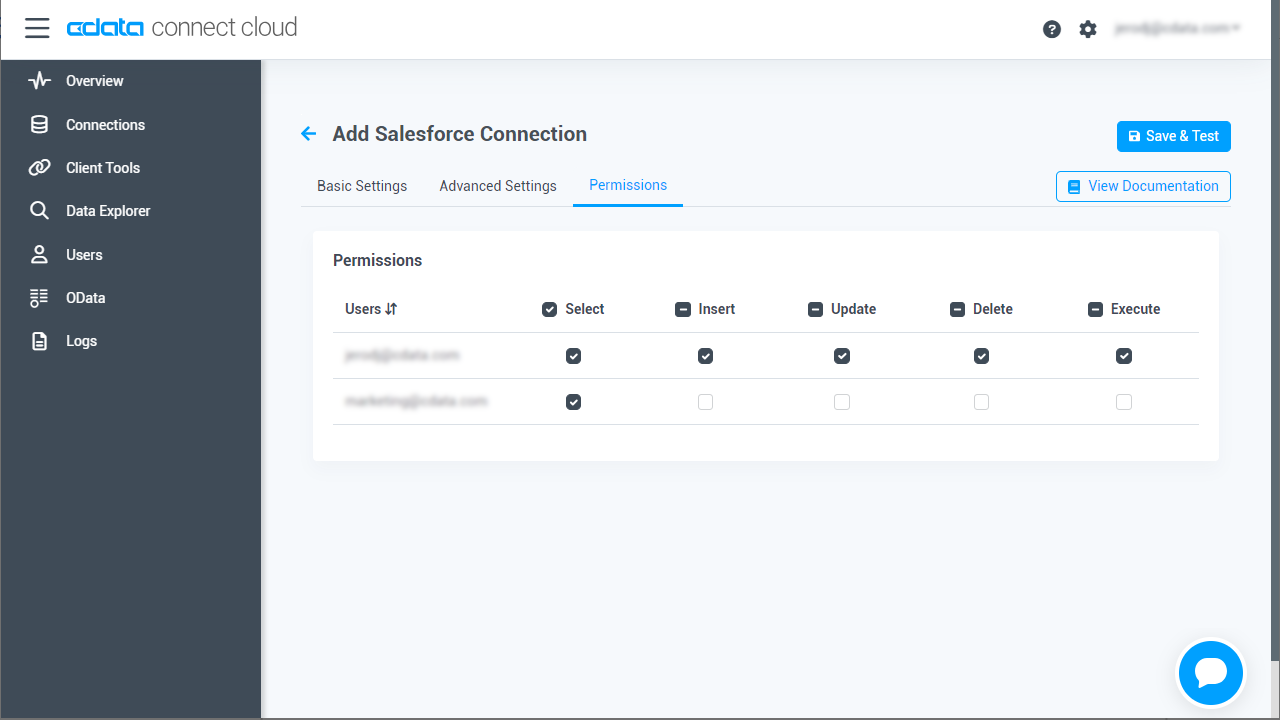

Navigate to the Permissions tab in the Add Databricks Connection page and update the User-based permissions.

![Updating permissions]()

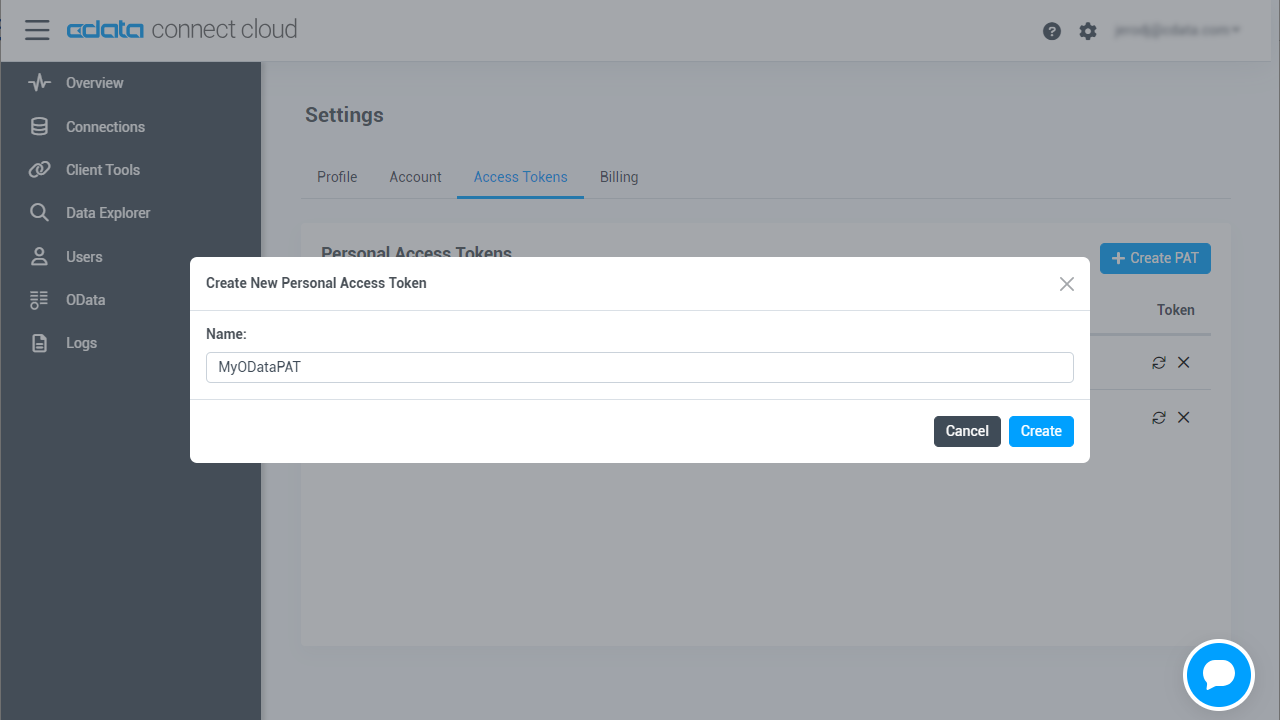

Add a Personal Access Token

If you are connecting from a service, application, platform, or framework that does not support OAuth authentication, you can create a Personal Access Token (PAT) to use for authentication. As a best practice, create a separate PAT for each service to keep access control granular.

- Click on your username at the top right of the Connect Cloud app and click User Profile.

- On the User Profile page, scroll down to the Personal Access Tokens section and click Create PAT.

- Give your PAT a name and click Create.

- The personal access token is only visible at creation, so be sure to copy it and store it securely for future use.

Create an External Data Source for Databricks Data

After configuring the connection, you need to create a database scoped credential for the external data source.

Creating a Database Scoped Credential

Execute the following SQL command to create credentials for the external data source connected to Databricks data.

NOTE: Set IDENTITY to your Connect Cloud username and set SECRET to your Personal Access Token.

```sql
CREATE DATABASE SCOPED CREDENTIAL ConnectCloudCredentials
WITH IDENTITY = 'yourusername', SECRET = 'yourPAT';
```
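CREATE DATABASE SCOPED CREDENTIAL encrypts the secret with the database master key, so the credential statement fails if the current database has no master key yet. The sketch below creates a master key only when one is missing (run it before creating the credential) and then confirms the credential afterward. The password is a placeholder you should replace with a strong password of your own.

```sql
-- Create a database master key only if the current database lacks one.
-- 'yourStrongPassword' is a placeholder, not a real value.
IF NOT EXISTS (SELECT 1 FROM sys.symmetric_keys
               WHERE name = '##MS_DatabaseMasterKey##')
    CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'yourStrongPassword';

-- After creating the credential, confirm it exists with the expected identity.
SELECT name, credential_identity
FROM sys.database_scoped_credentials
WHERE name = 'ConnectCloudCredentials';
```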

Create an External Data Source for Databricks

Execute a CREATE EXTERNAL DATA SOURCE SQL command to create an external data source for Databricks with PolyBase:

```sql
CREATE EXTERNAL DATA SOURCE ConnectCloudInstance
WITH (
    LOCATION = 'sqlserver://tds.cdata.com:14333',
    PUSHDOWN = ON,
    CREDENTIAL = ConnectCloudCredentials
);
```
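To check that the data source was registered and is bound to the intended credential, you can query the catalog views. This is a minimal sketch that uses only the object names created in the steps above:

```sql
-- Show the external data source and the name of the credential it uses.
SELECT ds.name, ds.location, c.name AS credential_name
FROM sys.external_data_sources AS ds
LEFT JOIN sys.database_scoped_credentials AS c
    ON ds.credential_id = c.credential_id
WHERE ds.name = 'ConnectCloudInstance';
```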

Create External Tables for Databricks

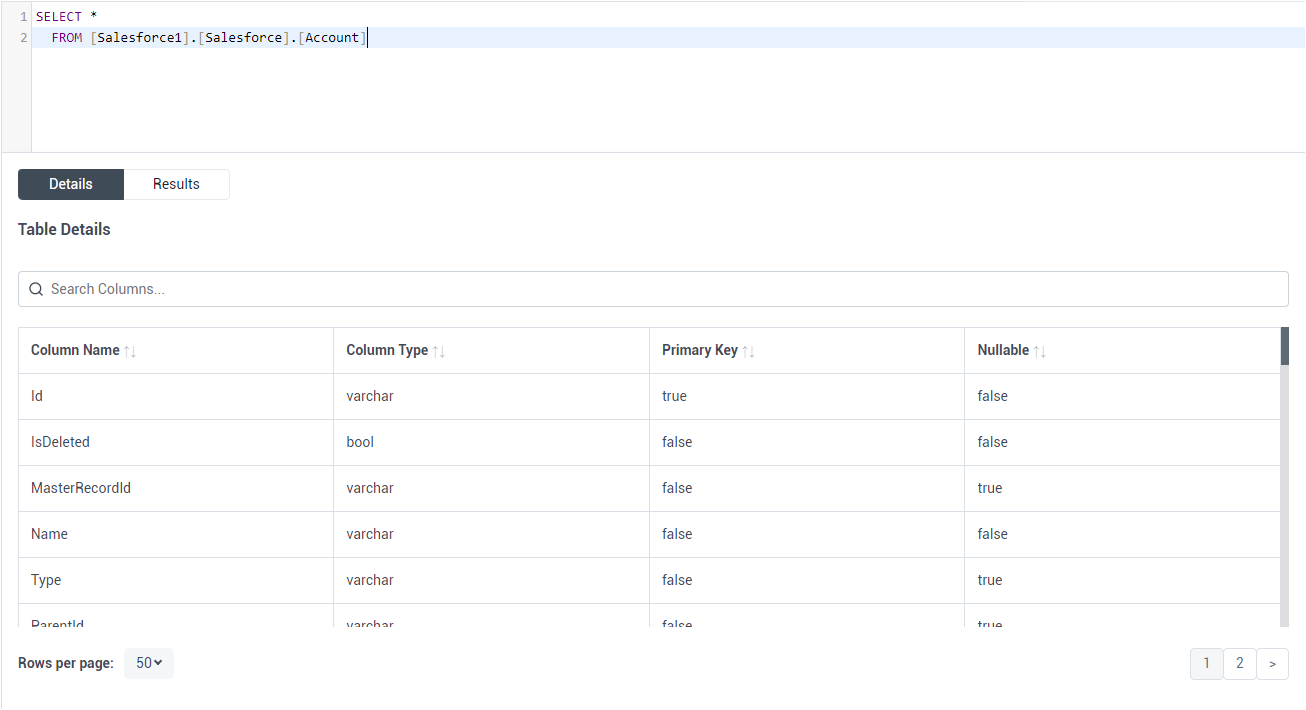

After creating the external data source, use CREATE EXTERNAL TABLE statements to link to Databricks data from your SQL Server instance. The table column definitions must match those exposed by CData Connect Cloud. You can use the Data Explorer in Connect Cloud to see the table definition.

Sample CREATE EXTERNAL TABLE Statement

Execute a CREATE EXTERNAL TABLE SQL command to create the external table(s), specifying a collation for each character column and setting LOCATION to three-part notation for the connection, catalog, and table. The statement to create an external table based on the Databricks Customers table would look similar to the following.

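```sql
-- SQL_Latin1_General_CP1_CI_AS is an example collation; use the one that matches your data.
CREATE EXTERNAL TABLE Customers (
    City        nvarchar(255) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,
    CompanyName nvarchar(255) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,
    ...
)
WITH (
    LOCATION = 'Databricks1.Databricks.Customers',
    DATA_SOURCE = ConnectCloudInstance
);
```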
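Once the external table exists, it can be queried like any local table. The sketch below is illustrative only. The filter value 'Raleigh' and the local table dbo.LocalOrders (with OrderID, OrderTotal, and CompanyName columns) are hypothetical and stand in for your own data.

```sql
-- Read live Databricks rows through the external table.
-- With PUSHDOWN = ON, PolyBase may push supported predicates to the remote source.
SELECT City, CompanyName
FROM Customers
WHERE City = 'Raleigh';  -- illustrative filter value

-- Join remote Databricks rows with a local SQL Server table.
-- dbo.LocalOrders and its columns are hypothetical.
SELECT c.CompanyName, o.OrderID, o.OrderTotal
FROM Customers AS c
JOIN dbo.LocalOrders AS o
    ON o.CompanyName = c.CompanyName;
```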

Having created external tables for Databricks in your SQL Server instance, you are now able to query local and remote data simultaneously. To get live data access to 100+ SaaS, Big Data, and NoSQL sources directly from your SQL Server database, try CData Connect Cloud today!