
Create Informatica Mappings From/To a JDBC Data Source for Databricks

Create Databricks data objects in Informatica using the standard JDBC connection process: Copy the JAR and then connect.

Informatica provides a powerful, elegant means of transporting and transforming your data. By using the CData JDBC Driver for Databricks, you gain access to a driver based on industry-proven standards that integrates with Informatica's data transportation and manipulation features. This tutorial shows how to transfer and browse Databricks data in Informatica PowerCenter.

Deploy the Driver

To deploy the driver to the Informatica PowerCenter server, copy the CData JAR and .lic file, located in the lib subfolder in the installation directory, to the following folder: Informatica-installation-directory\services\shared\jars\thirdparty.

To work with Databricks data in the Developer tool, you will need to copy the CData JAR and .lic file, located in the lib subfolder in the installation directory, into the following folders:

- Informatica-installation-directory\client\externaljdbcjars

- Informatica-installation-directory\externaljdbcjars
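The copy step can also be scripted, which helps when the same driver has to land in several Informatica folders. The following is a minimal Java sketch, not part of the CData or Informatica tooling: it copies every .jar and .lic file from the driver's lib folder into each target folder. Both installation paths in main are hypothetical placeholders; adjust them to your environment.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.List;

public class DeployDriver {

    // Copies every *.jar and *.lic file in libDir into each target folder,
    // creating missing folders and overwriting older copies. Returns the new paths.
    static List<Path> deploy(Path libDir, List<Path> targetDirs) throws IOException {
        List<Path> copied = new ArrayList<>();
        for (Path target : targetDirs) {
            Files.createDirectories(target);
            try (DirectoryStream<Path> files = Files.newDirectoryStream(libDir, "*.{jar,lic}")) {
                for (Path file : files) {
                    Path dest = target.resolve(file.getFileName());
                    Files.copy(file, dest, StandardCopyOption.REPLACE_EXISTING);
                    copied.add(dest);
                }
            }
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical paths: replace with your CData and Informatica installation directories.
        Path libDir = Path.of("C:/Program Files/CData/CData JDBC Driver for Databricks/lib");
        Path informatica = Path.of("C:/Informatica");
        List<Path> targets = List.of(
                informatica.resolve(Path.of("services", "shared", "jars", "thirdparty")), // PowerCenter server
                informatica.resolve(Path.of("client", "externaljdbcjars")),               // Developer tool
                informatica.resolve("externaljdbcjars"));                                 // Developer tool
        for (Path p : deploy(libDir, targets)) {
            System.out.println("Copied " + p);
        }
    }
}
```

Restart the affected Informatica services and clients after copying so that they pick up the new JAR.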

Create the JDBC Connection

Follow the steps below to connect from Informatica Developer:

- In the Connection Explorer pane, right-click your domain and click Create a Connection.

- In the New Database Connection wizard, enter a name and ID for the connection, and select JDBC in the Type menu.

- In the JDBC Driver Class Name property, enter:

cdata.jdbc.databricks.DatabricksDriver

- In the Connection String property, enter the JDBC URL, using the connection properties for Databricks.
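If Informatica later reports that the driver class cannot be found, a quick standalone check can confirm whether the class name entered in the JDBC Driver Class Name property resolves from a given classpath. This is a minimal sketch using Class.forName; the class name DriverCheck and the command line in the comment are illustrative assumptions, not part of the driver.

```java
public class DriverCheck {

    // Value entered in the JDBC Driver Class Name property.
    static final String DRIVER_CLASS = "cdata.jdbc.databricks.DatabricksDriver";

    // Returns true if the class can be found and loaded from the current classpath.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Example run (use ':' instead of ';' as the separator on Linux/macOS):
        //   java -cp .;cdata.jdbc.databricks.jar DriverCheck
        System.out.println(DRIVER_CLASS + (isOnClasspath(DRIVER_CLASS) ? " found" : " NOT found"));
    }
}
```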

To connect to a Databricks cluster, set the properties as described below.

Note: You can find the required values in your Databricks instance: navigate to Clusters, select the desired cluster, and open the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).
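Because the JDBC URL is the jdbc:databricks: prefix followed by semicolon-separated Key=Value pairs, as in the typical connection string in this article, it can also be assembled in code from the three properties above. This is a minimal Java sketch that assumes no value contains a semicolon (nothing is quoted or escaped). The server hostname and HTTP path are placeholders, and the DATABRICKS_TOKEN environment variable is a hypothetical name.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class DatabricksUrl {

    // Joins connection properties into "jdbc:databricks:Key=Value;Key=Value;".
    // Assumes values contain no ';' (no quoting or escaping is applied).
    static String build(Map<String, String> props) {
        StringBuilder url = new StringBuilder("jdbc:databricks:");
        for (Map.Entry<String, String> e : props.entrySet()) {
            url.append(e.getKey()).append('=').append(e.getValue()).append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = new LinkedHashMap<>(); // keeps insertion order
        // Placeholders: copy the real values from the cluster's JDBC/ODBC tab.
        props.put("Server", "MyServerHostname");
        props.put("HTTPPath", "MyHTTPPath");
        // Hypothetical environment variable holding the personal access token.
        props.put("Token", System.getenv().getOrDefault("DATABRICKS_TOKEN", "MyToken"));
        System.out.println(build(props));
    }
}
```

Reading the token from the environment keeps it out of source code; the printed URL still contains it, so avoid logging it in shared environments.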

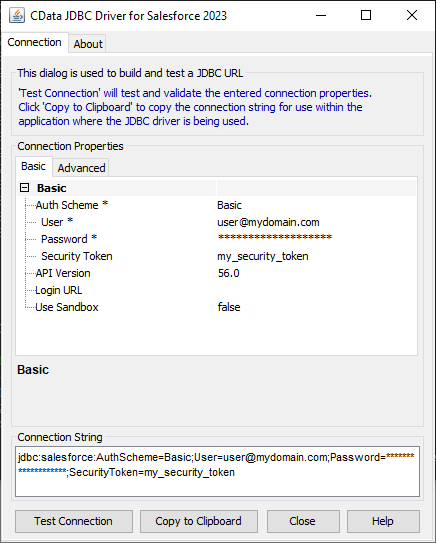

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Databricks JDBC Driver. Either double-click the JAR file or run it from the command line:

java -jar cdata.jdbc.databricks.jar

Fill in the connection properties and copy the connection string to the clipboard.

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()

A typical connection string is below:

jdbc:databricks:Server=127.0.0.1;Port=443;TransportMode=HTTP;HTTPPath=MyHTTPPath;UseSSL=True;User=MyUser;Password=MyPassword;
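To double-check what a copied connection string actually sets before pasting it into Informatica, it can be split back into its properties. The sketch below is a simplified parser written for illustration, not the driver's own parsing logic: it assumes values contain no semicolons, splits each pair at the first '=', and masks Password and Token when printing.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class ConnectionStringParser {

    static final String PREFIX = "jdbc:databricks:";

    // Splits "jdbc:databricks:Key=Value;Key=Value;" into an ordered map.
    // Simplified: assumes no value contains ';' and splits at the first '='.
    static Map<String, String> parse(String url) {
        if (!url.startsWith(PREFIX)) {
            throw new IllegalArgumentException("Not a jdbc:databricks: URL: " + url);
        }
        Map<String, String> props = new LinkedHashMap<>();
        for (String pair : url.substring(PREFIX.length()).split(";")) {
            if (pair.isBlank()) {
                continue; // tolerate empty segments such as ";;"
            }
            int eq = pair.indexOf('=');
            if (eq < 0) {
                throw new IllegalArgumentException("Missing '=' in: " + pair);
            }
            props.put(pair.substring(0, eq).trim(), pair.substring(eq + 1).trim());
        }
        return props;
    }

    public static void main(String[] args) {
        String url = "jdbc:databricks:Server=127.0.0.1;Port=443;TransportMode=HTTP;"
                + "HTTPPath=MyHTTPPath;UseSSL=True;User=MyUser;Password=MyPassword;";
        parse(url).forEach((key, value) -> {
            // Hide credentials when printing.
            boolean secret = key.equalsIgnoreCase("Password") || key.equalsIgnoreCase("Token");
            System.out.println(key + " = " + (secret ? "****" : value));
        });
    }
}
```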

Browse Databricks Tables

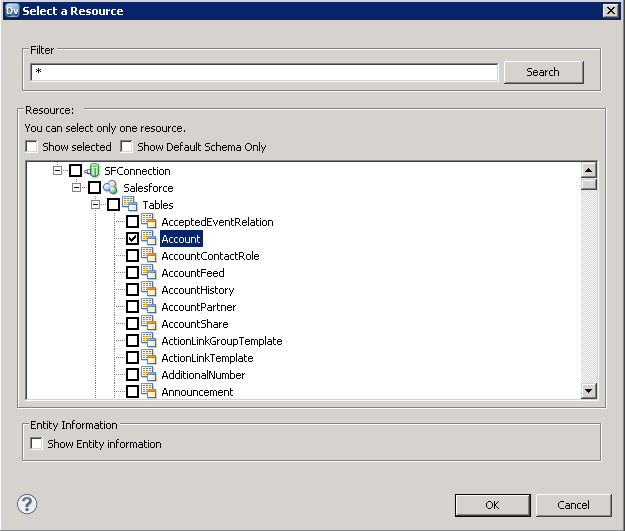

After you have added the driver JAR to the classpath and created a JDBC connection, you can access Databricks entities in Informatica. Follow the steps below to connect to Databricks and browse Databricks tables:

- Connect to your repository.

- In the Connection Explorer, right-click the connection and click Connect.

- Clear the Show Default Schema Only option.

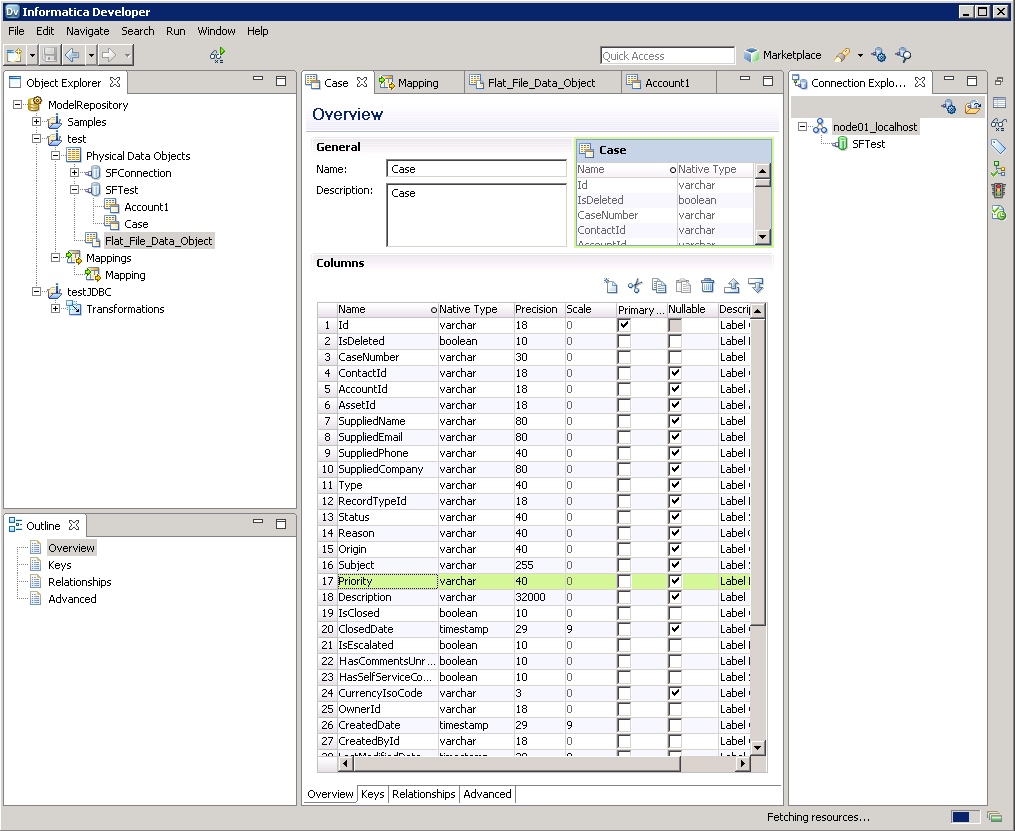

![The driver models Databricks entities as relational tables. (Salesforce is shown.)]()

You can now browse Databricks tables in the Data Viewer: right-click the node for the table and then click Open. In the Data Viewer view, click Run.
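The same table list can be retrieved outside Informatica through standard JDBC metadata calls, which can help tell a driver or connection problem apart from an Informatica configuration problem. This is a minimal sketch using java.sql.DatabaseMetaData.getTables; passing null as the schema pattern requests tables from all schemas, which is roughly what clearing Show Default Schema Only does in the Connection Explorer. The class name BrowseTables and passing the URL as a command-line argument are illustrative choices.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

public class BrowseTables {

    // Reads the standard TABLE_NAME column from a getTables() result set.
    static List<String> collectTableNames(ResultSet tables) throws SQLException {
        List<String> names = new ArrayList<>();
        while (tables.next()) {
            names.add(tables.getString("TABLE_NAME"));
        }
        return names;
    }

    // Lists tables of every type in every schema (null patterns mean "no filter").
    static List<String> listTables(Connection conn) throws SQLException {
        DatabaseMetaData md = conn.getMetaData();
        try (ResultSet rs = md.getTables(null, null, "%", null)) {
            return collectTableNames(rs);
        }
    }

    public static void main(String[] args) throws SQLException {
        if (args.length == 0) {
            System.err.println("usage: java BrowseTables <jdbc:databricks:... URL>");
            return;
        }
        // Requires the CData JAR on the classpath.
        try (Connection conn = DriverManager.getConnection(args[0])) {
            for (String name : listTables(conn)) {
                System.out.println(name);
            }
        }
    }
}
```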

Create Databricks Data Objects

Follow the steps below to add Databricks tables to your project:

- Select the tables under the Databricks connection, right-click one of the selected tables, and click Add to Project.

- In the resulting dialog, select the option to create a data object for each resource.

- In the Select Location dialog, select your project.

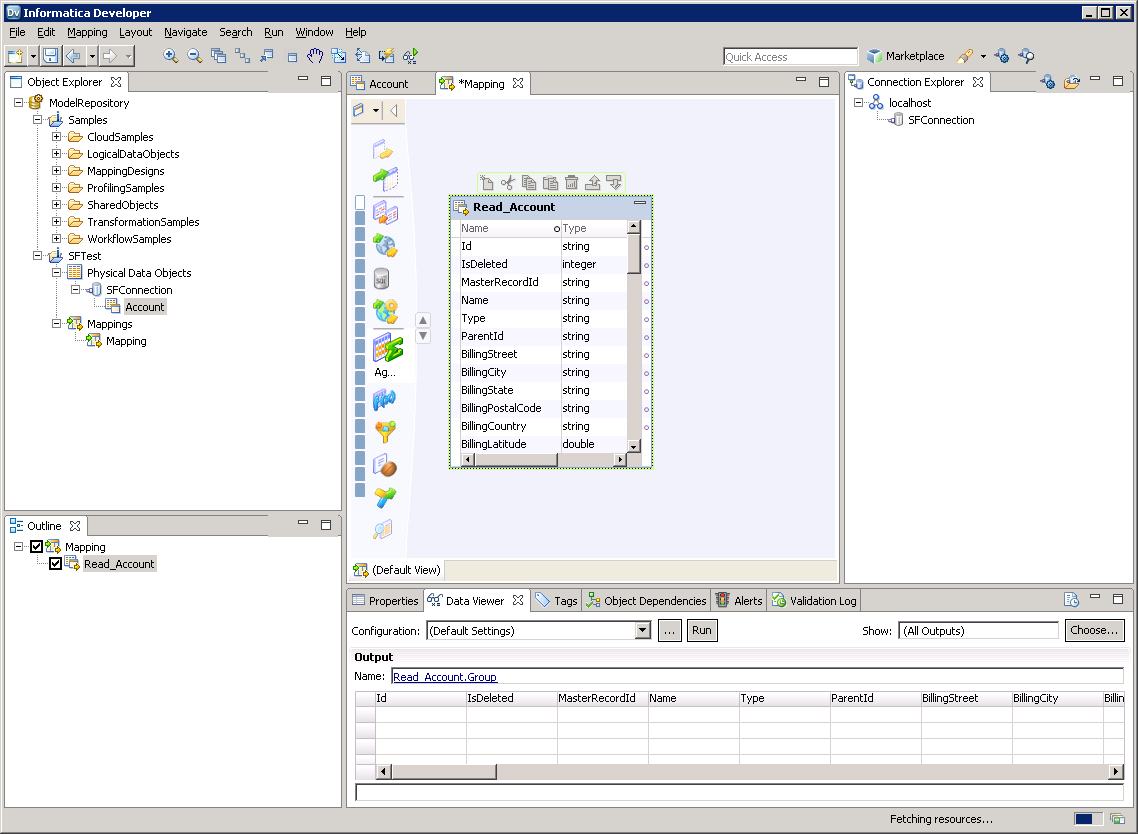

Create a Mapping

Follow the steps below to add the Databricks source to a mapping:

- In the Object Explorer, right-click your project and then click New -> Mapping.

- Expand the node for the Databricks connection and then drag the data object for the table onto the editor.

- In the dialog that appears, select the Read option.

![The source Databricks table in the mapping. (Salesforce is shown.)]()

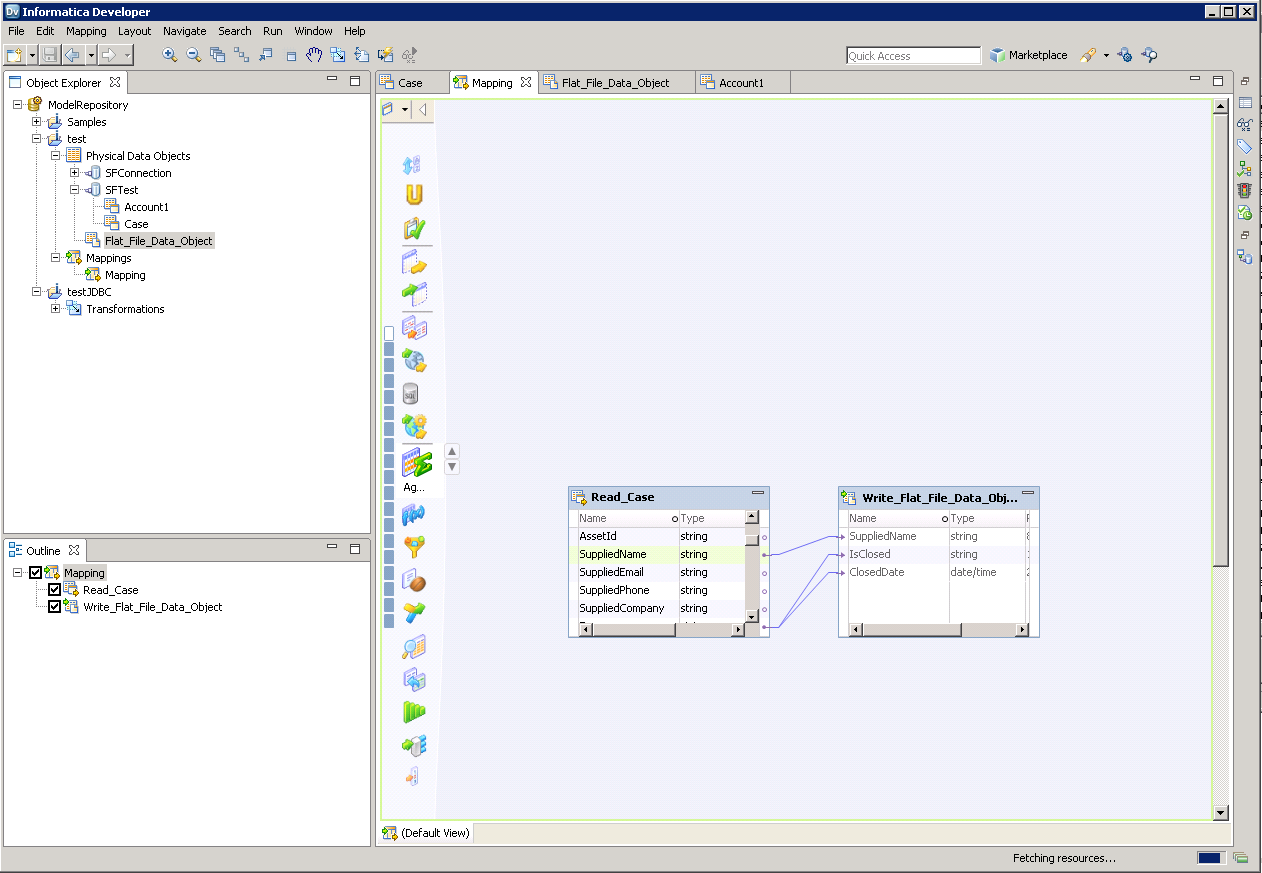

Follow the steps below to map Databricks columns to a flat file:

- In the Object Explorer, right-click your project and then click New -> Data Object.

- Select Flat File Data Object -> Create as Empty -> Fixed Width.

- In the properties for the Databricks object, select the rows you want, right-click, and then click Copy. Paste the rows into the flat file properties.

- Drag the flat file data object onto the mapping. In the dialog that appears, select the Write option.

- Click and drag to connect columns.
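A fixed-width flat file stores each column in a fixed number of characters on every line. The sketch below illustrates that layout only; it is not how PowerCenter writes the file, and left-aligning, padding with spaces, and truncating overlong values are assumptions made for illustration. The column widths and sample values in main are hypothetical.

```java
import java.util.List;

public class FixedWidth {

    // Left-aligns each value in its column: pads with spaces, truncates if too long.
    // A null value is written as an all-blank column.
    static String formatRow(List<String> values, int[] widths) {
        if (values.size() != widths.length) {
            throw new IllegalArgumentException("one width per column is required");
        }
        StringBuilder line = new StringBuilder();
        for (int i = 0; i < widths.length; i++) {
            String v = values.get(i) == null ? "" : values.get(i);
            if (v.length() > widths[i]) {
                v = v.substring(0, widths[i]);               // truncate overlong value
            }
            line.append(v).append(" ".repeat(widths[i] - v.length())); // pad to width
        }
        return line.toString();
    }

    public static void main(String[] args) {
        int[] widths = {6, 10}; // hypothetical widths for an Id and a Name column
        System.out.println("[" + formatRow(List.of("1001", "Acme"), widths) + "]");
    }
}
```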

To transfer Databricks data, right-click in the workspace and then click Run Mapping.

![The completed mapping. (Salesforce is shown.)]()