How to Import Databricks Data into SQL Server using SSIS

Easily back up Databricks data to SQL Server using the SSIS components for Databricks.

Keeping a copy of critical business data in SQL Server provides a safety net against loss. It also makes that data easier for business users to reach from SQL Server reporting and analytics tools.

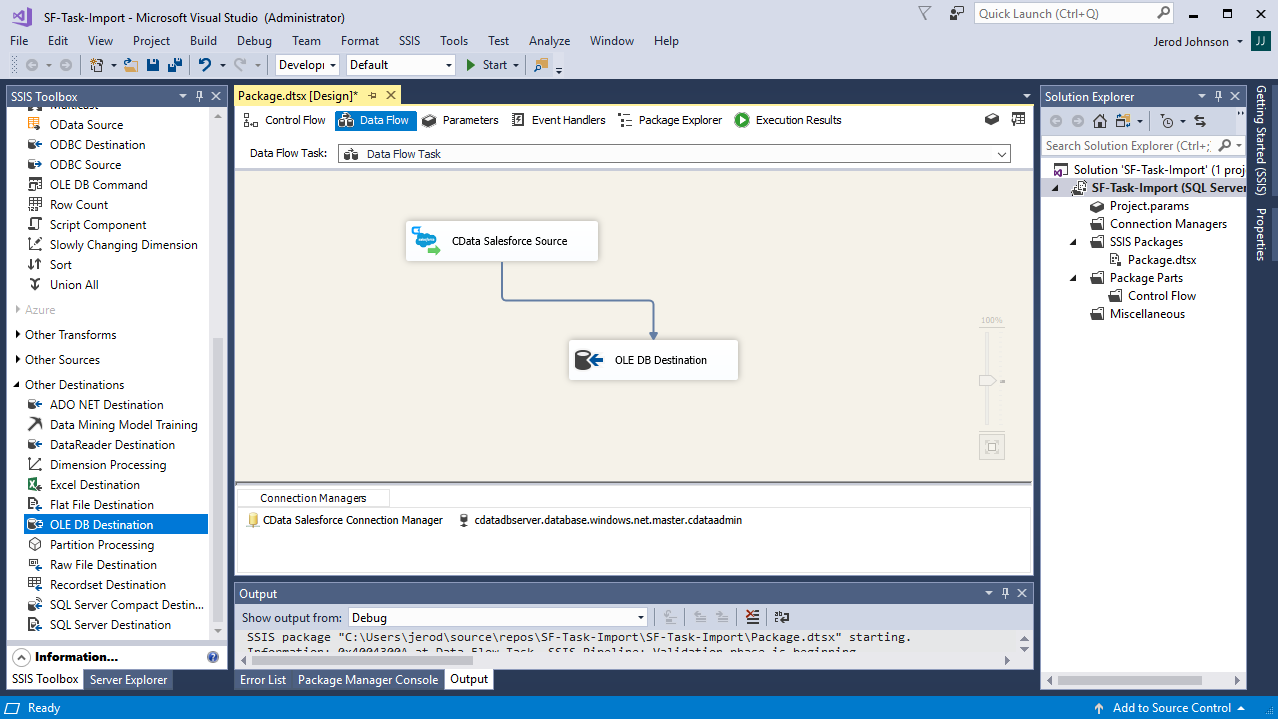

This example demonstrates how to use the CData SSIS Tasks for Databricks in a SQL Server SSIS workflow to transfer Databricks data into a Microsoft SQL Server database.

Add the Components

To get started, add a new Databricks source and a SQL Server ADO.NET destination to a new data flow task.

Create a New Connection Manager

Follow the steps below to save Databricks connection properties in a connection manager.

- In the Connection Manager window, right-click and then click New Connection. The Add SSIS Connection Manager dialog is displayed.

- In the Connection Manager type menu, select Databricks. The CData Databricks Connection Manager is displayed.

- Configure connection properties.

To connect to a Databricks cluster, set the properties as described below.

Note: To find the required values, navigate to Clusters in your Databricks instance, select the desired cluster, and open the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).
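The three properties above can also be thought of as a single semicolon-delimited connection string. The sketch below assumes that `Name=value;` layout and uses placeholder values. The helper name `build_connection_string` is hypothetical, and the exact string your connection manager accepts should be checked against the component documentation.

```python
def build_connection_string(server: str, http_path: str, token: str) -> str:
    """Join the three Databricks connection properties into one string.

    Assumes a semicolon-delimited "Name=value;" layout; this helper is
    illustrative and not part of the SSIS components.
    """
    props = {"Server": server, "HTTPPath": http_path, "Token": token}
    for name, value in props.items():
        if not value:
            raise ValueError(f"{name} must not be empty")
        if ";" in value:
            # A semicolon inside a value would be read as a property separator.
            raise ValueError(f"{name} must not contain ';'")
    return "".join(f"{name}={value};" for name, value in props.items())


# Placeholder values only; substitute the ones from the JDBC/ODBC tab.
print(build_connection_string("my-server-hostname", "my/http/path", "my-token"))
```

Keeping the token out of source control (for example, by reading it from an environment variable) is a sensible precaution whichever way the properties are supplied.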

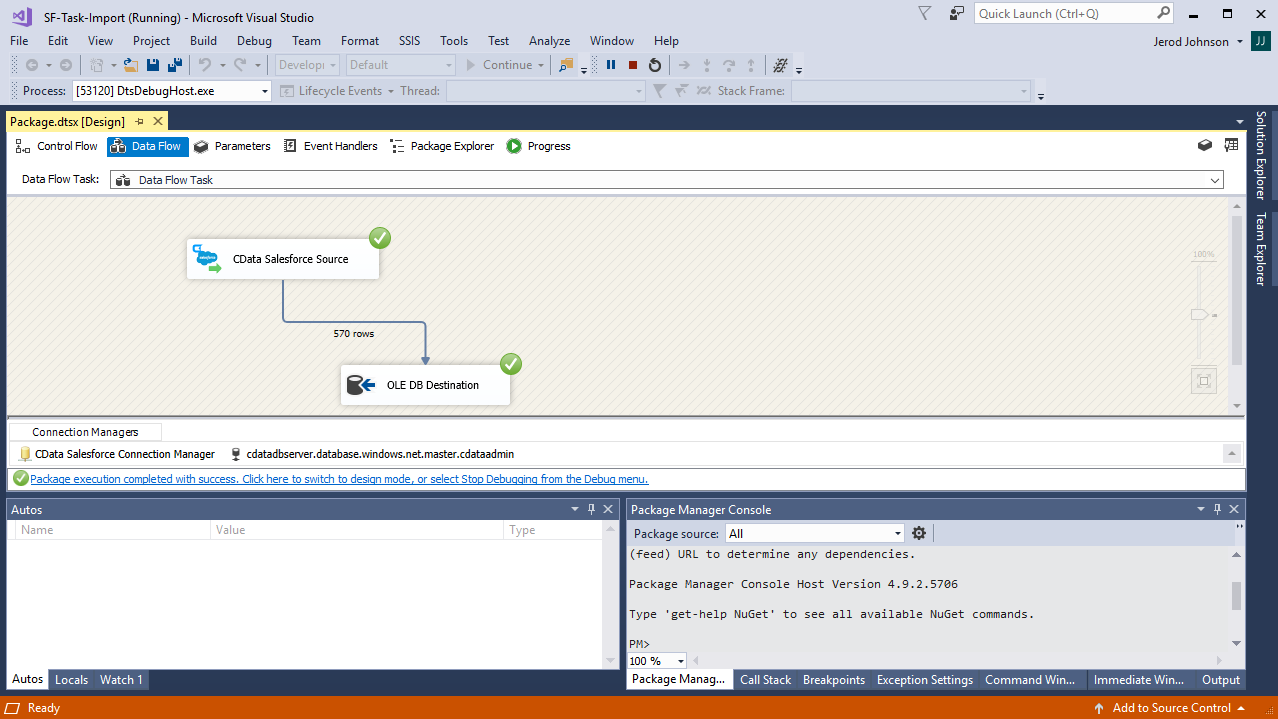

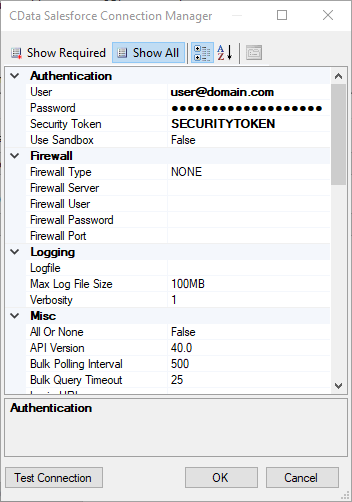

Configure the Databricks Source

Follow the steps below to specify the query to be used to extract Databricks data.

- Double-click the Databricks source to open the source component editor.

- In the Connection Manager menu, select the connection manager previously created.

- Specify the query to use for the data extraction. For example:

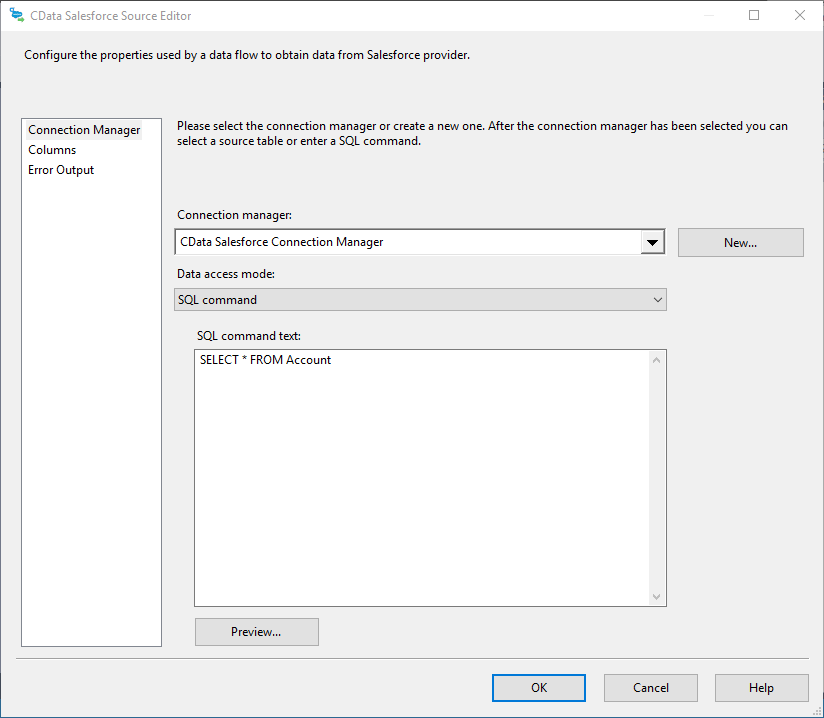

SELECT City, CompanyName FROM Customers WHERE Country = 'US'

- Close the Databricks Source control and connect it to the ADO.NET Destination.
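To see what the example query selects before wiring it into the data flow, you can try it against a small stand-in table. The sketch below uses Python's built-in `sqlite3` module with made-up rows. It does not connect to Databricks, and the `Customers` rows are hypothetical.

```python
import sqlite3

# The query from the source component editor, unchanged.
QUERY = "SELECT City, CompanyName FROM Customers WHERE Country = 'US'"


def run_example_query():
    """Run the example query against an in-memory stand-in for Customers."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE Customers (CompanyName TEXT, City TEXT, Country TEXT)"
        )
        # Hypothetical sample rows, for illustration only.
        conn.executemany(
            "INSERT INTO Customers VALUES (?, ?, ?)",
            [
                ("Acme Corp", "Raleigh", "US"),
                ("Nordwind GmbH", "Berlin", "DE"),
                ("Bolt Supply", "Austin", "US"),
            ],
        )
        return conn.execute(QUERY).fetchall()
    finally:
        conn.close()


rows = run_example_query()
for city, company in rows:
    print(city, company)
```

Only the two selected columns reach the destination, so the SQL Server table needs matching City and CompanyName columns for the mappings step.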

Configure the SQL Server Destination

Follow the steps below to specify the SQL Server table that will receive the Databricks data.

- Open the ADO.NET Destination and add a New Connection. Enter your server and database information here.

- In the Data access mode menu, select "table or view".

- In the Table Or View menu, select the table or view to populate.

- On the Mappings screen, adjust the column mappings as needed.

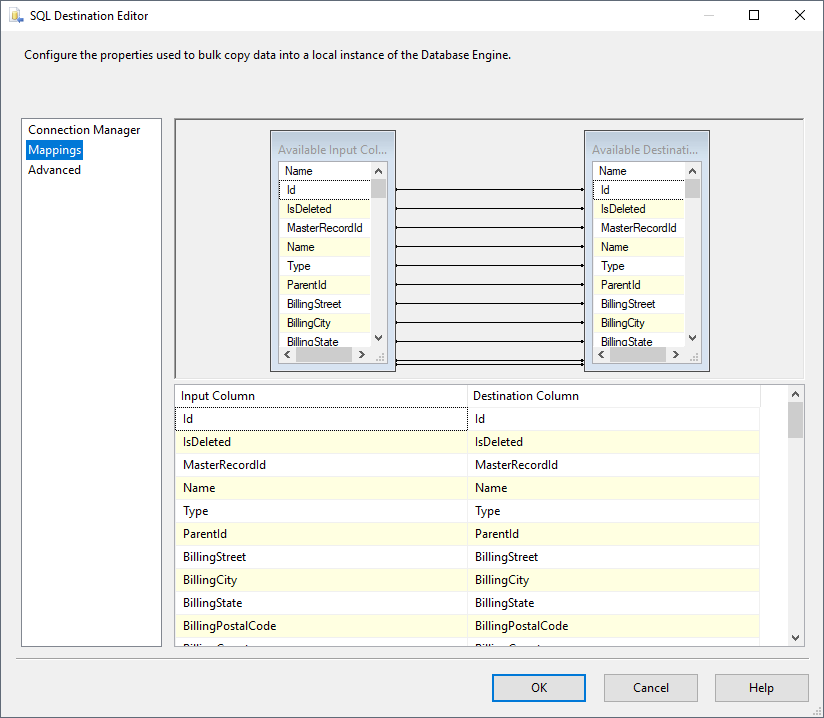

Run the Project

You can now run the project. After the SSIS Task has finished executing, your database will be populated with Databricks data.
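Once the package runs correctly in the designer, it can also be run unattended with the `dtexec` utility that ships with SQL Server. The sketch below is one way to wrap that call, assuming `dtexec` is on the PATH of a machine where the Databricks components are installed. The function names and the package path are hypothetical.

```python
import subprocess
from typing import List


def build_dtexec_command(package_path: str) -> List[str]:
    """Build the dtexec invocation for a package stored on the file system."""
    # /File tells dtexec to load the package from a .dtsx file.
    return ["dtexec", "/File", package_path]


def run_package(package_path: str) -> int:
    """Run the package and return dtexec's exit code."""
    completed = subprocess.run(build_dtexec_command(package_path), check=False)
    return completed.returncode


# Hypothetical path; point this at your own package file.
print(build_dtexec_command(r"C:\ssis\DatabricksToSqlServer.dtsx"))
```

An exit code of 0 from `dtexec` indicates that the package executed successfully, so a scheduler can use the return value of `run_package` to decide whether to alert.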