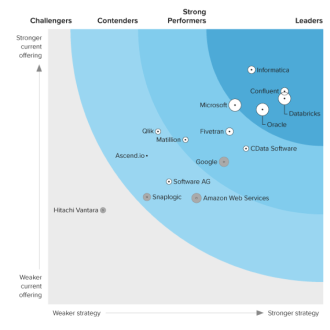

Discover how a bimodal integration strategy can address the major data management challenges facing your organization today.

Get the Report →Automated Continuous Databricks Replication to Amazon S3

Use CData Sync for automated, continuous, customizable Databricks replication to Amazon S3.

Always-on applications rely on automatic failover capabilities and real-time data access. CData Sync integrates live Databricks data into your Amazon S3 bucket, allowing you to consolidate your data in a single location for archiving, reporting, analytics, machine learning, artificial intelligence, and more.

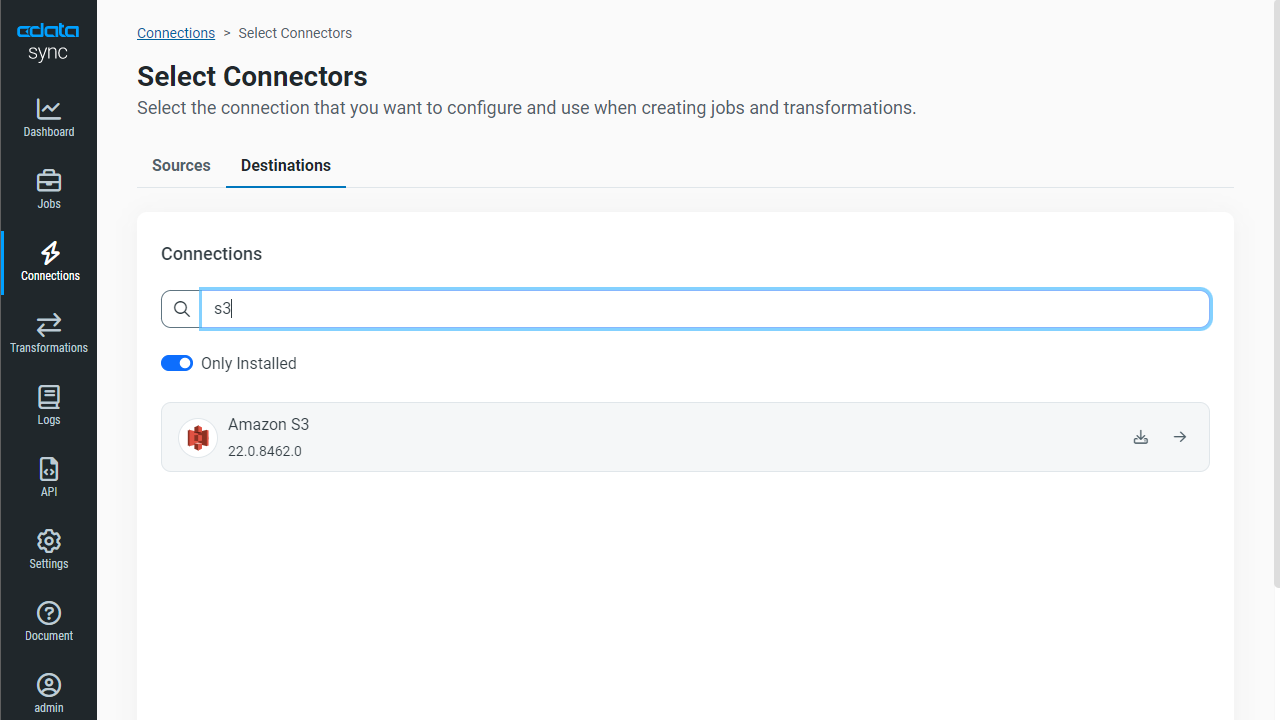

Configure Amazon S3 as a Replication Destination

Using CData Sync, you can replicate Databricks data to Amazon S3. To add a replication destination, navigate to the Connections tab.

- Click Add Connection.

- Select Amazon S3 as a destination.

![Configure a Destination connection to Amazon S3.]()

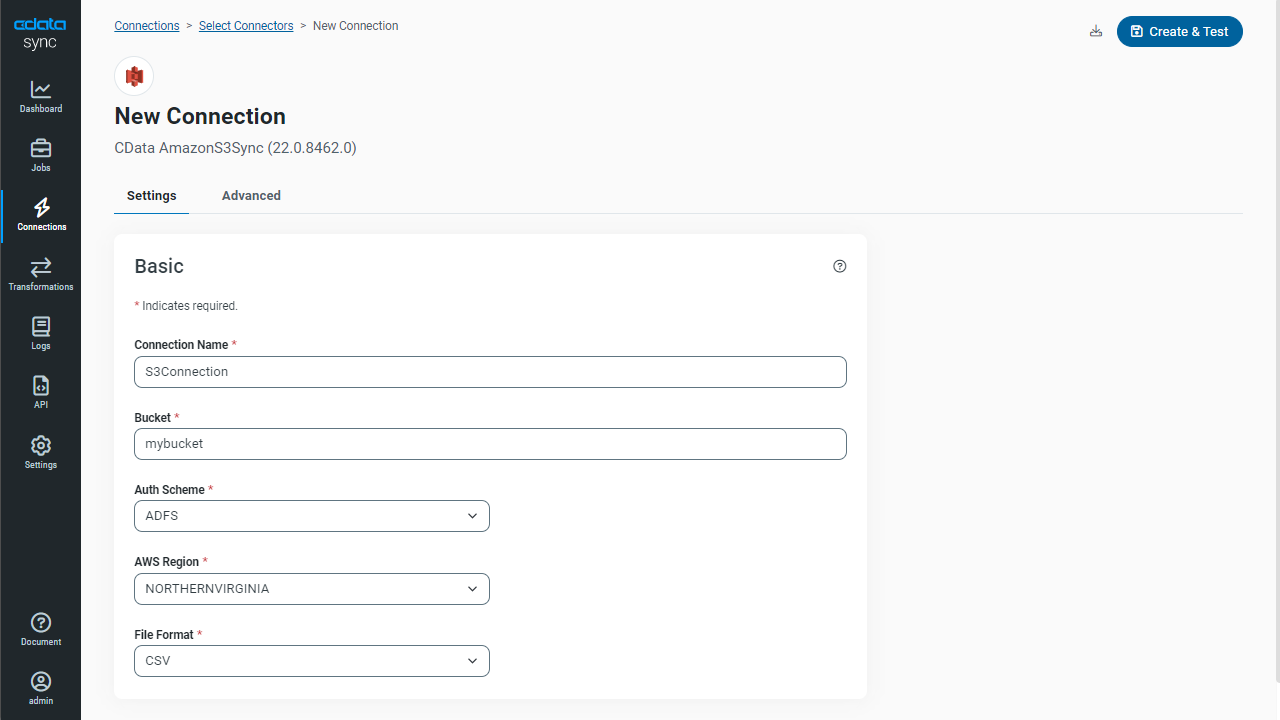

- Enter the necessary connection properties. To connect to Amazon S3, provide the credentials for an administrator account or for an IAM user with custom permissions:
  - AccessKey: Set to the access key ID.
  - SecretKey: Set to the secret access key.

Note: Although you can connect as the AWS account administrator, it is recommended that you use IAM user credentials to access AWS services.

To obtain the credentials for an IAM user, follow the steps below:

- Sign in to the IAM console.

- In the navigation pane, select Users.

- To create or manage the access keys for a user, select the user and then select the Security Credentials tab.

To obtain the credentials for your AWS root account, follow the steps below:

- Sign in to the AWS Management Console with the credentials for your root account.

- Select your account name or number and select My Security Credentials in the menu that is displayed.

- Click Continue to Security Credentials and expand the Access Keys section to manage or create root account access keys.

- Click Test Connection to ensure that the connection is configured properly.

![Configure a Destination connection.]()

- Click Save Changes.
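
The Note above recommends connecting with an IAM user instead of the root account. The Python snippet below is a minimal sketch that assumes you want to limit that user to a single bucket. It generates an IAM policy document that you could attach to the user. The bucket name `my-databricks-replica` is hypothetical. The action list is an assumption about what a replication destination typically needs, not a list published by CData, so confirm the required permissions against the CData Sync documentation.

```python
import json

# Hypothetical bucket name: replace it with the bucket CData Sync should write to.
BUCKET = "my-databricks-replica"


def s3_replication_policy(bucket: str) -> dict:
    """Build an IAM policy document scoped to a single S3 bucket.

    ASSUMPTION: the actions below (locate/list the bucket, read/write/delete
    objects) are a typical guess for a replication destination, not a
    permission list confirmed by CData.
    """
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Sid": "ListReplicationBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
                "Resource": [bucket_arn],
            },
            {
                # Object-level actions apply to every key inside the bucket.
                "Sid": "ReadWriteReplicationObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


if __name__ == "__main__":
    # Paste the printed JSON into the IAM console as a policy for the IAM user.
    print(json.dumps(s3_replication_policy(BUCKET), indent=2))
```

The bucket ARN and the `/*` object ARN are listed separately because IAM evaluates bucket-level and object-level S3 actions against different resources.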

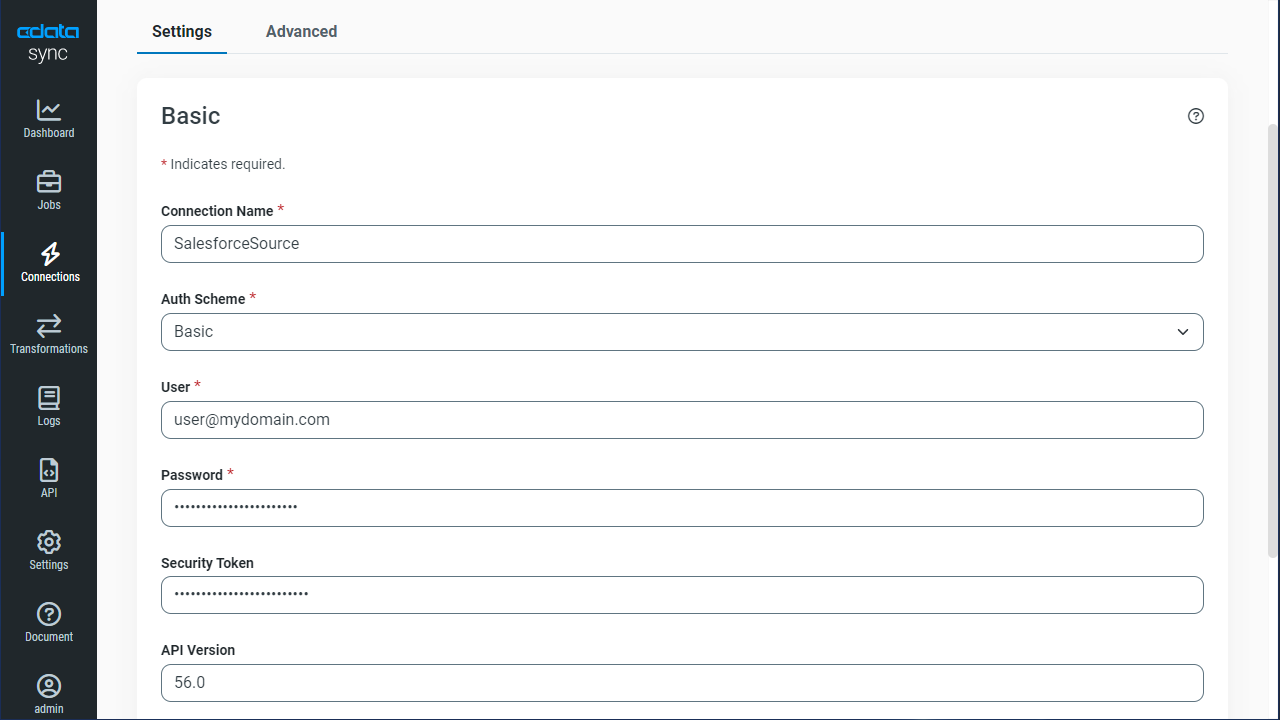

Configure the Databricks Connection

To add a connection to your Databricks account, navigate to the Connections tab.

- Click Add Connection.

- Select a source (Databricks).

- Configure the connection properties.

To connect to a Databricks cluster, set the properties as described below.

Note: You can find these values in your Databricks instance. Navigate to Clusters, select the desired cluster, and open the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).

![Configure a Source connection (Salesforce is shown).]()

- Click Connect to ensure that the connection is configured properly.

- Click Save Changes.
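
In CData Sync you enter Server, HTTPPath, and Token in the connection form, so no code is required. The helper below is a hypothetical sketch that shows how the three values fit together. It trims pasted whitespace and strips an `https://` prefix if the full workspace URL was copied instead of the Server Hostname. It also rejects empty values and values containing the `;` delimiter, then joins everything as `Name=Value;` pairs. That string format is an assumption modeled on CData-style connection strings. `databricks_connection_string` is not a CData API, and the example values are placeholders.

```python
from urllib.parse import urlparse


def databricks_connection_string(server: str, http_path: str, token: str) -> str:
    """Hypothetical helper: combine the three Databricks properties.

    ASSUMPTION: the semicolon-delimited Name=Value; format mirrors
    CData-style connection strings; CData Sync itself uses form fields.
    """
    server = server.strip()
    # If a full workspace URL was pasted, keep only the hostname.
    if "://" in server:
        server = urlparse(server).hostname or ""
    server = server.rstrip("/")
    http_path = http_path.strip()
    token = token.strip()

    for name, value in (("Server", server), ("HTTPPath", http_path), ("Token", token)):
        if not value:
            raise ValueError(f"{name} must not be empty")
        if ";" in value:
            # A semicolon would be read as the start of the next property.
            raise ValueError(f"{name} must not contain ';'")

    return f"Server={server};HTTPPath={http_path};Token={token};"


if __name__ == "__main__":
    # Placeholder values only; never commit a real personal access token.
    print(databricks_connection_string(
        "https://dbc-a1b2c3.cloud.databricks.com/",
        "sql/protocolv1/o/0/0000-000000-abc123",
        "dapiXXXX",
    ))
    # -> Server=dbc-a1b2c3.cloud.databricks.com;HTTPPath=sql/protocolv1/o/0/0000-000000-abc123;Token=dapiXXXX;
```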

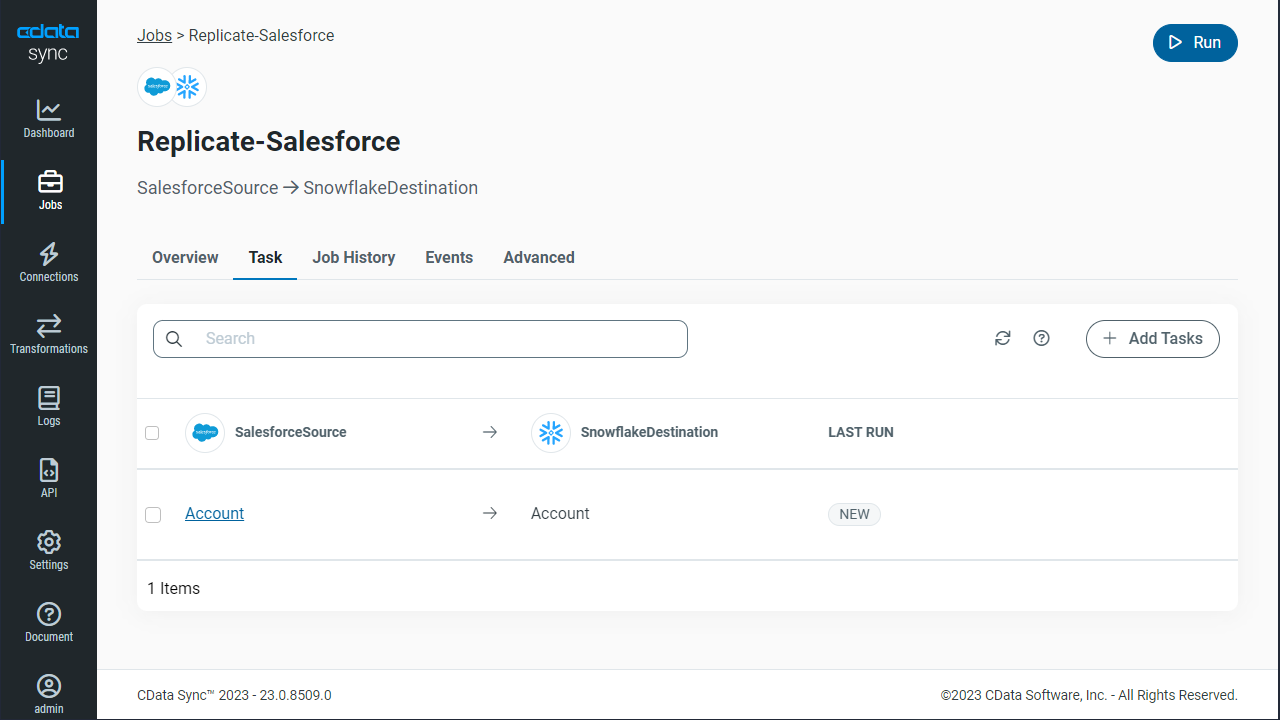

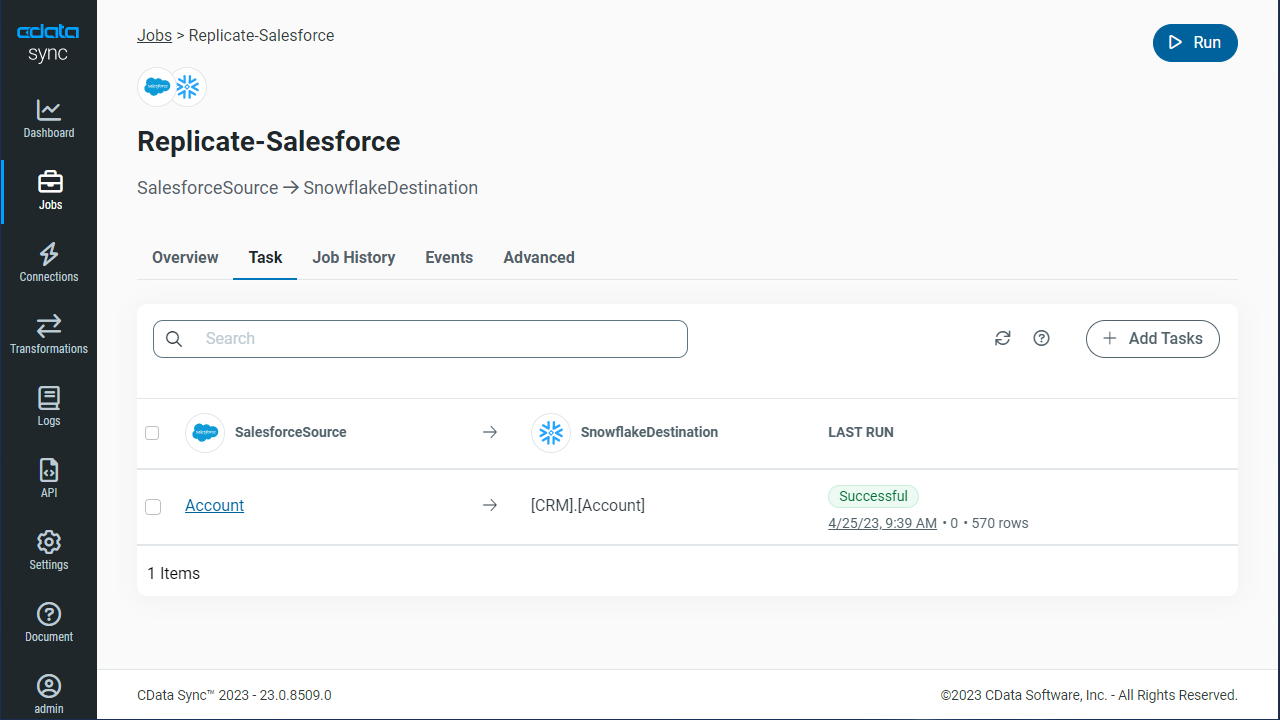

Configure Replication Queries

CData Sync enables you to control replication with a point-and-click interface and with SQL queries. For each replication you wish to configure, navigate to the Jobs tab and click Add Job. Select the Source and Destination for your replication.

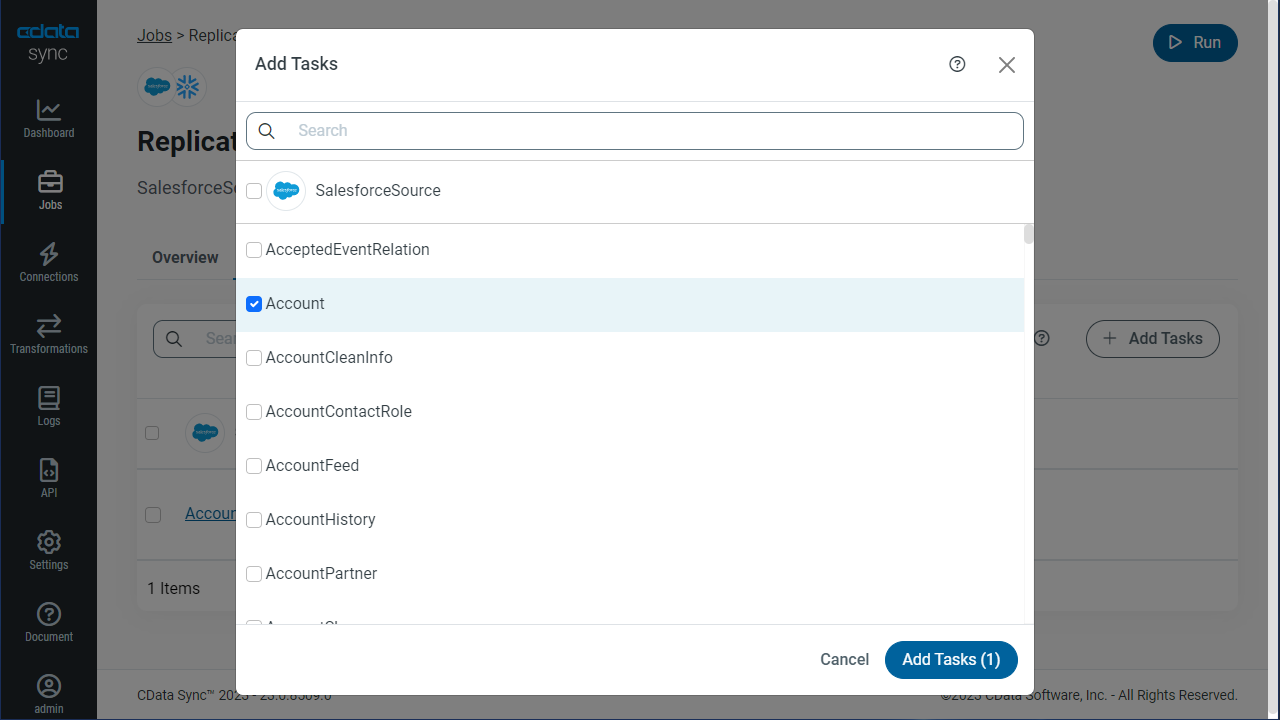

Replicate Entire Tables

To replicate an entire table, click Add Tables in the Tables section, choose the table(s) you wish to replicate, and click Add Selected Tables.

Customize Your Replication

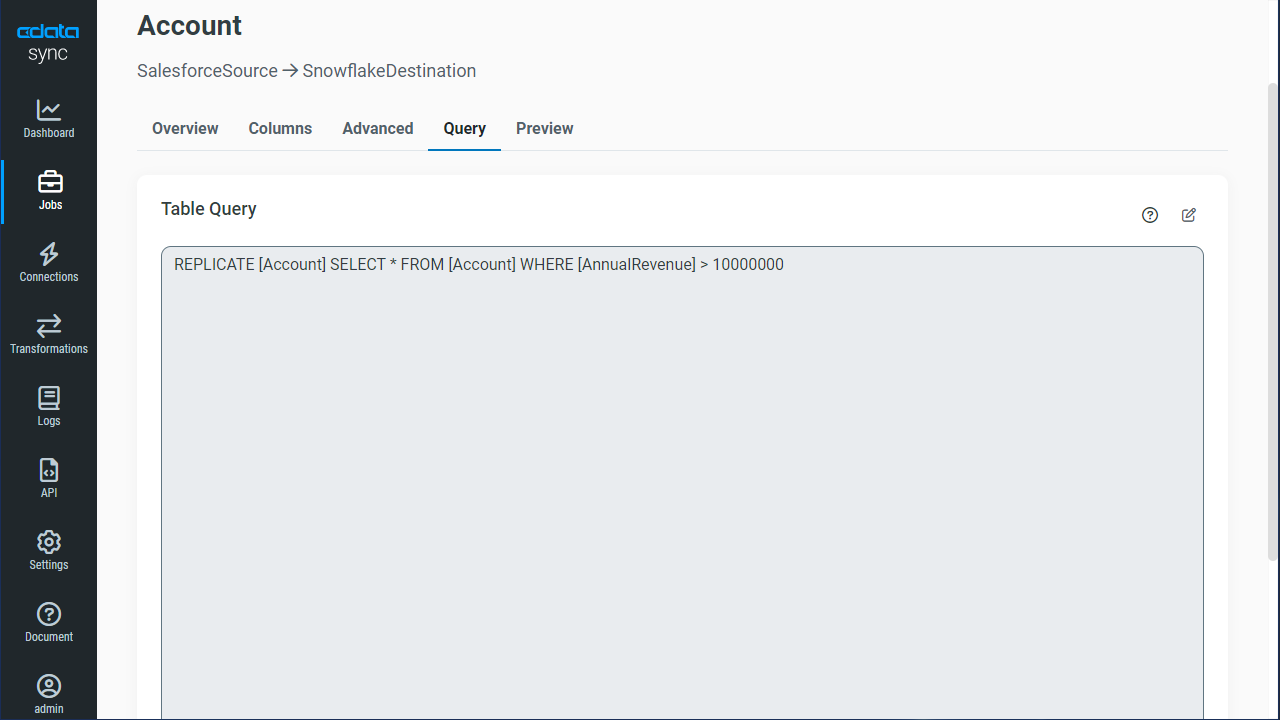

You can use the Columns and Query tabs of a task to customize your replication. The Columns tab allows you to specify which columns to replicate, rename the columns at the destination, and even perform operations on the source data before replicating. The Query tab allows you to add filters, grouping, and sorting to the replication.
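
The following sketch makes the Columns and Query options concrete by reproducing their effect on in-memory rows. It filters rows (like a WHERE clause), keeps and renames selected columns, and then sorts. It illustrates the concept only and does not show how CData Sync executes a task. `customize`, its parameters, and the sample rows are hypothetical, and grouping is omitted for brevity.

```python
from typing import Any, Callable, Dict, Iterable, List, Optional

Row = Dict[str, Any]


def customize(
    rows: Iterable[Row],
    columns: Dict[str, str],
    where: Callable[[Row], bool] = lambda row: True,
    order_by: Optional[str] = None,
) -> List[Row]:
    """Hypothetical illustration of a column/query customization.

    columns maps each source column to keep onto its destination name;
    where is evaluated against the original source row; order_by names a
    destination column.
    """
    # 1. Filter on source values (comparable to a WHERE clause).
    kept = [row for row in rows if where(row)]
    # 2. Keep only the selected columns and rename them for the destination.
    projected = [{dest: row[src] for src, dest in columns.items()} for row in kept]
    # 3. Optionally sort on a destination column (comparable to ORDER BY).
    if order_by is not None:
        projected.sort(key=lambda row: row[order_by])
    return projected


if __name__ == "__main__":
    accounts = [
        {"Id": 1, "Name": "Acme", "Region": "EU"},
        {"Id": 2, "Name": "Zeta", "Region": "US"},
        {"Id": 3, "Name": "Beta", "Region": "EU"},
    ]
    print(customize(
        accounts,
        columns={"Id": "account_id", "Name": "account_name"},
        where=lambda row: row["Region"] == "EU",
        order_by="account_name",
    ))
    # -> [{'account_id': 1, 'account_name': 'Acme'}, {'account_id': 3, 'account_name': 'Beta'}]
```

The filter runs before the rename, so conditions can reference any source column, including ones (like `Region` here) that are not replicated.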

Schedule Your Replication

In the Schedule section, you can configure a job to run automatically at intervals ranging from once every 10 minutes to once a month.
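
A fixed-interval schedule comes down to simple date arithmetic. The hypothetical `next_runs` helper below lists upcoming run times for a fixed interval and rejects intervals shorter than the 10-minute minimum mentioned above. It is not CData Sync's scheduler. Monthly schedules are calendar-based (months differ in length), so this sketch deliberately leaves them out.

```python
from datetime import datetime, timedelta
from typing import List

# Shortest interval described for CData Sync schedules.
MIN_INTERVAL = timedelta(minutes=10)


def next_runs(start: datetime, interval: timedelta, count: int) -> List[datetime]:
    """Return the next `count` run times after `start` for a fixed interval.

    Hypothetical helper: models only fixed-length intervals, not
    calendar-based schedules such as "once every month".
    """
    if interval < MIN_INTERVAL:
        raise ValueError("interval must be at least 10 minutes")
    if count < 0:
        raise ValueError("count must be non-negative")
    return [start + interval * i for i in range(1, count + 1)]


if __name__ == "__main__":
    for run in next_runs(datetime(2024, 1, 1, 9, 0), timedelta(minutes=15), 3):
        print(run.isoformat())
    # -> 2024-01-01T09:15:00, 2024-01-01T09:30:00, 2024-01-01T09:45:00 (one per line)
```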

Once you have configured the replication job, click Save Changes. You can configure any number of jobs to manage the replication of your Databricks data to Amazon S3.